Watson beats humans in Jeopardy! dry run

'They say it got smart, a new order of intelligence'

If you were betting on humanity in its upcoming grand challenge clash with IBM's Watson question-answer (QA) supercomputer, you might want to start reconsidering your wager.

As El Reg previously reported, IBM's Watson QA box took the same Jeopardy! entrance exam a month ago and qualified to take on past champions of the popular game show in a throwdown pitting man – well, actually two men – against machine.

Ken Jennings, who has held the top rank in the game the longest (with 74 wins), and Brad Rutter, who has racked up the most cash ($3.25m) on the show during his reign earlier in the decade, are representing humanity.

IBM is representing its own business interests, of course, which include doing away with analysts of all sorts and replacing them with supercomputers with no freaking sense of humor whatsoever. If Watson QA systems become commercialized, as they most certainly will, how much of a change this will actually mean in the world remains to be seen. (We may not notice.) I personally cannot wait until there is a Watson voice-activated interface for Google, Bing, and Yahoo! that makes a Webby search engine screen and trolling through a zillion page-links looking for an answer utterly obsolete. As a matter of fact, a properly programmed Watson QA system could even replace Wikipedia and maybe Wikileaks, too, if we teach it to hack into government systems. But I digress. (It is Friday, after all.)

IBM Watson: The avatar face of SkyNet

The Watson QA system is designed to process (I hesitate to use the word understand) puns, slang, and double entendres, all of which are necessary to play the Jeopardy! game. In that game, host Alex Trebek makes a statement with some clever phrasing and players have to formulate a question for which that statement is the answer. (Jeopardy! was created by Merv Griffin in the wake of the game show scandals of the 1950s, in which contestants on popular quiz shows were given answers to questions ahead of the broadcast so they could cheat, look smart, and boost show ratings.)

In a dry run that took place at a clone of the Jeopardy! game show set up at IBM's TJ Watson Research Center, humanity didn't do so well. (What did you expect?) In the practice round, Watson won $4,400 by stating questions, while Jennings won $3,400 and Rutter won only $1,200.

While humanity is ganging up two against one on Watson to play the game, the match-up is not exactly fair. Watson doesn't get rattled. Perhaps IBM should invent artificial electronic glands that cause Watson to overheat or shut down memory access for a microsecond or two. Or better still, give the Watson QA system a grouchy mother-in-law so it can start second-guessing all of its answers.

The dry run was clearly designed to give Jennings and Rutter a chance to play against Watson ahead of the Jeopardy! smackdown, which will run from 14 to 16 February. One of the things that freaked out chess champion Garry Kasparov when he initially played IBM's Deep Blue supercomputer more than a decade ago was that he couldn't "feel" the way the machine was thinking. While IBM wants Watson to win the Jeopardy! challenge, it wants a fair fight and that means giving Jennings and Rutter, who do have glands and who will get flustered if Watson starts crushing them on TV in front of millions of people, a feel for the competitor they are going up against. Watson sparred with 50 Jeopardy! champions back in the fall of 2010 to fine-tune its game, and giving the humans a chance to warm up seems only fair.

10 racks versus four lobes

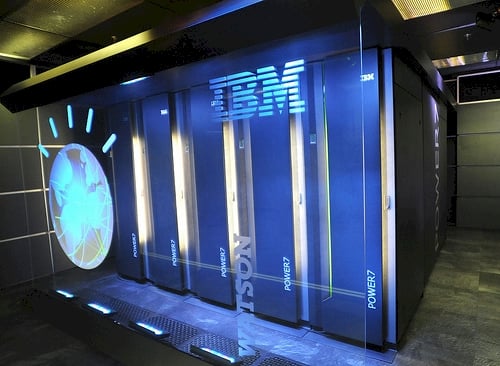

IBM this week also cleared up some confusion regarding the server iron upon which the Watson QA system is based. The prototype machine that IBM Research talked about back in April 2009 was based on a BlueGene/P parallel supercomputer. But the production machine that will play against humanity is going to be based on a cluster of Power7-based Power 750 midrange servers – thereby turning the game show grand challenge into a big commercial for IBM's latest Power Systems servers.

Specifically, the Watson QA software is running on 10 racks of these machines, which have a total of 2,880 Power7 cores and 15 TB of main memory spread across this system. The Watson QA system is not linked to any external data sources, but has a database of around 200 million pages of "natural language content," which IBM says is roughly equivalent to the data stored in 1 million books. This data is stored in the main memory of the Watson machine, and the secret sauce is the software that allows Watson to listen to the statement, rummage around through its in-memory database, come up with the probable answer, hit the buzzer, and speak the question the statement answers. All in under three seconds. The Power 750 cluster is rated at 80 teraflops, which is a healthy amount of number-crunching power, but not outrageous by modern multi-petaflops standards.
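For a rough sense of scale, those figures can be divided out. What follows is a back-of-the-envelope sketch only: it uses nothing but the numbers quoted above, and it assumes decimal units (1 TB = 1,000 GB) and an even spread of cores and memory across the racks, neither of which IBM has spelled out. The variable names are ours, not IBM's.

```python
# Back-of-the-envelope arithmetic on the Watson figures quoted in the article.
# Assumptions (not confirmed by IBM): decimal units and an even distribution
# of cores, memory, and flops across the cluster.

CORES = 2_880            # total Power7 cores
RACKS = 10               # racks of Power 750 servers
MEMORY_TB = 15           # total main memory
PEAK_TFLOPS = 80         # rated cluster performance
PAGES = 200_000_000      # pages of "natural language content"
BOOKS = 1_000_000        # IBM's rough book equivalent

cores_per_rack = CORES // RACKS                   # whole cores per rack
mem_per_core_gb = MEMORY_TB * 1_000 / CORES       # GB of memory per core
gflops_per_core = PEAK_TFLOPS * 1_000 / CORES     # gigaflops per core
pages_per_book = PAGES // BOOKS                   # implied average book length

print(f"Cores per rack:   {cores_per_rack}")
print(f"Memory per core:  {mem_per_core_gb:.2f} GB")
print(f"Gigaflops/core:   {gflops_per_core:.1f}")
print(f"Pages per 'book': {pages_per_book}")
```

In other words, each core gets a little over 5 GB of memory to rummage through, and IBM's "book" works out to a slim 200 pages.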

To give Watson a face, IBM came up with an avatar that shows the earth and the graphical representation of IBM founder Thomas Watson's "Think" admonition, which is the hash marks that denote a lightbulb going off. (The Watson super is named after Big Blue's founder, and it is no accident that the Jeopardy! Grand Challenge is taking place during the company's centennial year.) As Watson is thinking, the satellite lines on the avatar – what IBM calls "thought rays" – move faster, and as the system grows more confident of its answer, these lines turn green. The thought rays turn orange when Watson is wrong.

I wouldn't bet on seeing a lot of orange thought rays in February. ®