
Cloud box does virtualization sans SAN

Practice safe SOCS

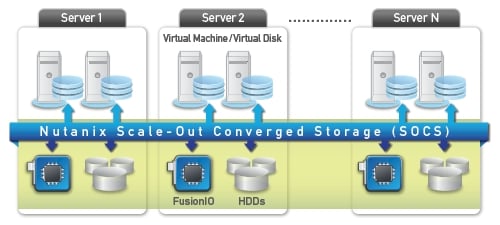

Cloud-computing appliance maker Nutanix is tackling a problem that has dogged the deployment of virtual servers and desktops: all the key hypervisors require storage area networks and centralized storage.

To overcome this limitation, Nutanix has created a virtualized controller that implements a clustered file system and embeds it in a cluster-compute appliance – the compute nodes essentially become a virtual SAN.

The beauty of the Nutanix Complete Cluster, says company cofounder and CEO Dheeraj Pandey, is that the server virtualization hypervisors that run atop the appliances still think they are talking to a SAN – all the nifty high-availability, snapshotting, and live-migration features baked into these hypervisors and their management consoles continue to work just as they did before.

By banning the SAN, says Pandey, Nutanix can simplify the rollout of compute clouds at large enterprises that are familiar with complexity and high cost, and therefore have been happy – however begrudgingly – to invest in SANs. In addition, it can make cloudy infrastructure more affordable for small and medium businesses that want high-end virtualization features that used to require SANs.

Nutanix was founded in 2009 by file-system experts from Google and cluster experts from Oracle. Pandey managed the early incarnations of the Exadata product and also created the storage engine for Oracle's database. More recently, he was vice president of engineering at Aster Data Systems, which was acquired by Teradata in March of this year for $263m so the data warehouser could get its mitts on the company's nCluster hybrid row-column database and SQL-MapReduce big-data chewer.

Nutanix cofounder Mohit Aron also hails from Aster Data – he was the chief architect at the firm and did a lot of the work on nCluster. Prior to his stint at Aster, he was at Google, leading the design and development of the Google File System, the original incarnation of Google's distributed file system that supported its MapReduce big-data crunching techniques.

Pandey and Aron were joined by Ajeet Singh as the third cofounder and the company's chief products officer. Also from Aster Data, where he was director of product management, Singh had previously been part of Oracle's early cloud computing efforts. The three cofounders got their seed funding from private investors in May 2010, and pulled in $13.2m in Series A funding in April 2011 from Lightspeed Venture Partners and Blumberg Capital.

How to fool an unsuspecting server

The Nutanix Complete Cluster begins with the basic building block in today's data center: a two-socket x64 server. Nutanix currently sources two-socket tray servers from Dell and Super Micro, which cram four nodes into a 2U rack-mounted chassis – they plan to source machines from HP once it delivers on-board 10 Gigabit Ethernet ports.

Pandey says that the company only supports its software stack on select hardware configurations because Nutanix has to do a lot of tuning on the server, flash storage, and disk storage that goes into its appliances – customers can't just run the Nutanix stack on whatever servers they have lying around their data center.

Each server node in the cluster is configured with two six-core Xeon 5600 processors, with eight cores allocated to run hypervisors and virtual machines, and four cores allocated to run the Nutanix virtual storage controller, called Scale-Out Converged Storage – SOCS for short. This controller virtualizes a pool of Intel and Fusion-io solid state disks and Seagate SATA drives, and presents virtual machines with block and file I/O access to data spread across these disks inside the cluster.

"The architecture is hypervisor agnostic," explains Pandey, with VMware's ESXi 4.1 hypervisor being the first one to get support on the cloud appliance. "ESX thinks it is talking to SAN storage and it is not."

The Nutanix Complete Cluster can fool multiple servers into thinking they're connected to a SAN

The storage cluster implemented by SOCS on the compute nodes uses 10GE ports to link the nodes to each other, and has Gigabit Ethernet links to provide access to VMs and their workloads. The 10GE link is necessary to make use of ESXi hypervisor features such as live migration, high availability, fault tolerance, and distributed resource management, which create a lot of chatter on the network. On a SAN, you can just flip some pointers to make a VM's file point to a different physical server during a live migration, but on a Nutanix appliance, you need to move data.

Stirring up some secret HOT sauce

The SOCS storage software includes Cluster RAID, which stripes data across disk drives within a server node for high performance. What Nutanix calls Heat-Optimized Tiering cache, or HOTcache, caches data in each cluster node on a local SSD and also puts a copy on a different node in the cluster as a backup. SOCS also includes a distributed metadata service, called Medusa, that spreads the metadata around to multiple nodes for performance and fault tolerance reasons. "The secret sauce in all of this is the metadata, and it is globally addressable," says Pandey.
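As a rough illustration of the HOTcache idea described above (a write lands on the local node's SSD and a copy goes to a different node), here is a minimal Python sketch. It is not Nutanix code: the `Cluster` class, the "next node in the list" peer choice, and the `read` fallback are all assumptions made for the example.

```python
class Cluster:
    """Toy model: each node has an SSD cache, modeled as a dict (hypothetical)."""

    def __init__(self, node_names):
        self.names = list(node_names)
        if len(self.names) < 2:
            raise ValueError("need at least two nodes to keep a backup copy")
        self.ssd = {name: {} for name in self.names}

    def write(self, local, key, data):
        # Cache on the local node's SSD, then copy to a different node.
        # Picking the next node in the list is an assumption for this sketch.
        i = self.names.index(local)
        peer = self.names[(i + 1) % len(self.names)]
        self.ssd[local][key] = data
        self.ssd[peer][key] = data
        return peer

    def read(self, key, failed=()):
        # Serve the data from any surviving node that holds a copy.
        for name in self.names:
            if name not in failed and key in self.ssd[name]:
                return self.ssd[name][key]
        raise KeyError(key)


cluster = Cluster(["node1", "node2", "node3"])
backup_node = cluster.write("node1", "vm7-block42", b"payload")
survivor_copy = cluster.read("vm7-block42", failed={"node1"})
```

In this toy model, losing `node1` still leaves the block readable from `node2`, which is the point of keeping the second copy on a different node.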

The Nutanix Complete Cluster appliance, complete with massive logo

SOCS sports a distributed data maintenance service called Curator that uses MapReduce techniques to figure out what bits of data are being used by what VM when and where, and automatically migrates the coldest data to disks and the hottest data to the Fusion-io and Intel SSDs.
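The hot-and-cold placement that Curator performs can be pictured with a small sketch. This is only an illustration under stated assumptions, not Nutanix's algorithm: the function name `plan_tiers`, the notion of a fixed number of SSD slots, and the use of raw access counts as "heat" are all hypothetical. The counting step is the kind of per-key tally a MapReduce job would produce.

```python
from collections import Counter


def plan_tiers(access_log, ssd_slots):
    """Split extents into (ssd, disk) lists, hottest extents first.

    access_log: iterable of extent IDs, one entry per access (hypothetical).
    ssd_slots:  how many extents fit on flash (hypothetical capacity model).
    """
    heat = Counter(access_log)  # accesses per extent
    # Most-accessed first; ties broken by extent ID so the result is stable.
    ranked = sorted(heat, key=lambda extent: (-heat[extent], extent))
    return ranked[:ssd_slots], ranked[ssd_slots:]


log = ["a", "b", "a", "c", "a", "b", "d"]
ssd, disk = plan_tiers(log, ssd_slots=2)
```

Here extents `a` and `b` are accessed most often and land on flash, while `c` and `d` go to disk. A real system would also weigh recency and which VM touched the data, as the description of Curator suggests.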

Curator also rebalances data when nodes are added to the Nutanix cluster and moves data along with a VM when it is live-migrated, thus keeping data used by a VM as close to it as possible. SOCS includes snapshotting features like a real SAN, plus filers (called QuickClone) as well as thin provisioning and converged backup, which makes backups of files onto the file system and allows them to be pushed out to external online backup services.

Name your hypervisor – eventually

Each Nutanix cloud server node has two Xeon 5600 processors, one 320GB Fusion-io flash disk for metadata and data that's plugged into a PCI Express 2.0 peripheral slot, one 300GB Intel SSD housing system software that's slid into a SATA disk bay, and five 1TB Seagate 2.5-inch SATA drives for customer data.

Each node comes with a base 48GB of DDR3 main memory, which can be expanded to 192GB as workloads dictate. At the moment, early customers are using 10GE switches from Arista Networks and Super Micro to link Nutanix appliances together, but any 10GE switch should work.

Exploded view of a Nutanix cloud appliance

A four-node block with eight processors and 48 cores that can be allocated to VMs, 192GB of memory (expandable to 768GB), 1.25TB of user-accessible SSD storage, and 20TB of disk capacity costs $115,000. Too much? Well, there's also a starter kit with three server nodes, which has a slightly discounted price of $75,000.

If you need more oomph, a full rack of Nutanix appliances – 18 blocks and 72 server nodes totaling 576 cores, 3.4TB of main memory, 18TB of SSD capacity, and 360TB of disk capacity – will run you just over $2m.
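The block and rack figures can be checked against the per-node specs given earlier in the article. The sketch below simply multiplies them out; the `Node` dataclass and `totals` helper are made up for the example, and only the numbers come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    sockets: int = 2           # two Xeon 5600 processors
    cores_per_socket: int = 6  # six-core parts
    vm_cores: int = 8          # cores for hypervisors and VMs
    socs_cores: int = 4        # cores for the SOCS storage controller
    memory_gb: int = 48        # base DDR3 memory
    sata_drives: int = 5       # 1TB Seagate SATA drives
    sata_tb_each: int = 1


def totals(node, nodes):
    """Aggregate per-node figures over a number of nodes."""
    return {
        "total_cores": nodes * node.sockets * node.cores_per_socket,
        "vm_cores": nodes * node.vm_cores,
        "memory_gb": nodes * node.memory_gb,
        "disk_tb": nodes * node.sata_drives * node.sata_tb_each,
    }


block = totals(Node(), nodes=4)   # one 2U block
rack = totals(Node(), nodes=72)   # 18 blocks
```

Worked through, a block comes to 48 cores in total (32 of them on the VM side of the eight-and-four split), 192GB of memory, and 20TB of disk. A rack comes to 576 VM cores, 3,456GB (about 3.4TB) of memory, and 360TB of disk. The 48-core block figure therefore appears to count every core, while the 576-core rack figure matches the eight cores per node set aside for VMs.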

That may sound a bit pricey, but Pandey points out that compared to a rack of servers and external SANs, this setup costs 40 to 60 per cent less and delivers somewhere around ten times the bang for the buck because of the flash tiering and other SOCS goodies.

The Nutanix Complete Cluster is available now. Pandey says that the company will listen to customers about whether it should next support Microsoft's Hyper-V or Red Hat's KVM hypervisor, but Nutanix will eventually support both, as well as ESXi from VMware. Xen will also no doubt eventually be supported – if customers ask for it. ®