AMD previews Piledriver, Ivy Bridge SeaMicro microservers

Stretches Freedom interconnect fabric out to storage

SeaMicro is no longer an independent company, but you would not have guessed that if you were dropped in from outer space to attend the launch of the new SM15000 microserver in San Francisco on Monday afternoon.

Advanced Micro Devices may own SeaMicro, but the company went out of its way to support the latest "Ivy Bridge" Xeon E3-1200 v2 processor from rival Intel as well as its own forthcoming "Piledriver" Opteron processor as new compute nodes in a new SeaMicro chassis.

The other bit of news is that the "Freedom" 3D mesh/torus interconnect has been extended outside of the server node chassis and can now link up to 5PB of disk capacity over that interconnect to the server nodes inside the chassis, creating in effect what is a virtual storage area network for those microservers.

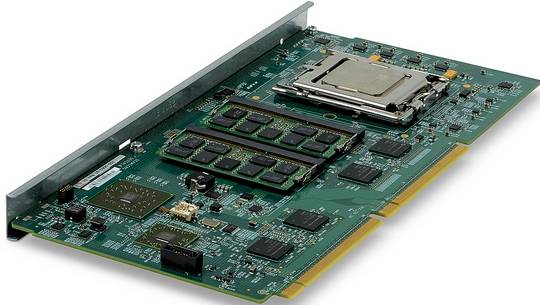

The new Opteron-based server card was not exactly a surprise, given that AMD CTO Mark Papermaster was showing it off at the Hot Chips 24 conference two weeks ago. But today we know a bit more about the feeds and speeds of the forthcoming Opteron server node for the new SM15000 chassis.

The new SeaMicro card will sport either an Opteron 3300, which only works in single-socket machines, or an Opteron 4300, which can be used in machines with one or two sockets.

Andrew Feldman, general manager of AMD's Data Center Solutions Group and one of the co-founders of SeaMicro, said that the Piledriver processor used in the new server nodes would have eight cores and would come in three speeds: 2GHz, 2.3GHz, and 2.8GHz. The Piledriver node can support up to 64GB of main memory, a perfectly reasonable 8GB per core, suitable for virtualization and other heavy workloads where you have reasonably brawny cores. Like prior SM10000 machines, the SM15000 has room for 64 server modules, which hook into the SeaMicro midplane through PCI-Express links.

In a 10U chassis, you will be able to cram in 512 cores and 4TB of memory; that's 2,048 cores and 16TB of memory in a rack, and this is only possible (as El Reg has explained many times in the past) because SeaMicro engineers have wrung every last unnecessary joule of energy out of the system, leaving more thermal room for processors.
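For anyone who wants to check the density math, here is a minimal sketch in Python. The node count, core count, and memory ceiling come from the figures above; the four-chassis-per-rack value is an assumption inferred from the rack totals (2,048 / 512), not something AMD stated.

```python
# Density arithmetic for the Piledriver configuration of the SM15000.
NODES_PER_CHASSIS = 64   # server modules per 10U chassis
CORES_PER_NODE = 8       # eight-core Piledriver Opteron
MEM_GB_PER_NODE = 64     # maximum main memory per node
CHASSIS_PER_RACK = 4     # assumption: four 10U chassis fit in one rack

cores_per_chassis = NODES_PER_CHASSIS * CORES_PER_NODE
mem_tb_per_chassis = NODES_PER_CHASSIS * MEM_GB_PER_NODE / 1024

cores_per_rack = cores_per_chassis * CHASSIS_PER_RACK
mem_tb_per_rack = mem_tb_per_chassis * CHASSIS_PER_RACK

print(f"Per chassis: {cores_per_chassis} cores, {mem_tb_per_chassis:.0f}TB")
print(f"Per rack:    {cores_per_rack} cores, {mem_tb_per_rack:.0f}TB")
```

The memory-per-core figure falls out the same way: 64GB divided by eight cores is 8GB apiece.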

Piledriver Opteron node for the SM15000

You don't have to buy Opteron processors if you want to use the SeaMicro machines just because AMD paid $334m to buy the company earlier this year. SeaMicro started out two years ago with several generations of server cards that had relatively wimpy Atom processors, first 32-bit ones and then 64-bit ones, eventually cramming 768 cores into a chassis.

In January of this year, SeaMicro announced the second generation of its Freedom fabric interconnect as well as its support for Intel's "Sandy Bridge" Xeon E3-1200 v1 processors, including optimizations that really bring the power consumption down on those Xeons by actively shutting down parts of the chip when they are not needed, in ways that Intel's own chipsets cannot do. These Atom and initial Xeon compute nodes are still available for their respective chassis, and you can mix and match different types of compute nodes in a single system (you can bring nodes forward, but you can't go backwards with new boards to older enclosures).

Starting in November, when AMD ships its Piledriver-based compute node for the SM15000 chassis, the company will also roll out a new compute node based on the latest "Ivy Bridge" Xeon E3-1200 v2 processor, which has slightly higher performance and slightly lower power draw than the original Xeon E3 chips.

As in the past, SeaMicro is sticking to the low-volt, low-wattage part, in this case the Xeon E3-1265L v2, which clocks at 2.5GHz. This is only a quad-core part, so that means you can only get 256 of these cores into the same 10U chassis. Intel caps the main memory on all of the Xeon E3 chips at 32GB, so that is the limit that AMD has to work with on this card. The net-net is that in its own microserver chassis, AMD will be able to cram twice as many cores and twice as much main memory driving those cores into the same 10U enclosure.

An Ivy Bridge Xeon E3 server node for the SM15000

"That's their limit, not ours," Feldman said at the announcement, just to be clear. "We wish that it had more."
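The two-to-one gap between the Opteron and Xeon nodes can be worked out from the per-node figures quoted above. This is only a rough sketch; the `Node` class and the `chassis_totals` helper are illustrative names invented here, not anything from SeaMicro.

```python
from dataclasses import dataclass

NODES_PER_CHASSIS = 64  # server modules in one SM15000

@dataclass(frozen=True)
class Node:
    name: str
    cores: int    # cores per node
    mem_gb: int   # maximum main memory per node, in GB

def chassis_totals(node: Node) -> tuple[int, int]:
    """Return (total cores, total memory in GB) for a fully loaded chassis."""
    return node.cores * NODES_PER_CHASSIS, node.mem_gb * NODES_PER_CHASSIS

opteron = Node("Piledriver Opteron", cores=8, mem_gb=64)
xeon = Node("Xeon E3-1265L v2", cores=4, mem_gb=32)

for node in (opteron, xeon):
    cores, mem_gb = chassis_totals(node)
    print(f"{node.name}: {cores} cores, {mem_gb // 1024}TB per chassis")
```

Both ratios come out at exactly two, which is where the "twice as many cores and twice as much main memory" claim comes from.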

The Freedom interconnect delivers 10Gb/sec of bandwidth out of each server node up to the fabric ASIC in the midplane; that ASIC is designed by SeaMicro/AMD and etched by Taiwan Semiconductor Manufacturing Corp. The chassis can have 64 Gigabit or 16 10GE uplinks out of the chassis to the outside world. Thus far, SeaMicro/AMD has not provided any kind of coherency across multiple server node enclosures, but we'll get to that in a separate story tomorrow.

A base configuration of the SM15000 with 64 Opteron-based compute nodes plus base main memory, eight disk drives, and sixteen Gigabit Ethernet uplinks out of the chassis costs $139,000. Both the Opteron and Xeon nodes can run Windows or Linux operating systems or VMware ESXi or Citrix Systems XenServer hypervisors.

Stretching the fabric across storage

The other big news coming out of AMD in San Francisco ahead of Intel Developer Forum is that the SeaMicro engineers have extended the Freedom interconnect so it can bring up to 1,408 disk drives, in a maximum of sixteen disk drive enclosures, onto the fabric and dynamically configure them as a virtual SAN to specific server nodes in a chassis or all of the nodes, if that's what you want to do.

Because the Freedom interconnect implements a 3D mesh/torus, it has multiple, redundant paths between any two components in the system, be it a compute node or a disk drive. The original SM10000 chassis did the same thing with the 64 drives in the front of the enclosure, one for every compute card, but with the new SM15000 chassis, that fabric is being extended to external disk enclosures. Feldman said that there is plenty of bandwidth to spare in the Freedom interconnect, which has a bisection bandwidth of 1.28Tb/sec, to add up to 1,408 disks or a maximum of 5PB of disk capacity to a single SM15000 chassis.

Because of the storage virtualization features in the Freedom ASIC, you can configure those disks in the enclosures as RAID-protected arrays attached to one or more compute nodes, and there is even a way to fail over multiple SM15000 arrays to external disks for higher availability and disaster tolerance.

AMD is letting customers use either SATA disk or flash drives in the SM15000 chassis and related disk enclosures, and it is hard to believe that any interconnect has enough bandwidth to drive 1,408 SSDs. AMD has ginned up three different disk enclosures. The FS 5084-L puts two drawers of storage in a 5U chassis, with a total of 84 drives and up to 336TB of disk per 5U enclosure. It can use 3.5-inch SATA or 2.5-inch SAS drives, and you have to use fat SATA drives to push the capacity up to 5PB on one SM15000 chassis.

The FS 2012-L is a 2U enclosure with a dozen disks (again, either 3.5-inch SATA or 2.5-inch SAS) and can hold up to 48TB of capacity. With sixteen of these puppies, you max out at 768TB of capacity per SM15000 chassis. And finally, there is the FS 2024-S, which is optimized for performance, using up to 24 peppy 2.5-inch SAS drives and delivering 24TB per enclosure or 384TB per SM15000 machine.
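The capacity ceilings for the three enclosures can be reproduced with a few lines of Python. The drive counts and per-enclosure capacities are the figures quoted above; the 64 in-chassis drives are an assumption (carried over from the SM10000 layout) that happens to make the 1,408-drive total add up.

```python
MAX_ENCLOSURES = 16    # external enclosures per SM15000 chassis
INTERNAL_DRIVES = 64   # assumption: in-chassis drive bays, as on the SM10000

# model -> (drives per enclosure, maximum TB per enclosure)
ENCLOSURES = {
    "FS 5084-L": (84, 336),
    "FS 2012-L": (12, 48),
    "FS 2024-S": (24, 24),
}

def max_capacity_tb(model: str) -> int:
    """External capacity with all sixteen enclosures of one model attached."""
    return ENCLOSURES[model][1] * MAX_ENCLOSURES

def max_drives(model: str) -> int:
    """External drives for one model plus the assumed in-chassis drives."""
    return ENCLOSURES[model][0] * MAX_ENCLOSURES + INTERNAL_DRIVES

for model, (drives, tb) in ENCLOSURES.items():
    print(f"{model}: {tb // drives}TB per drive, "
          f"{max_capacity_tb(model)}TB max, {max_drives(model)} drives")
```

On those numbers, sixteen FS 5084-L enclosures give 5,376TB, which is the roughly 5PB headline figure, and the two capacity-oriented enclosures work out to 4TB per drive against 1TB per drive in the FS 2024-S.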

Disk array pricing was not available at press time. ®