
AMD tops processor evolution with new mobile Kaveri chippery

'Big A' architecture change occurs 'once every 15, 20 years,' says AMD CTO

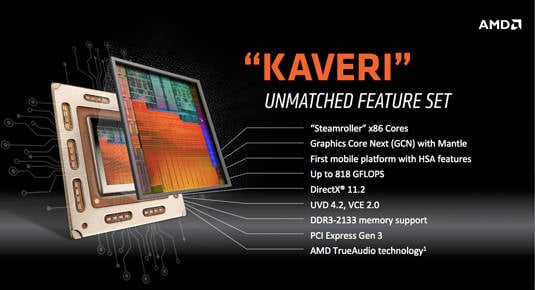

Deep Tech AMD has unveiled the mobile version of its "Kaveri" desktop processor, topping off its series of processors in which CPUs and GPUs not only reside on the same die, but also work together in shared-memory harmony – or heterogeneity, to be more precise.

"This [is] a culmination of five years of work to build out this APU roadmap," AMD senior director of mobility solutions Kevin Lensing told a gaggle of hacks at a briefing last month.

We're not entirely clear where Lensing gets his "five-year" benchmark – perhaps some internal AMD starting gun – but the concept of the APU, or accelerated processing unit, what AMD calls its CPU/GPU/whatever mashup, was first introduced at an AMD financial analysts' event in 2006, first demoed at Computex in June 2010, and first shipped in November of that year in the 18-watt Zacate and 9-watt Ontario parts.

AMD has also been working on the CPU/GPU shared-memory heterogeneous system architecture (HSA) for some time, tossing an OpenCL net over its CPUs and GPUs as early as 2009, and talking heterogeneity up big at its 2011 Fusion Summit. AMD was also one of the founding members of the HSA Foundation in June 2012, along with ARM, Imagination, MediaTek, and TI.

Now, Wednesday's release of the new HSA-enabled Kaveri chip mobilizes AMD's heterogeneous product stable, following this January's release of the desktop Kaveri, April's demo of the HSA-enabled "Berlin" Opteron server chip due later this year, and last month's introduction of the HSA-enabled "Bald Eagle" APU for high-end embedded applications.

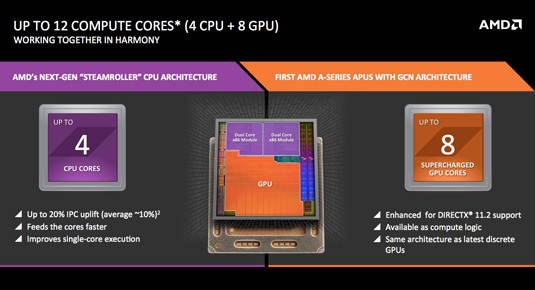

At each of those announcements, AMD asked if we in the computing world would be so kind as to toss the terms CPU and GPU into the lexical Osterizer and blend them into one. "CPU and GPU cores are now sort of equal citizens in terms of their ability to do compute work," Lensing explained at the briefing, "so we'll combine them together into a very simple concept that we'll call 'compute cores'."

But CPUs and GPUs aren't, of course, equal – CPUs excel in linear processing and GPUs show their muscle when handling highly parallel tasks. Lensing is not trying to fool anybody, however – he's merely a marketing guy who needs to simplify concepts when talking to, for example, the retail channel. So let's play along, and think of the equality of CPU and GPU compute cores in a Marxian fashion: "From each according to his ability, to each according to his need."

What allows Kaveri's CPU and GPU compute cores to play well together is a pair of mixed-case abbreviations: one acronym, hUMA (heterogeneous Uniform Memory Access), and one initialism, hQ (heterogeneous Queuing).

These two chunks o' tech exist to enable CPUs and GPUs to work together more efficiently. In the bad ol' pre-HSA days, if a CPU recognized that its associated GPU was better-suited to a task, it had to copy the relevant data from its own stash into the GPU's memory, then copy it back after the GPU had finished with it. The GPU was too stupid to manage scheduling, queuing, and the like.

Enter hUMA and hQ. In a nutshell, the hUMA architecture allows for one shared pool of memory for all CPU and GPU compute cores, and hQ assures that any compute core, whatever its specialty, can monitor task queues to accept and schedule tasks, for both itself and its brethren. [Enough of this "compute cores" crapola – just call 'em CPUs and GPUs.—Ed.]
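As a rough illustration of the difference, here is a toy model in plain Python. It is not the HSA runtime or any AMD API: the function names `run_with_copies` and `run_shared` are invented for this sketch, a Python list stands in for memory, and a `deque` stands in for a task queue that either kind of core could service.

```python
from collections import deque


def run_with_copies(data, kernel):
    """Pre-HSA style (toy model): the GPU has its own memory,
    so the data is copied over and the result is copied back."""
    copies = 0
    gpu_buffer = list(data)                        # copy CPU memory -> GPU memory
    copies += 1
    gpu_result = [kernel(x) for x in gpu_buffer]   # GPU does the work
    result = list(gpu_result)                      # copy GPU memory -> CPU memory
    copies += 1
    return result, copies


def run_shared(data, kernel):
    """hUMA/hQ style (toy model): one shared buffer, and a queue that
    hands out tasks by reference instead of by copy."""
    copies = 0
    queue = deque()
    queue.append((kernel, data))       # the task only points at the shared buffer
    while queue:
        fn, buf = queue.popleft()      # any core could pick this task up
        for i, x in enumerate(buf):
            buf[i] = fn(x)             # work happens in place, no copy
    return data, copies


if __name__ == "__main__":
    print(run_with_copies([1, 2, 3], lambda x: x * 2))
    print(run_shared([1, 2, 3], lambda x: x * 2))
```

Both paths produce the same result; the only thing that changes in this model is how many times the data is shuffled between memories, which is the overhead the shared pool is meant to eliminate.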

So exactly what cores are in the mobile Kaveri?

Enough about CPU and GPU cores as abstract concepts – how many of the li'l buggers does the mobile Kaveri APU have and how fast do they run? The answer, of course, is "it depends" – cores and clock speeds vary by model.

More on that later, but for now let's just talk about the top-end Kaveri part – prosaically named the AMD A-Series FX-7600P with Radeon R7 Graphics – which has four "Steamroller" CPU cores and eight "Graphics Core Next" (GCN) GPU cores – as was also true with January's top-end desktop Kaveri. The FX-7600P's CPU cores run at a base clock of 2.7GHz and boost to 3.6GHz, and its GPU cores max out at 686MHz.

The new APU, AMD says, offers a big boost over its predecessors, 'Trinity' and 'Richland'

Steamroller is the third generation of AMD's Tonka toy–named CPU cores – from Bulldozer to Piledriver to Steamroller.

AMD CTO Joe Macri, also at the briefing, veered a bit off Lensing's "equal citizens" messaging regarding CPU and GPU cores. "I always tell folks that the most important compute device is the CPU core," he said. Macri noted that AMD has over 500 designers on its CPU team alone.

He also noted that Steamroller is an evolutionary design. "CPU design is about a lot of evolution," he said. "There's not a whole lot of revolution left in CPUs, but there's a lot of evolution left."

One area in which Kaveri's CPU cores are evolving is in the basic metric of instructions per cycle (or clock), usually expressed as IPC. "There is no glass ceiling in IPC," Macri said. "We used to think there was one, that a little bit above 1 was as much as we could ever get, but [AMD chief cores architect Jim] Keller really believes that we can keep pumping that IPC higher and higher as we go forward."

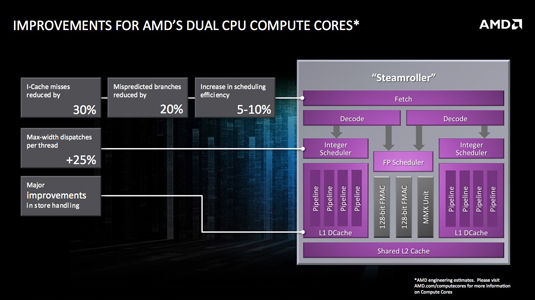

With the mobile Kaveri's release, Macri, Keller, and their 500 compatriots have evolved the CPU cores to raise IPC as much as 20 per cent over its predecessors, "Trinity" and "Richland", with an average IPC boost of around 10 per cent. Accomplishing this, of course, involved a boatload of tweaks. "It's complicated," Macri said. "There's no two ways about it."

As examples of that complicated effort, Macri cited the team's work on the front end of the processor, which resulted in a 30 per cent reduction in instruction-cache misses by "basically" adding 50 per cent more instruction cache. The team also knocked mispredicted branches down by about 20 per cent by both improving the branch-prediction algorithm and doubling the size of the branch target buffer to "about 10K entries," he said.

Scheduling efficiency is up by 5 to 10 per cent. "Scheduling is basically the window of instructions you're looking at," Macri said, "so we increased the window from 40 to 48 entries so we can look at more instructions and find things that don't conflict." One other front-end improvement involved removing conflicts by dumping the old shared integer decoder and simply having two independent ones.
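To see why a wider window helps, consider a deliberately simplified scheduler model in Python. This is a sketch under stated assumptions, not a description of Steamroller's actual logic: every instruction takes one cycle, at most `width` instructions issue per cycle, and the scheduler can only see the oldest `window` pending instructions. The function name `cycles_to_issue` and the example program are hypothetical, and the window sizes are kept tiny so the effect is easy to follow (the real change was from 40 to 48 entries).

```python
def cycles_to_issue(deps, window, width=2):
    """Count cycles needed to issue every instruction.

    deps[i] lists the indices of earlier instructions that
    instruction i must wait for. Toy model: one-cycle latency,
    `width` issues per cycle, only the oldest `window` pending
    instructions are visible to the scheduler.
    """
    issued_at = [None] * len(deps)     # cycle in which each instruction issued
    pending = list(range(len(deps)))   # un-issued instructions, program order
    cycle = 0
    while pending:
        cycle += 1
        issued = []
        for i in pending[:window]:     # look only inside the window
            if len(issued) == width:
                break
            # Ready if every dependency issued in an earlier cycle.
            if all(issued_at[d] is not None and issued_at[d] < cycle
                   for d in deps[i]):
                issued.append(i)
        for i in issued:
            issued_at[i] = cycle
        pending = [i for i in pending if issued_at[i] is None]
    return cycle


# A four-instruction dependency chain followed by four independent instructions.
program = [[], [0], [1], [2], [], [], [], []]

if __name__ == "__main__":
    print(cycles_to_issue(program, window=2))  # narrow window
    print(cycles_to_issue(program, window=8))  # wide window
```

With the narrow window the scheduler is stuck staring at the dependency chain and cannot reach the independent work behind it; with the wide window it can pair each chain step with an independent instruction, so the same program finishes in fewer cycles.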

Macri, Keller, et al. worked on the back end of Kaveri as well. For example, its predecessors' memory subsystems could only issue one store per cycle; Kaveri can issue two. Also, they increased the size of the queues through which loads and stores pass.

"All of this improves performance dramatically from an IPC point of view," Macri said. "These are all IPC tricks – the amount of work you get done in a single cycle. That's the most efficient way to improve CPU performance."
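The arithmetic behind Macri's point can be sketched in a few lines of Python. The relation used here, instructions per second = IPC × clock frequency, is the standard first-order model; the baseline IPC of 1.0 is a placeholder chosen for illustration, not an AMD figure, while the 2.7GHz base clock and the 10 per cent average IPC gain are the numbers quoted above.

```python
BASE_CLOCK_HZ = 2.7e9   # FX-7600P base clock, as quoted in the article
BASELINE_IPC = 1.0      # hypothetical placeholder, not a measured value


def instructions_per_second(ipc, clock_hz):
    """First-order throughput model: IPC times clock frequency."""
    return ipc * clock_hz


def speedup_from_ipc_gain(ipc_gain, clock_hz=BASE_CLOCK_HZ):
    """Relative throughput when IPC rises by `ipc_gain` at a fixed clock."""
    before = instructions_per_second(BASELINE_IPC, clock_hz)
    after = instructions_per_second(BASELINE_IPC * (1 + ipc_gain), clock_hz)
    return after / before


def clock_needed_for_same_gain(ipc_gain, clock_hz=BASE_CLOCK_HZ):
    """Clock a core with unchanged IPC would need to match that gain."""
    return clock_hz * (1 + ipc_gain)


if __name__ == "__main__":
    print(speedup_from_ipc_gain(0.10))
    print(clock_needed_for_same_gain(0.10) / 1e9, "GHz")
```

In this model a 10 per cent IPC gain at a fixed 2.7GHz is worth the same throughput as pushing an unimproved core to roughly 2.97GHz, without the extra clock speed.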