
All aboard the PCIe bus for Nvidia's Tesla P100 supercomputer grunt

No NVLink CPU? No problem

ISC Nvidia has popped its Tesla P100 accelerator chip onto PCIe cards for bog-standard server nodes tasked with artificial intelligence and supercomputer-grade workloads.

The P100 was unveiled in April at Nvidia's GPU Tech Conference in California: it's a 16nm FinFET graphics processor with 15 billion transistors on a 600mm² die. It's designed to crunch trillions of calculations a second for specialized software including neural-network training and weather and particle simulations. The GPU uses Nvidia's Pascal architecture, which has fancy tricks like CPU-GPU page migration, and we've detailed the design previously.

Each P100 features four 40GB/s Nvidia NVLink ports to connect together clusters of GPUs, NVLink being Nvidia's high-speed interconnect. IBM's Power8+ and Power9 processors will support NVLink, allowing the host's Power CPU cores to interface directly with the GPUs.

Those Big Blue chips are destined for American government-owned supercomputers and other heavy-duty machines. The rest of us, in the real world, are using x86 processors for backend workloads.

Nearly 100 per cent of compute processors in data centers today are built by Intel; Intel does not support Nvidia's NVLink, and Intel does not appear to be in any hurry to do so. Thus, Nvidia has emitted – as expected and as planned – a PCIe version of its Tesla P100 card so server builders can bundle the accelerators with their x86 boxes. That means the GPUs talk to the host CPUs, and to each other, over the PCIe bus rather than NVLink.

The PCIe P100 comes in two flavors: one with 16GB of HBM2 stacked RAM that has an internal memory bandwidth of 720GB/s, and the other – cheaper – option with 12GB of HBM2 RAM and an internal memory bandwidth of 540GB/s. Both throw 32GB/s over the PCIe gen-3 x16 interface.
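For a sense of scale, here's a back-of-envelope sketch of where the 32GB/s figure comes from, and how it compares with the four 40GB/s NVLink ports mentioned above. It assumes standard PCIe gen-3 signalling (8 GT/s per lane, 128b/130b encoding) and ignores packet overhead, so real-world throughput will be lower. It also shows that the cheaper card gives up exactly a quarter of both its memory capacity and its memory bandwidth.

```python
# Theoretical link bandwidths for the P100 (a sketch, not an Nvidia spec sheet).
# Assumed PCIe gen-3 signalling: 8 GT/s per lane, 128b/130b line encoding.
PCIE3_GT_PER_S = 8.0          # giga-transfers per second, per lane
PCIE3_ENCODING = 128 / 130    # useful bits per transmitted bit
LANES = 16                    # x16 slot

def pcie3_gb_per_s(lanes: int) -> float:
    """Theoretical one-way PCIe gen-3 bandwidth in GB/s (ignores packet overhead)."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes / 8  # 8 bits per byte

one_way = pcie3_gb_per_s(LANES)
both_ways = 2 * one_way

# NVLink figure as quoted in the article: four ports at 40GB/s each.
nvlink_total = 4 * 40.0

print(f"PCIe gen-3 x16: {one_way:.2f} GB/s each way, {both_ways:.1f} GB/s total")
print(f"NVLink, 4 ports: {nvlink_total:.0f} GB/s, about {nvlink_total / both_ways:.1f}x PCIe")

# Cheaper card vs 16GB card: fraction of memory capacity and memory bandwidth kept.
print(12 / 16, 540 / 720)
```

The 32GB/s quoted for both cards is therefore the sum of both directions, roughly 16GB/s each way.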

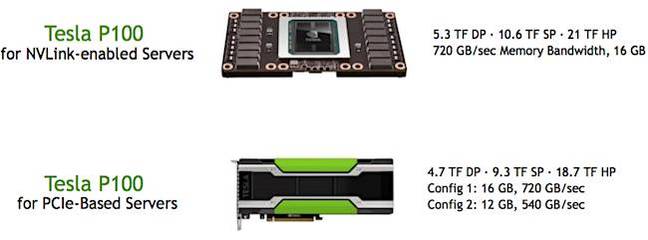

They each peak at 4.7TFLOPS when crunching 64-bit double-precision math; 9.3TFLOPS for 32-bit single-precision; and 18.7TFLOPS for 16-bit half-precision. That's a little under the raw P100 specs of 5.3TFLOPS, 10.6TFLOPS and 21TFLOPS for double, single and half precision, respectively. The reason: the PCIe card's performance is dialed down so it doesn't kick out too much heat – you don't want racks and racks of GPU-accelerated nodes to melt.
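A quick sketch of how much is being given up. The PCIe card lands at roughly 88 to 89 per cent of the full-power figures at every precision, which is what you'd expect if the chip is simply clocked lower rather than having units switched off (an inference, not something Nvidia spelled out here). The core count below is an assumption taken from Nvidia's published P100 specs, not from this article: 3,584 single-precision cores, each doing one fused multiply-add (two FLOPs) per clock.

```python
# Compare the PCIe card's peak figures with the full-power P100, and back out
# the clock speed each FP32 figure implies.
# Assumption (not from the article): 3,584 FP32 cores, one FMA (2 FLOPs) per clock each.
FP32_CORES = 3584
FLOPS_PER_CORE_PER_CLOCK = 2  # one fused multiply-add = multiply + add

def implied_clock_ghz(peak_tflops: float) -> float:
    """Clock in GHz needed to reach a given peak FP32 rate."""
    return peak_tflops * 1e12 / (FP32_CORES * FLOPS_PER_CORE_PER_CLOCK) / 1e9

# Peak TFLOPS quoted in the article: (PCIe card, full-power P100).
peaks = {"FP64": (4.7, 5.3), "FP32": (9.3, 10.6), "FP16": (18.7, 21.0)}

for precision, (pcie, full) in peaks.items():
    print(f"{precision}: PCIe card delivers {pcie / full:.0%} of full-power peak")

print(f"Implied clocks: {implied_clock_ghz(9.3):.2f} GHz (PCIe) "
      f"vs {implied_clock_ghz(10.6):.2f} GHz (full power)")
```

Under those assumptions, the figures work out to roughly 1.30GHz for the PCIe card against 1.48GHz for the full-power part.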

While the NVLink P100 will consume 300W, its 16GB PCIe cousin will use 250W, and the 12GB option just below that.
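Putting the article's performance and power numbers side by side suggests the trade is a reasonable one: the 16GB PCIe card gives up about 11 per cent of peak double-precision throughput but draws about 17 per cent less power, so on paper it does slightly more work per watt. The sketch below uses only the quoted peak FP64 figures; the 12GB card is left out because its exact wattage isn't given.

```python
# Peak FP64 throughput per watt, from the article's figures only.
cards = {
    "NVLink P100": (5.3, 300),     # (peak FP64 TFLOPS, watts)
    "16GB PCIe P100": (4.7, 250),
}

def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert TFLOPS to GFLOPS and divide by board power."""
    return tflops * 1000 / watts

for name, (tflops, watts) in cards.items():
    print(f"{name}: {gflops_per_watt(tflops, watts):.1f} GFLOPS/W")
```

These are peak-to-rated-power ratios; measured efficiency on real workloads will differ.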

By the way, if you want full-speed, full-power Tesla P100 parts in non-NVLink servers, you will be able to get hold of them: system makers can add a PCIe gen-3 interface to the board for machines that can stand the extra thermal output. But if you want PCIe only and are conscious of power consumption, the lower-performance, lower-wattage PCIe options are there for you.

"The PCIe P100 will be for workhorse systems – the bulk of machines," Roy Kim, a senior product manager at Nvidia, told The Register. He suggested four or eight of the cards could be fitted in each server node.
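To put Kim's four- or eight-card suggestion in context, this sketch scales the 16GB PCIe card's quoted figures up to a whole node. It's a simple linear multiplication of peak numbers and GPU board power only: CPUs, memory, fans and power-supply losses are ignored, and real applications rarely scale perfectly across GPUs.

```python
# Per-node peak totals for four- or eight-card configurations, using the
# 16GB PCIe card's figures from the article. GPU boards only.
PCIE_P100 = {"fp64_tflops": 4.7, "fp32_tflops": 9.3, "watts": 250, "hbm2_gb": 16}

def node_totals(cards: int) -> dict:
    """Scale each per-card figure linearly to a node holding `cards` GPUs."""
    return {key: value * cards for key, value in PCIE_P100.items()}

for n in (4, 8):
    t = node_totals(n)
    print(f"{n} cards: {t['fp64_tflops']:.1f} TFLOPS FP64, "
          f"{t['fp32_tflops']:.1f} TFLOPS FP32, {t['hbm2_gb']} GB HBM2, "
          f"{t['watts']} W of GPU power")
```

An eight-card node therefore needs roughly 2kW just for the accelerators, which is why the lower-wattage PCIe parts matter for dense racks.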

These PCIe devices won't appear until the final quarter of 2016, and will be available from Cray, Dell, HP, IBM, and other Nvidia partners. The final pricing will be up to the reseller, but we're told the cheaper option will set you back about as much as an Nvidia K80 – which today is about $4,000.

For what it's worth, Nvidia told us the P100 PCIe cards will later this year feature in an upgraded build of Europe's fastest supercomputer: the Piz Daint machine at the Swiss National Supercomputing Center in Lugano, Switzerland. ®

PS: Look out for updates to Nvidia's AI training software Digits – version four will include new object detection technology – and its cuDNN library, version 5.1 of which will include various performance enhancements. Meanwhile, Nv's GPU Inference Engine (GIE) will finally make a public appearance this week: this is code designed to run on hardware ranging from data center-grade accelerators down to system-on-chips in cars and drones, giving applications the ability to perform inference using trained models.