Cloudy outlook for climate models

More aerosols - the solution to global warming?

Climate models appear to be missing an atmospheric ingredient, a new study suggests.

December's issue of the International Journal of Climatology from the Royal Meteorological Society contains a study of computer models used in climate forecasting. The study is by joint authors Douglass, Christy, Pearson, and Singer - of whom only the third mentioned is not entitled to the prefix Professor.

Their topic is the discrepancy between troposphere observations from 1979 to 2004 and what computer models have to say about the temperature trends over the same period. They focus on tropical latitudes between 30 degrees north and south (mostly 20 degrees N and S) because, they write, "much of the Earth's global mean temperature variability originates in the tropics". Even so, the authors crunched through an unprecedented amount of historical and computational data in making their comparison.

For observational data they make use of ten different data sets, including ground and atmospheric readings at different heights.

On the modelling side, they use the 22 computer models which participated in the IPCC-sponsored Program for Climate Model Diagnosis and Intercomparison. Some models were run several times, to produce a total of 67 realisations of temperature trends. The IPCC is the United Nations' Intergovernmental Panel on Climate Change, which published its Fourth Assessment Report [PDF, 7.8MB] earlier this year. The model comparison program uses a common set of forcing factors.

Notable in the paper is a generosity when calculating the statistical uncertainty of the data from the models. In aggregating the models, the uncertainty is derived by plugging the number 22 into the maths, rather than 67. Using 67 would narrow the margin of error around the average trend, making it harder to reconcile any discrepancy with the observations. In addition, when they plot and compare the observational and computed data, they double this error interval.
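To see why the choice between 22 and 67 matters, here is a minimal sketch. It assumes the uncertainty is a plain standard error of the mean, the spread divided by the square root of the sample count; the paper's exact formula may differ. The spread value is hypothetical and chosen only for illustration.

```python
import math


def standard_error(spread: float, n: int) -> float:
    """Standard error of the mean, assuming n independent samples."""
    return spread / math.sqrt(n)


# Hypothetical spread of model trends in degrees per decade (not from the paper).
spread = 0.10

se_models = standard_error(spread, 22)        # one sample per model
se_realisations = standard_error(spread, 67)  # one sample per model run

# The doubled interval described above, under the same assumption.
doubled_models = 2 * se_models

print(f"N=22: +/-{se_models:.4f}, doubled: +/-{doubled_models:.4f}")
print(f"N=67: +/-{se_realisations:.4f}")
print(f"N=22 interval is {se_models / se_realisations:.2f}x wider")
```

Whatever the spread, the interval computed with 22 is wider than the one computed with 67 by a factor of the square root of 67/22, so the smaller count is the more forgiving choice for the models.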

So to the burning question: on their analysis, does the uncertainty in the observations overlap with the results of the models? If yes, then the models are supported by the observations of the last 30 years, and they could be useful predictors of future temperature and climate trends.
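The overlap question can be put as a simple check. The sketch below assumes each trend is summarised as a mean plus or minus a half-width; the function name and all the numbers are hypothetical and are not taken from the study.

```python
def intervals_overlap(mean_a: float, half_a: float,
                      mean_b: float, half_b: float) -> bool:
    """True if [mean_a - half_a, mean_a + half_a] intersects
    [mean_b - half_b, mean_b + half_b]."""
    return abs(mean_a - mean_b) <= half_a + half_b


# Hypothetical trends in degrees per decade, for illustration only.
agree = intervals_overlap(0.20, 0.05, 0.22, 0.05)     # means close together
disagree = intervals_overlap(0.25, 0.03, 0.05, 0.05)  # means far apart

print(agree, disagree)
```

Two intervals overlap when the gap between their means is no larger than the sum of their half-widths, which is why wider error bars make agreement easier to find.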

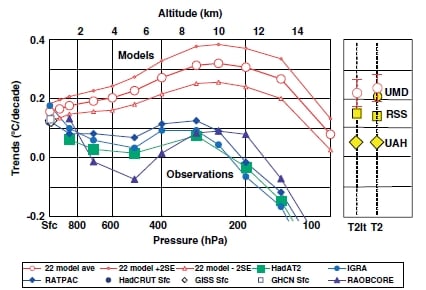

Unfortunately, the answer according to the study is no. Figure 1 in the published paper, available here [PDF], pretty much tells the story.

Douglass et al. Temperature time trends (degrees per decade) against pressure (altitude) for 22 averaged models (shown in red) and 10 observational data sets (blue and green lines). Only at the surface are the mean of the models and the mean of observations seen to agree, within the uncertainties.

While the trends coincide at the surface, at every height in the troposphere the computer models indicate stronger warming trends than were actually observed. More significantly, there is no overlap between the uncertainty ranges of the observations and those of the models.

In other words, the observations and the models seem to be telling quite different stories about the atmosphere, at least as far as the tropics are concerned.

So can the disparities be reconciled?