
Gigabyte passively cooled Radeon 4850 card

How quiet does your GPU need to be?

Review: We've been hugely impressed by the Radeon HD 4850 graphics card thanks to the balance it strikes between price and performance, and we firmly believe that, at £125, it's damn good value.

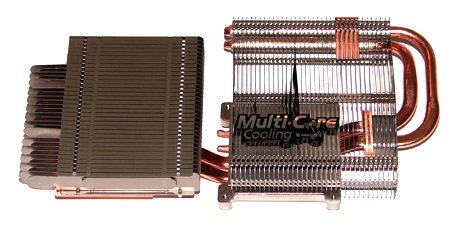

Gigabyte's GV-R485MC-1GH: double-slot design...

Pretty much our only reservation with the reference HD 4850 centres on the cooling package. The HD 4850's graphics chip employs 800 unified shaders that generate a fair amount of heat, yet AMD chose a single-slot design for the HD 4850, whereas the HD 4870 uses a dual-slot design with an enormous cooler. The single-slot form factor makes it easy to slip an HD 4850 into almost any PC, and there's more good news: AMD has selected a gentle fan speed that makes the HD 4850 surprisingly quiet.

The combination of a slimline heatsink and low fan speed means that the heat produced by the HD 4850 gets trapped in the casing, and we concluded our original review by saying: "We'd give the HD 4850 the nod on this one despite its toasty hotness."

Gigabyte has decided that the cooling package on the HD 4850 could stand some improvement and the result is the GV-R485MC-1GH, which is passively cooled. The model code breaks down thus: GV for Gigabyte VGA; R485 denotes a Radeon HD 4850; MC stands for Multi-Core cooling; and 1GH refers to the 1GB of memory.

Gigabyte offers an animation that explains the Multi-Core Cooling feature, but our photos should make things clear enough.

...with some serious metal for passive cooling

One cooling core sits directly on top of the GPU, and two more cooling cores are each connected to the main core by a pair of heatpipes. These cooling cores are quite sizeable affairs, so Gigabyte has used a dual-slot design, which gives this HD 4850 a packaging envelope similar to that of an HD 4870. One of the coolers projects through the mounting bracket by a few millimetres, but this looks like a means of supporting the cooler rather than a way of shedding heat into the air at the rear of the case.