
IBM shows off Power7 HPC monster

Big Blue unveils big box: Crowd goes wild

The water cooling links into the nodes through the front of the chassis, which is to the right in this picture. The chassis weighs a little more than 300 pounds fully loaded. A dozen of these, plus up to 1PB of local storage, can be put into a specially designed rack. This rack delivers 98.3 teraflops of number-crunching power.

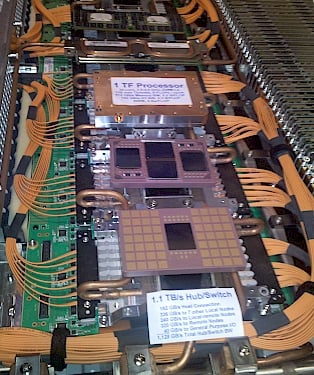

The Power7 IH node hub/switch network

The hub/switch that sits at the heart of each Power7 IH node and links the nodes together is the secret sauce of this machine. Benner would not elaborate much on this network, but did confirm that it borrows ideas from two sources: the "Federation" SP hub/switch that IBM created for ASCI Blue and other supers running AIX, and the InfiniBand switches and related InfiniBand technologies that Big Blue has been using for years to link Power5 and Power6 processors to remote I/O drawers.

Benner did brag that the hub/switch technology in the IH node "was better than both" Federation and InfiniBand, and said that one of the key distinctions is that it presents a two-level topology to all nodes in the network of machines. Within a node, all of the processors are linked to each other electronically through the motherboard and controlled by the IH node hub/switch.

The optical interconnects mount onto the top of the hub/switch - the squares on the top are actually made up of a grid of small optical transceivers, with each square delivering 10 Gb/sec of bandwidth, according to Benner. The hub/switch module and the Power7 IH MCMs are put together at IBM's facility in Bromont, Quebec, which is also where Sony PlayStation 3 and Microsoft Xbox 360 chip packages are manufactured. IBM's East Fishkill, New York, wafer baker is where the Power7 chips and the chips that make up the hub/switch are cooked up.

The way the Power7 IH node interconnect works is simple: most of the optical interconnects that come out of the back end of the box are used to link the nodes into a supernode, which is four drawers of capacity rated at 32 teraflops. The hub/switch interconnect shown at SC09 can currently scale to 512 supernodes, which works out to 16.4 petaflops. (IBM is going to have to overclock this puppy to 4.88 GHz to hit 20 petaflops, apparently.)
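For anyone who wants to check the scaling sums, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above, and it assumes that peak flops scale linearly with both supernode count and clock speed. That assumption is ours, not IBM's, and the function names are made up for illustration.

```python
# Figures quoted in the article.
TFLOPS_PER_SUPERNODE = 32.0   # one supernode = four drawers
MAX_SUPERNODES = 512          # current limit of the hub/switch interconnect
TARGET_PFLOPS = 20.0          # the 20 petaflops goal
OVERCLOCK_GHZ = 4.88          # clock speed said to be needed for that goal


def peak_pflops(supernodes, tflops_per_supernode=TFLOPS_PER_SUPERNODE):
    """Aggregate peak in petaflops, assuming perfectly linear scaling."""
    return supernodes * tflops_per_supernode / 1000.0


def implied_base_clock_ghz(current_pflops, target_pflops, target_clock_ghz):
    """Clock speed implied for the current peak.

    Assumption (ours): peak flops are proportional to clock speed.
    """
    return target_clock_ghz * current_pflops / target_pflops


full_machine = peak_pflops(MAX_SUPERNODES)
base_clock = implied_base_clock_ghz(full_machine, TARGET_PFLOPS, OVERCLOCK_GHZ)

print(f"Peak at {MAX_SUPERNODES} supernodes: {full_machine:.1f} petaflops")
print(f"Implied clock at that peak: {base_clock:.2f} GHz")
```

If the linear-scaling assumption holds, the 4.88 GHz quip implies the 16.4 petaflops figure is based on a clock of roughly 4 GHz.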

Benner said that the hub/switch module delivers 1,128 GB/sec - that's bytes, not bits - in aggregate bandwidth. That breaks down into 192 GB/sec of bandwidth into each Power7 MCM (what IBM calls a host connection), 336 GB/sec of connectivity to the seven other local nodes on the drawer, 240 GB/sec of bandwidth between the nodes in a four-drawer supernode, and 320 GB/sec dedicated to linking nodes to remote nodes. There is another 40 GB/sec of general purpose I/O bandwidth. ®
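The bandwidth breakdown is easy enough to tally. The short Python sketch below simply adds up the five figures Benner gave and shows each one's share of the total. The dictionary keys are our own labels, and the per-neighbour figure assumes the local bandwidth is split evenly across the seven other nodes in the drawer, which IBM has not confirmed.

```python
# Per-hub bandwidth figures quoted by Benner, in GB/sec (bytes, not bits).
# The key names are illustrative labels, not IBM terminology.
HUB_BANDWIDTH_GBS = {
    "host_connection": 192,     # into the Power7 MCM
    "local_drawer": 336,        # to the seven other nodes in the drawer
    "supernode": 240,           # between nodes in a four-drawer supernode
    "remote": 320,              # to nodes in other supernodes
    "general_purpose_io": 40,
}

LOCAL_NEIGHBOURS = 7

total_gbs = sum(HUB_BANDWIDTH_GBS.values())

# Assumption (ours): the local-drawer bandwidth is shared evenly.
per_neighbour_gbs = HUB_BANDWIDTH_GBS["local_drawer"] / LOCAL_NEIGHBOURS

for name, gbs in HUB_BANDWIDTH_GBS.items():
    print(f"{name:20s} {gbs:5d} GB/sec  {100 * gbs / total_gbs:5.1f}%")
print(f"{'total':20s} {total_gbs:5d} GB/sec")
print(f"Per local neighbour, if split evenly: {per_neighbour_gbs:.0f} GB/sec")
```

The five components do add up to the quoted 1,128 GB/sec aggregate.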