
Amazon cloud accused of network slowdown

Users cry latency spike

Amazon's sky-high EC2 service has experienced a significant increase in network latency in recent days, according to data from two separate companies running widely-used management tools in tandem with the service.

Cloudkick - one of the many outfits that offer a service for overseeing the use of Amazon EC2 and other so-called compute clouds - first noticed an Amazon latency spike around Christmas time, and the problem has grown steadily over the past few weeks.

"We have very concrete evidence that Amazon is having latency issues on its network," Cloudkick's Alex Polvi tells The Reg. "If you look at data across all of our customers, latency was completely smooth until about Christmas time and then things start going nuts."

Amazon EC2 - short for Elastic Compute Cloud - provides on-demand access to scalable compute resources via the net. Similar services are available from the likes of Rackspace, Slicehost, and GoGrid.

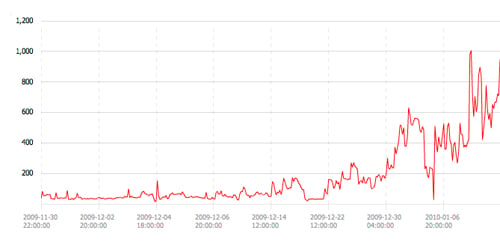

Cloudkick's data - posted to the company's blog here - covers "several hundred" EC2 server instances across a wide range of customers. Normally, Polvi says, the average ping latency is around 50 milliseconds. But in recent weeks, it has climbed as high as 1000 milliseconds.

Cloudkick maps Amazon EC2 latency

"Amazon has a great track record in performance and reliability, so this is why we are so surprised by this data," reads Cloudkick's blog post on the matter.

Cloudkick's numbers are limited to Amazon's "US-East" region. EC2 serves up processing power from two separate geographic locations - the US and Europe - and each geographic region is split into multiple availability zones designed never to vanish at the same time.

enStratus, an outfit similar to Cloudkick, confirms the latency increase, but it says the spike is significantly smaller. Response time from the company's network into "all regions" of the Amazon cloud increased by 10 per cent on January 9, enStratus CTO George Reese tells The Reg, and it has remained roughly that high ever since. Reese's sample size is around 300 server instances.

Cloudkick and enStratus released their data in the wake of a blog post from Alan Williamson, co-head of the UK-based cloud consultancy AW2.0, who asked whether Amazon was experiencing capacity issues after one of his customers experienced a serious slowdown beginning at the end of last year. "We began noticing [the problem] around the end of November," Williamson tells The Reg. "We had been running with Amazon for approximately 20 months with absolutely no problems whatsoever. We could throw almost anything at them and it wouldn't even hiccup."

Echoing what Cloudkick and enStratus have seen, Williamson says he eventually traced the problem back to network latency. On the application in question, the average time needed to turn around a web request jumped from about 2 to 3 milliseconds to between 50 and 100 milliseconds.

Responding to an inquiry about the post from Data Center Knowledge, Amazon said that its infrastructure does not have capacity issues. And this afternoon, the company sent a similar statement to The Reg.

"We do not have over-capacity issues. When customers report a problem they are having, we take it very seriously," a company spokeswoman said. "Sometimes this means working with customers to tweak their configurations or it could mean making modifications in our services to assure maximum performance."

When we specifically asked about latency problems, the company did not respond.

Since posting to his blog, Williamson has discussed the issue with Amazon. But it is still unresolved. Williamson says that the problem abates if he upgrades to more expensive instances. The least expensive EC2 instance offers 1.7GB memory, one virtual core, 160GB of storage, a 32-bit platform, and "moderate" I/O performance. More expensive instances offer greater resources.

According to Williamson, Amazon also says that more expensive instances reside in a different part of its infrastructure. Cloudkick's data represents all instance sizes, but Alex Polvi says that it likely represents more small instances than large, because that's what people use more. Smaller instances are not only cheaper. They're the default.

This could mean that more expensive instances don't have the latency problem, says enStratus's George Reese, but it may simply mean that the problem can be masked with greater resources. He also points out that if you're using many small-server instances as opposed to a few big ones, latency will be more of an issue.

Cloudkick's Alex Polvi speculates that the issue could be down to the use of different hardware. Smaller instances, for instance, may use different networking gear.

Thorsten von Eicken, CTO of a third cloud-management firm, RightScale, has not noticed a latency spike and points out that ICMP pings may be a poor judge of latency because they receive low priority. "I can't confirm the issues reported as we have not seen these problems ourselves," he tells The Reg. "We tend to use larger instances, as do most of our customers, so we may not see these issues as much."

George Reese stresses that he's not seeing problems with processing or moving data. He's only seeing problems with latency. This means that customers will only notice the problem on certain applications. His customers are not seeing major problems, he says, but that could be because most of their applications are transactional enterprise tools, not the sort of thing that requires relatively low latency. ®