
Argonne taps IBM for 10 petaflops super

More BlueGene/Q details emerge

The US Department of Energy's Argonne National Laboratory announced on Tuesday that it has inked a deal with IBM to build a monster BlueGene supercomputer that will weigh in at 10 petaflops of peak theoretical performance when it is operational around the middle of next year.

El Reg caught wind of the Mira BlueGene/Q massively parallel super going into Argonne back in October, when Cray announced that it had been able to sell an 18,000-core, Opteron-based XE6 super into the Argonne facility even though it has been an IBM stronghold in recent years.

That Cray box weighs in at 150 teraflops of peak performance, and will be utterly dwarfed by the Mira BlueGene/Q machine – unless Argonne hands Cray a bunch of money to expand the Beagle XE6 box. The XE6 architecture can scale to more than 1 million cores and multiple petaflops of performance.
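To put "utterly dwarfed" in numbers, here is a back-of-the-envelope Python sketch that divides the two peak ratings quoted above. The constant names are our own, not IBM's or Cray's, and the comparison is peak against peak only.

```python
# Peak ratings quoted in the article, in teraflops; names are our own.
MIRA_PEAK_TFLOPS = 10_000.0   # 10 petaflops BlueGene/Q "Mira"
BEAGLE_PEAK_TFLOPS = 150.0    # Cray XE6 "Beagle"

# How many times larger Mira's theoretical peak is than Beagle's
ratio = MIRA_PEAK_TFLOPS / BEAGLE_PEAK_TFLOPS
print(f"Mira's peak is roughly {ratio:.0f} times Beagle's")  # roughly 67 times
```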

Last November, at the SC10 supercomputing conference in New Orleans, IBMers walked us through the BlueGene/Q prototype, which was on display publicly for the first time and which we compared to the prior BlueGene/L and BlueGene/P machines. IBM was a bit vague about the details of the processors used in the BlueGene/Q system, but provided some feeds and speeds, some of which turned out not to be true.

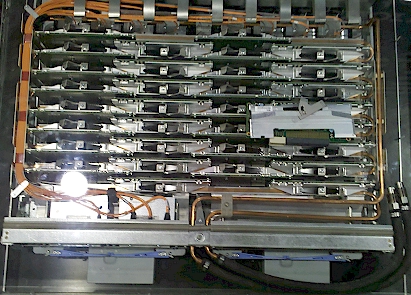

A rack of IBM BlueGene/Q HPC action

Back at SC10, an IBM software engineer working on BlueGene/Q said that the machine was based on a new Power-derived chip that ran at 1.6GHz. This chip had 16 cores for doing calculations and a 17th core for running a Linux kernel. These were 64-bit cores with four threads per core, just like the cores used in IBM's eight-core Power7 chips for its current generation of Power Systems servers, and the 16-core Power A2 "wire-speed" chip, which is not used in anything yet.

At the time, El Reg speculated that the BlueGene/Q processor would be a modified version of the Power A2 chip, but one running at a lower speed – 1.6GHz versus 2.3GHz – and with an extra core.

On Tuesday, IBM's hardware people cleared up the mystery about the processor at the heart of the BlueGene/Q super, saying that it is nothing funky with 17 cores but rather a 16-core Power A2 processor that is geared down and has special features for fast thread context switching. (This is what happens when you let a software engineer tell you about the hardware, I guess.) The BlueGene/Q machine will put one of these Power A2 chips on each compute node, with 8GB or 16GB of main memory per node (512MB to 1GB per core) running at 1.33GHz. That is close to the processor's 1.6GHz clock, and keeping memory speed near processor speed is necessary to get more efficiency out of the BlueGene/Q machine.
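The per-core memory figures and the "close to the processor" remark are simple arithmetic, sketched below in Python. The helper name is hypothetical, and it assumes memory is split evenly across the 16 compute cores, counting 1GB as 1,024MB.

```python
CORES_PER_NODE = 16      # compute cores per Power A2 chip, per the article
CPU_CLOCK_GHZ = 1.6      # processor clock
MEMORY_CLOCK_GHZ = 1.33  # main memory clock


def memory_per_core_mb(node_memory_gb: int, cores: int = CORES_PER_NODE) -> float:
    """Split a node's main memory evenly across its cores (1GB taken as 1,024MB)."""
    return node_memory_gb * 1024 / cores


for node_gb in (8, 16):
    print(f"{node_gb}GB per node -> {memory_per_core_mb(node_gb):.0f}MB per core")

# Memory clock expressed as a fraction of the core clock
print(f"{MEMORY_CLOCK_GHZ / CPU_CLOCK_GHZ:.0%}")  # 83%
```

The two node configurations give 512MB and 1,024MB per core, matching the range quoted above, and memory runs at a bit over four-fifths of the core clock.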

The compute nodes have water blocks on the processors, main memory, and optical interconnects, and use water that is between 16 and 25 degrees Celsius (61 to 77 degrees Fahrenheit) to suck the heat out of the nodes. The water cools the optics first, then the compute nodes.

The BlueGene/Q compute drawer

With the BlueGene/Q design, IBM is separating compute nodes from I/O nodes, which will allow compute and I/O capacity to scale independently of each other (which you cannot do in the BlueGene/L and BlueGene/P designs).

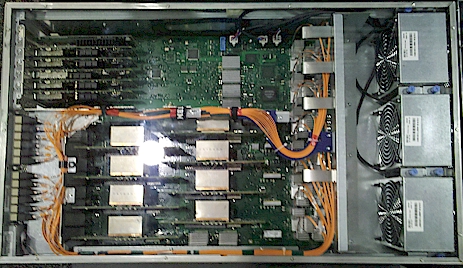

The compute drawer has 32 of the single-socket Power A2 modules, and up to 32 of these drawers can be crammed into a rack, for a total of 16,384 cores per rack (32 drawers × 32 nodes × 16 cores). The I/O nodes go into an I/O drawer, which can hold up to eight I/O nodes based on the same Power A2 boards, as well as up to eight 10 Gigabit Ethernet or InfiniBand adapters for linking to the outside world. The BlueGene/Q design allows from 8 to 128 I/O nodes to be used per rack, with 16 being the default.
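The rack arithmetic above, and what the 8-to-128 range of I/O nodes means for each I/O node's workload, can be sketched in a few lines of Python. The function names are hypothetical, and the compute-nodes-per-I/O-node figure assumes the load is spread evenly, which IBM has not said.

```python
import math

# Figures from the article; the constant names are our own.
NODES_PER_DRAWER = 32      # single-socket Power A2 modules per compute drawer
DRAWERS_PER_RACK = 32      # compute drawers per rack (maximum)
CORES_PER_NODE = 16        # compute cores per Power A2 chip
IO_NODES_PER_DRAWER = 8    # maximum I/O nodes in one I/O drawer


def compute_nodes_per_rack() -> int:
    return NODES_PER_DRAWER * DRAWERS_PER_RACK


def cores_per_rack() -> int:
    return compute_nodes_per_rack() * CORES_PER_NODE


def io_drawers_needed(io_nodes: int) -> int:
    """I/O drawers required to house a given number of I/O nodes."""
    if not 8 <= io_nodes <= 128:
        raise ValueError("the article gives 8 to 128 I/O nodes per rack")
    return math.ceil(io_nodes / IO_NODES_PER_DRAWER)


def compute_nodes_per_io_node(io_nodes: int) -> float:
    """Compute nodes served per I/O node, ASSUMING an even split."""
    return compute_nodes_per_rack() / io_nodes


print(cores_per_rack())  # 16384
for io in (8, 16, 128):
    print(io, io_drawers_needed(io), compute_nodes_per_io_node(io))
```

At the default of 16 I/O nodes, two I/O drawers' worth of nodes would each front 64 of a rack's 1,024 compute nodes under that even-split assumption.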

The compute nodes run IBM's homegrown open source Linux kernel, with the front-end and service nodes running Red Hat's Enterprise Linux 6. The I/O nodes are also going to run RHEL 6.

The BlueGene/Q I/O node

Last fall, IBM said that the optical interconnect used in the BlueGene/Q machine would be a 5D torus/mesh, and that turns out to be true. I am pretty good at visualizing in 2D and 3D, but 5D gives me trouble. The idea is easier to state than to picture: each node gets a coordinate in five dimensions and links directly to its two nearest neighbors along each dimension, and in a torus the links at the edge of each dimension wrap around to the other side, which works out to ten links per node.
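For readers who, like your correspondent, find 5D hard to picture, here is a small Python sketch of torus wraparound links. It is not IBM code: the function names and the 4×4×4×4×4 machine shape are hypothetical, chosen only to illustrate the idea. It also computes the worst-case hop count, which shows why adding dimensions shortens the paths between far-apart nodes.

```python
# Hypothetical helpers (not IBM code) illustrating torus wraparound links.
Coord = tuple[int, ...]


def torus_neighbors(coord: Coord, dims: Coord) -> list[Coord]:
    """Return the distinct nearest neighbors of `coord` in a torus of extents `dims`.

    Each dimension contributes a +1 and a -1 neighbor, wrapping at the edges.
    In a dimension of extent 2 both directions reach the same node, so
    duplicates are dropped; a dimension of extent 1 adds no neighbor.
    """
    neighbors: list[Coord] = []
    for axis, extent in enumerate(dims):
        for step in (1, -1):
            cand = list(coord)
            cand[axis] = (coord[axis] + step) % extent
            n = tuple(cand)
            if n != coord and n not in neighbors:
                neighbors.append(n)
    return neighbors


def torus_diameter(dims: Coord) -> int:
    """Worst-case hop count between two nodes: at most extent // 2 hops per dimension."""
    return sum(extent // 2 for extent in dims)


dims_5d = (4, 4, 4, 4, 4)   # illustrative 1,024-node 5D torus
dims_3d = (16, 8, 8)        # the same 1,024 nodes arranged as a 3D torus
origin = (0, 0, 0, 0, 0)

print(len(torus_neighbors(origin, dims_5d)))             # 10 direct links
print(torus_neighbors(origin, dims_5d)[:2])              # [(1, 0, 0, 0, 0), (3, 0, 0, 0, 0)]
print(torus_diameter(dims_5d), torus_diameter(dims_3d))  # 10 16
```

The second printed neighbor, (3, 0, 0, 0, 0), is the wraparound link: stepping "down" from coordinate 0 lands on the far edge of that dimension.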

This 5D torus/mesh network, says Dave Turek, vice president of deep computing at IBM, has a bandwidth of 40GB/sec and is used for collective operations on the machine; it also carries global barrier/interrupt traffic. The machine has a Gigabit Ethernet network for booting individual nodes, debugging, and monitoring, and PCI-Express buses coming out of the I/O nodes to link to storage and the outside world.

Turek says that the Mira machine will essentially be half of the Sequoia BlueGene/Q super that is going into Lawrence Livermore National Laboratory – another DOE lab that took the very first BlueGene/L super – next year. Sequoia will weigh in at 20.13 petaflops of aggregate, raw number-crunching power. Mira will have over 750,000 cores and more than 750TB of main memory to reach its 10-petaflops performance.
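The Mira figures hang together with the per-rack and per-node numbers given earlier, as this Python sketch shows. The names are our own, memory is counted in decimal terabytes (an assumption), and because IBM only said "over 750,000 cores" and "more than 750TB", the results are approximate.

```python
import math

# Figures quoted in the article; names are our own.
CORES_PER_RACK = 16_384    # 32 drawers x 32 nodes x 16 cores
CORES_PER_NODE = 16
CLOCK_GHZ = 1.6
MIRA_CORES = 750_000       # "over 750,000 cores"
MIRA_MEMORY_TB = 750       # "more than 750TB" (decimal terabytes assumed)
MIRA_PEAK_PFLOPS = 10.0
SEQUOIA_PEAK_PFLOPS = 20.13

racks = math.ceil(MIRA_CORES / CORES_PER_RACK)         # fewest whole racks
nodes = MIRA_CORES // CORES_PER_NODE                   # one chip per node
gb_per_node = MIRA_MEMORY_TB * 1000 / nodes            # memory per node
gflops_per_core = MIRA_PEAK_PFLOPS * 1e6 / MIRA_CORES  # 1 PF = 1e6 GF
flops_per_clock = gflops_per_core / CLOCK_GHZ          # implied per-core rate

print(racks, nodes, gb_per_node)                       # 46 46875 16.0
print(round(gflops_per_core, 2), round(flops_per_clock, 2))  # 13.33 8.33
print(SEQUOIA_PEAK_PFLOPS / 2)                         # about 10 petaflops
```

Roughly 46 racks' worth of cores, with the 16GB-per-node configuration, lands on the 750TB figure, and half of Sequoia's 20.13 petaflops is indeed about Mira's 10 petaflops.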

IBM has never provided revenue and shipment figures for the BlueGene line of machines, but Turek said that IBM has sold dozens of large-scale machines over the years and hundreds of smaller boxes, adding up to many petaflops of aggregate performance. Turek did not divulge what the Sequoia and Mira machines were sold for, but did say that IBM's internal analysis shows that the BlueGene/Q machine will take about $100m in research and development to bring to market.

While IBM likes to make money, the BlueGene family of machines is more important to IBM as a means of hitting barriers to performance, scalability, and reliability and finding ways around them.

"BlueGene has taken us a step forward in systems design," Turek tells El Reg. "What I have observed is that if you encounter design problems at the peak of supercomputing, then somewhere between four and seven years later you have to deal with this issue in the commercial arena. BlueGene has been terrific at helping us deal with issues of reliability and scale. There has been and continues to be a lot of bantering about this in the industry, but my mantra is simple: you don't know anything until you build it and you have that empirical device in hand."

Argonne has 16 different research programs that are hot to trot to get access to the Mira box, which will be used for materials science, chemistry, nuclear physics, combustion, and energy research, including helping battery manufacturers design better products. Presumably the goal is to build a better battery before the Chinese do – and take over that industry, too. ®