
T-Platforms CPU-GPU hybrid hits 1.3 petaflops at Moscow State

Russian super maker invades Amerika

Moscow State University has moved into the upper echelons of the HPC field by upgrading its top-end supercomputer with hybrid CPU-GPU blade servers from indigenous supercomputer maker T-Platforms.

It comes as no surprise that MSU has bulked up the math skills of the supercomputer, which is named after 18th-century Russian polymath Mikhail Lomonosov, with Tesla GPU coprocessors from Nvidia. The Tesla coprocessors, which are built on the same 512-core "Fermi" GPUs used in Nvidia's graphics cards, are widely preferred over the FireStream alternatives from Advanced Micro Devices, thanks to the CUDA programming environment and ECC scrubbing on their GDDR5 memory. (AMD's FireStreams support OpenCL but lack ECC on their graphics memory.)

MSU is the primary customer for T-Platforms' innovative blade servers at this point, though that will change soon enough. The blades were designed to support the stripped-down Tesla X2070 and X2090 embedded versions of the GPU coprocessors. But rather than wait for the X2090s, which Cray will ship in the third quarter in its XK6 ceepie-geepie hybrid supers, MSU and T-Platforms are going with the X2070s, which have the virtue of being ready to install now.

Moscow State's Lomonosov supercomputer

The Tesla M2090 fanless and X2090 embedded GPU coprocessors have all 512 cores on the Fermi GPU activated and running at 1.3GHz, with memory running at 1.85GHz. That yields 665 gigaflops at double precision and 1.33 teraflops at single precision, with 178GB/sec of bandwidth on the GDDR5 memory. The M2070 and X2070, which started shipping last May, have only 448 of the 512 cores running, and those spin at only 1.15GHz.

Their GDDR5 memory runs at 1.56GHz and offers only 148GB/sec of bandwidth. With fewer active cores at a lower clock, the M2070 and X2070 GPU coprocessors are rated at only 515 gigaflops double precision and 1.03 teraflops single precision. All four GPUs carry 6GB of graphics memory and connect over PCI-Express 2.0.
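Those ratings follow from simple arithmetic on core counts and clocks. The sketch below reproduces them under two assumptions that are not stated in the article: each Fermi CUDA core retires one fused multiply-add (two flops) per clock in single precision, and these Tesla parts run double precision at half that rate.

```python
# Back-of-the-envelope peak throughput for the Fermi-based Tesla parts.
# ASSUMPTIONS (not from the article): one fused multiply-add (2 flops) per
# CUDA core per clock in single precision; double precision at half rate.

def fermi_peak_gflops(cores: int, clock_ghz: float) -> tuple[float, float]:
    """Return (single_precision, double_precision) peak in gigaflops."""
    single = cores * clock_ghz * 2.0  # one FMA = 2 flops per core per clock
    double = single / 2.0             # assumed half-rate double precision
    return single, double


TESLA_PARTS = {
    # name: (active CUDA cores, clock in GHz), as given in the text
    "M2090/X2090": (512, 1.30),
    "M2070/X2070": (448, 1.15),
}

if __name__ == "__main__":
    for name, (cores, clock) in TESLA_PARTS.items():
        sp, dp = fermi_peak_gflops(cores, clock)
        print(f"{name}: {sp:.1f} GF single, {dp:.1f} GF double")
```

This gives roughly 1,331 and 666 gigaflops for the 2090s and 1,030 and 515 gigaflops for the 2070s, matching the rounded figures above.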

The upgrade to the Lomonosov super uses a variant of the T-Blade blade server that T-Platforms debuted last September, which El Reg told you about at the time. It was T-Platforms that outed Nvidia for even making an X2070 embedded GPU, much as Cray outed the X2090 long before Nvidia was ready to ship it. Judging from the pictures available of the upgraded Lomonosov machine, T-Platforms appears to have tweaked the blade design a bit while keeping the feeds and speeds the same.

T-Platforms T-Blade 2 TL blade server

The T-Blade design tips the main memory modules on their sides on the memory boards, so they lie flat. This lets T-Platforms cram more blades into a chassis and press heat sinks right against the memory modules and other components as a single unit. The T-Blade 2 TL blade uses two of Intel's four-core, low-voltage Xeon L5630 processors, a step backward from the six-core Xeon 5670 processors used in Lomonosov before the upgrade.

Main memory per blade is 12GB, which is also half of what the blades had before the upgrade. With the GPUs doing the bulk of the computing, CPU cores and memory can apparently be cut back without hurting overall performance. Each blade has two X2070 GPU coprocessors and two ConnectX-2 hybrid InfiniBand/Ethernet adapters mounted on the blade motherboard, each of which has a 40Gb/sec InfiniBand (QDR, or quad data rate) port and a Gigabit Ethernet port.

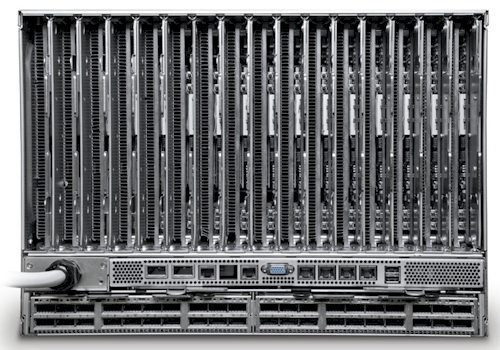

The hot end of the T-Blade 2 chassis with CPU-GPU blades installed

The T-Blade 2 enclosure packs 16 of these nodes and two 36-port QDR InfiniBand switches from Mellanox into a single 7U chassis, with heat sinks sandwiched tightly between the blades. The whole shebang sucks about 12 kilowatts and delivers 17.5 teraflops of peak double-precision performance.

By the way, the GPUs deliver about 16.5 teraflops of that oomph; the Xeons are there mostly to shepherd calculations to the GPUs. Last fall, Alexey Nechuyatov, director of product marketing at T-Platforms, said a fully loaded chassis would cost around $300,000.
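The chassis figures can be checked the same way. This sketch takes the blade counts and the roughly 515 gigaflops per X2070 from the text; the Xeon L5630's 2.13GHz clock and four double-precision flops per core per clock are assumptions supplied here, not figures from T-Platforms.

```python
# Rough peak for one fully loaded T-Blade 2 chassis of CPU-GPU blades.
# From the article: 16 blades per chassis, two X2070 GPUs and two
# quad-core Xeon L5630s per blade, roughly 515 gigaflops DP per X2070.
# ASSUMPTIONS (not from the article): the L5630 runs at 2.13GHz and each
# core retires 4 double-precision flops per clock via 128-bit SSE.

BLADES_PER_CHASSIS = 16
GPUS_PER_BLADE = 2
SOCKETS_PER_BLADE = 2
CORES_PER_SOCKET = 4

X2070_DP_GFLOPS = 515.2        # 448 cores x 1.15GHz x 2 flops / 2 (DP half rate)
L5630_CLOCK_GHZ = 2.13         # assumed base clock
DP_FLOPS_PER_CORE_CLOCK = 4    # assumed: one SSE add + one SSE multiply


def chassis_peak_tflops() -> tuple[float, float]:
    """Return (gpu_tflops, cpu_tflops) of peak DP for one chassis."""
    gpu = BLADES_PER_CHASSIS * GPUS_PER_BLADE * X2070_DP_GFLOPS / 1000
    cpu_cores = BLADES_PER_CHASSIS * SOCKETS_PER_BLADE * CORES_PER_SOCKET
    cpu = cpu_cores * L5630_CLOCK_GHZ * DP_FLOPS_PER_CORE_CLOCK / 1000
    return gpu, cpu


if __name__ == "__main__":
    gpu, cpu = chassis_peak_tflops()
    total = gpu + cpu
    print(f"GPUs {gpu:.2f} TF + CPUs {cpu:.2f} TF = {total:.2f} TF")
    print(f"GPU share of peak: {gpu / total:.0%}")
```

Under these assumptions the GPUs supply about 16.5 teraflops and the Xeons about 1.1, for a total close to the quoted 17.5 teraflops, with the GPUs accounting for roughly 94 percent of the peak.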

The newly upgraded Lomonosov has 49 of these 7U chassis lashed together, with a total of 777 blades: 48 full chassis plus a 49th holding only nine blades. (I am not sure why the last chassis is only partly filled, but there you have it.) That gives the system 1,554 X2070 GPUs and 6,216 Xeon cores, for a total of 850.5 teraflops of peak performance.

Add the 510 teraflops of peak number-crunching power in the 5,100 other blades, which use Xeon 5500 and 5600 processors and are based on the prior T-Blade 1.5 and T-Blade 2 XN generations, and with all these nodes interconnected you get 1.36 petaflops of aggregate oomph for climate modeling, drug design, industrial hydrodynamics, enzymology, turbulence modeling, and various chemical, physical, and biological simulations to frolic within.
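The system-wide totals also add up. The sketch below uses only the counts and peak figures quoted in the article; splitting the 777 blades into 48 full chassis plus one nine-blade chassis is the only inference.

```python
# Tally of the upgraded Lomonosov machine from figures quoted in the article.
FULL_CHASSIS = 48              # 49 chassis, the last one only partly filled
BLADES_PER_FULL_CHASSIS = 16
BLADES_IN_LAST_CHASSIS = 9
GPUS_PER_BLADE = 2             # two Tesla X2070s per T-Blade 2 TL
XEON_CORES_PER_BLADE = 8       # two quad-core Xeon L5630s

HYBRID_PEAK_TF = 850.5         # quoted peak of the new CPU-GPU blades
LEGACY_PEAK_TF = 510.0         # quoted peak of the ~5,100 older Xeon blades


def lomonosov_totals() -> dict[str, float]:
    """Return blade, GPU, and core counts plus aggregate peak in petaflops."""
    blades = FULL_CHASSIS * BLADES_PER_FULL_CHASSIS + BLADES_IN_LAST_CHASSIS
    return {
        "blades": blades,
        "gpus": blades * GPUS_PER_BLADE,
        "xeon_cores": blades * XEON_CORES_PER_BLADE,
        "aggregate_pf": (HYBRID_PEAK_TF + LEGACY_PEAK_TF) / 1000,
    }


if __name__ == "__main__":
    for key, value in lomonosov_totals().items():
        print(f"{key}: {value:g}")
```

That reproduces the 777 blades, 1,554 GPUs, and 6,216 Xeon cores, and an aggregate of about 1.36 petaflops.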

Moscow State isn't the only Russian facility that's looking at GPU coprocessors. The Keldysh Institute of Applied Mathematics has 192 Tesla C2050 GPUs doing simulations for atomic energy, aircraft design, and oil extraction. And Lobachevsky State University of Nizhni Novgorod (NNSU), Russia's first CUDA research center, is installing a cluster with 100 teraflops of GPU oomph this year and will push that to 500 teraflops by the end of 2012.

Invading Amerika

With such compute density, T-Platforms said last year that it thought it had a product it could sell to companies, universities, and laboratories in Western Europe and North America. To get a toehold in the HPC market in the United States, T-Platforms has inked a reseller agreement with AEON Computing, a supercomputer supplier based in San Diego, California. ®