
Cisco gooses switching, virtual I/O for blades

Servers not yet crossing Sandy Bridge

Cisco has rolled out upgrades to its "California" Unified Computing System blade servers, but its announcement could have been much more interesting.

The networking-cum-server-making giant is hosting its annual Cisco Live IT and communications event in Las Vegas this week, and in a perfect world this would also have been the week that Intel announced its "Sandy Bridge" Xeon E5 processors for two-socket and four-socket servers.

Barring that perfect outcome, Intel could have at least given Cisco – the upstart of the server racket – a chance to get a jump on the Xeon announcements by a few weeks, just like it did back in March 2009 with the debut of the UCS blade servers.

But, alas, we live in an imperfect world. And although Cisco had some upgrades to the integrated switching and virtual I/O of its UCS blade servers ready for the Vegas event, Intel is not expected to roll out its various "Romley" server platforms using Sandy Bridge-EP and -EN processors until later in the third quarter. We'll have to wait a little while longer to see what new B-Series blade and C-Series rack-mounted servers Cisco's engineers have wrought.

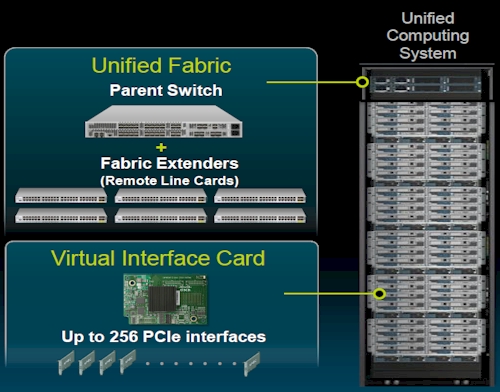

The first update of the UCS platform that Cisco rolls out on Wednesday is a new parent switch that implements the unified fabric that gives the machine its formal name. (No one would ever confuse California with being a unified fabric – and even less the United States of America, for that matter.)

Cisco is rolling out a new set of fabric extenders that hang off this parent switch – think of them as a kind of remote line card that extends the switch down into the server blades – as well as a new virtual interface mezzanine card that slides into each blade and allows the combined fabric to present a large number of virtualized Ethernet or Fibre Channel interfaces to either physical or virtual servers running on those blades.

The tiered fabric of Cisco's Unified Computing System

The 6248UP fabric interconnect is the heart of the UCS system, not only because it is the parent switch that lashes all of those blade servers – and external rack servers, if customers want to use these – together into a processing pool, but also because it runs the UCS Manager software glue that manages the whole shebang.

The original UCS 6120 parent 10 Gigabit Ethernet switch had 20 ports capable of running Ethernet or Fibre Channel over Ethernet (FCoE) protocols plus a number of add-on modules offering fixed numbers of uplinks to connect out to devices through 10GE or FC protocols. With the 6248UP fabric interconnect, Cisco is more than doubling the port count to 48, and making the ports universal so that each can be configured as a 10 Gigabit Ethernet port or as a virtual Fibre Channel port running over Ethernet at an effective 2, 4, or 8Gb/sec.

Cisco is also doubling the switching capacity of the unit to just under 1Tb/sec, and getting the latency of a point-to-point hop in the UCS system down to 2 microseconds – a 40 per cent reduction compared to the 6120 fabric interconnect.
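As a rough sanity check on those figures, the sketch below reproduces the arithmetic. It assumes that the "just under 1Tb/sec" figure counts all 48 ports at 10Gb/sec in both directions, and that the 40 per cent reduction is measured against the 6120's hop latency. Cisco has confirmed neither assumption here, and the function names are purely illustrative.

```python
def switching_capacity_gbps(ports: int, port_speed_gbps: float) -> float:
    """Aggregate capacity, counting each port in both directions (an assumption)."""
    return ports * port_speed_gbps * 2


def implied_previous_latency_us(new_latency_us: float, reduction: float) -> float:
    """Latency before a fractional reduction, e.g. 0.40 for 40 per cent."""
    return new_latency_us / (1.0 - reduction)


capacity = switching_capacity_gbps(ports=48, port_speed_gbps=10)
old_latency = implied_previous_latency_us(new_latency_us=2.0, reduction=0.40)

print(f"6248UP capacity: {capacity:.0f}Gb/sec")
print(f"Implied 6120 hop latency: about {old_latency:.1f} microseconds")
```

Under those assumptions the capacity works out to 960Gb/sec, which squares with "just under 1Tb/sec", and the older switch would have had a hop latency of roughly 3.3 microseconds.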

You might think that by doubling the bandwidth in the parent switch, Cisco is also doubling the scalability of the UCS system in terms of how many blades are under a single management domain. That may happen eventually – and quite possibly with new Xeon servers coming later this year – but then again, it may not.

Two years ago, the UCS system launched with a design spec that allows it to scale to 40 blade enclosures and a total of 320 half-width blade servers in a single management domain. But Todd Brannon, senior marketing manager for unified computing at Cisco, tells El Reg that at the moment, the UCS Manager software is capped at 160 blades in a single management domain, and that up until now, this has been sufficient for the UCS customer base.
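The scaling numbers here are easy to unpack. The sketch below assumes every enclosure is fully populated with half-width blades; the constant names are illustrative and do not come from any Cisco tool.

```python
DESIGN_ENCLOSURES = 40     # design spec, per the figures above
DESIGN_BLADES = 320        # half-width blades in the design spec
MANAGER_BLADE_CAP = 160    # current UCS Manager limit

# Blades per fully populated enclosure implied by the design spec.
blades_per_enclosure = DESIGN_BLADES // DESIGN_ENCLOSURES

# Number of full enclosures that fit under the current software cap.
enclosures_at_cap = MANAGER_BLADE_CAP // blades_per_enclosure

# Share of the design-spec scale that the cap allows.
cap_fraction = MANAGER_BLADE_CAP / DESIGN_BLADES

print(f"{blades_per_enclosure} blades per enclosure")
print(f"{enclosures_at_cap} enclosures at the cap ({cap_fraction:.0%} of the design spec)")
```

In other words, the design spec implies eight half-width blades per enclosure, and the current cap tops out at 20 full enclosures, or half of what the design allows.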

"It is not so much a technical limitation as a psychological one," explains Brannon. "Customers are hesitant to put all of their eggs in one basket, and after 160 servers, they tend to spawn a new pod."

It's all about clouds

What this doubled bandwidth and lowered latency is all about, of course, is boosting the throughput of the cloudy workloads – both on the servers and the storage – that are running on UCS iron. But it takes more than just a faster top-of-rack switch with pretensions to make such applications run faster, which is why Cisco is also goosing the UCS I/O modules and virtual interface cards.

The UCS 2208XP I/O module is the fabric extender that is used in conjunction with the new UCS parent switch. These are usually installed in pairs for redundancy, says Brannon, and they now offer 160Gb/sec of bandwidth out of the top-of-rack UCS fabric interconnect switch down into the UCS chassis where the blade servers live. That's twice the bandwidth of the fabric extenders they replace, and matches the doubled-up bandwidth of the parent switch.
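To put the fabric extender figure in per-blade terms, here is a back-of-the-envelope sketch. It assumes that the 160Gb/sec refers to a redundant pair of modules taken together, that a chassis holds eight half-width blades (320 blades across 40 enclosures in the design spec), and that bandwidth is shared evenly. All three are assumptions made for illustration, not Cisco statements.

```python
def per_blade_share_gbps(chassis_bandwidth_gbps: float, blades: int) -> float:
    """Even split of chassis bandwidth across blades (an idealised assumption)."""
    return chassis_bandwidth_gbps / blades


NEW_CHASSIS_GBPS = 160                        # 2208XP figure quoted above
PREVIOUS_CHASSIS_GBPS = NEW_CHASSIS_GBPS / 2  # "twice the bandwidth" of the predecessor
BLADES_PER_CHASSIS = 8                        # assumed: 320 blades / 40 enclosures

new_share = per_blade_share_gbps(NEW_CHASSIS_GBPS, BLADES_PER_CHASSIS)
old_share = per_blade_share_gbps(PREVIOUS_CHASSIS_GBPS, BLADES_PER_CHASSIS)

print(f"Previous extenders: {old_share:.0f}Gb/sec per blade")
print(f"2208XP extenders:   {new_share:.0f}Gb/sec per blade")
```

On those assumptions, a fully loaded chassis goes from 10Gb/sec to 20Gb/sec of fabric bandwidth per blade.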

As servers get more and more cores, they'll spawn more virtual machines per UCS blade or rack server, and that means more virtual Ethernet and Fibre Channel links back to the servers. With the VIC 1280 mezzanine card, a pair of ASICs on the card implements four 10GE lanes each, which yields a total of 80Gb/sec of bandwidth into and out of each blade. This new VIC can support up to 256 virtual interfaces per blade.

The first generation VIC for the UCS system had two ASICs, each able to support 10Gb/sec of bandwidth and 64 virtual interfaces. So the new VIC has twice the number of virtual connections and four times the bandwidth. These virtual interfaces can be deployed for bare-metal servers as well as for virtual machines running atop hypervisors.
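The comparison between the two cards can be checked with a few lines of arithmetic. This sketch takes the 64 virtual interfaces on the first-generation card to be a per-ASIC figure, which is the reading that makes the "twice the number" claim add up; the `Vic` class is a hypothetical helper, not part of any Cisco software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vic:
    name: str
    asics: int
    gbps_per_asic: int
    virtual_interfaces: int

    @property
    def bandwidth_gbps(self) -> int:
        """Total card bandwidth across all ASICs."""
        return self.asics * self.gbps_per_asic


# First generation: two ASICs, each with 10Gb/sec and 64 virtual interfaces.
first_gen = Vic("first-generation VIC", asics=2, gbps_per_asic=10,
                virtual_interfaces=2 * 64)

# VIC 1280: two ASICs, each with four 10GE lanes; 256 virtual interfaces in total.
vic_1280 = Vic("VIC 1280", asics=2, gbps_per_asic=4 * 10,
               virtual_interfaces=256)

bandwidth_ratio = vic_1280.bandwidth_gbps / first_gen.bandwidth_gbps
interface_ratio = vic_1280.virtual_interfaces / first_gen.virtual_interfaces

print(f"Bandwidth: {first_gen.bandwidth_gbps} -> {vic_1280.bandwidth_gbps}Gb/sec "
      f"({bandwidth_ratio:.0f}x)")
print(f"Virtual interfaces: {first_gen.virtual_interfaces} -> "
      f"{vic_1280.virtual_interfaces} ({interface_ratio:.0f}x)")
```

That gives 20Gb/sec and 128 virtual interfaces for the old card against 80Gb/sec and 256 for the new one, matching the fourfold and twofold improvements.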

All in all, the UCS blade box should be a more balanced system for the many-cored processors available now from Intel – and coming in the future.

Pricing for the new UCS fabric and I/O features was not available at press time. ®