Dell floats Cloud Hadoop clusters

Stuffed elephant rides PowerEdge iron

Dell might not own a lot of systems or middleware software a la IBM, Oracle, and Hewlett-Packard, but it wants to sell configured stacks tuned for specific work just like its rivals in the systems racket.

The company already peddles pre-configured Ubuntu/Eucalyptus and OpenStack systems for building and managing private clouds, and now, it's expanding out into big data munching with a pre-built Hadoop cluster based on the software stack from Cloudera.

Hadoop is a set of programs that mimic the functioning of Google's MapReduce data muncher and its related Google File System. Cloudera, which launched in March 2009, is the first of a handful of organizations providing commercial Hadoop distributions.

The company is a big contributor to the open source Apache Hadoop project, along with Yahoo!, where the project was originally bootstrapped. IBM, which could have partnered with Cloudera, chose instead to create its own Hadoop distribution, InfoSphere BigInsights, in May 2010. MapR kicked out its own commercially supported Hadoop in May of this year, and Hortonworks, a spinout directly from Yahoo!, joined the fray in June 2011.

Cloudera offers its own open source distro (the Cloudera Distribution for Hadoop, or CDH) and an enterprise version (Cloudera Enterprise) that has extra goodies in it for which it can charge money. These extra goodies are not open sourced.

For its Dell-Cloudera stack, Dell is using the Cloudera Enterprise CDH3 version, which can run atop Red Hat Enterprise Linux 5 and 6, its CentOS 5 clone, SUSE Linux Enterprise Server 11, and Ubuntu Server 10.04 LTS and 10.10. Dell is at the moment putting RHEL in the Dell-Cloudera reference architecture, but you are allowed to tweak this and still order a single stack preconfigured. CDH3 Update 1 was just released on July 22.

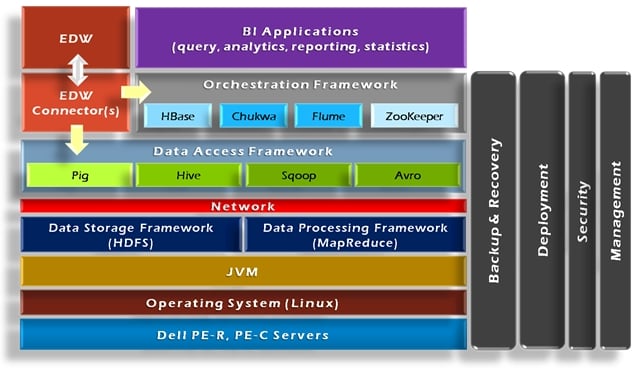

The Cloudera stack includes the core Apache Hadoop, which has the MapReduce and distributed file system, as well as the companion Apache Hive (SQL-like query), Pig (a high-level programming language for Hadoop), HBase (a column-oriented distributed data store modeled after Google's BigTable), and ZooKeeper (a configuration server for clusters). The stack also includes Cloudera's SCM Express, a management server for configuring Hadoop clusters.
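For readers who have not seen the MapReduce model up close, here is a minimal single-machine sketch of the idea in plain Python. It is not Hadoop code and uses no Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and a real cluster spreads these steps across many nodes and reads its input from the distributed file system.

```python
from collections import defaultdict


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group all emitted values by key, the job a framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: collapse all the values seen for one key into a single total."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle, and reduce in sequence on one machine (illustration only)."""
    pairs = []
    for document in documents:
        pairs.extend(map_phase(document))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data", "big iron"]))
```

The point of splitting the job this way is that the map and reduce steps are independent per record and per key, so a framework can farm them out to as many machines as it has on hand.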

"There are hundreds of settings for a Hadoop configuration, and this makes specific setting recommendations based on the hardware and software that customers are deploying on," Ed Albanese, head of business development at Cloudera, tells El Reg. The settings for deploying on a small Hadoop cluster can be radically different from those needed on a mid-sized or large cluster, and SCM Express makes it possible for companies to get the right settings without having to be Hadoop experts like Yahoo!

Dell is also tossing in its Crowbar tool, which it created for OpenStack-based private clouds running on its PowerEdge-C servers, which were announced last week. Crowbar works in concert with SCM Express to handle BIOS configuration, RAID array setup, network setup, and operating system deployment, and to manage the provisioning of Hadoop software on the bare metal iron from Dell.

John Igoe, executive director of cloud software solutions at Dell, says that for a typical company that is not all that familiar with Hadoop, it can take days to weeks to manually configure a Hadoop cluster with 20, 30, or 40 nodes. But with the combination of the Cloudera Enterprise software and Crowbar, companies can go from a bare-metal rack to running Hadoop data-crunching jobs in less than a day.

Speed to deployment is probably not as important as ease of use, however. "I think there is a substantial opportunity for Hadoop, but we are still in the product's infancy," says Igoe. "Current Hadoop customers have a very deep software bench. But other companies that want to use Hadoop don't have these skills, and they are looking for us to give them those skills."

Stacking it all up

Dell's reference architecture recommends that customers choose the company's PowerEdge C2100 energy-efficient rack servers, which are optimized to cram the most computing performance into the smallest space with the least amount of extraneous hardware. This is the same iron that Dell used for its Ubuntu Enterprise Cloud (UEC) pre-fabbed private clouds that came out in March, but is different from the PowerEdge C6100 cookie-sheet servers that are being used in the preconfigured OpenStack clouds launched last week.

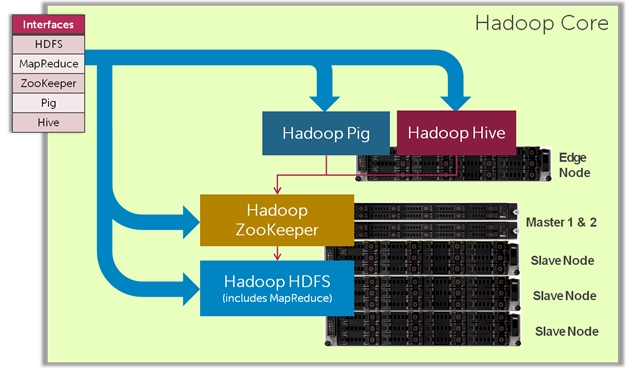

The Dell-Cloudera stack is built under the assumption that companies will start out small but grow fast. It consists of six PowerEdge C2100 server nodes and six (yes, six) PowerConnect 6248 48-port Gigabit Ethernet switches. There are two Hadoop master nodes (sometimes called name nodes) that manage the Hadoop Distributed File System (HDFS) and MapReduce task distribution across the cluster. These master nodes can also run ZooKeeper. The edge node, of which there is only one in the base configuration, runs Pig and Hive and is the interface between users and the cluster. The slave nodes, of which there are three, run MapReduce and HDFS; they store data on local disk drives and chew on it as instructed by the master nodes.
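To keep the roles straight, the base configuration described above can be jotted down as a small Python data structure. This is only a tally of what the article says; the dictionary layout and the names `BASE_CONFIG`, `total_nodes`, and `roles_running` are invented for illustration and are not part of any Dell or Cloudera tool.

```python
# Node roles in the base configuration, as described in the text.
# ZooKeeper is listed on the masters because they "can also" run it.
BASE_CONFIG = {
    "master": {"count": 2, "runs": ["HDFS management", "MapReduce task distribution", "ZooKeeper"]},
    "edge": {"count": 1, "runs": ["Pig", "Hive"]},
    "slave": {"count": 3, "runs": ["MapReduce", "HDFS"]},
}


def total_nodes(config):
    """Add up the server count across every role."""
    return sum(role["count"] for role in config.values())


def roles_running(config, service):
    """Return the names of the roles that list the given service."""
    return [name for name, role in config.items() if service in role["runs"]]


if __name__ == "__main__":
    print(total_nodes(BASE_CONFIG))           # two masters, one edge, three slaves
    print(roles_running(BASE_CONFIG, "Hive"))
```

The counts add up to the six C2100 nodes in the stack, which is a quick sanity check on the role breakdown.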