
Petaflops beater: Nvidia chief talks exascale

Programming for parallel processes

"Power is now the limiter of every computing platform, from cellphones to PCs and even data centres," said NVIDIA chief executive Jen-Hsun Huang, speaking at the company's GPU Technology Conference in Beijing last week. There was much talk there about the path to exascale: supercomputers capable of executing 10¹⁸ flop/s (floating point operations per second).

Currently, the world's fastest supercomputer, Japan's K computer, achieves 10 petaflops (one petaflop = a thousand trillion floating point operations per second), just 1 per cent of exascale. The K computer consumes 12.66MW (megawatts), and Huang suggests that a realistic limit for a supercomputer is 20MW, which is why achieving exascale is a matter of power efficiency as well as size. At the other end of the scale, power efficiency determines whether your smartphone or tablet will last the day without a recharge, making this a key issue for everyone.
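A rough worked calculation shows the size of the gap. It assumes, purely for illustration, that the K computer's 10 petaflops and 12.66MW could be scaled linearly, which real machines do not do exactly:

```latex
\begin{aligned}
\text{K computer} &\approx \frac{10\times10^{15}\ \text{flop/s}}{12.66\times10^{6}\ \text{W}} \approx 0.79\times10^{9}\ \text{flop/s per W} \\
\text{Exascale in 20MW} &= \frac{10^{18}\ \text{flop/s}}{20\times10^{6}\ \text{W}} = 50\times10^{9}\ \text{flop/s per W} \\
\text{Required gain} &\approx \frac{50}{0.79} \approx 63\times
\end{aligned}
```

On those assumptions, simply building a K computer 100 times larger would draw about 1.27GW, so reaching exascale within 20MW needs roughly a 63-fold improvement in flops per watt.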

Huang's thesis is that the CPU, which is optimised for single-threaded execution, will not deliver the required efficiency. "With four cores, in order to execute an operation, a floating point add or a floating point multiply, 50 times more energy is dedicated to the scheduling of that operation than the operation itself," he says.
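Taking Huang's figure at face value, only a small share of the energy goes into the arithmetic itself. Writing E_op for the energy of the add or multiply (a symbol introduced here for illustration, not one Huang used):

```latex
\frac{E_{\mathrm{op}}}{E_{\mathrm{op}} + 50\,E_{\mathrm{op}}} = \frac{1}{51} \approx 2\%
```

In other words, in his example roughly 98 per cent of the energy is overhead rather than useful work.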

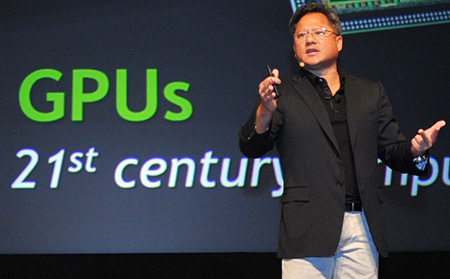

Power limits: NVIDIA chief executive Jen-Hsun Huang

"We believe the right approach is to use much more energy-efficient processors. Using much simpler processors and many of them, we can optimise for throughput. The unfortunate part is that this processor would no longer be good for single-threaded applications. By adding the two processors, the sequential code can run on the CPU, the parallel code can run on the GPU, and as a result you can get the benefit of the both. We call it heterogeneous computing."

He would say that. NVIDIA makes GPUs, after all. But the message is being heard in the supercomputing world, where 39 of the Top 500 systems now use GPUs, up from 17 a year ago, including the number 2 supercomputer, Tianhe-1A in China. Thirty-five of those 39 systems use NVIDIA GPUs.

At a mere 2.57 petaflops though, Tianhe-1A is well behind the K computer, which does not use GPUs. Does that undermine Huang's thesis? "If you were to design the K computer with heterogeneous architecture, it would be even more," he insists. "At the time the K computer was conceived, almost 10 years ago, heterogeneous was not very popular."

Using GPUs for purposes other than driving a display is only practical because of changes made to the architecture to support general-purpose programming. NVIDIA's platform for this is called CUDA, and is programmed using CUDA C/C++, which extends C and C++ with GPU-specific features. The latest CUDA compiler is based on LLVM, which makes it easier to add support for other languages. In addition, the company has just announced that it will release the compiler source code to researchers and tool vendors. "It's open source enough that anybody who would like to develop their target compiler can do it," says Huang.

Another strand to programming the GPU is OpenACC, a set of directives you can add to C, C++ or Fortran code that tell the compiler to generate parallel code that runs on the GPU when one is available. "We've made it almost trivial for people with legacy applications that have large parallel loops to use directives to get a huge speedup," claims Huang.

OpenACC is not yet implemented, though it is based on an existing product from the Portland Group called PGI Accelerator. Cray and CAPS also plan to support OpenACC in their compilers. Getting the full benefit from these compilers will require NVIDIA GPUs, though OpenACC is a standard that other vendors could implement. An alternative standard, OpenCL, is already supported by multiple GPU vendors, but it is lower level and therefore less productive than CUDA or OpenACC.