
Exascale by 2018: Crazy ... or possible?

It may take a few years longer, but not too many

HPC blog I recently saw some estimates that show we should hit exascale supercomputer performance by around 2018. That seems a bit ambitious – if not stunningly optimistic – and the search to get some perspective led me on an hours-long meander through supercomputing history, plus what I like to call “Fun With Spreadsheets.”

Right now the fastest super is Fujitsu’s K system, which pegs the Flop-O-Meter at a whopping 10.51 petaflops. Looking at my watch, I notice that we’re barely into 2012; this gives the industry another six years or so to attain 990 more petaflops worth of performance and bring us to the exascale promised land.

This implies an increase in performance of around 115 per cent per year over the next six years. Is this possible? Let’s take a trip in the way-back machine…
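For readers who would rather check the arithmetic than trust my spreadsheet, here is a minimal Python sketch of the compound-growth sum. The function name `required_annual_growth` is made up for illustration, and the six-year window is the rough figure from the paragraph above, not a precise deadline.

```python
def required_annual_growth(start, target, years):
    """Compound annual growth rate needed to get from start to target in `years` years."""
    return (target / start) ** (1.0 / years) - 1.0


K_PETAFLOPS = 10.51            # K system figure quoted in the article
EXAFLOP_IN_PETAFLOPS = 1000.0  # one exaflop expressed in petaflops
YEARS = 6                      # assumption: "another six years or so"

rate = required_annual_growth(K_PETAFLOPS, EXAFLOP_IN_PETAFLOPS, YEARS)
print(f"Required growth: {rate:.1%} per year")
```

With these inputs the result lands a shade under 115 per cent, which is close enough for "around".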

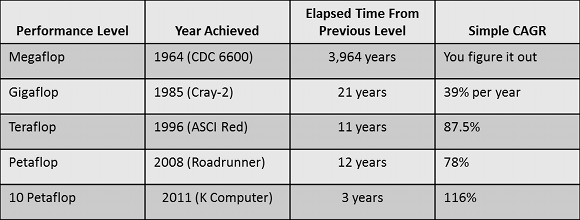

Here's a handy chart to show how long it took to move from one performance level to the next...

Just getting to megaflop performance took from the beginning of recorded history until 1964. If we start the clock with the Xia Dynasty at 2,000 BC, this means it took us 3,964 years to get from nothing to megaflops. This is a pretty meager rate of increase, probably somewhere around 0.17 per cent a year, but you have to factor in that everyone was busy fighting, exploring, coming up with new kinds of hats, and inventing the Morris Dance.

The first megaflop system, the Seymour Cray-designed Control Data CDC 6600, was delivered in 1964. It was a breakthrough in a number of ways: the first system to use newly-invented silicon-based transistors, a CPU design often described as a forerunner of RISC, and the first to use additional (but simpler) assist processors, called ‘peripheral processors,’ to handle I/O and feed tasks to the CPU. This was game-changing technology.

The transition from megaflop to gigaflop performance took only another 21 years, arriving with the Cray-2, which hit the market in 1985. Seymour Cray broke away from Control Data in 1972 to start his own shop, Cray Research Inc. The Cray-2 delivered 1.9 gflops peak performance by extensively using integrated circuits (early use of modular building blocks), multiple processors (four units), and innovative full-immersion liquid cooling to handle the massive heat load. In its time, it was also game-changing technology. The Cray-2 was also highly stylish, with a futuristic design complemented by blue, red, or yellow panels. Here’s a PDF of a brochure covering the Cray-2.

Fast-forward another 11 years and we see the first system to sustain teraflop performance, the Intel-based ASCI Red system, which was also a big break from past supercomputer designs. Installed at Sandia National Laboratories in 1996, it’s an example of what we’ve come to expect from modern supercomputers: 9,298 Intel Pentium Pro processors, a terabyte of RAM, and air cooling.

The compound annual performance growth rate (CAGR) for this move from gflop to tflop (another thousand-fold increase) is roughly 87.5 per cent per year, which won’t get us to exascale until midway through 2019 (just in time for the June Top500 list, I’d expect). Not too far off of the 2018 prediction, however.
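The projection step can be sketched the same way: work out the growth rate for a thousand-fold jump, then ask how many years that rate needs to cover the gap from K to an exaflop. This is a rough sketch, not my actual spreadsheet; the helper names are hypothetical, and starting the clock at the beginning of 2012 is an assumption.

```python
import math


def cagr(factor, years):
    """Compound annual growth rate for an overall `factor` increase over `years` years."""
    return factor ** (1.0 / years) - 1.0


def years_to_reach(start, target, annual_rate):
    """Years of compounding at `annual_rate` needed to grow from start to target."""
    return math.log(target / start) / math.log(1.0 + annual_rate)


giga_to_tera = cagr(1000.0, 11)  # Cray-2 (1985) to ASCI Red (1996)
years_needed = years_to_reach(10.51, 1000.0, giga_to_tera)  # K system to one exaflop, in petaflops

START_YEAR = 2012.0  # assumption: clock starts at the beginning of 2012
print(f"Giga-to-tera CAGR: {giga_to_tera:.1%}")
print(f"Exascale at that rate: about {START_YEAR + years_needed:.1f}")
```

Shift the start date by a few months either way and the answer still falls in 2019.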

Twelve years later, in 2008, the first petaflop system, IBM’s Roadrunner, debuted. Achieving another 1000-fold performance increase in 12 years is equivalent to a 78 per cent compound annual growth rate. This is way faster than Moore’s Law, which has an implied CAGR of around 60 per cent, but a little slower than the previous move from giga to teraflops. At this growth rate, we’ll reach exascale in 2020 – probably late in the year, but it might make the November 2020 Top500 list.
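The Moore’s Law comparison depends on which doubling period you pick, so here is a small sketch that makes the assumption explicit. The 18-month figure is the popular version of the law and is my assumption for where "around 60" comes from; the function names are illustrative only.

```python
def cagr_from_doubling(months_to_double):
    """Annual growth rate implied by doubling every `months_to_double` months."""
    return 2.0 ** (12.0 / months_to_double) - 1.0


def cagr(factor, years):
    """Compound annual growth rate for an overall `factor` increase over `years` years."""
    return factor ** (1.0 / years) - 1.0


moore_18 = cagr_from_doubling(18)  # assumption: doubling every 18 months
moore_24 = cagr_from_doubling(24)  # alternative reading: doubling every two years
tera_to_peta = cagr(1000.0, 12)    # ASCI Red (1996) to Roadrunner (2008)

print(f"Moore's Law, 18-month doubling: {moore_18:.0%}")
print(f"Moore's Law, 24-month doubling: {moore_24:.0%}")
print(f"Tera-to-peta CAGR: {tera_to_peta:.0%}")
```

Under either reading of Moore’s Law, the tera-to-peta growth rate comes out comfortably ahead.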

A mere three years after that, the K computer hit 10.51 pflops performance. The performance growth rate from Roadrunner to K? 116 per cent CAGR, which is almost exactly the growth rate necessary to deliver exascale by 2018.

Does this mean that we’ll see exascale systems in 2018 or even 2020? No, it doesn’t; it’s merely another data point in handicapping the race. This analysis simply looks at timelines; it ignores the problems inherent in housing, powering, and cooling a system that’s nearly 100x faster than the current top performer, a machine that sports more than 80,000 compute nodes and 700,000 processing cores, and uses enough power to run 12,000 households before they all get electric cars.

The technology challenges are mind-boggling, and it’s clear that simply applying ‘smaller but faster’ versions of today’s technology won’t get us over the exascale hump. It’s going to take some technology breakthroughs and new approaches. Even with these hurdles, I’m betting that we’ll see exascale performance before the end of 2020, putting us right in line with previous transitions.

But all bets are off if the Mayan prediction of global destruction in December of 2012 turns out to be true. In that case, I reserve the right to change my bet to the year 5976 – which is 2012 AD plus the 3,964 years it took us to get to megaflops. Seems like a safe enough hedge to me ... ®