Tiniest ever 128Gbit NAND flash chip flaunted

A little bit of TLC from SanDisk, Toshiba

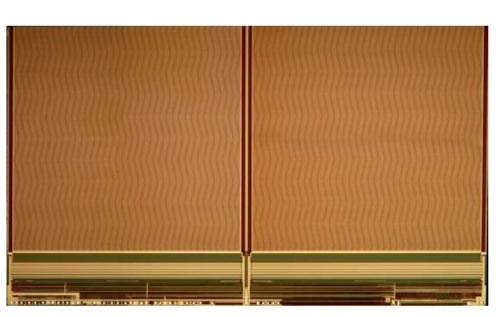

SanDisk and Toshiba have jointly developed the smallest 128Gbit NAND flash chip in the world by using a 3-bits-per-cell design, known as triple-level cell (TLC), and a 19nm process.

The thing, just 170.6mm² in area, is SanDisk's fifth generation of TLC technology. It uses something called All Bit-Line (ABL) programming and lots of technology tweaks described in a SanDisk white paper (PDF) to get a write speed of 18MB/sec and an interface speed of 400Mbit/s through a toggle mode interface.

SanDisk's fifth generation 128Gbit TLC chip die

For example, the white paper says:

In our first generation X3, we reported 8MB/sec write performance. Scaling to 19nm degrades the performance significantly. Process and cell structure changes, such as Air Gap recovers some of the degradation but that is not enough. In this design we have a) adopted a 16KB page size to double the performance capability, b) temperature compensation scheme for 10 per cent performance boost, and an enhanced 3-step programming to reduce FG-FG coupling by 95 per cent. A combination of these design features and process/cell structure changes allowed us to reach 18MB/sec on this advanced 19nm technology node.

The chip is in production already, with SanDisk saying products using it began shipping late in 2011 – although it doesn't say which products. A 64Gbit version of the chip compatible with the MicroSD format has been developed and a production ramp has started.

The company says that its 128Gbit TLC chip has enough performance to be able to replace 2-bit MLC chips in certain applications and hints pretty clearly at smartphones, tablets and SSDs.

How cost-effective this is going to be is open to question. On the face of it a 128GB SSD built with 128Gbit TLC chips should be significantly cheaper than one built with 2-bit chips, as you don't need so many chips.
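As a back-of-the-envelope check on the chip-count point, here is a minimal Python sketch. The 128Gbit TLC die size comes from the announcement; the 64Gbit figure for a 2-bit MLC die is purely an assumption for comparison, and the helper name `dies_needed` is hypothetical. It counts raw capacity only and ignores any spare flash a real drive would carry.

```python
import math


def dies_needed(drive_gbytes: int, die_gbits: int) -> int:
    """Return how many NAND dies are needed to reach a raw drive capacity.

    drive_gbytes: raw drive capacity in gigabytes
    die_gbits:    capacity of a single die in gigabits
    """
    drive_gbits = drive_gbytes * 8  # 8 bits per byte
    return math.ceil(drive_gbits / die_gbits)


# 128Gbit TLC die, as announced by SanDisk and Toshiba.
tlc_dies = dies_needed(128, 128)

# ASSUMPTION: a 64Gbit 2-bit MLC die, used here only for comparison.
mlc_dies = dies_needed(128, 64)

print(f"TLC dies: {tlc_dies}, MLC dies (assumed 64Gbit): {mlc_dies}")
```

Under that assumption, the TLC drive needs half as many dies, which is where the hoped-for saving comes from.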

But TLC has much less endurance – write cycles – than MLC; five times less or even worse. SanDisk doesn't say what the endurance is, which is a bad sign. We could readily imagine that there has to be so much over-provisioning of flash in a 3-bit product, compared to a 2-bit product, that the cost advantages are significantly eroded.
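To see how over-provisioning could eat into the saving, consider this rough Python sketch. Every number in it is an illustrative assumption, not a SanDisk figure: raw TLC is priced at two-thirds of raw MLC (the idealised gain from storing 3 bits per cell instead of 2), and over-provisioning is expressed as spare raw capacity as a fraction of usable capacity. The function names are hypothetical.

```python
def cost_per_usable_gb(raw_cost_per_gb: float, over_provisioning: float) -> float:
    """Cost of one usable GB when extra raw flash is held in reserve.

    over_provisioning: spare raw capacity as a fraction of usable capacity
                       (0.07 means 7 per cent extra flash).
    """
    return raw_cost_per_gb * (1.0 + over_provisioning)


def break_even_over_provisioning(tlc_raw: float, mlc_raw: float, mlc_op: float) -> float:
    """TLC over-provisioning at which TLC and MLC cost the same per usable GB."""
    return mlc_raw * (1.0 + mlc_op) / tlc_raw - 1.0


# ASSUMPTIONS: relative prices and over-provisioning levels are made up.
MLC_RAW = 1.0              # raw MLC cost per GB, normalised to 1
TLC_RAW = MLC_RAW * 2 / 3  # idealised: same cells, 1.5x the bits
MLC_OP = 0.07              # assumed 7 per cent spare flash on the MLC drive

mlc_cost = cost_per_usable_gb(MLC_RAW, MLC_OP)
tlc_cost = cost_per_usable_gb(TLC_RAW, 0.28)  # assumed 28 per cent spare flash
limit = break_even_over_provisioning(TLC_RAW, MLC_RAW, MLC_OP)

print(f"MLC cost per usable GB: {mlc_cost:.3f}")
print(f"TLC cost per usable GB: {tlc_cost:.3f}")
print(f"TLC break-even over-provisioning: {limit:.1%}")
```

With these made-up inputs TLC stays cheaper until its spare flash reaches roughly 60 per cent of usable capacity. Real die prices and endurance figures would move that break-even point, which is why SanDisk's silence on endurance matters.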

Until SanDisk and Toshiba announce actual products using their TLC chips, we can't know what the endurance figures are going to be, or what it will cost to turn a low endurance number into an acceptable one through over-provisioning and, perhaps, better controller technology that can get usable data out of TLC cells nearing the end of their life.

Anobit, the controller company acquired by Apple, says its signal processing-based technology can make TLC flash as long-lived and as reliable as MLC flash. If controller tech and over-provisioning can actually deliver acceptable and affordable endurance for TLC NAND-based SSDs and other enterprise flash drive formats, such as PCIe cards, then we're set to see a good bump up in flash product capacity over the next six to eighteen months as products hit the market.

SanDisk and Toshiba talked about their fantastically small NAND chippery at the International Solid State Circuits Conference (ISSCC) in San Francisco on 22 February. This follows on from OCZ demonstrating a TLC drive at CES 2012. Intel and Micron also have TLC technology and will probably introduce 20nm TLC chips later this year. Ditto Samsung. The flash market and its customers are going to get a lot of TLC in the next few months. ®