
IBM gets flexible with converged Power, x86 system

Cloudy server, storage, networking, and software mashup

The details are a bit sketchy, but IBM is launching its first fully converged systems since it bought itself some clever storage and networking companies a few years back.

The new product family is called PureSystems, and it is based on a new chassis and system architecture that was known as "Project Troy" inside Big Blue and referred to as "Flex Platform" or "Next Generation Platform" outside of the company.

What is clear is that IBM intends to be a contender in modern, modular, integrated systems that are capable of being the foundation of infrastructure and platform clouds.

According to sources at IBM, the company started working on the new system architecture in the second half of 2008, just as it was becoming obvious to the outside world that Cisco Systems was up to something in the server racket.

Converged systems, which weld servers, storage arrays, and networking gear into a seamless whole, are nothing new. Hewlett-Packard has been banging the converged systems drum for years, and Cisco Systems has been getting traction with its "California" Unified Computing System blade and rack servers since their launch three years ago.

Dell and HP have also been buying up networking and storage companies to create (in the case of Dell) or improve (in the case of HP) their own converged (and usually virtualized) systems. Oracle has been going on and on about its "engineered systems" and getting traction with its Exa line of integrated systems.

IBM was the touchstone for what a system was, with the advent of the System/360 mainframe in 1964 and the System/38 minicomputer in 1979. It was only a matter of time before Big Blue would enter the modern converged system fray – and return to its roots as a system maker and stop worrying about just one element of the machine: the processing element we call a server, thanks in large part to Sun Microsystems, the Unix wave, and the subsequent dot-com boom.

And for those of you who want to say IBM is late to the party, El Reg would point out that the converged systems shindig has only been going for a few minutes (relatively speaking) and the night is young. All you need do is remember how badly HP and the former Sun were kicking IBM's ass in the Unix market for a decade and a half to see how foolish it is to count Big Blue out in any part of the systems business.

IBM still derives the bulk of its prestige and profits from systems, if you account for it honestly. (IBM's books deliberately obfuscate all of this, of course.) It is equally foolish to count out HP and now Oracle, which have shown resilience and cleverness, or Cisco, which has done the same and has more than $40bn in cash, or Dell, which has a habit of hanging in there in a cut-throat IT market and appealing to millions of customers.

Pure as the driven snow

Of course, if you are talking about a system, you naturally want to talk about the server nodes first because these are the most important aspects. With the Project Troy machines, the new chassis that the server nodes slide into is called PureFlex, and the idea is that the chassis is more flexible than the current BladeCenter blade server chassis and allows for components to be packed more densely in the rack as well.

A PureSystem rack with a PureFlex chassis at the bottom

The Flex System chassis is 10U high and mounts in a standard rack; you can put four of these in the rack and still have a little space left over. The server nodes slide into the front of the chassis and mount horizontally, like rack servers do. There are 14 half-width node bays or 7 full-width node bays in the Flex System chassis, and you can slide in compute nodes or storage nodes.

One half-width bay is used to run dedicated management software that controls the compute and storage nodes and the networking blades that slide into the back of the chassis, where the power supplies and fans are also located. This is called the Flex System Manager. The chassis has four switch blades that mount vertically and six 2,500 watt power supplies. It has eight 80mm hot-swap fans plus four 40mm fans pulling air through the front of the chassis and pushing it out the back; the power supplies have their own fans as well.
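To make the bay and rack arithmetic concrete, here is a minimal Python sketch of how a Flex System chassis might be filled. The 10U height, the 14 half-width (or 7 full-width) bays, and the half-width bay taken by the Flex System Manager come from the figures above; the 42U rack height is an assumption (a common full-height rack, not an IBM figure), and `NodeMix`, `fits`, and the other names are hypothetical helpers for illustration only.

```python
from dataclasses import dataclass

# Figures quoted in the article for the Flex System chassis.
CHASSIS_HEIGHT_U = 10   # chassis height in rack units
HALF_WIDTH_BAYS = 14    # 14 half-width bays, or 7 full-width bays
MANAGER_BAYS = 1        # one half-width bay runs the Flex System Manager

# Assumption: a common 42U rack; the article says only "a standard rack".
RACK_UNITS = 42


@dataclass
class NodeMix:
    """Hypothetical description of the nodes slid into one chassis."""
    full_width: int = 0   # each full-width node spans two half-width bays
    half_width: int = 0


def bays_used(mix: NodeMix) -> int:
    """Half-width bays consumed by a mix of compute or storage nodes."""
    return 2 * mix.full_width + mix.half_width


def fits(mix: NodeMix) -> bool:
    """True if the mix fits alongside the Flex System Manager node."""
    return bays_used(mix) <= HALF_WIDTH_BAYS - MANAGER_BAYS


def chassis_per_rack(rack_units: int = RACK_UNITS) -> int:
    """How many 10U chassis stack into a rack of the given height."""
    return rack_units // CHASSIS_HEIGHT_U


def leftover_units(rack_units: int = RACK_UNITS) -> int:
    """Rack units left over after stacking as many chassis as fit."""
    return rack_units - chassis_per_rack(rack_units) * CHASSIS_HEIGHT_U


if __name__ == "__main__":
    print(chassis_per_rack(), "chassis per rack,", leftover_units(), "U spare")
    print(fits(NodeMix(full_width=6, half_width=1)))  # 13 bays: fits
    print(fits(NodeMix(full_width=7)))                # 14 bays: no room for manager
```

Under these assumptions, four chassis fill 40U of a 42U rack with 2U spare, which squares with the "little space left over". And if the management node does occupy one of the 14 half-width bays, a chassis tops out at six full-width nodes plus one half-width node (or 13 half-width nodes), so "7 full-width bays" is raw chassis capacity rather than what is left for workloads.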

Steve Sibley, director of Power Systems at IBM's Systems and Technology Group, gave El Reg a bit of a preview of the Flex System chassis and PureSystems family of machines ahead of the launch, which happens at 2 PM Eastern on Wednesday. IBM is supporting compute nodes running its Power7 processors, which made their debut in February 2010, as well as Intel's new Xeon E5-2600 processors, which were just launched in March after a six-ish month sorta-delay by Intel.

IBM is offering two-socket Xeon E5 nodes and two-socket and four-socket Power7 nodes as options in the chassis, according to Sibley. Presumably IBM will eventually have a four-socket Xeon blade as well – and very likely using the forthcoming Xeon E5-4600 processor from Intel. The feeds and speeds of all the processor nodes were not available at press time.

IBM is going to QLogic and Brocade Communications for Fibre Channel cards for the Flex System chassis to link compute nodes out to SANs and is using its own switches to provide 10 Gigabit Ethernet switching. I have heard that IBM will also support InfiniBand switching on the new machines, but it is not clear who IBM will partner with for this, or if it has created its own InfiniBand switches.

IBM will initially be supporting outboard Storwize V7000 arrays for local storage for the nodes, and will put out a statement of direction saying that it will create a variant of the Storwize V7000 that is modularized and that slides into the Flex chassis directly. In the graphic above, it looks like this integrated and modularized V7000 array is inside the Flex chassis.

The important thing is that the automated tiering of data for flash and disk drives that is built into the Storwize product will be a base feature of the Flex chassis. Each compute node will have local storage, either hard disk or SSD, as well, but this will be limited.

The Xeon-based compute nodes will be certified to run VMware's ESXi and Microsoft's Hyper-V hypervisors from the get-go, with support for KVM coming. I had heard rumors that only KVM was initially supported on these Xeon nodes, which is the exact opposite of what Sibley said.

On the Power nodes, IBM's PowerVM hypervisor and its Virtual I/O Server (VIOS), which abstracts and isolates virtualized I/O for all the nodes, form the only virtualization layer available. The Xeon nodes support Microsoft Windows Server, Red Hat Enterprise Linux, and SUSE Linux Enterprise Server. The Power nodes will support IBM's own AIX Unix and IBM i (formerly OS/400) operating systems – at either the 6.1 or 7.1 release levels in both cases – as well as the RHEL and SLES Linuxes.

The exact components of the Flex System Manager appliance software, which runs the whole shebang, will be interesting to suss out when the full details are finally available. What I can tell you is that the code that makes this an "expert integrated system," as far as IBM is concerned, is made up of Systems Director Management Console (including the VMControl virtual machine manager) and the BladeCenter chassis management tool, mashed up with some Tivoli stuff. IBM liked the graphical interface it created for the XIV clustered storage and Storwize V7000 arrays so much that it nicked it for the new management appliance.

That's the chassis and its basic components. What makes it a PureFlex raw infrastructure cloud is stacking them up in a rack, thus:

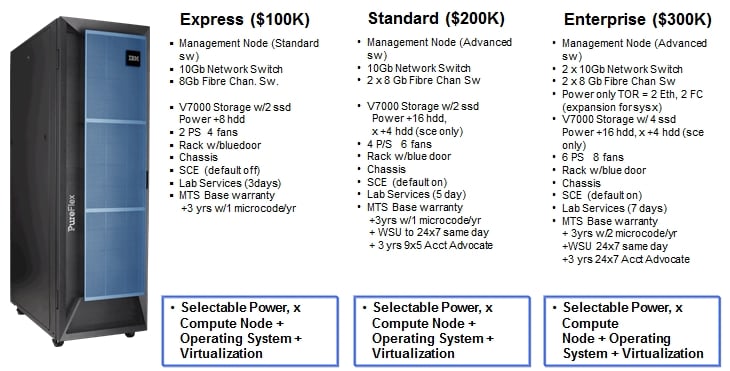

As you can see, the PureFlex systems are designed to essentially be the backbone of a private infrastructure cloud based on either Power or x86 processors – or both. The base configuration has a single Flex System chassis and a single V7000 array with eight disks and two SSDs. The chassis has the Flex System Manager management node, a 10 Gigabit Ethernet switch, an 8Gb Fibre Channel switch, two power supplies, and four fans. The package also includes the rack, three days of lab services (high-end installation tech support), and three years of warranty on everything in the rack (including the rack itself), all for $100,000.

That does not include the cost of the compute nodes, which Sibley says will cost around what IBM charges for blade servers in the BladeCenter chassis. The Power nodes will cost a little more than the PS7XX blades since they have more oomph and capacity, which is one of the reasons IBM created the Flex System in the first place.

The PureFlex Standard configuration doubles up the storage outside the Flex chassis and doubles up the Fibre Channel switches, power supplies, and fans inside the chassis plus adds more services – including the same code that IBM uses to run its SmartCloud Entry (SCE) public cloud and an "account advocate" for a cool $200,000.

Yup, we're back to the IBM System Engineer, and this time it is bundled into the price as it was more than four decades ago rather than coming as a separate invoice from the Global Services behemoth. It is not clear yet if that SCE cloud stack runs on both Power and x86 nodes, but it is clever of IBM to put a license to its stack on the private version of an infrastructure box.

The PureFlex Enterprise configuration takes that account advocate support up to 24x7 coverage (presumably there are three people, not one very tired person) as well as beefing up the networking and storage in the base chassis, all for $300,000. Again, the server nodes, the operating systems, and the virtualization hypervisors are not in these prices. And it is not clear what a full rack of hardware would cost.