
Peeling back the skins on IBM's Flex System iron

More Power – and x86 – to you

Analysis IBM announced the PureSystems converged systems last week, mashing up servers, storage, networking, and systems software into a ball of self-managing cloudiness. What the launch did not talk a lot about is the underlying Flex System hardware which is at the heart of the PureFlex and PureApplication machines.

So let's do that now.

First, let's take a look at the Flex System chassis, which is 10U high and a full rack deep. About two-thirds of its depth in the front of the chassis is for the server and storage nodes and the back one-third of the space is for fans, power supplies, and switching. The compute and storage are separated from the switching, power, and cooling by a midplane, which everything links to in order to lash the components together. In this regard, the Flex System is just like a BladeCenter blade server chassis. But this time around, the layout of the machinery is better for real-world workloads and the peripheral expansion they require.

The 10U chassis has a total of 14 bays of node capacity, which are a non-standard height of 2.5 inches compared to a standard 1.75-inch (1U) server. The key thing is that this height on a horizontally oriented compute node is roughly twice the width of a single-width BladeCenter blade server. That means you can put fatter heat sinks, taller memory, and generally larger components into the Flex System compute node than you could get onto the BladeCenter blade server. To be fair, the BladeCenter blade was quite a bit taller, at 9U in height, but you couldn't really make use of that height in a way that was constructive. As the world has figured out in the past decade, it is much easier to make a server that is half as wide as a traditional rack than it is to make one that is almost as wide and half as thick. And it is much easier to cool the fatter, half-width node. That is why Cisco Systems, Hewlett-Packard, Dell, and others build their super-dense servers in this manner.

And while the iDataPlex machines from IBM were clever in that they had normal heights, were half as deep, and were modular, like the Flex System design, the iDataPlex racks were not standard and therefore did not lay out like other gear in the data center. (Instead of one rack with 42 servers in a 42U rack, you had 84 servers in a half-deep rack with two racks side-by-side.) This creates problems with hot and cold aisles, among other things. The PureFlex System rack is a normal 42U rack with some tweaks to help it play nicely with the Flex System chassis.

Here is the front view of the Flex System chassis, loaded up with a mix of half-wide and full-wide server nodes:

IBM's Flex System chassis, front view

The chassis has room for 14 half-wide, single-bay server nodes or seven full-wide, two-bay server nodes. You will eventually be able to put four-bay server nodes and four-bay storage nodes inside the box, with the nodes plugging into the midplane, or you can continue to use external Storwize V7000 NAS arrays if you like that better. While a single PureFlex System can span four racks of machines and up to 16 chassis in a single management domain, you need to leave at least one slot in one of those racks dedicated to the Flex System Manager appliance, which does higher-level management of servers, storage, and networking across those racks.
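The bay arithmetic above is easy to check with a few lines of Python. This is only a back-of-the-envelope sketch: the constants come from the figures quoted in this article, while the function names are made up for illustration, and it assumes the Flex System Manager appliance eats exactly one single-wide bay in one chassis and that a full-wide node can sit in any two free bays.

```python
# Figures quoted in the article.
RACK_UNITS = 42          # standard rack height, in U
CHASSIS_UNITS = 10       # Flex System chassis height, in U
BAYS_PER_CHASSIS = 14    # half-wide bays per chassis
MAX_RACKS = 4            # racks in one management domain
MAX_CHASSIS = 16         # chassis in one management domain

# Assumption (not confirmed by the article): the management appliance
# occupies a single half-wide bay in exactly one chassis.
FSM_BAYS = 1


def chassis_per_rack() -> int:
    """Whole chassis that fit in one rack by height alone."""
    return RACK_UNITS // CHASSIS_UNITS


def max_nodes(bays_per_node: int, chassis: int = MAX_CHASSIS,
              reserved_bays: int = FSM_BAYS) -> int:
    """Upper bound on nodes of a given width across a management domain.

    Nodes cannot span chassis, so the count is taken chassis by chassis;
    the reserved bays are charged to the first chassis only.
    """
    total = 0
    for index in range(chassis):
        free = BAYS_PER_CHASSIS - (reserved_bays if index == 0 else 0)
        total += free // bays_per_node
    return total


if __name__ == "__main__":
    print("chassis per rack:", chassis_per_rack())
    print("half-wide nodes: ", max_nodes(1))
    print("full-wide nodes: ", max_nodes(2))
```

Four 10U chassis per 42U rack across four racks lines up with the 16-chassis limit, which suggests the management domain is sized to exactly four full racks.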

Take a look at the back of the Flex System chassis now:

IBM's Flex System chassis, rear view

The idea is to add server and storage nodes in the front from the bottom up and to add power and cooling modules from the bottom up as well. You can have up to six 2,500-watt power supplies and up to eight 80mm fan cooling units for the compute and storage nodes. There are no fans on the nodes at all – just these fans, which pull air in through the front of the chassis, which sits in the cool aisle in the data center, and dump it into the hot aisle. There are four separate 40mm fans for cooling switch and chassis management modules (CMMs), which slide into the back of the chassis.

The CMMs are akin to the service processors on rack servers or the blade management module in a BladeCenter chassis; they take care of the local iron and report up to the Flex System Manager appliance server running inside the rack (or multiple racks). You can add two CMMs for redundancy, and you can cluster the management appliances for redundancy, too. You can have as many as four I/O modules that slide into the back of the chassis vertically, between the fans, including Ethernet and Fibre Channel switches as well as Ethernet, Fibre Channel, and InfiniBand pass-thru switches. (A pass-thru switch is what you use when you want to link the server nodes to a switch at the top of the rack and not do the switching internally in the chassis. It is basically a glorified female-to-female port connector with a big price.)

IBM is using its own Gigabit and 10 GE switches (thanks to the acquisition of Blade Network Technologies) and Fibre Channel switches from Brocade and QLogic and adapters from Emulex and QLogic. It looks like IBM has made its own 14-port InfiniBand switch, which runs at 40Gb/sec (quad data rate or QDR) speeds and is based on silicon from Mellanox Technologies, as well as adapters from Mellanox for the server nodes. Here are the mezz card options: two-port QDR InfiniBand, four-port Gigabit Ethernet, four-port 10 Gigabit Ethernet, and two-port 8Gb Fibre Channel. You can also run Fibre Channel over the 10 GE mezz card.

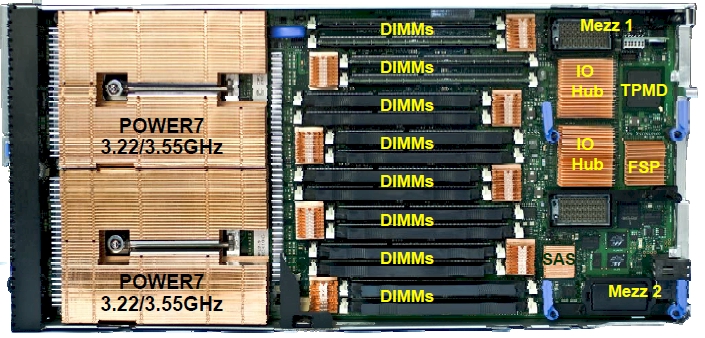

For whatever reason, IBM did not put out a separate announcement letter for the Flex System p260 server node, which is a single-bay, two-socket Power7 server. Here's the glam shot of the p260 node from above:

You can see the two Power7 processor sockets on the left, the main memory in the middle, and the I/O mezzanine cards and power connectors that hook into the midplane on the right. IBM is supporting a four-core Power7 chip running at 3.3GHz or an eight-core chip running at either 3.2GHz or 3.55GHz in the machine. Each processor socket has eight memory slots, for a total of 16 across the two sockets – and maxing out at 256GB using 16GB DDR3 memory sticks.

The cover on the server node has room for two drive bays (that's clever, instead of eating up front space in the node and blocking airflow). You can have two local drives in the node: either two 2.5-inch SAS drives with 300GB, 600GB, or 900GB capacities, or two 1.8-inch solid state drives with 177GB capacity. These local drives slide into brackets on the server node lid and tuck into the low spot above the main memory when the lid closes. The lid has a plug that mates with the SAS port on the motherboard.

One important thing: If you put the local 2.5-inch hard disk drives in, you are limited to very-low-profile DDR3 memory sticks in 4GB or 8GB capacities. If you put in the 1.8-inch SSDs, you have a little bit more clearance and can use the 2GB or 16GB memory sticks, which come only in the taller low-profile form factor. So to get the max capacity in the node, you need to use no disks or use SSDs locally.
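To see what that trade-off costs in gigabytes, here is a small Python sketch of the rule. The slot counts and stick sizes are the ones quoted above; the function names and the "hdd", "ssd", and "none" labels are invented for illustration. It assumes the shorter very-low-profile sticks also fit when SSDs or no drives are installed, and that the four-socket p460 carries the same eight slots per socket as the p260.

```python
SLOTS_PER_SOCKET = 8
SOCKETS = {"p260": 2, "p460": 4}   # p460 treated as two p260s side by side

VLP_SIZES_GB = (4, 8)    # very-low-profile sticks, fit under 2.5-inch disks
LP_SIZES_GB = (2, 16)    # taller low-profile sticks, need the extra clearance


def allowed_dimm_sizes(local_storage: str) -> list[int]:
    """DIMM capacities (GB) that clear the lid for a given drive choice."""
    if local_storage == "hdd":
        return sorted(VLP_SIZES_GB)
    if local_storage in ("ssd", "none"):
        # Assumption: the shorter VLP sticks fit wherever the LP ones do.
        return sorted(VLP_SIZES_GB + LP_SIZES_GB)
    raise ValueError(f"unknown local storage option: {local_storage!r}")


def max_memory_gb(node: str, local_storage: str) -> int:
    """Largest memory footprint with every slot filled by the biggest stick."""
    slots = SOCKETS[node] * SLOTS_PER_SOCKET
    return slots * max(allowed_dimm_sizes(local_storage))


if __name__ == "__main__":
    for node in SOCKETS:
        for storage in ("hdd", "ssd", "none"):
            print(node, storage, max_memory_gb(node, storage), "GB")
```

Under those assumptions, choosing spinning disks caps a p260 at half the memory it could otherwise hold, which is worth knowing before ordering.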

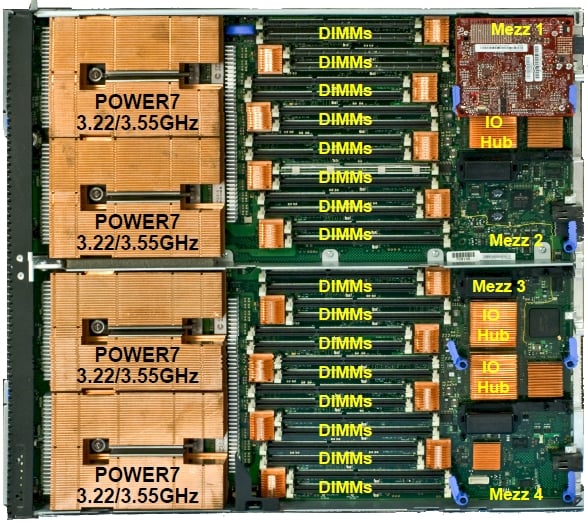

The Flex System p460 is essentially two of these p260 nodes put side-by-side on a double-wide tray and linked with an IBM Power7 SMP chipset. (It is not entirely clear where IBM hides this chipset, but it is possible that the Power7 architecture supports glueless connections across four processor sockets.) In any event, you get four sockets with the same Power7 processor options, with twice the memory and twice the mezzanine I/O slots because you have twice the processing.

I am hunting down information to see what the pricing is on these nodes and what their IBM i software tier will be. But generally speaking, Steve Sibley, director of Power Systems servers, says that the performance of the p260 and p460 nodes will fall somewhere between the PS 7XX blade servers and the Power 730 and 740 servers and the bang for the buck will be somewhere in between there as well. The PS 7XX blades were relatively attractively priced, of course, overcompensating maybe just a little bit for the lack of expansion on the blades and the extra cost of the blade chassis and integrated switching.