
Revealed: Inside super-soaraway Pinterest's virtual data centre

How to manage a cloud with 410TB of cupcake pictures

It's every startup's dream: to be growing faster than Facebook without having to build a Facebook-sized server farm.

Pinterest is an online picture pinboard for organising your favourite snaps and sharing them. It was founded by Ben Silbermann, Paul Sciarra, and Evan Sharp in March 2010, and it's growing like crazy with just 12 employees. It raised $74.5m in three rounds of funding in the past year, yet one thing Pinterest isn't doing is buying warehouses full of servers.

Speaking at the AWS Summit in New York earlier this month, Ryan Park, operations and infrastructure leader at Pinterest, gave a sneak peek into the Pinterest data centre, which runs on the AWS cloud.

According to ComScore data cited by Park in his presentation, Pinterest had 17.8 million monthly unique visitors as February came to a close. According to ComScore, it took the Tumblr blog-hosting service 30 months to break through 17 million uniques; Twitter took 22 months; Facebook took 16 months; YouTube (now part of Google) took 12 months; but Pinterest only took nine months after opening up its service in May 2011. And that is with an invite-only beta programme.

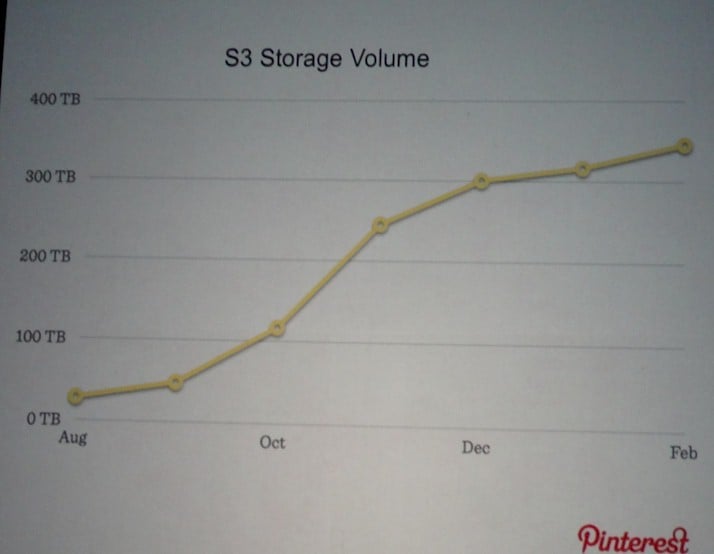

Among other things, the Pinterest pinboard uses Amazon's S3 object storage to hold the photos and videos that its millions of users have uploaded. Between August last year and February this year, Pinterest grew its S3 storage by a factor of 10, and its server capacity on the EC2 compute cloud rose by nearly a factor of three, from about 75,000 instance-hours to around 220,000, according to Park.

"Imagine if we were running our own data centre, and we had to go through a process of capacity planning, and ordering hardware, and racking that hardware, and so on," said Park in his keynote at AWS Summit.

"It just would not have been possible to scale fast enough, especially with such a small team. Until about a month ago, I was the only operations engineer at the whole company."

Park walked through the basic architecture of the Pinterest application and the virtualised iron underneath it, and then explained how the company's use of autoscaling and different kinds of compute instances on AWS has evolved over time.

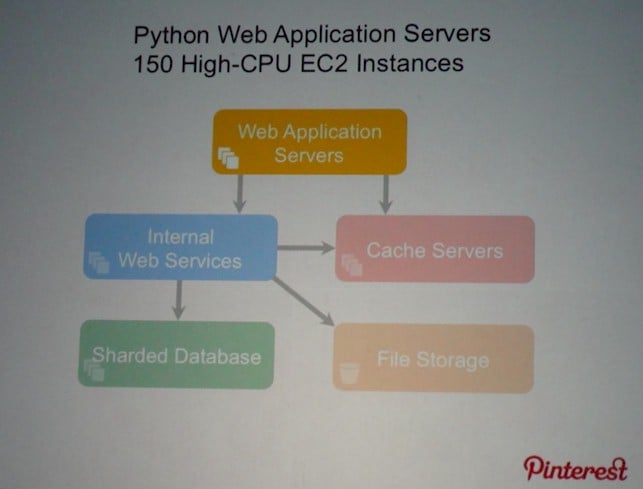

The Pinterest application stack has five basic pieces:

There are 150 high-core EC2 instances that run the Python web application servers that power Pinterest, which uses the Django framework for its web app. Traffic is balanced across these 150 instances by Amazon's Elastic Load Balancer service. Park says that ELB has a "great API" that lets Pinterest programmatically add capacity to the Python-Django cluster, and also take virtual machines offline if they are misbehaving or need to be tweaked.
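To give a flavour of what "programmatically add capacity" and "take virtual machines offline" look like against ELB, here is a minimal sketch, not Pinterest's code. It assumes the caller passes in a boto3 Classic ELB client (for example `boto3.client("elb")`); the helper names, the load balancer name and the simple "pull anything OutOfService" policy are all hypothetical. The three client calls it makes are real Classic ELB operations.

```python
from typing import Iterable, List


def _as_instances(instance_ids: Iterable[str]) -> List[dict]:
    # The Classic ELB API takes instances as a list of {"InstanceId": ...} dicts.
    return [{"InstanceId": i} for i in instance_ids]


def add_capacity(elb, lb_name: str, instance_ids: Iterable[str]) -> None:
    """Put freshly launched app servers into rotation behind the load balancer."""
    elb.register_instances_with_load_balancer(
        LoadBalancerName=lb_name, Instances=_as_instances(instance_ids)
    )


def out_of_service(elb, lb_name: str) -> List[str]:
    """IDs of instances the ELB health check currently reports as OutOfService."""
    health = elb.describe_instance_health(LoadBalancerName=lb_name)
    return [
        s["InstanceId"] for s in health["InstanceStates"] if s["State"] == "OutOfService"
    ]


def pull_unhealthy(elb, lb_name: str) -> List[str]:
    """Take misbehaving servers out of rotation so they can be fixed or replaced.

    Hypothetical policy: anything failing its health check is deregistered.
    A real tool would likely wait out instances that are still booting.
    """
    bad = out_of_service(elb, lb_name)
    if bad:
        elb.deregister_instances_from_load_balancer(
            LoadBalancerName=lb_name, Instances=_as_instances(bad)
        )
    return bad
```

Passing the client in, rather than creating it inside each helper, also makes the functions easy to exercise against a fake client without touching AWS.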

The Pinterest data centre on the AWS cloud also has 35 other EC2 instances running various other web services that are part of the pinboard site, and it also has another 90 high-memory EC2 instances that are used for memcached and Redis key-value stores for hot data, thereby lightening the load on the backend database.
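The usual way a memcached or Redis tier lightens the load on a database is the cache-aside pattern: reads check the in-memory store first and only fall through to MySQL on a miss. The sketch below illustrates that generic pattern; the article does not say exactly how Pinterest wires its caches. A plain dict stands in for memcached/Redis, and the class name, the `load_from_db` callback and the five-minute TTL are hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class CacheAside:
    """Read-through-on-miss cache in front of a slower backing store."""

    def __init__(self, load_from_db: Callable[[str], Optional[dict]], ttl_seconds: float = 300.0):
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, dict]] = {}  # key -> (expiry time, value)
        self.db_reads = 0  # how many requests actually reached the database

    def get(self, key: str) -> Optional[dict]:
        now = time.monotonic()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # hot data served without touching the database
        value = self._load(key)  # miss or expired entry: go to the database
        self.db_reads += 1
        if value is not None:
            self._store[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        # Call after a write so the next read repopulates from the database.
        self._store.pop(key, None)
```

In production the dict would be replaced by a networked client such as redis-py or a memcached library, but the control flow (check cache, fall back, populate, invalidate on write) stays the same.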

There are another 60 EC2 instances running various Pinterest auxiliary services, including logging, data analysis, operational tools, application development, search, and other tasks. For data analysis, Pinterest is using the Elastic MapReduce Hadoop cluster service from Amazon. This costs a few hundred dollars a month, which is cheaper than having two engineers babysit a real Hadoop cluster, explained Park.

"And better than that, we are also able to experiment with new services like this, very easily and with very low risk. There's no big sales process or big up-front costs when we are trying something out. And so we can try experiments and see what works and what doesn't."

The genius of the setup is that you find what doesn't work and move on, and when you find something that does work, you can scale up capacity to support it quickly.

The Pinterest setup has a MySQL database cluster that runs on 70 master nodes, each on a standard EC2 instance, plus another 70 slave database instances in different AWS availability zones for redundancy.

This database is sharded into thousands of pieces, with each shard holding users' account information along with their pins and boards. Each shard has thousands of users, and the site never runs queries that span shards. When the sharded architecture for the MySQL backend was launched last November, Pinterest had eight master-slave pairs. It has split three times since then, and there are 64 pairs right now, plus another 6 running other databases relating to the site but not to the pinboards and user accounts.
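The article doesn't spell out Pinterest's shard-mapping scheme, but the numbers fit a common design: a fixed number of logical shards spread over a smaller number of physical master-slave pairs, with each split doubling the pair count. The sketch below assumes exactly that. The 4,096-shard figure, the `user_id % N` mapping and the round-robin placement are hypothetical choices made for illustration.

```python
from typing import Dict

NUM_LOGICAL_SHARDS = 4096  # hypothetical; fixed forever so a user's shard never changes


def logical_shard(user_id: int) -> int:
    # All of one user's account, boards and pins live in a single logical shard,
    # so no query ever needs to touch two shards.
    return user_id % NUM_LOGICAL_SHARDS


def pair_for_shard(shard: int, num_pairs: int) -> int:
    # Logical shards are dealt round-robin across the physical master-slave pairs.
    # num_pairs is assumed to be a power of two that divides NUM_LOGICAL_SHARDS.
    return shard % num_pairs


def split_moves(num_pairs: int) -> Dict[int, int]:
    """Shards that must be copied when the pair count doubles.

    Pair p keeps the shards where shard % (2 * num_pairs) == p and hands the rest
    to the new pair p + num_pairs. Returns {logical_shard: new_pair}.
    """
    moves = {}
    for shard in range(NUM_LOGICAL_SHARDS):
        old = pair_for_shard(shard, num_pairs)
        new = pair_for_shard(shard, num_pairs * 2)
        if new != old:
            moves[shard] = new
    return moves
```

Under these assumptions each split copies exactly half the data, with each existing pair seeding one new pair, and starting from eight pairs three such doublings give 16, 32 and then 64 pairs, matching the split history described above.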

The S3 file storage currently has 8 billion objects in it, which weigh in at 410TB.
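As a back-of-the-envelope check, and assuming decimal terabytes ($10^{12}$ bytes), those figures put the average stored object at roughly 51KB (about 56KB if the terabytes are binary):

$$
\bar{s} = \frac{410 \times 10^{12}\ \text{bytes}}{8 \times 10^{9}\ \text{objects}} \approx 5.1 \times 10^{4}\ \text{bytes per object} \approx 51\ \text{KB}
$$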

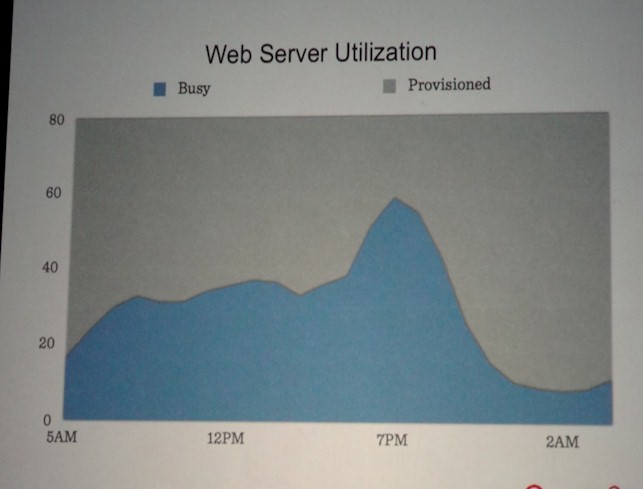

Park's slides were presumably put together a month or so ahead of the AWS Summit, when the company had only 80 web application servers, so the following data is based on those machines rather than the 150 it had as of the end of April.

Initially, like any other data centre manager, Pinterest went out and provisioned its web server farm to meet peak load and then added 25 per cent or so of headroom on top of that for crazy spikes:

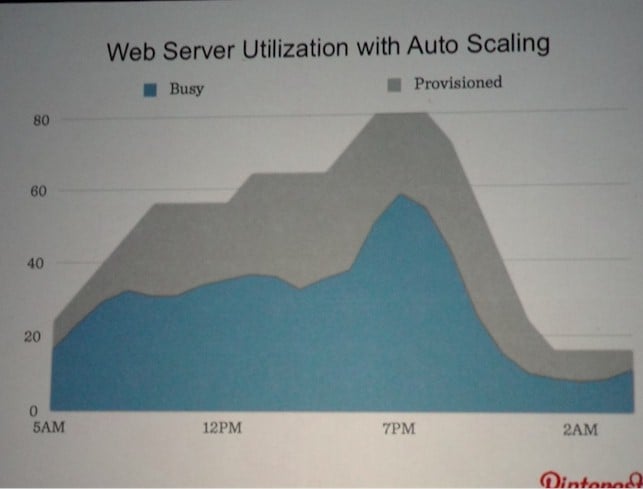

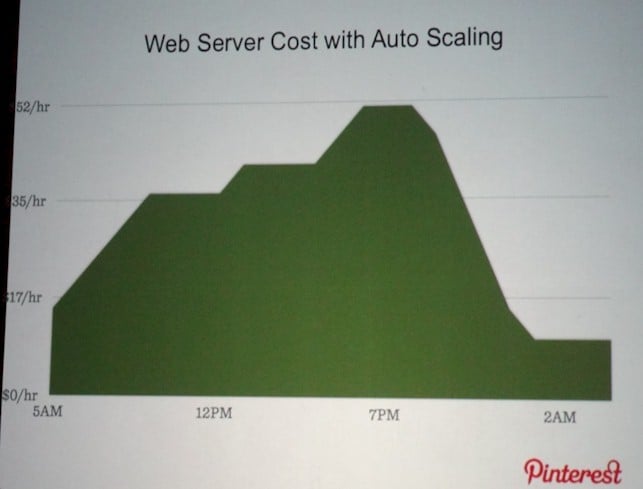

Pinterest is still largely an American Midwest phenomenon (that's changing, of course), and so at night the EC2 instances provisioned to run those Python application servers were just spinning their clocks, doing nothing useful except giving Amazon money. So Pinterest turned on the autoscaling feature of EC2, allowing AWS to automatically dial instances up and down with some headroom built in:

The average reduction in web server instances from autoscaling was 40 per cent over the course of a day, and because EC2 is billed by the instance-hour, that cuts the cost of the web server farm by about 40 per cent too.
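To see where a saving like that comes from, compare a fleet sized once for the daily peak (plus the 25 per cent headroom mentioned above) with one resized every hour. This is a simplified model, not how EC2 Auto Scaling actually makes decisions: the per-instance capacity, the hourly load curve and the two-instance floor are hypothetical inputs, and a flat price per instance-hour is assumed so that instance-hours saved equal money saved.

```python
import math
from typing import List

HEADROOM = 0.25  # the "25 per cent or so" spare capacity described above


def instances_needed(load: float, per_instance: float, headroom: float = HEADROOM) -> int:
    # Enough instances to carry the load plus headroom, rounded up to whole machines.
    return math.ceil(load * (1 + headroom) / per_instance)


def static_fleet(hourly_load: List[float], per_instance: float) -> List[int]:
    # Provision once for the daily peak and leave it running around the clock.
    peak = instances_needed(max(hourly_load), per_instance)
    return [peak] * len(hourly_load)


def autoscaled_fleet(hourly_load: List[float], per_instance: float, minimum: int = 2) -> List[int]:
    # Resize every hour, never dropping below a floor of instances.
    return [max(minimum, instances_needed(load, per_instance)) for load in hourly_load]


def reduction(static: List[int], scaled: List[int]) -> float:
    # Fraction of instance-hours saved; at a flat hourly price this is also the cost saving.
    return 1 - sum(scaled) / sum(static)
```

For example, with a hypothetical capacity of 100 requests a second per instance and three hours of load at 200, 400 and 800 requests a second, the static fleet runs 10 instances every hour while the autoscaled one runs 3, 5 and 10. The deeper and longer the overnight trough, the bigger the saving.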

Here's how the costs break down over the course of a day:

At the peak, Pinterest is spending $52 per hour to support its web farm, and late at night, when the site is quiet, it is spending around $15 an hour, said Park.

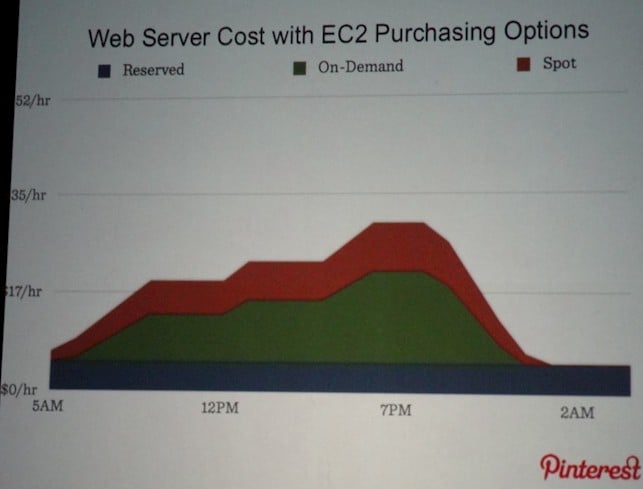

To push the costs down even further, Pinterest has figured out how to use a mix of reserved, on demand, and spot EC2 capacity for the web farm:

Basically, the baseline capacity needed to support users hitting the site in the wee hours of the American time zones is reserved up front, which has a lower per-unit cost. Then the expected capacity for the daytime workload is acquired with normal on-demand instances, which you pay for by the hour. Peaks are paid for with spot EC2 instances, which generally cost less than on-demand instances.
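The tiering rule described above can be written down in a few lines. This is a sketch under stated assumptions, not Pinterest's planner: the hourly instance counts, the `expected_day` level and the per-hour prices are hypothetical, and real EC2 prices vary by instance type, region and (for spot) by the minute.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Prices:
    # Hypothetical effective $ per instance-hour for each purchasing option.
    reserved: float
    on_demand: float
    spot: float


def split_tiers(hourly_need: List[int], expected_day: int) -> List[Dict[str, int]]:
    """Split each hour's instance count into reserved, on-demand and spot slices."""
    baseline = min(hourly_need)  # overnight floor: bought as reserved capacity up front
    plan = []
    for need in hourly_need:
        # Normal daytime load above the floor, up to the expected level.
        on_demand = max(0, min(need, expected_day) - baseline)
        # Anything above the expected level is a peak, covered by spot.
        spot = max(0, need - max(baseline, expected_day))
        plan.append({"reserved": baseline, "on_demand": on_demand, "spot": spot})
    return plan


def hourly_cost(tiers: Dict[str, int], p: Prices) -> float:
    return tiers["reserved"] * p.reserved + tiers["on_demand"] * p.on_demand + tiers["spot"] * p.spot
```

Because the reserved slice is the daily minimum, it is busy every hour, which is what makes paying for it up front worthwhile. The catch with the spot slice is that Amazon can reclaim those instances, which is why some form of capacity watchdog is needed.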

Pinterest has created a watchdog service that works with Elastic Load Balancer to make sure it is never more than a few EC2 instances shy of safe capacity for reserved and on-demand instances. The upshot is that its peak web server costs are under $35 per hour, down from $52 per hour, and costs are lower at every point along the daily curve.
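Park didn't detail the watchdog's internals, so the following is only a guess at its core decision, written as a pure function. It assumes the service periodically counts healthy instances behind the load balancer, broken down by purchase type, and tops up with on-demand capacity when the fleet drifts more than a few instances below target. The function name, the counting-by-type input and the three-instance tolerance are all hypothetical.

```python
from typing import Mapping


def instances_to_launch(target: int, in_service: Mapping[str, int], max_shortfall: int = 3) -> int:
    """How many on-demand instances to start right now.

    in_service counts healthy instances behind the load balancer by purchase type,
    e.g. {"reserved": 20, "on_demand": 30, "spot": 10}. max_shortfall is the
    "few instances shy" tolerance; its value here is hypothetical.
    """
    live = sum(in_service.values())
    shortfall = target - live
    # Small gaps are tolerated (pending launches or spot requests may fill them);
    # beyond the tolerance, close the whole gap with on-demand capacity.
    return shortfall if shortfall > max_shortfall else 0
```

Run in a loop, for instance once a minute with counts taken from the load balancer's instance-health data, this keeps a sudden loss of spot instances from leaving the site badly under-provisioned at peak.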

Makes you want to get a case of beer and sit around with your buddies and form a startup, don't it? ®