This supercomputing board can be yours for $99. Here's how

Adapteva's parallel dash for community cash

Feature Adapteva, an upstart RISC processor and co-processor designer that has tried to break into the big-time with its Epiphany chips for the past several years, is sick and tired of the old way of trying to get design wins to fund its future development.

So it has started up a community project called Parallella that seeks to get users to pay for development directly through crowdfunding via Kickstarter.

"We're going to build a community around parallel computing," Andreas Olofsson, CEO and co-founder of Adapteva, tells El Reg. "It will be kind of like the Raspberry Pi, but with real performance."

He is quick to add that he has nothing against Raspberry Pi, but rather that a hybrid architecture, marrying ARM processors and Epiphany massively parallel RISC coprocessors, is the way to go.

A community of enthusiasts monkeying around with hardware might be able to sustain the development of current and future Epiphany chips if the Kickstarter plan pans out. The initial target price to get a board is $99 compared to the $35 for a Raspberry Pi board, which is a little high but not unreasonably so.

Vibrant communities sprang up around Beagle boards and Arduino kits, too, so there is some precedent for this. Perhaps more significantly, the Parallella community approach is as reasonable and sensible as begging for money from venture capitalists and trying to go up against Intel with its Xeon and Xeon Phi coprocessors or AMD with its FirePro GPU coprocessors or Nvidia with its Tesla GPU coprocessors. And it is less demeaning, too. Provided it actually raises the necessary funds.

Risc-y business

Adapteva was founded in February 2008, and as Olofsson, who designed digital signal processors at Analog Devices for a decade, jokingly explained to El Reg, "I got the RISC memo from 1980 and I paid attention."

The idea with RISC was to have chips with relatively simple instructions and to do complex things by combining operations in quick succession; the theory was that a simple RISC chip could get more work done than a CISC processor, and there are not enough pixels in the world to settle that argument in this story.

Suffice it to say that Olofsson and his compatriots at Adapteva – Roman Trogan, director of hardware development, Oleg Raikhman, director of software development, and Yaniv Sapir, director of application development, who all hail from Analog Devices and worked on the TigerSHARC DSPs – believe that for parallel computing to take off, devices have to be simple, cheap, and accessible. And so they designed the Epiphany line of processors to work as coprocessors as well, which makes them more accessible and therefore more useful.

Adapteva's Epiphany-IV chip

But the problem that Adapteva is chasing is much larger than providing cheap parallel computing for hobbyists. The company wants to be at the forefront of exascale computing, and to do so by providing the cheapest and most energy-efficient floating point operations on the planet.

"We have been out there for four years now, and we see that the pickup for parallel processing is too slow," says Olofsson. "There are too many gatekeepers, and too many people can't afford the $10,000 startup fee for a reference board to run tests and do development."

The Parallella Kickstarter funding program is about changing that, with users being given an older generation Epiphany board if they help fund the development of the future ones.

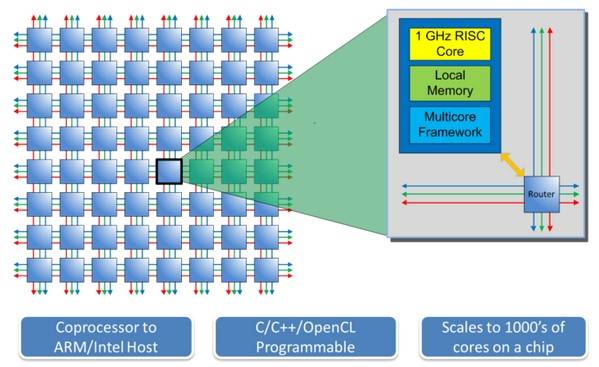

Before we get into that program, we need to talk about the Epiphany chips. They have their own instruction set, although Olofsson says he was inspired by MIPS and ARM RISC processors as well as the DSPs that he and his co-founders know so well. And like the massively multicore processors from Tilera, the idea behind the Adapteva chips is to take hordes of very modest RISC processors and lash them together with an on-chip interconnect.

Olofsson took this approach not because he loves minimalist core designs out of a three-decade-old textbook, but because this is the only approach that will fit into the thermal envelope that will limit exascale-class systems.

Adapteva sees the parallel computing challenge as its opportunity

Like most people in the processor and coprocessor chip rackets, Adapteva thinks the future of computing is both parallel and heterogeneous. To be even more specific, the company believes that you need a clean slate approach on the coprocessors because this is the only way to get the coprocessors, which will do most of the heavy lifting on compute, to be much more efficient than the usual suspect processors we are used to on our desktops, inside our handhelds, and in our data centers.

The Epiphany core has a mere 35 instructions – yup, that is RISC alright – and the current Epiphany-IV has a dual-issue core with 64 registers and delivers 50 gigaflops per watt. It has one arithmetic logic unit (ALU) and one floating point unit and a 32KB static RAM on the other side of those registers.
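To put that efficiency figure next to the exascale ambition, here is a rough back-of-envelope sketch. It assumes the quoted 50 gigaflops per watt is a peak figure that holds at any scale, and it counts only the coprocessors themselves, leaving out host processors, memory, interconnect, and cooling. The function name and constant are ours, for illustration only.

```python
# Back-of-envelope only: assumes the 50 gigaflops-per-watt figure quoted
# above scales linearly and ignores everything except the coprocessors.

EXAFLOPS = 1e18  # one exaflops, in floating point operations per second


def power_watts(target_flops: float, gflops_per_watt: float) -> float:
    """Return the watts needed to sustain target_flops at a given efficiency."""
    if gflops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return target_flops / (gflops_per_watt * 1e9)


if __name__ == "__main__":
    megawatts = power_watts(EXAFLOPS, 50.0) / 1e6
    print(f"{megawatts:.1f} MW of coprocessors for one exaflops")
```

Under those assumptions the sum comes to 20 megawatts of coprocessor power for an exaflops of peak floating point math, which is why flops per watt, rather than raw clock speed, is the number that matters for this class of machine.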

Each core also has a router with four ports, and the design can be extended out to a 64x64 array of cores for a total of 4,096 cores. The currently shipping Epiphany-III chip is implemented in a 65 nanometer process and sports 16 cores, and the Epiphany-IV is implemented in a 28 nanometer process and offers 64 cores.

Block diagram of the Epiphany chip

The secret sauce in the Epiphany design is the memory architecture, which allows any core to access the SRAM of any other core on the die. This SRAM is mapped as a single address space across the cores, greatly simplifying memory management. Each core has a direct memory access (DMA) unit that can prefetch data from external flash memory.

The initial design didn't even have main memory or external peripherals, if you can believe it, and used an LVDS I/O port with 8GB/sec of bandwidth to move data on and off the chip from processors. The 32-bit address space is broken into 4,096 1MB chunks, one potentially for each core that could in theory be crammed onto a single die if process shrinking continues.
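The arithmetic behind that split is easy to check: 4,096 chunks of 1MB each is exactly 4GB, which is what 32 bits can address. As a minimal sketch, assume the top 12 bits of an address pick the core, as six bits of row and six bits of column in the 64x64 array, and the bottom 20 bits are an offset into that core's chunk. The article does not spell out the bit layout, so treat the field order and the function names below as illustrative assumptions rather than Adapteva's documented scheme.

```python
from typing import Tuple

# Assumed layout, not confirmed by the article:
#   [ 6 bits row | 6 bits column | 20 bits offset within the core's 1MB chunk ]
ROW_BITS = 6      # 64 rows
COL_BITS = 6      # 64 columns
OFFSET_BITS = 20  # 2**20 bytes = 1MB per core


def global_address(row: int, col: int, offset: int) -> int:
    """Pack core coordinates and a local offset into one 32-bit address."""
    if not (0 <= row < (1 << ROW_BITS) and 0 <= col < (1 << COL_BITS)):
        raise ValueError("core coordinates fall outside the 64x64 array")
    if not 0 <= offset < (1 << OFFSET_BITS):
        raise ValueError("offset falls outside the 1MB chunk")
    return (row << (COL_BITS + OFFSET_BITS)) | (col << OFFSET_BITS) | offset


def split_address(addr: int) -> Tuple[int, int, int]:
    """Unpack a 32-bit address into (row, col, offset)."""
    if not 0 <= addr < (1 << 32):
        raise ValueError("address does not fit in 32 bits")
    offset = addr & ((1 << OFFSET_BITS) - 1)
    col = (addr >> OFFSET_BITS) & ((1 << COL_BITS) - 1)
    row = addr >> (COL_BITS + OFFSET_BITS)
    return row, col, offset


if __name__ == "__main__":
    addr = global_address(row=32, col=8, offset=0x100)
    print(hex(addr), split_address(addr))
```

With a layout like this, a core reaching into a neighbour's SRAM needs nothing more exotic than an ordinary load or store to an address whose upper bits name the other core, which is the simplification in memory management the single address space is meant to buy.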