
Mellanox etches software-defined networking onto SwitchX-2 chips

Working on OpenFlow controller for fabric manager

Networking chip and switch maker Mellanox Technologies is ramping up its efforts to play in the software-defined networking (SDN) world. The company will create its own OpenFlow controller and work to ensure that its switches work well with controllers from other vendors. The company is also rolling out a new switch ASIC that has been tweaked to support SDN features.

Mellanox is a longtime InfiniBand switch supplier, and Gilad Shainer, vice president of market development, is having a bit of a flashback as OpenFlow-capable switches and routers take off in the market. These devices separate the data plane in the switch from the control plane, allowing the routing and switching tables in the devices to be controlled by an out-of-band management server. Shainer has seen all this before with InfiniBand, which was designed from the very beginning to have such capabilities.
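To make the control-plane/data-plane split concrete, here is a toy Python model, loosely based on OpenFlow's reactive mode, in which a table miss is handed to the controller. It is a sketch under stated assumptions, not the OpenFlow wire protocol or any Mellanox software: the `Switch` and `Controller` classes are hypothetical, and real OpenFlow flow entries match on many packet-header fields, whereas this sketch keys only on a destination name.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Switch:
    """Hypothetical data plane: forwards by table lookup, holds no routing logic."""
    name: str
    # destination -> output port; entries are written only by the controller
    flow_table: Dict[str, int] = field(default_factory=dict)
    # out-of-band hook to the controller, consulted on a table miss
    on_miss: Optional[Callable[[Switch, str], Optional[int]]] = None

    def forward(self, dst: str) -> Optional[int]:
        port = self.flow_table.get(dst)
        if port is None and self.on_miss is not None:
            # Table miss: punt the decision to the control plane.
            port = self.on_miss(self, dst)
        return port


class Controller:
    """Hypothetical control plane: knows the routes and programs switch tables."""

    def __init__(self, routes: Dict[str, Dict[str, int]]):
        # routes[switch_name][destination] = output port
        self.routes = routes

    def attach(self, switch: Switch) -> None:
        switch.on_miss = self.handle_miss

    def handle_miss(self, switch: Switch, dst: str) -> Optional[int]:
        port = self.routes.get(switch.name, {}).get(dst)
        if port is not None:
            # Install the entry so later packets are forwarded without asking.
            switch.flow_table[dst] = port
        return port


ctrl = Controller({"leaf1": {"serverB": 7}})
sw = Switch("leaf1")
ctrl.attach(sw)
first = sw.forward("serverB")   # miss: the controller answers and installs the entry
second = sw.forward("serverB")  # hit: served from the switch's own table
```

The design point is that `Switch` never computes a route; all forwarding knowledge arrives from `Controller`, which is the separation OpenFlow and InfiniBand's Subnet Management both rely on.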

In addition, Mellanox is well used to Ethernet stealing all of the good ideas in InfiniBand. In fact, Mellanox has spent the last several years converging its networking ASICs and switches so they can support either InfiniBand or Ethernet protocols, so adding an OpenFlow control freak for its switches is just the next thing on the to-do list.

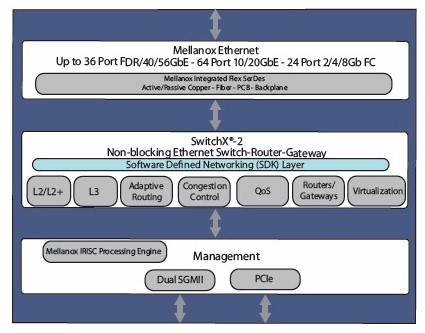

But before that happens, Mellanox is tweaking its ASIC, adding features that make the SwitchX-2 better able to support SDN and to cope with traffic congestion than the earlier SwitchX chips, which launched in April 2011 and shipped in products shortly thereafter.

With the SwitchX-2, the basic feeds and speeds are unchanged from its predecessor: 4Tb/sec of aggregate switching bandwidth, which is two to three times what Intel, Broadcom, Cisco, or HP can deliver in a single chip.

The SwitchX-2 can deliver InfiniBand speeds up to 56Gb/sec (so-called Fourteen Data Rate, or FDR) and can – with a special golden screwdriver upgrade – also pump up Ethernet ports to run at that speed.

Depending on the protocol and the workload, port-to-port latencies are still in the range of 170 to 220 nanoseconds – and that, Shainer tells El Reg, is the latency when the switch is loaded up and all the ports are working, not some bogus number with only a few ports turned on.

The SwitchX and SwitchX-2 ASICs also have a gateway function, which allows traffic coming in on one port in one protocol to be converted on the fly to the other protocol going out on another port.

SwitchX-2 networking chip architecture

The SwitchX-2 chip measures 45 millimeters on each side, and the version of the chip that drives 64 ports of 10 Gigabit Ethernet has a typical power consumption of 40 watts. Push it up to 36 ports running at 40GE or 56GE speeds and the chip works a little harder, and hotter, burning 55 watts.
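A quick back-of-envelope check ties the port counts and power figures above to the 4Tb/sec aggregate number. This is a sketch, not a Mellanox calculation: it assumes the aggregate figure counts traffic in both directions of each full-duplex port (a common vendor convention), and the helper function names are hypothetical.

```python
def aggregate_gbps(ports: int, gbps_per_port: float, full_duplex: bool = True) -> float:
    """Aggregate switching bandwidth; doubles for full duplex (assumed convention)."""
    return ports * gbps_per_port * (2 if full_duplex else 1)


def milliwatts_per_gbps(watts: float, ports: int, gbps_per_port: float) -> float:
    """Chip power divided by one-way port capacity, in milliwatts per Gb/s."""
    return watts * 1000 / (ports * gbps_per_port)


# (label, ports, Gb/s per port, typical watts), figures taken from the article
configs = [
    ("64 x 10GE", 64, 10, 40),
    ("36 x 40GE", 36, 40, 55),
    ("36 x 56Gb/s", 36, 56, 55),
]

for label, ports, speed, watts in configs:
    print(f"{label}: {aggregate_gbps(ports, speed):.0f} Gb/s aggregate, "
          f"{watts / ports:.3f} W per port, "
          f"{milliwatts_per_gbps(watts, ports, speed):.1f} mW per Gb/s")
```

The 36-port, 56Gb/sec configuration works out to 36 × 56 × 2 = 4,032Gb/sec, in line with the quoted 4Tb/sec. The per-port power rises from 0.625 watts to about 1.5 watts in the faster configuration, but power per unit of bandwidth falls from 62.5 to roughly 27 milliwatts per Gb/sec.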

The SwitchX-2 chip can also drive Fibre Channel ports if you want to make a Fibre Channel storage switch out of it, or allocate some of the ports on a switch to FC links out to storage area networks. It can drive up to 24 FC ports running at 2Gb/sec, 4Gb/sec, or 8Gb/sec speeds.

The new ASIC supports Fibre Channel over Ethernet (FCoE) if you want to converge server and storage traffic over one physical network, and also sports data center bridging (DCB) to make sure bits don't get lost in transit between servers and storage.

The SwitchX-2 chip already supports Subnet Management, which is how InfiniBand allows external control of switching and routing tables, much as OpenFlow does for Ethernet. Now support for the OpenFlow protocol is being added to the chip so it can be fluent in either Subnet Management or OpenFlow.

The SwitchX-2 chip also includes better adaptive routing and congestion management for Layer 2 and Layer 3 of the network. It has also been tweaked to drive copper cables 25 per cent longer than the prior ASIC could, which helps cut costs: according to Shainer, the fiber optic cables required for longer links between adapters and switches can cost five to six times as much as copper cables.

Importantly, the SwitchX-2 chip also sports routable remote direct memory access (RDMA) for both InfiniBand and Ethernet. With RDMA, a network card can reach out through the switch directly into the main memory of an adjacent server on the network and grab data, thereby avoiding the TCP stack and the latencies it imposes.

On Ethernet controllers and switches, RDMA is encapsulated and runs on top of Ethernet; this is called RDMA over Converged Ethernet, or RoCE for short. The problem with RoCE is that it is limited to Layer 2 of the network, so its traffic cannot cross a router into another subnet. With the routable RDMA feature of the SwitchX-2 chip, RDMA traffic can be routed over Layer 3 of the network.
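The practical difference between Layer-2-only RoCE and routable RDMA comes down to which peers an adapter can reach. The toy check below illustrates that under a loudly simplifying assumption: one IP subnet is treated as one Layer 2 broadcast domain, and it ignores whether a router actually exists between subnets. The function name `rdma_reachable` is hypothetical; only Python's standard `ipaddress` module is used.

```python
import ipaddress


def rdma_reachable(src: str, dst: str, routable: bool) -> bool:
    """Toy reachability check for RDMA between two hosts.

    Simplifying assumption: one IP subnet == one Layer 2 broadcast domain.
    Layer-2-only RoCE reaches peers only inside that domain; routable RDMA
    may also cross a Layer 3 router into another subnet.
    """
    src_net = ipaddress.ip_interface(src).network
    dst_net = ipaddress.ip_interface(dst).network
    same_l2_domain = src_net == dst_net
    return same_l2_domain or routable


# Same subnet: reachable either way. Different subnets: only if routable.
print(rdma_reachable("10.0.1.5/24", "10.0.1.9/24", routable=False))
print(rdma_reachable("10.0.1.5/24", "10.0.2.9/24", routable=False))
print(rdma_reachable("10.0.1.5/24", "10.0.2.9/24", routable=True))
```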

Where SDN fits in the Mellanox software stack

The SwitchX-2 chip is available now, and will be rolled into Mellanox products in the coming weeks and months.

Shainer says that Mellanox doesn't want to be passive when it comes to OpenFlow, and wants to field its own software stack, much as it does for InfiniBand with its Unified Fabric Manager. UFM was not created by Mellanox itself; it is a network management tool the company got through its $218m acquisition of sometime-partner, sometime-competitor Voltaire back in November 2010.

Rather than grab an open source OpenFlow controller and jam it into the UFM stack, Mellanox is taking its time and extending UFM so that, as a controller, it speaks OpenFlow as well as Subnet Management. Shainer says the UFM-based OpenFlow controller is scheduled to come out sometime between the fourth quarter of this year and the first quarter of next year. More details will be forthcoming as the software gets closer to launch, he adds. ®