
Appro adds water-cooling to Xtreme supercomputers

An HPC-driven hot tub for every university and city

It's time to install the hot tub, sauna, and heated swimming pool next to the supercomputer centers of the world and open them up to the public as modern-day baths. If you can think of a better use for waste heat generated by petafloppers, so be it.

But clearly this is possible with a new line of supercomputers from Appro called the Xtreme-Cool machines, which have replaced fans and air cooling with a cooling system based on warm water heat exchangers.

The Xtreme-Cool machines will complement the existing Xtreme-X air-cooled machines that Appro has been selling for a number of years, which are based on its GreenBlade blade servers.

The Xtreme-Cool server nodes, blade enclosures, and racks have all been modified from their Xtreme-X counterparts to accommodate the water plumbing, which has to be done carefully because electronics and water don't mix well. Those of us who live in New York City got a blunt reminder of that this week thanks to Hurricane Sandy.

Because of these modifications, Xtreme-X machines cannot be upgraded to Xtreme-Cool machines, although when Appro starts selling them next year, El Reg is pretty sure Appro can be convinced to treat existing customers well. (Where do you offload a massively parallel x86 cluster, and how much value does it retain? Good question... Time to do some research.)

Water cooling is coming back into vogue as the heat density of systems approaches that of the bipolar circuits and multichip modules used in mainframes and Cray's high-end vector processors back in the 1980s. That was before big iron shifted to slower, cooler CMOS chip technology and made up for it with parallelism.

Appro is by no means the first to build a liquid-cooled machine in the supercomputing racket. The Cray-2 vector supercomputer, which came out in 1985, was liquid-cooled, as were the high-end mainframes of the time. More recently, parallel supers based on IBM's BlueGene/Q, iDataPlex, Power 575, and Power 775 supercomputing nodes have water blocking on the CPUs and main memory.

So do the Sparc64 processors and memory on the nodes of the K supercomputer built by Fujitsu, as well as those in the commercialized and more compute-dense PrimeHPC variants that Fujitsu is selling to commercial institutions and academic HPC centers.

Silicon Graphics announced water blocking on the CPU heat sinks for its ICE X supers this time last year, and Hewlett-Packard, IBM, SGI, and Cray have built heat exchangers into their racks to capture the heat from server nodes and storage before it escapes the racks and has to be extracted from the data center air. Cray uses a very funky liquid-to-gas phase-change evaporator system in its racks, which eliminates the need for 2.5 CRAC units.

Appro Xtreme-Cool water-cooled blade supercomputers

It is always better to capture the heat before it escapes into the air in the first place, and water is a much better transport mechanism for either heat or the lack thereof than is air. So Appro is going the way of the water block with the Xtreme-Cool systems.

The plumbing that it has come up with can be applied to x86 processors and their main memory on the modified GreenBlade server nodes (you can do CPUs or CPUs and memory as you see fit) as well as to nodes that are equipped with coprocessors such as Nvidia Tesla GPU coprocessors or Intel Xeon Phi x86 coprocessors.

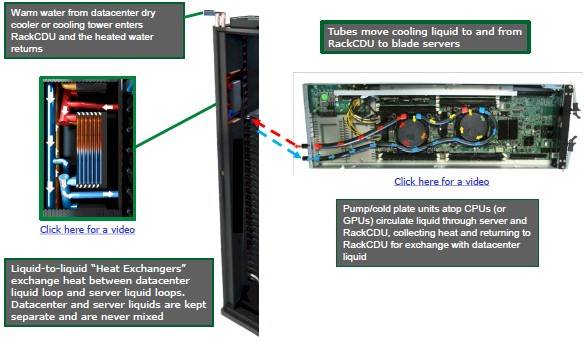

The plumbing in the Xtreme Cool rack and node

Here's how the Xtreme-Cool system works. The back of the rack is modified to have liquid-to-liquid (L2L in the lingo) heat exchangers. The exchangers keep the data center cooling water separate from the cooling liquid inside the compute nodes.

The top of each rack has a coolant reservoir, and this hooks into a coolant distribution manifold that interfaces with the multiple L2L heat exchangers. This manifold has drip-free quick connectors that mate to ports on each modified GreenBlade enclosure, which then feed liquid through tubes out to water blocks on the processors, memory, or coprocessors inside the chassis.

The setup also has a flow controller option to keep the warm water coursing through the Xtreme-Cool tubes within specified settings as workloads change and nodes are powered up and down across a cluster.
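Appro has not said how its flow controller works. As a purely hypothetical sketch of the idea, a proportional controller could raise coolant flow when the water leaving the nodes runs hotter than a target and cut it back when nodes power down and the water runs cooler. The function name, the 138 degree Fahrenheit target (borrowed from the outlet figure Appro quotes further on), the gain, and the pump limits are all illustrative assumptions, not Appro parameters.

```python
def flow_setpoint_lps(outlet_temp_f: float,
                      target_outlet_f: float = 138.0,
                      base_flow_lps: float = 1.0,
                      gain_lps_per_degf: float = 0.05,
                      min_flow_lps: float = 0.1,
                      max_flow_lps: float = 5.0) -> float:
    """Hypothetical proportional flow controller (not Appro's design).

    Returns a coolant flow setpoint in litres per second. Water leaving the
    nodes hotter than the target raises the flow; cooler water lowers it.
    The result is clamped to the pump's assumed operating range.
    """
    error_f = outlet_temp_f - target_outlet_f
    proposed = base_flow_lps + gain_lps_per_degf * error_f
    return max(min_flow_lps, min(max_flow_lps, proposed))


# Nodes running hot: outlet at 142 F, four degrees over target.
print(flow_setpoint_lps(142.0))  # about 1.2 litres per second
# Most nodes powered down: outlet at 100 F, so flow is clamped to the 0.1 minimum.
print(flow_setpoint_lps(100.0))
```

A production controller would normally add integral action so the outlet temperature settles on the target rather than merely near it; the proportional-only version is kept here for brevity.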

The Xtreme-Cool server nodes are two-socket and four-socket Intel Xeon E5 blade servers, similar to those offered in the GreenBlade machines or the Xtreme-X clusters, with 32GB, 64GB, or 128GB of main memory per node. Both machines offer the same compute density: 80 nodes per rack, or 40 nodes when each node is paired with an Nvidia Tesla GPU or Intel Xeon Phi x86 coprocessor.

That's ten dual-socket compute blades or five hybrid CPU-GPU or CPU-Phi blades per enclosure. The cluster is configured with Ethernet or InfiniBand switches and ConnectX server adapters from Mellanox Technologies, and runs the Appro Cluster Engine cluster and node management tools on Red Hat Enterprise Linux, its CentOS clone, or SUSE Linux Enterprise Server.

All of this fits in a 5U enclosure, which needs only three low-speed fans to cool the components that are not water-blocked. The Xtreme-Cool chassis has four power supplies, and the system offers an option for 480-volt power distribution from the data center mains, with either 208-volt or 277-volt three-phase power fed into the chassis.
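Taking the density figures at face value, and assuming (as the blade counts suggest) that each 5U unit is one blade enclosure with one node per blade, a quick sanity check shows both configurations come out at eight enclosures, or 40U of rack space. The node and blade counts come from the article; the ceiling-division helper and the 42U rack height are assumptions for illustration.

```python
ENCLOSURE_HEIGHT_U = 5   # per the article
RACK_HEIGHT_U = 42       # assumption: a common full-height rack


def enclosures_needed(nodes: int, blades_per_enclosure: int) -> int:
    """Ceiling division: a partly filled enclosure still occupies 5U."""
    return -(-nodes // blades_per_enclosure)


# (nodes per rack, blades per enclosure), from the article's figures
configs = {
    "dual-socket CPU only": (80, 10),
    "CPU plus GPU or Xeon Phi": (40, 5),
}

for name, (nodes, per_enclosure) in configs.items():
    count = enclosures_needed(nodes, per_enclosure)
    height = count * ENCLOSURE_HEIGHT_U
    print(f"{name}: {count} enclosures, {height}U, fits: {height <= RACK_HEIGHT_U}")
```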

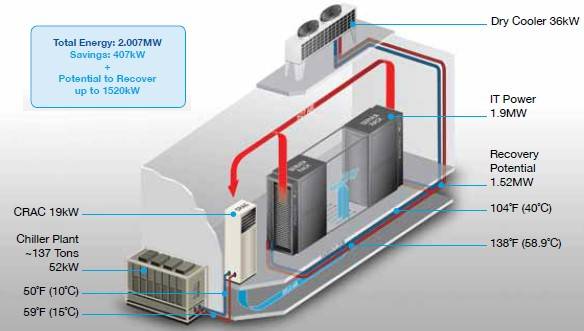

Power savings from the Xtreme Cool water-cooled supers

Here's why the Xtreme-Cool machines are hot, er, cool. A relatively large installation might consume a little over two megawatts, and the water blocks and heat exchangers save something on the order of 407 kilowatts that an air-cooled Xtreme-X system would have spent chilling air and moving it through the machine.
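To put 407 kilowatts in perspective, a back-of-envelope sketch converts it into annual energy. Running flat out all year is an assumption (real clusters sit partly idle, hence the utilization parameter), and the $0.10 per kilowatt-hour price is a hypothetical figure, not one from Appro.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy used (or saved) in a year by a constant load of power_kw."""
    return power_kw * HOURS_PER_YEAR * utilization


def annual_cost(power_kw: float, price_per_kwh: float,
                utilization: float = 1.0) -> float:
    """Annual cost of that energy at a flat price per kWh."""
    return annual_energy_kwh(power_kw, utilization) * price_per_kwh


saved_kwh = annual_energy_kwh(407)        # 3,565,320 kWh at full utilization
saved_dollars = annual_cost(407, 0.10)    # hypothetical $0.10/kWh
print(f"{saved_kwh:,.0f} kWh, about ${saved_dollars:,.0f} a year")
```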

And because you are pumping water into the system at 104 degrees Fahrenheit, pulling it back out at 138 degrees Fahrenheit, and using only a 36-kilowatt dry cooler to dissipate that heat, you don't need to install as many CRAC units and chiller plants.
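The inlet and outlet temperatures also pin down how much water has to circulate. The standard heat-balance relation is Q = m × c_p × ΔT, and the sketch below solves it for the mass flow m. The 1,600-kilowatt heat load is a hypothetical figure (roughly 80 per cent of a two-megawatt system), and the specific heat of 4.18 kJ/(kg·K) is an approximation for water in this temperature range.

```python
WATER_CP_KJ_PER_KG_K = 4.18  # approximate specific heat of water around 40-60 C


def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


def required_mass_flow_kg_s(heat_kw: float, inlet_f: float, outlet_f: float,
                            cp: float = WATER_CP_KJ_PER_KG_K) -> float:
    """Mass flow needed to carry heat_kw with the given temperature rise."""
    delta_t_k = fahrenheit_to_celsius(outlet_f) - fahrenheit_to_celsius(inlet_f)
    if delta_t_k <= 0:
        raise ValueError("outlet water must be warmer than inlet water")
    # kW is kJ/s, so (kJ/s) / (kJ/(kg*K) * K) leaves kg/s.
    return heat_kw / (cp * delta_t_k)


flow = required_mass_flow_kg_s(1600.0, inlet_f=104.0, outlet_f=138.0)
print(f"{flow:.1f} kg/s")  # roughly 20 kg/s, i.e. about 20 litres per second
```

The 34-degree Fahrenheit rise (about 18.9 K) is what keeps the flow modest: halve the temperature rise and the required flow doubles.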

The water-cooling system removes 80 per cent of the system heat, cutting cooling costs by around 50 per cent and allowing you to drive your power usage effectiveness (PUE) down to 1.1 or even lower. (PUE is the total energy consumed by the data center divided by the energy used by the compute, storage, and networking infrastructure that actually does the work.)

And if you move to outside-air cooling for the data center itself, you might be able to do away with those CRACs and chillers altogether and push the PUE even lower.
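Because PUE is a simple ratio, the arithmetic is easy to sketch. The functions below follow the definition given above; the 2,000-kilowatt IT load is a hypothetical number chosen to show how little non-IT overhead a PUE of 1.1 leaves room for.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_budget_kw(it_equipment_kw: float, target_pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) allowed at target_pue."""
    return it_equipment_kw * (target_pue - 1.0)


it_load_kw = 2000.0  # hypothetical IT load
print(pue(2200.0, it_load_kw))                     # 1.1
print(round(overhead_budget_kw(it_load_kw, 1.1)))  # 200 kW for everything else
```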

The Xtreme-Cool machines will ship in the first quarter of 2013, but Appro is taking orders now and will be showing them off at the SC12 supercomputing extravaganza in Salt Lake City in two weeks.

Pricing has not yet been determined, but it is reasonable to assume that the Xtreme-Cool machines will be slightly more expensive than the Xtreme-X machines, given the extra plumbing. How much is anybody's guess, but it is hard to imagine it being so much that companies wouldn't want to install it.

It will probably be something on the order of your first year's electricity savings, and if a server costs about the same as the power and cooling it consumes, that should work out to a premium of around 20 per cent, given the savings Appro cites above. ®
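The 20 per cent figure follows from a short chain of assumptions: the power saved is about 407 kilowatts out of an installation drawing a little over two megawatts (2,000 kilowatts is used here as a round stand-in), and a server costs about as much as its power and cooling. Reading that cost comparison as one year of power and cooling is an interpretation, and the function name is hypothetical.

```python
def first_year_premium(saved_kw: float, baseline_kw: float,
                       server_to_annual_power_cost: float = 1.0) -> float:
    """Price premium, as a fraction of server cost, that one year of savings covers.

    saved_kw / baseline_kw is the fraction of the power-and-cooling bill saved.
    server_to_annual_power_cost is the server price divided by one year's
    power and cooling cost; 1.0 means the two are equal.
    """
    savings_fraction = saved_kw / baseline_kw
    return savings_fraction / server_to_annual_power_cost


print(f"{first_year_premium(407, 2000):.0%}")  # 20%
```

If servers turn out to cost twice their annual power and cooling bill, the same savings cover only about a 10 per cent premium, which is why the estimate should be read as a rough guide.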