
WTF is... H.265 aka HEVC?

Ultra-efficient vid codec paves way for MONSTER-res TVs, decent mobe streaming

Singing, ringing tree

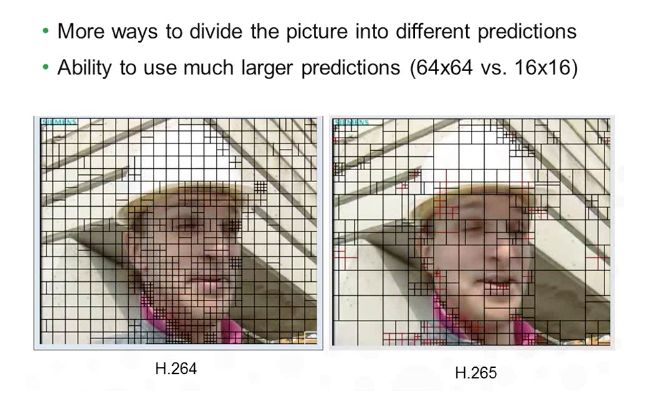

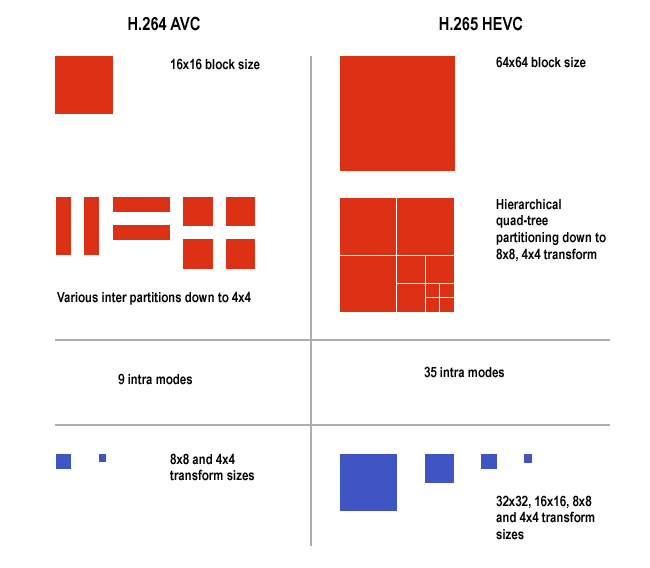

When an H.264 frame is encoded, it’s divided into a grid of squares, known as "macroblocks" in the jargon. H.264 macroblocks were no bigger than 16 x 16 pixels, but that’s arguably too small a size for HD imagery and certainly for Ultra HD pictures, so H.265 allows block sizes to be set at up to 64 x 64 pixels, the better to detect finer differences between two given blocks.

In fact, the process is a little more complex than that suggests. While H.264 works at the block level, with a 16 x 16 block containing each pixel’s brightness - "luma" in the jargon - and two 8 x 8 blocks of colour - "chroma" - data, H.265 uses a structure called a "Coding Tree". Encoder-selected 16 x 16, 32 x 32 or 64 x 64 blocks contain pixel brightness information. These luma blocks can then be partitioned into any number of smaller sub-blocks containing the colour - "chroma" - data. More light-level data is encoded than colour data because the human eye is better able to detect differences in the brightness of adjacent pixels than it is colour differences.

HEVC has a smart picture sub-division system

Source: Elemental Technologies

HEVC samples pixels as "YCrCb" data: a brightness value (Y) followed by two numbers that show how far the colour of the pixel deviates from grey toward, respectively, red and blue. It uses 4:2:0 sampling - each of the two colour channels carries one-quarter as many samples as the brightness channel, specifically half the number of samples in each axis. Samples are 8-bit or 10-bit values, depending on the HEVC Profile the encoder is following. You can think of a 1080p picture containing 1920 x 1080 pixels’ worth of brightness information but only 960 x 540 pixels’ worth of colour information. Don’t worry about the "loss" of colour resolution - you literally can’t see it.
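To put numbers on that, here is a minimal Python sketch of the 4:2:0 arithmetic. The function names are made up for illustration, and the assumption that 10-bit samples are stored in 16-bit words is ours rather than anything the standard dictates.

```python
def plane_sizes_420(width, height):
    """Return ((luma_w, luma_h), (chroma_w, chroma_h)) for 4:2:0 sampling."""
    # Each chroma plane has half the samples in each axis (rounded up for odd sizes).
    chroma = ((width + 1) // 2, (height + 1) // 2)
    return (width, height), chroma


def frame_bytes_420(width, height, bit_depth=8):
    """Raw size in bytes of one uncompressed 4:2:0 frame: one luma plane, two chroma planes."""
    (lw, lh), (cw, ch) = plane_sizes_420(width, height)
    # Assumption: anything deeper than 8 bits is stored in a 16-bit word.
    bytes_per_sample = 1 if bit_depth <= 8 else 2
    return (lw * lh + 2 * cw * ch) * bytes_per_sample


luma, chroma = plane_sizes_420(1920, 1080)
print(luma, chroma)                  # (1920, 1080) (960, 540)
print(frame_bytes_420(1920, 1080))   # 3110400
```

The two chroma planes together add only half as many samples again as the luma plane, which is why a raw 8-bit 4:2:0 frame works out at 1.5 bytes per pixel rather than three.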

A given "Coding Tree Unit" is the root of a tree structure that can comprise a number of smaller, rectangular "Coding Blocks", which can, in turn, be divided into smaller still "Transform Blocks", as the encoder sees fit.
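As a rough illustration of the tree idea, the Python sketch below splits a 64 x 64 block into four quarters, recursively, wherever the pixels inside vary too much. The split test (a simple brightness range checked against a made-up threshold), the square-only splits and all the names are assumptions for the sake of the example. A real HEVC encoder weighs bit cost against picture quality and has more partition shapes to choose from.

```python
def split_block(pixels, x, y, size, min_size=8, threshold=10):
    """Recursively quarter a square block while its brightness range exceeds threshold.

    Returns the leaf blocks as a list of (x, y, size) tuples.
    """
    values = [pixels[r][c] for r in range(y, y + size) for c in range(x, x + size)]
    # Stop when the block is as small as allowed or already nearly uniform.
    if size <= min_size or max(values) - min(values) <= threshold:
        return [(x, y, size)]
    half = size // 2
    leaves = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += split_block(pixels, x + dx, y + dy, half, min_size, threshold)
    return leaves


# A flat grey 64 x 64 picture with one bright 8 x 8 detail in the top-left corner.
frame = [[128] * 64 for _ in range(64)]
for r in range(8):
    for c in range(8):
        frame[r][c] = 255

leaves = split_block(frame, 0, 0, 64)
print(len(leaves))  # 10
```

Only the corner holding the detail is carved into small blocks. The flat remainder is covered by three 32 x 32 and three 16 x 16 blocks, which is the saving that bigger block sizes are meant to deliver.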

The block structure may differ between H.264 and H.265, but the motion detection principle is broadly the same. The first frame’s blocks are analysed to find those that are very similar to each other - areas of equally blue sky, say. Subsequent frames in a sequence are not only checked for these intrapicture similarities but also to determine how blocks move between frames in a sequence. So a block of pixels that contains the same colour and brightness values through a sequence of frames, but changes location from frame to frame, only needs to be stored once. An accompanying motion vector tells the decoder where to place those identical pixels in each successive recovered frame.
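The motion-vector idea can be sketched in a few lines of Python. This is a textbook exhaustive block-matching search using the sum of absolute differences, not the method any particular H.264 or H.265 encoder uses. The function names, the 4 x 4 block size and the three-pixel search range are all assumptions chosen to keep the example small.

```python
def sad(ref, cur, rx, ry, cx, cy, size):
    """Sum of absolute differences between a block in ref and a block in cur."""
    return sum(
        abs(ref[ry + r][rx + c] - cur[cy + r][cx + c])
        for r in range(size)
        for c in range(size)
    )


def find_motion_vector(ref, cur, cx, cy, size=4, search=3):
    """Return (dx, dy, cost): the offset into ref that best matches cur's block at (cx, cy)."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = cx + dx, cy + dy
            # Skip candidate positions that fall outside the reference frame.
            if rx < 0 or ry < 0 or rx + size > width or ry + size > height:
                continue
            cost = sad(ref, cur, rx, ry, cx, cy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2], best[0]


# Two 16 x 16 frames: the same 4 x 4 object sits at (4, 4) in the earlier
# frame and at (6, 5) in the later one.
ref = [[0] * 16 for _ in range(16)]
cur = [[0] * 16 for _ in range(16)]
for r in range(4):
    for c in range(4):
        value = 100 + 10 * r + c
        ref[4 + r][4 + c] = value
        cur[5 + r][6 + c] = value

print(find_motion_vector(ref, cur, 6, 5))  # (-2, -1, 0)
```

A cost of zero means the block in the later frame is a pixel-for-pixel copy of one in the earlier frame, so the encoder would need to send only the vector (-2, -1), not the pixels themselves.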

Made for parallel processing

H.264 encoders check intrapicture similarities in eight possible directions from the source block. H.265 extends this to 33 possible directions - the better to capture more subtle patterns within a picture. There are also other tricks that H.265 gains to predict more accurately where to duplicate a given block to generate the final frame.

As HEVC boffins Gary Sullivan, Jens-Rainer Ohm, Woo-Jin Han and Thomas Wiegand put it in a paper published in the IEEE journal Transactions on Circuits and Systems for Video Technology in December 2012: “The residual signal of the intra- or interpicture prediction, which is the difference between the original block and its prediction, is transformed by a linear spatial transform. The transform coefficients are then scaled, quantised, entropy coded and transmitted together with the prediction information.”
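In code, that pipeline looks something like the Python sketch below, which runs one row of four pixels through prediction, transform and quantisation, then back again. Several things here are our assumptions rather than anything from the standard: a 4-point Walsh-Hadamard transform stands in for HEVC's actual transforms, the quantiser is a plain divide by a step size, entropy coding is left out altogether, and the function names are invented.

```python
# 4-point Walsh-Hadamard matrix: a simple stand-in for a codec's real transform.
H = [
    [1,  1,  1,  1],
    [1,  1, -1, -1],
    [1, -1, -1,  1],
    [1, -1,  1, -1],
]


def transform(values):
    """Multiply a 4-element vector by H."""
    return [sum(h * v for h, v in zip(row, values)) for row in H]


def encode_row(original, prediction, step):
    """Residual -> transform -> quantise. Returns the quantised levels."""
    residual = [o - p for o, p in zip(original, prediction)]
    coeffs = transform(residual)
    return [int(c / step) for c in coeffs]  # int() truncates toward zero


def decode_row(levels, prediction, step):
    """Rescale -> inverse transform -> add the prediction back."""
    coeffs = [level * step for level in levels]
    # H is symmetric and H times H is 4 times the identity,
    # so the inverse is another pass through H followed by a divide by 4.
    residual = [round(v / 4) for v in transform(coeffs)]
    return [p + r for p, r in zip(prediction, residual)]


original = [52, 55, 61, 66]
prediction = [50, 50, 60, 60]

levels = encode_row(original, prediction, step=4)
print(levels)                                  # [3, 0, 0, -2]
print(decode_row(levels, prediction, step=4))  # [51, 55, 61, 65]
```

Two of the four numbers to be transmitted are zero, which is what makes the subsequent entropy coding effective, and the decoded row is off by one in two places, which is the "lossy" part of lossy compression.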

When the frame is decoded, extra filters are applied to smooth out artefacts generated by the blocking and quantisation processes. Incidentally, we can talk about frames here, rather than interlaced fields, because H.265 doesn’t support interlacing. So there will be no 1080i vs 1080p debate with HEVC. “No explicit coding features are present in the HEVC design to support the use of interlaced scanning,” say the minds behind the standard. The reason? “Interlaced scanning is no longer used for displays and is becoming substantially less common for distribution.” At last we’ll be able to leave our CRT heritage behind.

H.264 vs H.265

In addition to all this video coding jiggery pokery, H.265 includes the kind of high-level information that H.264 data can contain to help the decoder cope with the different methods by which a stream of video data can move from source file to screen - along a cable or across a wireless network, say - which will result in degrees of data packet loss. But HEVC gains extra methods for segmenting an image or the streamed data to take better advantage of parallel processing architectures and synchronise the output of however many image processors are present.

H.264 has the notion of "slices" - sections of the data that can be decoded independently of other sections, either whole frames or parts of frames. H.265 adds "tiles", which are an even number of 256 x 64 slices into which a picture can be optionally segmented. Each tile contains the same number of HEVC’s core Coding Tree Units, so there’s no need to synchronise output: each graphics core processes any given CTU in the same amount of time. Other tile sizes may be allowed in future versions of the standard.