
Facebook launches data center ticker tape

Bit barn efficiency metrics on a minute-by-minute basis

Facebook has heaped pressure on major data center operators to be more transparent, publishing a dashboard that gives up-to-the-minute figures on the efficiency of the social network's gigantic bit barns.

The dashboards for the company's Prineville, Oregon, and Forest City, North Carolina, data centers were made available on Thursday, and once the company's new facility in Lulea, Sweden, is finished, Facebook will make that data available as well.

"Why are we doing this? Well, we're proud of our data center efficiency, and we think it's important to demystify data centers and share more about what our operations really look like," Lyrica McTiernan, a program manager for Facebook's sustainability team, wrote in a blog post discussing the change.

"Through the Open Compute Project (OCP), we’ve shared the building and hardware designs for our data centers. These dashboards are the natural next step, since they answer the question, 'What really happens when those servers are installed and the power’s turned on?'"

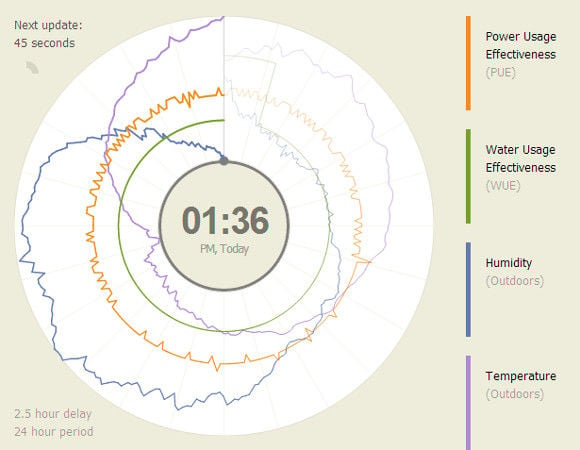

The dashboard outputs information on both the facility's power usage effectiveness* and water usage effectiveness, as well as the outdoor humidity and temperature. This means data center buffs can see how environmental factors influence the efficiency with which Facebook can run its operation. Facebook designed the real-time information displays in collaboration with agency partner Area 17.
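PUE is explained in the bootnote below. Water usage effectiveness is the less familiar of the two. The Green Grid defines it as annual site water usage in liters divided by IT equipment energy in kilowatt-hours. The minimal Python sketch below uses made-up totals for a hypothetical facility, not Facebook's figures:

```python
def wue(site_water_liters: float, it_energy_kwh: float) -> float:
    """Water usage effectiveness: liters of site water per kWh of IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return site_water_liters / it_energy_kwh


# Hypothetical annual totals, for illustration only.
annual_water_l = 2_800_000.0
annual_it_kwh = 10_000_000.0
print(f"WUE = {wue(annual_water_l, annual_it_kwh):.2f} L/kWh")  # WUE = 0.28 L/kWh
```

Lower is better. A facility that used no water for cooling or humidification would score close to zero.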

Facebook's surprisingly informative Spirograph

In the past, Facebook (and the rest of the tech industry's major data center operators) has been pilloried by Greenpeace and other environmental activists over the efficiency of their data centers, and the types of power they consume.

Thursday's announcement sees Facebook move from a quarterly disclosure of its data centers' efficiency (as favored by Google), to publishing information every minute on a two-and-a-half-hour delay, along with trailing 12-month averages.
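Facebook has not said how it computes the delayed feed or the trailing averages. The Python sketch below therefore rests on assumptions that are ours, not Facebook's: one sample per minute, "12 months" approximated as 365 days, and the trailing figure computed energy-weighted, as total facility energy divided by total IT energy, rather than as a mean of per-minute ratios. `Sample`, `publishable`, and `trailing_pue` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumptions for illustration (not Facebook's published method).
PUBLISH_DELAY = timedelta(hours=2, minutes=30)
TRAILING_WINDOW = timedelta(days=365)


@dataclass
class Sample:
    at: datetime
    facility_kw: float  # total facility power draw
    it_kw: float        # power delivered to IT equipment


def publishable(samples: list[Sample], now: datetime) -> list[Sample]:
    """Return only samples old enough to show, given the publication delay."""
    cutoff = now - PUBLISH_DELAY
    return [s for s in samples if s.at <= cutoff]


def trailing_pue(samples: list[Sample], now: datetime) -> float:
    """Energy-weighted PUE over the trailing window ending at the delayed cutoff."""
    end = now - PUBLISH_DELAY
    start = end - TRAILING_WINDOW
    window = [s for s in samples if start < s.at <= end]
    it_total = sum(s.it_kw for s in window)
    if it_total == 0:
        raise ValueError("no IT load in window")
    # Samples are equal one-minute intervals, so summing kW is proportional to kWh.
    return sum(s.facility_kw for s in window) / it_total


# Hypothetical usage: five hours of flat minute-by-minute readings.
now = datetime(2024, 1, 1, 12, 0)
samples = [Sample(now - timedelta(minutes=m), 1090.0, 1000.0) for m in range(300)]
print(len(publishable(samples, now)))  # 150
```

Weighting by energy keeps a few low-load minutes with odd ratios from skewing the year-long figure.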

"At this time we don't have plans to change, but will continue to provide consistent updates and transparency around these efforts," Google told us in an emailed statement.

Facebook will also publish the front-end code for the dashboards as open source on GitHub "sometime in the coming week," allowing other data center operators to use the tech to display information about their own data centers.

"We translate the data from our [Building Management System] into .csv files that are then visualized using the dashboards," a Facebook spokeswoman told us via email. "We've focused on standardizing the data into .csv format so that we can open source the front-end UI such that others who convert their data into .csv format – from whatever management systems they have in place – will be able to visualize their data in this way as well."
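For operators wondering what "convert their data into .csv format" might involve, the Python sketch below serializes building-management readings with the standard library's `csv.DictWriter`. Facebook has not published the schema its dashboards expect, so the column names in `FIELDS` and the helper `bms_to_csv` are hypothetical.

```python
import csv
import io

# Hypothetical column layout; the real dashboard schema may differ.
FIELDS = ["timestamp", "facility_kw", "it_kw", "water_l", "outdoor_temp_c", "outdoor_rh_pct"]


def bms_to_csv(readings: list[dict]) -> str:
    """Serialize building-management readings into CSV text with a header row."""
    buf = io.StringIO()
    # extrasaction="ignore" drops vendor-specific keys not in FIELDS.
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for reading in readings:
        writer.writerow(reading)
    return buf.getvalue()


# Made-up reading as some BMS might expose it.
readings = [
    {"timestamp": "2024-01-01T00:00", "facility_kw": 1090.0, "it_kw": 1000.0,
     "water_l": 4.7, "outdoor_temp_c": 4.5, "outdoor_rh_pct": 81, "vendor_tag": "AHU-3"},
]
print(bms_to_csv(readings), end="")
```

Agreeing on a flat file rather than a vendor API is what lets one front end serve data from any management system.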

Though this all sounds fairly benign, it may upset companies that produce Building Management Systems, such as Schneider Electric, BuildingIQ, and IBM. These firms like to use flashy management dashboards similar to the one Facebook will publish as open source as part of their sales pitch, The Register understands. At the time of writing, Facebook had not responded to queries about the nature of its building management system, and had not told us whether it was self-built or acquired.

Though Facebook uses these announcements to stress the importance of transparency in web infrastructure, it is in a privileged position among the large data center operators: unlike Google, Amazon, and Microsoft, Facebook is not embroiled in a massive IaaS price war, so it need not be quite so paranoid about disclosing any scrap of information about its own facilities that could give a competitor an edge. All Facebook needs to worry about is preserving its user base so it can display ads to them, and any data center info it discloses is unlikely to be useful in Google's efforts to swell the ranks of G+.

This Vulture wishes to extend his thanks to Facebook for not mentioning "Big Data" anywhere in this data-heavy announcement. ®

* Bootnote

Power Usage Effectiveness is an industry standard metric: the ratio of the total power consumed by a data center, including its supporting infrastructure (cooling, lights, et cetera), to the power used by its compute, storage, and network resources. Facebook's PUE of 1.09 means that for every watt spent on IT gear, 0.09 watts are spent on supporting it. This compares favorably with data centers operated by Google, and is miles better than the industry average of 1.5 to 1.9.
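The arithmetic behind those comparisons is simple. Because PUE divides total facility power by IT power, the non-IT overhead per watt of IT load is PUE minus one. The Python sketch below uses only the figures quoted above. `overhead_per_it_watt` is a hypothetical helper name.

```python
def overhead_per_it_watt(pue: float) -> float:
    """Watts spent on cooling, lighting, power conversion etc. per watt of IT load.

    PUE = total facility power / IT equipment power, so overhead = PUE - 1.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


for label, pue in [("Facebook", 1.09), ("industry, low end", 1.5), ("industry, high end", 1.9)]:
    print(f"{label}: {overhead_per_it_watt(pue):.2f} W overhead per IT watt")
```

At the top of the industry range, a facility burns roughly ten times as much overhead per IT watt as Facebook's quoted figure (0.90 W versus 0.09 W).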