This article is more than 1 year old

China rumored to rule impending Top500 supers list with 50-plus petaflopper

Big Red wields 'hard power' that is Intel Inside

It looks like China is getting ready to scare the wits out of – or maybe some life into – the US and European supercomputing establishments and their sugardaddy governments once again by taking the top slot in the June rankings of the Top500 supercomputers in the world. And this time, it will be with an all-Intel ceepie-phibie hybrid box using a proprietary interconnect.

Over the Memorial Day holiday weekend, rumors started running around that China was going to get the top ranked machine into the field. HPCwire caught wind of the machine ahead of everyone else and published this initial take based on the chatter and then this follow up with more details. (Tip of the hat, there.)

El Reg has manned the phones and done some digging of its own as well, and while no one wants to steal the Top500 thunder ahead of the International Super Computing event in Leipzig, Germany next month, just like loose lips sink ships, loose chops talk about flops when there is national politics involved.

A lot of the details of the Tianhe-2 machine are out there, and it is a pity that they don't all agree, as often happens with rumors. (Tianhe is Chinese for "Milky Way," in case you are wondering.)

Chinese server vendor Inspur, no doubt responding to the initial story published by HPCwire, sent El Reg an invitation to come visit the company in Leipzig to talk all things HPC, and then dropped the following paragraph in. This is the original translation Inspur did, not something we passed through Google Translate, although I suspect Inspur might have for reasons that will become obvious:

Besides, the fresh worldwide HPC Top500 will be announced on the opening day of ISC13. It is said that the Chinese Tianhe-2 supercomputer build by Guangzhou Supercomputer Centre will top Top500 list. This will be the second time that China win the first chair of Top500 after 3 years of Tianhe-1A. This based the continuous improvement of Chinese HPC industry, that Chinese 'hard power' is one of the largest in the world like HPC R&D and construction etc. Meanwhile, the Chinese 'soft power' include HPC application and talents catches up on the advanced level in the world. The worldwide HPC distribution maybe is changing.

Inspur stopped short of saying it was building the machine, and because of time delays we have yet to get any kind of clarification. Suffice it to say, Inspur would not be bragging about it if Dawning or Lenovo had the deal.

Addison Snell, analyst at HPC watcher Intersect360 Research, said that he has heard rumors from multiple sources, and that the machine is a mix of Intel Xeon processors and Xeon Phi x86-based coprocessors that delivers north of 50 petaflops of aggregate peak performance on double-precision math.

The talk is that the machine will do somewhere around 52 to 54 petaflops peak and maybe 25 to 30 petaflops sustained on the Linpack Fortran parallel processing benchmark test, which is used to rank the Top500 machines in the world.

That means Tianhe-2 will blow by the "Titan" XK7 ceepie-geepie that was ranked first on the November 2012 Top500 list. The Titan behemoth, which is one of the US Department of Energy's big bad boxes and which is located at Oak Ridge National Laboratory, marries a sixteen-core Opteron 6200 processor from Advanced Micro Devices to a Tesla K20X GPU coprocessor, has 18,688 processors and 18,688 GPUs for a total of 560,640 cores.

It uses the earlier generation "Gemini" interconnect to lash the CPUs together into a 3D torus, and the GPUs link to the CPUs using the HyperTransport links on the Opteron chips. Titan has a peak theoretical performance of 27.1 petaflops and did 17.58 petaflops sustained on the Linpack test last fall.

If Titan's performance remains unchanged, then Tianhe-2 is going to be the new titan, at least until the US and Europe come up with some cash to build bigger boxes. (Maybe they can borrow the money from the Chinese government, which has something on the order of $2 trillion sitting around?)

Any of the DOE labs could build a much more powerful and scalable machine using Cray's Intel's "Aries" interconnect and its dragonfly topology. Cray sold the Gemini and Aries interconnects to Intel back in April 2012 for $140m, but still gets to use Aries in its current "Cascade" XC30 line of supers.

The Aries interconnect is better than its Gemini XE or SeaStar2+ XT predecessors because it links to server nodes over PCI-Express 3.0 slots rather than through Intel's QuickPath or AMD's HyperTransport point-to-point interconnects, which link CPUs to each other and to main memory in SMP/NUMA systems.

And while Aries has a very clever mix of optical and copper cabling, the requirement for PCI-Express 3.0 has frozen out AMD's Opterons, which are stuck at PCI-Express 2.0 slots and which don't even have those on the die.

Cray has not yet offered support for Nvidia Tesla or Intel Xeon Phi coprocessors on Cascade. So, if you want to build a 100 petaflops XC30 machine, which you can do, you need to load it up with Xeon E5 processors. And that is more expensive than using a mix of CPUs and GPUs – on the order of four times more expensive per floating point operation.

Cray should have launched support for Tesla K20 and K20X GPU coprocessors and Intel Xeon Phi coprocessors on Day One when the Cascade machines came out last November, but that would have put a serious damper on Intel's Xeon E5 sales in the HPC racket and, frankly, Intel and Nvidia could not have made enough of their coprocessors anyway.

This is just a long way of explaining why the DOE labs in the US have not moved forward, and you can expect, budget permitting, that at least some of the labs will try to get big fat Cascade boxes with some kind of accelerator into the field to compete against China.

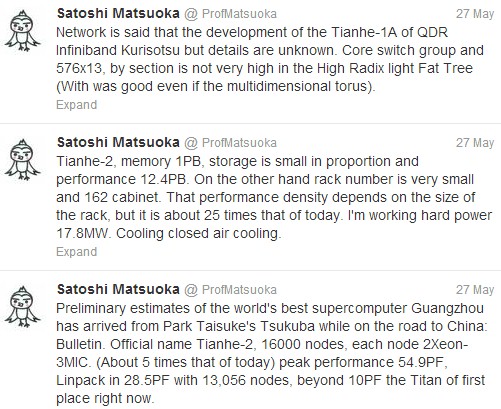

Satoshi Matsuoka, professor of computer science at the Tokyo Institute of Technology and the man in charge of the Tsubame ceepie-geepie that is one of the most powerful machines in Japan, spilled most of the beans, which had been spilled by an unknown (to us) Chinese researcher who was talking at a conference within the past several days about Tianhe-2. Here's what Matsuoka posted on Twitter, and it seems pretty precise although it is still rumor, not confirmed feeds and speeds:

Possible feeds and speeds of the Tianhe-2 super built by the Chinese government

In this case, Google is doing the translating from Japanese. The numbers that Matsuoka is giving do not conform to the feeds and speeds of the expected a 100 petaflopper that is being commissioned by the Chinese Ministry of Science to be used in space exploration and healthcare research.

That machine, as yet unnamed, will consist of around 100,000 of Intel's "Ivy Bridge-EP" Xeon E5-2600 v2 processors and the same number of the next-generation "Knights Landing" Xeon Phi coprocessors – all for a very reasonable $100m supposedly.

The Tianhe-2 box, which was expected to hit 100 petaflops by 2015, not 50 petaflops by 2013, will almost certainly be based on the forthcoming Xeon E5-2600 v2 processors, which are expected in the third quarter of this year.

So how has this Tianhe-2 machine already been tested running Linpack? Well, just like Intel started shipping the "Sandy-Bridge-EP" Xeon E5 v1 chips back in September 2011 before their March 2012 launch, it is reasonable to surmise that the Ivy Bridge-EP parts are going to select customers – or maybe in this case, customer – well ahead of the general availability of the chips.

Intel, of course, refused to comment on any rumors or speculation about the Tianhe-2 machine.

It would be great if Intel had the next-generation Knights Landing x86 coprocessors ready, but the initial "Knights Corner" Xeon Phi chips are only in the field for six months. The word has been going around that Intel is getting ready to ship upgraded versions of these Knights Corner parts, and it is very likely that one of these parts – or perhaps a mix – will be used in the Tianhe-2 machine.

At the moment, there is a Xeon Phi 5110P part with 60 cores that is rated at 1.01 teraflops that has passive cooling and a Xeon Phi 3120A part with active cooling (meaning a fan) with 57 cores rated at a tad over 1 teraflops. The 5110P is the better option because it burns 225 watts instead of the 300 watts of the 3120A and it has more memory capacity and bandwidth. At $2,649, the 5110P is a bit more expensive than the $2,000 Intel is charging for the 3120A.

The word on the street is that Intel will be cooking up kicker Knights Corner 3000 and 5000 series chips as well as a 7000 series with passive cooling and one that integrates onto system boards. These are all based on the same 62-core Knights Corner chips, but will have a different mix of active cores, clock speeds, memory sizes, price points, packaging and cooling.

If El Reg had to guess which GPU Tianhe-2 is using, it will be the one with the most cores, the highest clocks, and the integrated system board packaging, which we believe is called the Xeon Phi 7120X.

It is hard to guess the feeds and speeds of this expected 7120X x86 coprocessor, and there is no guarantee that Tianhe-2 is using them, but let us assume for the sake of a thought experiment that it has all 62 cores fired up. That is only a 3.3 per cent bump in performance if you keep the clock speed at 1.053GHz for the Xeon Phi cores.

You can get another 4.5 per cent more oomph by moving to 1.1GHz, the same clock as the 3120A, but now you have to dump the heat. If you do the packaging right on an integrated GPU, you could dump the extra heat.

So let's assume Intel has come up with such a design with Inspur. Then each 7120X Xeon Phi would deliver 7.9 per cent more floating point performance, which gets you up to 1.09 teraflops. (Doesn't seem to be worth the trouble, does it, especially if it burns more juice and creates more heat?)

With a total of 16,000 server nodes, each with two Xeon E5 v2 processors and three Xeon Phi coprocessors, you can get 52.4 petaflops of peak performance out of the Xeon Phi side of the machine alone if Intel can goose the Xeon Phi as outlined above. Matsuoka suggests that only 13,056 nodes were fired up to run the Linpack test, and that would give you 42.75 petaflops on the Xeon Phi side of the box if the X7120 part is as this hack imagines it.

There is some confusion about the interconnect that is being used in Tianhe-2, but sources tell El Reg it is a proprietary interconnect even though they don't know which one. It could be the Arch interconnect (perhaps an enhanced version) that was used in the Tianhe-1A machine and developed by the Chinese government.

The switch at the heart of Arch has a bi-directional bandwidth of 160Gb/sec, a latency for a node hop of 1.57 microseconds, and an aggregate bandwidth of more than 61Tb/sec. But there is some talk that it is a modified 40Gb/sec (Quad Data Rate) InfiniBand network that is lashing this Tianhe-2 beast together, too.

And it would be funny, in a way that would make the US government go nuts, to have Intel put the Aries interconnect or even TruScale InfiniBand at the heart of Tianhe-2, but with export controls on interconnects to certain countries, that is not gonna happen.

You would think that the Chinese government would want to push the limits of Arch, prepping the way for the day when it has its Godson MIPS chips ramped up and perhaps its own accelerators up to speed and can tell US-based chip makers to have a nice life.

If Tianhe-2 is using a modified InfiniBand instead of Arch, it could be that Arch is not suitable for the particular applications that are expected to run on Tianhe-2. About which, no one knows anything. And the performance of those applications is what will be interesting to find out about.

Bootnote: Having slept on the issue and pondered it a bit more, it is also possible that Tianhe-2 will use a different Xeon Phi part than the 7120X. Think about it for a moment. Can Intel really make 48,000 of its highest-end parts? That would be truly dramatic. And as this hack was writing yesterday, it seemed that what would be better still would be a less expensive part, such as a 3000X or a 5000X that snaps into a mezzanine card on the server board. Something where a lot of the Xeon Phi cores are duds, but the price is right. That would be why you might have three Xeon Phis for every two Ivy Bridge-EP processors. ®