
Never mind your little brother - happy 10th birthday, H.264

Today it's all about you, Mister Video Codec

The science of prediction

Every message we receive is a mixture of novelty and predictability. Compression is a method of concentrating a message by taking out the predictability or redundancy so it contains a greater proportion of information. It follows immediately that one of the key elements of compression must be prediction. I don’t mean prediction in the sense (or perhaps nonsense) that the world is going to end, but in the sense of identifying redundancy.

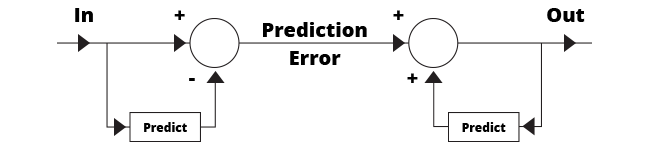

Any prediction we attempt is doomed to be imperfect, so we don’t attempt perfection. Instead we build systems that can handle the imperfection. The diagram shows a system that contains two identical predictors, one at the sending end and one at the receiving end - hence the need for standardisation.

MPEG prediction methods are, in essence, the same but their capacity to predict has steadily improved over the years

The predictor at the sending end has access to what went before and attempts to predict what will come next. Clearly it will fail to some extent. But by subtracting the prediction from the original, to create what is called the residual, the failure is measured. At the receiving end, the same prediction takes place, failing in the same way. But if the residual is transmitted and added to the prediction, the original message is recovered and, properly engineered, the system is lossless.
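The loop just described can be tried out in a few lines of Python. What follows is a minimal sketch, not anything taken from an MPEG standard: the predictor is a deliberately crude assumption (it guesses that the next sample equals the previous one), and the names `predict`, `encode` and `decode` are invented for the illustration.

```python
def predict(history):
    """Toy predictor (an assumption): the next sample equals the previous one."""
    return history[-1] if history else 0


def encode(samples):
    """Sending end: subtract the prediction from each sample to get the residual."""
    history, residuals = [], []
    for sample in samples:
        residuals.append(sample - predict(history))
        history.append(sample)  # the sender knows what the true sample was
    return residuals


def decode(residuals):
    """Receiving end: make the same prediction and add the residual to it."""
    history = []
    for residual in residuals:
        history.append(predict(history) + residual)
    return history


samples = [100, 101, 103, 103, 104, 110]
residuals = encode(samples)   # [100, 1, 2, 0, 1, 6]
restored = decode(residuals)  # identical to samples, so nothing was lost
```

After the first sample the residuals are much smaller numbers than the samples themselves, which is what makes them cheaper to send. A better predictor would shrink them further without changing the structure of the loop.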

All of the MPEG coders work in this way. The only difference between them is that with the passage of time their ability to predict has improved so that the compression factor could increase. This use of prediction leads to the alternative interpretation of MPEG: Moving Pictures by Educated Guesswork.

Coding time and space

H.264 works in two major areas. Within the space of a single picture it can identify redundancy using spatial coding. Within the timespan of a series of pictures it can use temporal coding. Of the two, temporal coding is the more powerful because much of the time little changes from one TV frame to the next.

Temporal coding uses comparison of successive pictures. Instead of sending a new picture each time, what is sent is a picture difference which is added to the previous picture to make the new one. If the scheme is too simple it won’t be practical. If the previous picture is always needed to decode the present one, changing channel is going to be a problem, and clearly if the signal is corrupted briefly, every subsequent picture will reflect that corruption.

In practice we overcome those objections by creating Groups Of Pictures (GOPs). Every GOP begins with a specially coded picture that can be decoded fully with no other information, so that after a channel switch viewing can begin from the next group. Equally any uncorrectable error will be prevented from propagating beyond the next group. This is one reason a digital TV set takes longer to change channels: the decoder has to wait for the start of the next group.
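To see why the group structure matters, here is a toy model of difference coding with periodic independent pictures. It is a sketch under loud assumptions: each "picture" is just a short list of numbers, the group length of four is far shorter than anything a broadcaster would use, and `encode_stream` and `decode_from` are hypothetical names.

```python
GOP_LENGTH = 4  # assumption for the demo; real groups are much longer


def encode_stream(frames, gop_length=GOP_LENGTH):
    """Code each picture as ('I', picture) or ('D', difference from the previous one)."""
    coded = []
    for i, frame in enumerate(frames):
        if i % gop_length == 0:
            coded.append(("I", list(frame)))  # decodable with no other information
        else:
            previous = frames[i - 1]
            coded.append(("D", [a - b for a, b in zip(frame, previous)]))
    return coded


def decode_from(coded, start):
    """Decode from position `start`, as if the viewer had just changed channel.

    Returns {picture index: decoded picture}. Difference pictures that arrive
    before the first independent picture have nothing to be added to, so they
    are skipped.
    """
    shown = {}
    current = None
    for i in range(start, len(coded)):
        kind, data = coded[i]
        if kind == "I":
            current = list(data)
        elif current is not None:
            current = [c + d for c, d in zip(current, data)]
        else:
            continue  # still waiting for the start of a group
        shown[i] = current
    return shown


frames = [[10 + t, 20 + 2 * t, 30] for t in range(8)]
coded = encode_stream(frames)
shown = decode_from(coded, start=2)  # tune in part-way through the first group
```

Tuning in at picture 2 yields nothing until picture 4, the start of the next group, after which every picture is recovered exactly. The same mechanism stops a corrupted picture from spoiling anything beyond the end of its own group.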

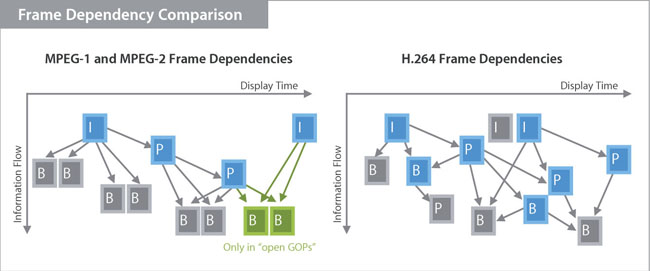

I‑frame (Intra-coded picture - an independent image); P‑frame (Predicted picture) and B‑frame (Bi-predictive picture)

Previously, frame reordering relied on B-frames, but with H.264 any type of predictive frame (B or P) can be used.

Movement results in objects having different locations in successive frames and potentially causes prediction failure and large increases in the picture difference data. In order to overcome that, the encoder measures movements from picture to picture and sends motion vectors to the decoder. The decoder uses the vectors to shift information from an earlier picture to a new location on the screen where it will be more similar to the present picture, improving the prediction.
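One simple way for an encoder to measure movement is block matching: take a block of the present picture and hunt around the same area of the earlier picture for the patch that resembles it most. The sketch below does this by exhaustive search using the sum of absolute differences as the measure of similarity. It is an illustration only; practical encoders use much faster search strategies and sub-pixel accuracy, and the function names and the tiny 8 x 8 test pictures are assumptions made for the demo.

```python
def sad(ref, cur, rx, ry, cx, cy, size):
    """Sum of absolute differences between a block of ref and a block of cur."""
    return sum(
        abs(ref[ry + j][rx + i] - cur[cy + j][cx + i])
        for j in range(size)
        for i in range(size)
    )


def find_motion_vector(ref, cur, cx, cy, size, search):
    """Search ref for the best match to the block of cur whose corner is (cx, cy).

    Returns ((dx, dy), cost), where (dx, dy) says where the matching block
    sits in ref relative to (cx, cy).
    """
    height, width = len(ref), len(ref[0])
    best = (0, 0)
    best_cost = sad(ref, cur, cx, cy, cx, cy, size)  # cost of assuming no motion
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = cx + dx, cy + dy
            if 0 <= rx <= width - size and 0 <= ry <= height - size:
                cost = sad(ref, cur, rx, ry, cx, cy, size)
                if cost < best_cost:
                    best, best_cost = (dx, dy), cost
    return best, best_cost


def make_frame(width, height, obj_x, obj_y, obj_size=2, value=200):
    """Black picture containing one bright square object (test data only)."""
    frame = [[0] * width for _ in range(height)]
    for j in range(obj_size):
        for i in range(obj_size):
            frame[obj_y + j][obj_x + i] = value
    return frame


ref = make_frame(8, 8, obj_x=2, obj_y=3)  # earlier picture
cur = make_frame(8, 8, obj_x=4, obj_y=3)  # object has moved two pixels right
vector, cost = find_motion_vector(ref, cur, cx=4, cy=3, size=2, search=3)
# vector == (-2, 0): fetch the block from two pixels to the left in ref
```

With the vector applied the prediction is perfect and the residual for that block is zero. Without it, the picture difference would contain the object twice: once where it used to be and once where it is now.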

The remaining difficulty is the parts of the picture that are revealed when objects move out of the way. They could not be predicted from previous pictures because they were simply not visible. The simple solution is to take some information from later pictures where more of the background is revealed.

To do this, the pictures cannot be sent in the order in which they will be displayed. After the first picture of a group, the coder may proceed to send the fourth picture. Then the second and third can take information forward from the first picture, or backwards from the fourth one, using motion compensation as required to produce predicted pictures, to which the transmitted residuals are added to correct for the prediction error. The re-ordering is done using buffer memory and has to be reversed in the decoder. To help the decoder get it right, each picture carries time stamps that tell the decoder when it should be decoding and displaying the associated information. All this re-ordering inevitably causes delay.
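The shuffle is easy to model. The sketch below assumes the classic arrangement in which each bidirectionally predicted picture is held back until the later picture it depends on has gone out; H.264 permits more elaborate arrangements that this toy does not attempt. The function name and the seven-picture pattern are made up for the example, and the display index stands in for the time stamp.

```python
def transmission_order(display_types):
    """Given picture types in display order, return display indices in sending order.

    Each B picture waits until the next I or P picture (its later reference)
    has been sent.
    """
    order, waiting = [], []
    for index, kind in enumerate(display_types):
        if kind == "B":
            waiting.append(index)
        else:
            order.append(index)    # send the anchor picture first
            order.extend(waiting)  # then the B pictures that needed it
            waiting = []
    order.extend(waiting)  # trailing B pictures with no later anchor in this toy stream
    return order


display = ["I", "B", "B", "P", "B", "B", "P"]
sent = transmission_order(display)  # [0, 3, 1, 2, 6, 4, 5]
redisplayed = sorted(sent)          # the decoder restores display order
```

Counting from one, that is the first picture, then the fourth, then the second and third, as in the description above. The decoder cannot show the second picture until the fourth has arrived, which is where the delay comes from.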

Motion carried

The first picture of a group is sent as a real picture, whereas in the subsequent pictures what is sent is a residual, or picture difference. Nevertheless both of these are pixel arrays in which spatial redundancy can be found. Where H.264 differs from its predecessors is in the greater use of prediction in the spatial domain. Given that TV scans along lines and the lines are sent in sequence down the screen, it is possible to predict what some new part of the picture, and/or the motion in it, may be like using information above and to the left that is already known.
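Prediction from the pixels above and to the left can be sketched as follows. The three modes shown (vertical, horizontal and DC) are among those H.264 offers for small blocks, but this is a simplified illustration rather than the standard's procedure: the remaining directional modes are omitted, the choice of mode here is by smallest total error alone, and the function names are invented.

```python
MODES = ("vertical", "horizontal", "dc")


def predict_block(above, left, mode):
    """Predict a square block from the row of pixels above and the column to its left."""
    n = len(above)
    if mode == "vertical":    # copy the row above straight down
        return [list(above) for _ in range(n)]
    if mode == "horizontal":  # copy the left-hand column straight across
        return [[left[j]] * n for j in range(n)]
    if mode == "dc":          # flat block at the rounded mean of the neighbours
        dc = (sum(above) + sum(left) + n) // (2 * n)
        return [[dc] * n for _ in range(n)]
    raise ValueError(f"unknown mode: {mode}")


def choose_mode(block, above, left):
    """Return (mode, residual) for the mode giving the smallest total error."""
    n = len(block)
    best = None
    for mode in MODES:
        pred = predict_block(above, left, mode)
        residual = [[block[j][i] - pred[j][i] for i in range(n)] for j in range(n)]
        cost = sum(abs(r) for row in residual for r in row)
        if best is None or cost < best[0]:
            best = (cost, mode, residual)
    return best[1], best[2]


# A block of vertical stripes that continue from the already-decoded row above
above = [10, 50, 90, 130]
left = [12, 12, 12, 12]
block = [[10, 50, 90, 130] for _ in range(4)]
mode, residual = choose_mode(block, above, left)
# mode == "vertical" and the residual is all zeros
```

The encoder then only has to tell the decoder which mode it picked and send the residual. Where the picture really does continue smoothly from its neighbours, that residual is close to nothing.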

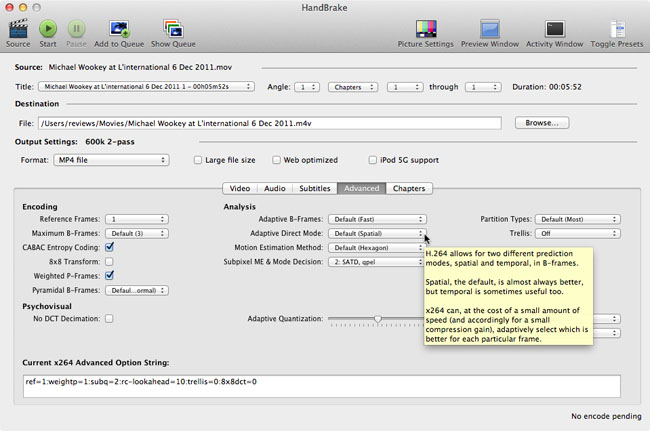

Handbrake's advanced settings that tap into H.264's features

Coding refinements and experimentation can be performed using this freeware app

Coders produce the bit rate that is demanded from them, whether the demand is reasonable or not. In the real world there is pressure to cram more channels into a given transmission and all video coders cut bit rate beyond what prediction allows by limiting the output of the spatial coders. This makes the spatial information more approximate and great care is taken to make those approximations as invisible as possible to the viewer using a model of the human visual system. This may succeed on easy material, but if the picture content is difficult to predict, the approximations become visible.
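The approximation in question is quantising: values are rounded to the nearest multiple of a step size, and only the small integer multiple is sent. The sketch below applies it directly to a handful of made-up values to keep things short, which is a simplification, since a real coder quantises transform coefficients rather than raw differences. The function names are hypothetical.

```python
def quantise(values, step):
    """Replace each value by the nearest multiple of step, expressed as an integer level."""
    levels = []
    for v in values:
        magnitude = (abs(v) + step // 2) // step  # round to nearest, halves away from zero
        levels.append(magnitude if v >= 0 else -magnitude)
    return levels


def dequantise(levels, step):
    """Decoder side: turn the levels back into approximate values."""
    return [level * step for level in levels]


values = [37, -12, 5, -3, 1, 0, -1, 2]

fine = quantise(values, step=1)    # unchanged: nothing is lost
coarse = quantise(values, step=8)  # [5, -2, 1, 0, 0, 0, 0, 0]

approx = dequantise(coarse, step=8)  # [40, -16, 8, 0, 0, 0, 0, 0]
worst_error = max(abs(a - b) for a, b in zip(values, approx))  # 4
```

The coarse version is mostly zeros and small numbers, so it costs far fewer bits, but the decoder can no longer get the original values back. Raising the step size is the lever that lets a coder hit whatever bit rate is demanded, and the error it introduces is what shows up as artefacts on difficult material.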

So if you see coding artefacts on your TV, don’t blame the codec. Instead blame the broadcasters who are short-changing viewers by using bit rates that are not high enough. After all, what is more cynical than the broadcaster that uses a high bit rate when the HD service is introduced and then lowers it after people have bought their sets? It's deplorable behaviour but then again, there are some UK broadcasters that are no strangers to scandal. ®

John Watkinson is an international consultant on digital imaging and the author of The MPEG Handbook.