
Google Brain king slashes cost of AI gear

Anything CPU can do GPU can do better

The architect behind some of Google's mammoth machine-learning systems has figured out a way to dramatically reduce the cost of the infrastructure needed for weak artificial intelligence programs, making it easier for cash-strapped companies and institutions to perform research into this crucial area of technology.

In a paper published this week entitled Deep learning with COTS HPC systems, Andrew Ng, the director of Stanford University's Artificial Intelligence Lab and a former visiting scholar at Google's skunkworks research group "Google X", and his colleagues detail how they have created a much cheaper system for running deep-learning algorithms at scale.

Machine learning is an incredibly powerful tool that lets researchers construct models that can learn from data and therefore get better over time. It underpins many advanced technologies such as speech recognition or image categorization, and is one of Google Research's main areas of investigation due to the huge promise it holds for the development of sophisticated Chocolate Factory AI.

Ng's Commodity Off-The-Shelf High Performance Computing (COTS HPC) system costs much less than Google's because it leans on GPUs rather than relying mostly on CPUs, the researchers write.

"Our system is able to train 1 billion parameter networks on just 3 machines in a couple of days, and we show that it can scale to networks with over 11 billion parameters using just 16 machines," the researchers write. "As this infrastructure is much more easily marshaled by others, the approach enables much wider-spread research with extremely large neural networks."

The COTS HPC approach appears to supersede the system Andrew Ng played with when he was at Google, and consumes a fraction of the resources. The Chocolate Factory's "DistBelief" infrastructure used 16,000 CPU cores spread across 1,000 machines to train a machine-learning model with unlabeled images culled from YouTube into recognising high-level concepts such as cats. Google's gear cost around $1m, whereas COTS HPC costs around $20,000, according to Wired.

Using this cheap rig, the researchers trained a neural network with more than 11 billion parameters – 6.5 times larger than the model in Google's previous research – and accomplished this in only three days.

They were able to do this by building a machine-learning cluster that consisted of 16 servers, each with two quad-core CPUs and four Nvidia GTX680 GPUs, linked together by Infiniband.
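For a rough sense of scale, the figures quoted in this article can be turned into per-GPU numbers. The following is a back-of-envelope sketch only: it assumes parameters are stored as 32-bit floats and are spread evenly across every GPU, neither of which is stated here, and it treats "more than 11 billion" as a lower bound.

```python
# Back-of-envelope figures for the cluster described in the article.
SERVERS = 16
GPUS_PER_SERVER = 4
PARAMETERS = 11_000_000_000   # "more than 11 billion", so a lower bound
BYTES_PER_PARAMETER = 4       # assumption: 32-bit floats

total_gpus = SERVERS * GPUS_PER_SERVER
params_per_gpu = PARAMETERS / total_gpus          # assumption: even split
gib_per_gpu = params_per_gpu * BYTES_PER_PARAMETER / 2**30

# Cost and machine counts as reported in the article.
GOOGLE_COST_USD = 1_000_000
COTS_COST_USD = 20_000
GOOGLE_MACHINES = 1_000

cost_ratio = GOOGLE_COST_USD / COTS_COST_USD
machine_ratio = GOOGLE_MACHINES / SERVERS

print(f"GPUs in cluster:        {total_gpus}")
print(f"Parameters per GPU:     {params_per_gpu:,.0f}")
print(f"Parameter memory per GPU: {gib_per_gpu:.2f} GiB")
print(f"Reported cost ratio:    {cost_ratio:.0f}x")
print(f"Machine count ratio:    {machine_ratio:.1f}x")
```

Under those assumptions each GPU would hold well under a gigabyte of parameters, though a real training run also needs room for activations, gradients, and communication buffers.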

The researchers paired this system with software written in C++ and built on top of the MVAPICH2 MPI implementation. MVAPICH2 lets a pointer to data in a GPU's memory be passed directly as an argument to a Message Passing Interface (MPI) call, so the whole system can pass information among its various GPUs via Infiniband without increasing latency. The GPU-specific code was written in Nvidia's CUDA language. Work is then divided up among separate GPUs according to a partitioning scheme.
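The article does not spell out the partitioning scheme, so the snippet below is purely illustrative: a generic one-dimensional block split that hands each worker a contiguous, near-equal slice of the work. The function name `block_partition` and its parameters are hypothetical and are not taken from the researchers' code.

```python
def block_partition(n_items, n_workers):
    """Split range(n_items) into n_workers contiguous, near-equal slices.

    Returns a list of (start, stop) pairs, one per worker. This is a
    generic illustration, not the scheme used in the paper.
    """
    if n_workers <= 0:
        raise ValueError("n_workers must be positive")
    base, extra = divmod(n_items, n_workers)
    slices = []
    start = 0
    for rank in range(n_workers):
        # The first `extra` workers take one additional item each.
        size = base + (1 if rank < extra else 0)
        slices.append((start, start + size))
        start += size
    return slices


# Example: 10 units of work spread over 4 workers.
print(block_partition(10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
```

With 64 GPUs, the same call would give each GPU a slice whose size differs from any other by at most one item.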

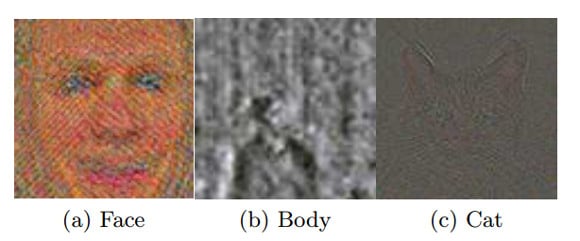

What the COTS HPC system's trainer program 'thinks' a face, upper body, and cat look like

To test their system, the researchers built a training data set out of 10 million YouTube video thumbnails that were scaled down to 200-by-200-pixel color images. They then fed these images into the neural network on the COTS HPC. The system developed an ability to evaluate various objects and classify them, and for the 1.8 billion parameter set demonstrated a best-guess success rate of 88.2 per cent for identifying human faces, 80.2 per cent for upper bodies, and 73 per cent for cats.

It was less effective on the 11 billion parameter set (86.5 per cent, 74.5 per cent, and 69.4 per cent, respectively) but the academics hope to iron these problems out with further tuning.
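The size of the thumbnail training set can be estimated from the figures given above. This is a rough sketch that assumes three color channels at one byte each, stored uncompressed; the article only says the images are 200-by-200-pixel color images.

```python
IMAGES = 10_000_000
WIDTH = HEIGHT = 200
CHANNELS = 3            # assumption: RGB
BYTES_PER_CHANNEL = 1   # assumption: 8 bits per channel, uncompressed

bytes_per_image = WIDTH * HEIGHT * CHANNELS * BYTES_PER_CHANNEL
total_bytes = IMAGES * bytes_per_image
total_tb = total_bytes / 1e12

print(f"Bytes per image: {bytes_per_image:,}")
print(f"Whole data set:  {total_tb:.1f} TB")
```

On those assumptions the raw pixel data comes to roughly a terabyte, which gives some idea of why feeding the GPUs quickly matters as much as the GPUs themselves.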

"With such large networks now relatively straight-forward to train, we hope that wider adoption of this type of training machinery in deep learning will help spur rapid progress in identifying how best to make use of these expansions in scale," they write.

Though machine learning is still a very challenging area to develop tech for, the publication of papers such as COTS HPC could make it easier for organizations and universities to come to grips with the tech – and in an age in which few companies can afford to run supercomputer-grade infrastructure to fiddle with it, that's a wonderful thing. ®