
VMware goes after biz critical apps with vSphere 5.5

Virtual SAN and networks complete grand unified theory of virtualisation - eventually

Virtual networks and virtual storage

After inhaling virtual networker Nicira for $1.26bn in July 2012, VMware has been hard at work marrying two sets of technology: the virtual networking and security features of the ESXi hypervisor, which were later broken out and bundled into the vCloud Suite cloud orchestration product, and the virtual switching and network controller it got from Nicira. These hypervisor features, called vCloud Networking and Security, or vCNS, hook into the Nicira products: the Open vSwitch virtual switch (which tucks inside the ESXi hypervisor) and the NVP OpenFlow controller, which sits outside of switches and routers and controls the forwarding and routing planes in those physical devices. Mash all this functionality up and you get NSX.

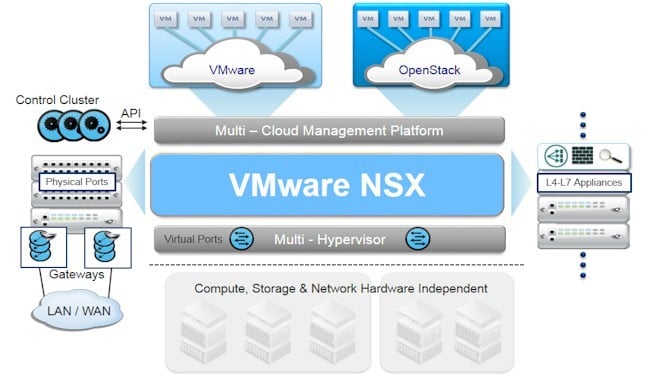

Block diagram of the NSX virtual networking stack from VMware

With NSX, VMware is maintaining the open stance of Nicira even if a lot of the code behind it remains closed. (Exactly which bits are open and which are closed was not disclosed at press time.) The idea behind NSX, says Gilmartin, is to virtualize the network from Layer 2 all the way up to Layer 7, atop any existing Ethernet network infrastructure in the data center and without disrupting that network.

"We run Layer 2 through 7 services in software, and now you can deploy network services in seconds instead of days or weeks," he says.

This is exactly the playbook VMware ran for server virtualization a decade ago, of course. VMware is promising that NSX will hook into any server virtualization hypervisor, not just its own ESXi, will run on any network hardware, will interface with any cloud management tool, and will work with applications without modification. And, as El Reg pointed out back in March when VMware previewed its plans for NSX, there is another parallel with ESXi. As network virtualization controllers are commoditized and possibly open sourced, VMware will eventually be able to give the controller away if necessary to compete, while still charging a licensing fee for the management console (in this case, NSX Manager) to unlock, in golden screwdriver fashion, the features of the network controller.

The materials VMware has prepared ahead of the NSX launch are a bit thin on details, and our coverage from March, guesses and all, contains more information than VMware's backgrounder. A recap is certainly in order, along with whatever additional information we have been able to get our hands on ahead of the launch.

NSX rides on top of a hypervisor itself and integrates with other hypervisors in the data center to provide logical L2 switches and logical L3 routers. Their forwarding and routing tables are centralized in the NSX controller and can be changed programmatically as network conditions and applications dictate, rather than manually as is required today.
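To make the "centralized tables, changed programmatically" idea concrete, here is a toy model of a logical L2 switch whose MAC table lives in a controller rather than in any physical box. It is a sketch under stated assumptions only: `ToyController`, `LogicalSwitch`, `attach_vm`, `migrate_vm`, and `lookup` are hypothetical names invented for illustration, not the NSX API, and the model ignores L3 routing, tunnelling, and how tables are pushed to hosts.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LogicalSwitch:
    """A logical L2 segment whose MAC table is held by the controller."""
    name: str
    # MAC address -> hypervisor host currently running the VM with that MAC
    mac_table: dict[str, str] = field(default_factory=dict)


class ToyController:
    """Hypothetical centralized controller; NOT the NSX API."""

    def __init__(self) -> None:
        self.switches: dict[str, LogicalSwitch] = {}

    def create_switch(self, name: str) -> LogicalSwitch:
        # A logical switch is just a named table; no physical switch is touched.
        self.switches[name] = LogicalSwitch(name)
        return self.switches[name]

    def attach_vm(self, switch: str, mac: str, host: str) -> None:
        # One programmatic call updates the table every host consults.
        self.switches[switch].mac_table[mac] = host

    def migrate_vm(self, switch: str, mac: str, new_host: str) -> None:
        # When a VM moves, only the central entry changes.
        table = self.switches[switch].mac_table
        if mac not in table:
            raise KeyError(f"{mac} is not attached to {switch}")
        table[mac] = new_host

    def lookup(self, switch: str, mac: str) -> str | None:
        # The question a host's virtual switch asks: which host owns this MAC?
        return self.switches[switch].mac_table.get(mac)


if __name__ == "__main__":
    ctrl = ToyController()
    ctrl.create_switch("web-tier")
    ctrl.attach_vm("web-tier", "00:50:56:aa:bb:01", "esxi-01")
    ctrl.migrate_vm("web-tier", "00:50:56:aa:bb:01", "esxi-02")
    print(ctrl.lookup("web-tier", "00:50:56:aa:bb:01"))  # esxi-02
```

The point of the design is that a VM move becomes a single update to one table instead of a round of manual reconfiguration on every switch in the path.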

The core NSX controller integrates with other cloud management tools through a set of RESTful APIs; VMware named OpenStack and its own vCloud Director in March, but is less specific in its backgrounder. The add-ons to the core NSX include logical firewalls, load balancers, and virtual private network servers. And VMware expects a partner ecosystem to evolve that plugs alternative network applications into the NSX framework, giving customers a choice of whose logical switches, routers, load balancers, firewalls, and VPN servers they use.

The NSX stack is expected to be available in the fourth quarter, so VMware is not yet talking about packaging and pricing. If history is any guide, there will be Standard, Advanced, and Enterprise Editions of the network virtualization stack, and it would not be surprising to see the software have extra features or cost less when used in environments based solely on the ESXi-vCenter-vCloud stack. It is not yet clear which virtual switches NSX will support. Open vSwitch supported Hyper-V, KVM, and XenServer, and VMware said in March it would be integrating it with ESXi; the spirit of openness would suggest that the virtual switches from Microsoft, Cisco Systems, IBM, and NEC should join the compatibility matrix at some point.

In his keynote announcing NSX, CEO Pat Gelsinger will focus on Citigroup, eBay, GE Appliances, and WestJet as early adopters of the technology, and on the more than 20 partners that have signed up so far to snap their products into the NSX controller.

Like SANs through the hourglass. . . .

On the storage front, VMware is moving the vSAN virtual storage area network software that has been available in a private beta since last year to an open public beta. The company is also providing more details about how vSAN will be packaged, what workloads it is aimed at, and how it will work alongside real SANs in the data center.

The vSAN software bears some resemblance to the vSphere Storage Appliance (VSA) software that VMware created for baby server clusters, generally located in remote offices. That VSA software, which debuted two years ago, is currently limited to a three-node server cluster and was really designed for customers who would never buy a baby SAN for those remote offices. With Citrix Systems, Nutanix, and others offering virtual SANs aimed at virtual desktop infrastructure and other workloads, VMware had to do something to compete. vSAN will not be a toy, but the real deal, with more scalability and performance and intended for data center workloads.

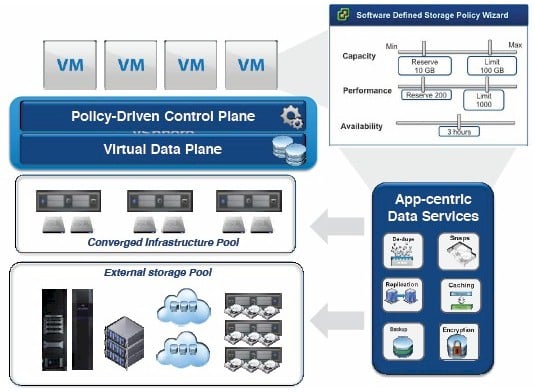

vSAN is the converged storage layer in the storage scheme VMware has cooked up

vSAN's design point, says Gilmartin, is to span a vSphere cluster, which tops out at 32 servers and 4,000 virtual machines using ESXi 5.1 in a single management domain. (This is presumably the same with ESXi 5.5.) The vSAN code takes all of the disk and flash storage in the servers in the cluster and creates pools that all of the VMs in the cluster can see and use. vSAN uses flash for both read and write caching, and disk drives for persistent storage.
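The pooling described above reduces to simple arithmetic: every host's flash adds to a cluster-wide cache, and every host's disk adds to a cluster-wide persistent pool that all VMs can see. The sketch below models only that, under stated assumptions: `Host` and `pool_cluster` are hypothetical names, capacities are raw gigabytes, the 32-host limit is the vSphere 5.1 figure quoted in the article (presumed, not confirmed, for 5.5), and data protection overhead is not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass

# vSphere 5.1 cluster limit quoted in the article; assumed to carry over to 5.5.
MAX_CLUSTER_HOSTS = 32


@dataclass
class Host:
    name: str
    ssd_gb: list[int]  # flash devices: read and write cache
    hdd_gb: list[int]  # disk drives: persistent storage


def pool_cluster(hosts: list[Host]) -> dict[str, int]:
    """Aggregate every host's local devices into two cluster-wide raw pools.

    Raw figures only: protection overhead (mirroring, RAID) is not modelled.
    """
    if len(hosts) > MAX_CLUSTER_HOSTS:
        raise ValueError(f"a vSphere cluster tops out at {MAX_CLUSTER_HOSTS} hosts")
    return {
        # Flash adds cache, not data store capacity.
        "cache_gb": sum(sum(h.ssd_gb) for h in hosts),
        # Only disk drives contribute to the persistent pool every VM can see.
        "capacity_gb": sum(sum(h.hdd_gb) for h in hosts),
    }


if __name__ == "__main__":
    cluster = [Host(f"esxi-0{i}", [200], [1000, 1000]) for i in range(1, 4)]
    print(pool_cluster(cluster))  # {'cache_gb': 600, 'capacity_gb': 6000}
```

Three hosts, each with one 200GB SSD and two 1,000GB disks, yield 600GB of cache and 6,000GB of raw persistent capacity; adding a fourth host simply grows both sums.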

"vSAN is really simple to turn on, just one click, and we are really pointing this at clusters supporting virtual desktops and lower-tier test and development environments," says Gilmartin.

Just in case you think VMware is going to take on parent EMC with its physical SANs, that is not the intent. But of course, if the pricing on vSAN software plus local disk and flash storage is compelling enough in combination, and the performance is respectable, then there is no question that vSANs will compete against real SANs as data stores for vSphere workloads. Customers will be able to point VMs at either vSAN or real SAN storage, so it is not an either-or proposition.

While NSX virtual networking is open (in terms of supporting multiple hypervisors, cloud controllers, switches, and routers), vSAN currently only works with the ESXi hypervisor, and specifically only with the new 5.5 release. And that is because it needs to be tightly integrated into the ESXi kernel to work properly.

vSAN uses solid state disks and disk drives on the servers, and protects against data loss from a drive, host, or network failure with distributed RAID and cache mirroring. To add capacity to the vSAN, you add drives to existing hosts or add new ESXi hosts, and their capacity becomes available to the data store pool. Perhaps most importantly, each VM in a cluster has its own storage policy attached to it that controls its vSAN slice, so its capacity and performance needs are always met, and the flash and disk slices are automagically load balanced across the cluster. vSAN is aware of VMotion and Storage VMotion (which respectively allow for the live migration of VMs and of their underlying storage), and integrates with vSphere Distributed Resource Scheduler to keep compute and storage slices working in harmony. vSAN also has hooks into the Horizon View VDI broker and vCenter Site Recovery Manager, and is controlled from inside the vCenter management console.

vSAN has no particular hardware dependencies and works with any server that supports the new ESXi 5.5 hypervisor. vSAN will bear the same 5.5 release number as the new ESXi and vSphere, and will come in two editions when it becomes generally available in the first half of 2014. vSAN Standard Edition will have a cap of 300GB of SSD capacity per host, while vSAN Advanced Edition will have an unlimited amount of SSD storage available for each server node in the cluster and will also sport higher performance. (It is not clear if that means SSDs are driving that higher performance, or if VMware is somehow otherwise goosing the Advanced Edition.)

vSAN will work on server nodes with Gigabit Ethernet links between them, but VMware recommends 10Gb/sec Ethernet connectivity for decent performance. Each node needs a SATA or SAS host bus adapter, or a RAID controller with a pass-through or HBA mode, plus at least one SSD and one disk, and VMware recommends that SSDs make up at least 10 percent of total capacity for respectable performance. vSAN requires at least three nodes, and during beta testing the code will top out at a mere eight nodes, though again the design scales a lot further than that.
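The requirements scattered through the last few paragraphs can be gathered into a pre-flight check. This is a hypothetical sketch, not a VMware tool: `Node` and `check_cluster` are invented names, the HBA/controller requirement is not modelled, and "at least 10 percent of total capacity" is read here as SSD divided by SSD plus disk across the cluster, which is an assumption about the article's wording. Hard rules (three-node minimum, eight-node beta ceiling, at least one SSD and one disk per node, the 300GB per-host SSD cap in Standard Edition) are reported as errors; performance recommendations are reported as warnings.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_NODES = 3               # vSAN requires at least three nodes
BETA_MAX_NODES = 8          # the beta code tops out at eight nodes
STANDARD_SSD_CAP_GB = 300   # Standard Edition: SSD cap per host
MIN_SSD_RATIO = 0.10        # recommendation: SSD >= 10% of total capacity
RECOMMENDED_GBPS = 10       # Gigabit works, 10Gb/sec recommended


@dataclass
class Node:
    ssd_gb: int    # total SSD on this host
    hdd_gb: int    # total disk on this host
    nic_gbps: int  # Ethernet link speed between nodes


def check_cluster(nodes: list[Node], edition: str = "standard",
                  beta: bool = True) -> tuple[list[str], list[str]]:
    """Return (errors, warnings): hard rules vs. performance recommendations."""
    errors: list[str] = []
    warnings: list[str] = []
    if len(nodes) < MIN_NODES:
        errors.append(f"need at least {MIN_NODES} nodes, got {len(nodes)}")
    if beta and len(nodes) > BETA_MAX_NODES:
        errors.append(f"the beta supports at most {BETA_MAX_NODES} nodes")
    for i, n in enumerate(nodes):
        if n.ssd_gb <= 0 or n.hdd_gb <= 0:
            errors.append(f"node {i}: needs at least one SSD and one disk")
        if edition == "standard" and n.ssd_gb > STANDARD_SSD_CAP_GB:
            errors.append(f"node {i}: Standard Edition caps SSD at {STANDARD_SSD_CAP_GB}GB")
        if n.nic_gbps < RECOMMENDED_GBPS:
            warnings.append(f"node {i}: 10Gb/sec Ethernet recommended")
    ssd = sum(n.ssd_gb for n in nodes)
    total = ssd + sum(n.hdd_gb for n in nodes)
    # Assumption: "total capacity" read as SSD + disk across the cluster.
    if total and ssd / total < MIN_SSD_RATIO:
        warnings.append("SSD is under 10 percent of total capacity")
    return errors, warnings


if __name__ == "__main__":
    print(check_cluster([Node(200, 1000, 10)] * 3))  # ([], [])
```

Splitting errors from warnings mirrors the article's own distinction between what vSAN requires and what VMware merely recommends for respectable performance.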

You need vSphere Standard, Enterprise, or Enterprise Plus at the 5.5 release level, as well as vCenter 5.5, to use vSAN. You can also use the vCloud Suite, which includes vSphere 5.5 in its latest incarnation. The vSAN beta is available from VMware. Pricing has not been set for vSAN yet, and VMware is not giving any hints, either. ®