
Oracle revs up Sparc M6 chip for seriously big iron

Bixby interconnect scales to 96 sockets

Hot Chips The new Sparc M6 processor, unveiled at the Hot Chips conference at Stanford University this week, makes a bold statement about Oracle's intent to invest in very serious big iron and go after Big Blue in a big way.

Oracle's move is something that the systems market desperately needs, particularly for customers where large main memory is absolutely necessary. And – good news for Ellison & Co. – with every passing day, big memory computing is getting to be more and more important, just like in the glory days of the RISC/Unix business.

It looks like it took Oracle to finally get a decent microprocessor and interconnect development team together for Sun Microsystems, which is a bit of a shame for Sunners who had many of the right conceptual ideas for a server much like the Sparc M6 machines in mind when they created the UltraSparc-III more than a decade and a half ago. After successive waves of chip development teams with big ambitions that were not met – the ill-fated "Millennium" UltraSparc-V and "Rock" UltraSparc-RK projects being the biggest failures, but there are others – the microelectronics division of Big Larry has settled down and delivered three processors and increasingly sophisticated interconnects and system scalability.

The Sparc M6, unveiled at Hot Chips, makes four.

To you system enthusiasts out there who crave options and who love big iron, this is a welcome return of true engineering. It remains to be seen if Oracle can manufacture and sell these systems, particularly since they only run Solaris and not Linux or Windows, but any machine that can scale to 96 sockets is bound to get the attention of three-letter government agencies and large corporations who are looking for very large memory address spaces for applications to play in.

While the S3 core at the heart of the latest Sparc T4, T5, M5, and M6 processors is modestly powerful compared to Xeon, Power, System z, and even Itanium (yes, Itanium) alternatives, there are plenty of workloads where the S3 core is going to do just fine.

The Oracle M6 processor

And thus, if Oracle wants to push Sparc M6 systems aggressively, it will have to come out and say that for many customers these machines are better than a Xeon-based Exadata cluster and even more suitable than a four-socket Xeon E7 machine for hosting an in-memory database. Should Oracle do this, El Reg thinks that the Sparc M family of machines (meaning the ones made by Oracle, not the ones made by Fujitsu, which have an entirely different processor and interconnect in the two most recent product lines after sharing a Fujitsu design for the past several generations) could be the means of a resurgence in big iron for the former Sun Microsystems.

In other words, if the Sparc M6 and Bixby interconnect work as advertised, hardware-loving Larry doesn't need to build Exadata clusters any more and can focus on single database images running on big iron. Oracle doesn't need Intel. It just needs to sell Sparc and Solaris, like Sun did during the dot-com boom.

This, of course, is probably not going to happen. Oracle has invested far too much in its Exa server line, and unless the salespeople at Oracle are motivated to sell the Sparc M servers where they might otherwise sell Exa machines for database, web application, or analytics workloads, all of this engineering will be wasted effort.

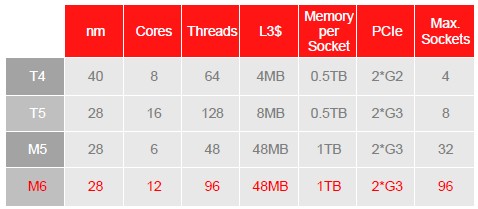

The Sparc T and M chips have come a long way in the three years that Oracle has owned Sun and gotten its Sparc chip house in order. Here's how the four processors stack up in terms of their basic feeds and speeds:

The latest Sparc T and M chips, compared

The Sparc T series chips are aimed at entry and midrange systems, spanning from one to eight sockets and up to 512GB of memory per socket. The Sparc T5 shrank to a 28-nanometer process – all of Oracle's server chips and interconnect chips are currently etched by Taiwan Semiconductor Manufacturing Corp (aka TSMC), while Sun used Texas Instruments as its fab – and that allowed Oracle to double up to sixteen cores and 128 threads on a die.

With the jump from the Sparc M5 to the M6, Oracle is not changing process from 28 nanometers, but is making a larger chip with twice as many cores. But again, the M series processors, while based on the same eight-threaded S3 cores, have a different number of cores per die and much larger L3 cache and main memories feeding into those cores. And thanks to the on-chip SMP that is similar to that used in the Sparc T5 chips, the M5 and M6 chips can be used to gluelessly link up to eight sockets into a single system image. And with the M6 machines, the Bixby interconnect has been boosted to scale from 32 sockets up to 96 sockets – more on that in a bit.

As a reminder, the S3 core has a dual-issue, out-of-order pipeline. Each core has eight threads using Sun/Oracle's implementation of simultaneous multithreading, which virtualizes that pipeline and makes it look like eight pipelines instead of one to the operating system.

The chip's threads, as these virtual pipelines are called, can be dynamically allocated, which means instructions that have higher priority – what Oracle calls a critical thread – can hog as many resources as they need, up to the full resources of the core. In effect, an S3 core or all of the S3 cores in a die or in a system can be set up with critical threads and run as if they were a single-threaded machine. This dynamic threading was new with the Sparc T4, and it has made a real difference to the viability of the Sparc server line; many legacy Sparc/Solaris applications are resource hogs and did not make good use of all those threads.
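To make the critical-thread idea concrete, here is a toy Python model of how a dual-issue core's issue slots might be shared among its eight hardware threads. Treating issue slots as the only shared resource, the equal-share policy, and the `HwThread` and `allocate` names are all illustrative assumptions; this is not Oracle's documented S3 arbitration logic.

```python
from dataclasses import dataclass

# Toy model only: issue slots stand in for all of a core's shared resources.
CORE_ISSUE_SLOTS = 2  # the S3 pipeline is dual-issue


@dataclass
class HwThread:
    name: str
    critical: bool = False  # hypothetical flag for a "critical thread"


def allocate(threads: list[HwThread], slots: float = CORE_ISSUE_SLOTS) -> dict[str, float]:
    """Return the share of the core's issue slots each active thread receives."""
    if not threads:
        return {}
    critical = [t for t in threads if t.critical]
    # If any thread is critical, only critical threads get slots; otherwise all share.
    winners = critical if critical else threads
    share = slots / len(winners)
    return {t.name: (share if (t.critical or not critical) else 0.0) for t in threads}


# Eight ordinary threads split the core evenly.
print(allocate([HwThread(f"t{i}") for i in range(8)]))
# One critical thread takes the whole core, as if it were single-threaded.
print(allocate([HwThread("db", critical=True)] + [HwThread(f"t{i}") for i in range(7)]))
```

The all-or-nothing split is deliberately crude; it only illustrates why a thread-hungry-looking chip can still serve a single resource-hog process well.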

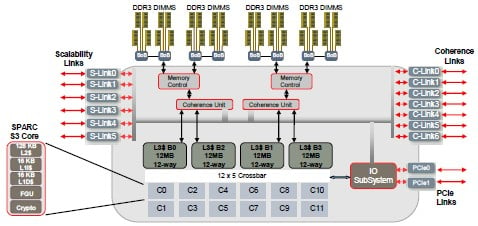

Block diagram of the Sparc M6 chip

The S3 cores on the M6 chip have 16KB L1 instruction and data caches, which is a little skinny by modern standards, plus a 128KB L2 cache, which is also a bit skinny. The M6 chip has 48MB of L3 cache on the die, which is shared by all twelve cores on the processor. Given the doubling of cores, you might have expected Oracle to double the L3 cache as well, but with 4.27 billion transistors on the chip, the M6 is already a monster.
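The cache trade-off is easy to put in numbers. This quick sketch takes the M6's four 12MB L3 segments and twelve cores from the text, and the six-core, 48MB-L3 configuration of the M5 from Oracle's published M5 specs; the even per-core split is a simplifying assumption, since the L3 is shared.

```python
# From the text: four 12MB L3 segments shared by twelve cores on the M6.
# M5 figures (six cores, 48MB L3) come from Oracle's M5 specs, not this article.
L3_SEGMENT_MB = 12
L3_SEGMENTS = 4


def l3_per_core_mb(total_l3_mb: float, cores: int) -> float:
    """Average L3 capacity per core, assuming an even split of a shared cache."""
    return total_l3_mb / cores


m6_l3_mb = L3_SEGMENT_MB * L3_SEGMENTS
print(m6_l3_mb)                      # 48
print(l3_per_core_mb(48, 6))         # M5: 8.0
print(l3_per_core_mb(m6_l3_mb, 12))  # M6: 4.0
```

Same cache, twice the cores: each M6 core gets half the L3 that an M5 core had on average.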

It is reasonable to guess that with a shrink to 20 nanometers with the supposed Sparc M7, Oracle will probably focus on ramping up the clock speed and boosting the caches as well as adding more on-chip accelerators to better position the M series chips against their Power and Xeon alternatives at the high end of the server racket. At 96 sockets, 1,152 cores, and 9,216 threads in a single system image with the fully extended Bixby interconnect, Oracle doesn't need to focus on scalability for a while – unless, of course, those hyperscale data center operators and three-letter agencies ask it to.
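The core and thread counts follow directly from twelve cores per socket and eight threads per core. This sketch runs the multiplication across each of the Bixby socket counts quoted later in the article (16, 24, 32, 48, 64, and 96); the `system_totals` helper is just an illustrative name.

```python
def system_totals(sockets: int, cores_per_socket: int = 12, threads_per_core: int = 8) -> tuple[int, int]:
    """Return (cores, threads) for a single-system-image M6 box."""
    cores = sockets * cores_per_socket
    return cores, cores * threads_per_core


for sockets in (16, 24, 32, 48, 64, 96):
    cores, threads = system_totals(sockets)
    print(f"{sockets:>3} sockets: {cores:>5} cores, {threads:>5} threads")
```

The 96-socket line prints 1,152 cores and 9,216 threads, matching the figures above.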

The Sparc M6 has four DDR3 memory controllers on the die, with each one feeding out to two memory-buffer chips that in turn each have two memory channels supporting two DDR3 memory sticks. That works out to 16 channels and 32 slots, and with 32GB memory sticks, that gives you a maximum of 1TB per socket. So that means the top-end Sparc M6 server can have 96TB to feed those 1,152 cores.
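The memory fan-out multiplies out as follows. Every factor here comes from the paragraph above; the only convention added is treating 1TB as 1,024GB.

```python
CONTROLLERS = 4              # DDR3 controllers on the die
BUFFERS_PER_CONTROLLER = 2   # memory-buffer chips per controller
CHANNELS_PER_BUFFER = 2      # memory channels per buffer chip
DIMMS_PER_CHANNEL = 2        # memory sticks per channel
DIMM_GB = 32                 # largest stick size quoted
SOCKETS = 96                 # fully extended Bixby system

channels = CONTROLLERS * BUFFERS_PER_CONTROLLER * CHANNELS_PER_BUFFER
slots = channels * DIMMS_PER_CHANNEL
gb_per_socket = slots * DIMM_GB
system_tb = gb_per_socket * SOCKETS / 1024

print(channels, slots, gb_per_socket, system_tb)  # 16 32 1024 96.0
```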

The dozen cores on the M6 chip are linked through a 12-by-5 crossbar interconnect, with four of the pipes linking the cores to each of the four 12MB L3 cache segments; the fifth pipe is used to link the cores to the I/O subsystem, which feeds out to two PCI-Express 3.0 interfaces on the die. Those controllers support two x8 ports of PCI traffic each. The chip also has seven scalability links (SLs) that implement the glueless SMP interconnect, which hooks every socket in a cluster to every other socket. "Small-scale coherence cannot scale up," explained Ali Vahidsafa, senior principal hardware engineer at Oracle who was in charge of the M6 design, "and it should be obvious that large-scale coherence is complicated."
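Why seven scalability links? If every socket in an eight-socket SMP group gets a direct link to every other socket, each socket needs exactly seven. This sketch counts the links in such a full mesh and also tallies the PCI-Express ports described above; it assumes each SL goes to a distinct socket, which is what "every socket to every other socket" implies.

```python
from itertools import combinations

SOCKETS_PER_SMP = 8  # glueless SMP limit stated in the text


def full_mesh(n: int) -> list[tuple[int, int]]:
    """Every unordered socket pair gets its own point-to-point link."""
    return list(combinations(range(n), 2))


links = full_mesh(SOCKETS_PER_SMP)
links_per_socket = {s: sum(s in pair for pair in links) for s in range(SOCKETS_PER_SMP)}

print(len(links))                      # 28
print(set(links_per_socket.values()))  # {7}: one link per SL

# I/O: two PCI-Express 3.0 interfaces, each with two x8 ports.
pcie_ports = 2 * 2
pcie_lanes = pcie_ports * 8
print(pcie_ports, pcie_lanes)          # 4 32
```

A full mesh grows as n(n-1)/2 links, which is one reason glueless SMP stops at eight sockets and something like Bixby is needed beyond that.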

No kidding. But Oracle is taking a stab at it just the same.

The Sparc M6 chip also has seven coherence links (CLs), which reach out to the Bixby interconnect chips to scale beyond eight-way SMP to lash multiple four-way machines together in a giant NUMA web. Depending on how many Bixby chips are used, Oracle can create machines with 16, 24, 32, 48, 64, or 96 sockets, all with a single shared memory space. The Sparc M6 chip has an astonishing 4.1Tb/sec of aggregate bandwidth feeding into and out of those SL and CL ports.

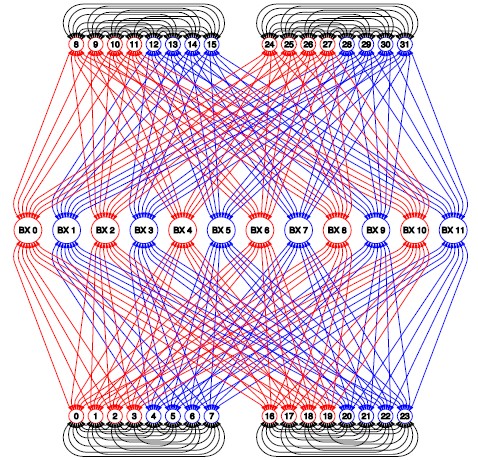

Here's what the schematic of a 32-socket machine using twelve Bixby interconnect chips looks like:

The Bixby interconnect for the M6 servers

The Bixby interconnect does not establish everything-to-everything links at a socket level, so as you build progressively larger machines, it can take multiple hops to get from one node to another in the system. (This is no different than the NUMAlink 6 interconnect from Silicon Graphics, which implements a shared memory space using Xeon E5 chips, or the "Aries" XC interconnect invented by Cray and now owned by Intel or the Fujitsu "Tofu" interconnect, neither of which implements shared memory across nodes. If you want to scale, you have to hop.)
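The cost of hopping can be shown with a breadth-first search over a deliberately tiny, hypothetical topology: two fully meshed eight-socket groups joined by a single switch node that connects only to socket 0 and socket 8. This is not Bixby's real wiring, which spreads links across many switch chips; it just shows how hop counts climb once traffic leaves a glueless group.

```python
from collections import deque
from itertools import combinations


def add_edge(graph: dict, a, b) -> None:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


def hops(graph: dict, src, dst):
    """Breadth-first search: fewest link traversals from src to dst, or None."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical layout: sockets 0-7 and 8-15 are each fully meshed,
# and one "switch" node bridges socket 0 to socket 8.
graph: dict = {}
for group in (range(0, 8), range(8, 16)):
    for a, b in combinations(group, 2):
        add_edge(graph, a, b)
add_edge(graph, 0, "switch")
add_edge(graph, 8, "switch")

print(hops(graph, 1, 2))  # 1: same glueless group
print(hops(graph, 1, 9))  # 4: 1 -> 0 -> switch -> 8 -> 9
```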

The Bixby coherence-switch chips hold L3 cache directories for all of the processors in a given system, and a processor making a memory request has to use the CLs to find the proper processor SMP group in the system, and then the processor socket in that SMP group that has the memory it needs. It then hops to the closest Bixby chip linked to that segment of the machine (not directly, but by passing over CLs and then SLs back through CLs), and goes down the proper SL to reach that data in L3 cache, or to use the local main memory associated with that socket to retrieve it from DRAM if it is not in cache.
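The general idea of a coherence directory, a lookup table that says which socket's cache holds a line and otherwise points at the line's home memory, can be sketched in a few lines of Python. This is a generic textbook directory reduced to its bare lookup, not Oracle's Bixby protocol: the 64-byte line size, the round-robin address interleave, and the `CoherenceDirectory` class are hypothetical, and real protocols also track sharers, ownership states, and invalidations.

```python
from dataclasses import dataclass, field

LINE_BYTES = 64  # hypothetical cache-line size for this sketch


@dataclass
class CoherenceDirectory:
    """Toy directory: which socket's L3 currently holds each cache line."""
    sockets: int
    holders: dict[int, int] = field(default_factory=dict)

    def home_socket(self, addr: int) -> int:
        # Hypothetical interleave: consecutive lines rotate across sockets.
        return (addr // LINE_BYTES) % self.sockets

    def record_fill(self, addr: int, socket: int) -> None:
        # Note that a socket's L3 now caches the line containing addr.
        self.holders[addr // LINE_BYTES] = socket

    def lookup(self, addr: int) -> tuple[str, int]:
        # Cached somewhere? Send the request to that socket's L3.
        # Otherwise fall back to DRAM on the line's home socket.
        line = addr // LINE_BYTES
        if line in self.holders:
            return ("L3", self.holders[line])
        return ("DRAM", self.home_socket(addr))


directory = CoherenceDirectory(sockets=32)
print(directory.lookup(0x1000))  # ('DRAM', 0)
directory.record_fill(0x1000, 17)
print(directory.lookup(0x1010))  # ('L3', 17): same 64-byte line
print(directory.lookup(0x1040))  # ('DRAM', 1): next line, next home socket
```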

The machine is a NUMA nest of SMP servers. And to get around the obvious delays from hopping, Oracle has overprovisioned the Bixby switches so they have lots of bandwidth. The SLs have four lanes of traffic both ways running at 12Gb/sec, but the CLs have twelve lanes running at that speed. Each Bixby has links to sixteen CLs. In the 32-way machine shown above, each Bixby hooks to four of the eight sockets in each eight-way SMP, and it looks like it can take anywhere from one to four hops to get from one processor to another in the system.
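The per-link numbers work out as follows, using only the lane counts and the 12Gb/sec lane rate quoted above. These are raw per-direction signalling rates; encoding and protocol overhead are ignored, so usable bandwidth will be lower. The last lines check that four sockets in each of the 32-socket box's four eight-socket SMP groups gives sixteen links per Bixby chip.

```python
LANE_GBPS = 12  # per lane, each direction, as quoted


def link_gbps(lanes: int, lane_gbps: int = LANE_GBPS) -> int:
    """Raw per-direction signalling rate; ignores encoding and protocol overhead."""
    return lanes * lane_gbps


sl_gbps = link_gbps(4)
cl_gbps = link_gbps(12)
print(sl_gbps, cl_gbps, cl_gbps // sl_gbps)  # 48 144 3

# 32-socket layout: each Bixby reaches four sockets in each of the
# 32 // 8 = 4 eight-socket SMP groups.
smp_groups = 32 // 8
links_per_bixby = smp_groups * 4
print(links_per_bixby)  # 16
```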

The Sparc M5-32 machine that Oracle already ships using a dozen Bixby chips can have four physically partitioned hardware domains (one for each SMP in the box), and has 3.1TB/sec of aggregate bandwidth on the CLs and 1.5TB/sec of aggregate bandwidth on the SLs.

It is not clear when Oracle will ship the Sparc M6 processor or the machines using it. But Vahidsafa did say that Oracle intended to support both Sparc M5 and M6 processors side-by-side in the same system, allowing for a gradual upgrade of processors when customers buy high-end Oracle Sparc boxes. This is something that Sun used to do, and that IBM and Intel do not allow with their respective Power and Xeon chips and chipsets. ®