
The future of PCIe: Get small, speed up, think outside the box

Plans range from smartphone storage to InfiniBand competitor in HPC

IDF13 The near-ubiquitous PCI Express interconnect, aka PCIe, is finding its way into mobile devices, working its way into cabling, and is on schedule to double its throughput in 2015 to a jaw-dropping 64 gigabytes per second, counting both directions, in 16-lane configurations.

So said Ramin Neshati, the Marketing Workgroup Chair of the PCI-SIG, when The Reg sat down with him during this week's IDF13 in San Francisco.

This June, Neshati reminded us, the PCI-SIG announced the M-PCIe spec, which enables the PCIe architecture to operate over M-PHY, the high-bandwidth serial physical-layer technology of the MIPI (Mobile Industry Processor Interface) Alliance.

On the same day that the PCI-SIG closed and announced the M-PCIe spec, two IP vendors, Synopsys and Cadence, announced that they had working IP based on it. "Which is," Neshati told us, "to my recollection the record. I haven't seen anybody on the same day the spec was closed announce that they had IP on it."

The M-PCIe spec supports three "Gears": Gear 1 operates at 1.25 to 1.45 Gbps, Gear 2 at 2.5 to 2.9 Gbps, and Gear 3 at 5.0 to 5.8 Gbps, all tuned to work on the short-channel topologies that the M-PHY is designed to support.
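To put the Gear figures in byte terms, here is a rough per-lane conversion. It assumes M-PHY's 8b/10b line coding (eight payload bits for every ten on the wire) and ignores M-PCIe packet and protocol overhead, so treat the results as theoretical ceilings rather than measured throughput. The names `GEARS` and `payload_mbytes_per_sec` are our own, for illustration only.

```python
# Hypothetical helper: converts the M-PCIe Gear line rates quoted above
# into an approximate one-way payload rate per lane.
# Assumption: 8b/10b line coding (8 payload bits per 10 line bits);
# packet and protocol overheads are ignored.

GEARS = {
    1: (1.25, 1.45),  # Gbps, low and high end of the quoted range
    2: (2.5, 2.9),
    3: (5.0, 5.8),
}

ENCODING_EFFICIENCY = 8 / 10  # assumed 8b/10b


def payload_mbytes_per_sec(line_rate_gbps: float) -> float:
    """Approximate one-way payload rate per lane, in megabytes per second."""
    payload_gbps = line_rate_gbps * ENCODING_EFFICIENCY
    return payload_gbps * 1000 / 8  # Gb/s -> MB/s (decimal units)


if __name__ == "__main__":
    for gear, (low, high) in GEARS.items():
        print(f"Gear {gear}: {payload_mbytes_per_sec(low):.0f}"
              f"-{payload_mbytes_per_sec(high):.0f} MB/s per lane")
```

Run as a script, this prints 125-145 MB/s for Gear 1, 250-290 MB/s for Gear 2 and 500-580 MB/s for Gear 3, per lane and per direction.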

"Basically, the scope of the M-PCIe spec is limited," Neshati said. "That's why it got released so quickly." This simplicity, together with M-PCIe's ability to work across multiple usage scenarios in a single system without reconfiguration, helps developers of mobile hardware such as handsets or tablets easily reap the benefits of PCIe in small form-factor devices. "You can 'do once, use many'," he told us, which shortens development cycles.

"In an environment where things turn quickly, like smartphones and tablets, this is ideal because it allows you to keep that cadence going," he said.

Although the MIPI Alliance suggests that M-PCIe can be used for chip-to-chip interconnects in a mobile system, and promotes the UFS (universal flash storage) interconnect for – you guessed it – storage, Neshati sees no reason M-PCIe can't be extended to storage, as well. "There's nothing precluding you from using it for storage," he said.

Neshati was loath to disparage UFS, and noted that since the two share the same PHY their native bit rates are identical, but he did point out that PCIe does have an advantage over UFS in that the latter doesn't yet have a software ecosystem to support it. "There may yet be one," he said, "but it has to come about. There is an existing ecosystem around PCI Express, so from a software point of view it's already solved, it's already there." PCIe already has tools for measuring performance and compliance, he said, while UFS has yet to benefit from that sort of ecosystem.

Thinking outside the box

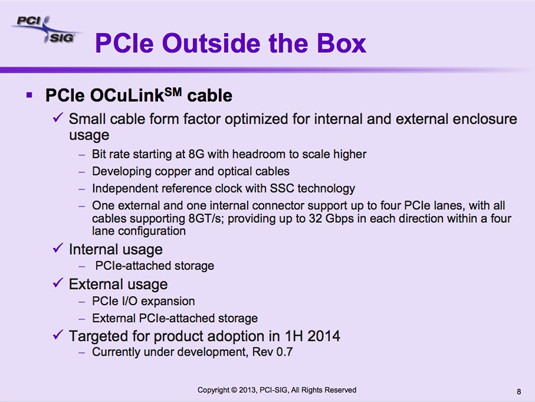

The PCI-SIG is also working on a new spec that will extend PCIe over cabling, either inside a system to connected storage devices or externally to any sort of PCIe-supported device, be it storage, an enclosure with PCIe slots, or some other implementation. This spec, now under development, is called "OCuLink", short for optical copper link.

"Usually PCI Express is a technology that's in the box," Neshati said, "connectivity that's chip-to-chip or on a board. OCuLink is the cable version of PCI Express."

Despite its name, the first iteration of OCuLink will be copper-based, but is being specced so that future iterations can be based on optical cabling. It's based on PCIe generation-three tech, and as Neshati put it, it's being "future-proofed" for future generations of PCIe as well.

The PCI-SIG's OCuLink cabling will extend PCIe outside a system enclosure to external devices

The cable spec allows for one, two, or four lanes. Since it doesn't carry clock signals or power over the cable, he said, it avoids what he called "complex shielding requirements," which makes low-cost cables, connectors, and ports possible.
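For a rough sense of what one, two or four lanes of a gen-3-based cable might carry, the sketch below applies PCIe 3.0's 8GT/s per-lane signaling rate and its 128b/130b encoding. These are theoretical one-way ceilings under those assumptions, not figures from the OCuLink spec, and the function name `pcie3_link_gbytes` is hypothetical.

```python
# Hypothetical helper: theoretical one-way bandwidth of a PCIe 3.0 link
# at the widths OCuLink is said to support (x1, x2, x4).
# Assumptions: 8 GT/s per lane and 128b/130b encoding (PCIe 3.0);
# transaction-layer and flow-control overheads are ignored.

GEN3_GT_PER_SEC = 8.0
GEN3_ENCODING = 128 / 130
OCULINK_WIDTHS = (1, 2, 4)


def pcie3_link_gbytes(lanes: int) -> float:
    """Theoretical one-way bandwidth in GB/s for a PCIe 3.0 link."""
    if lanes not in OCULINK_WIDTHS:
        raise ValueError(f"unsupported OCuLink width: x{lanes}")
    per_lane_gbps = GEN3_GT_PER_SEC * GEN3_ENCODING  # usable Gb/s per lane
    return lanes * per_lane_gbps / 8                  # bits -> bytes


if __name__ == "__main__":
    for width in OCULINK_WIDTHS:
        print(f"x{width}: {pcie3_link_gbytes(width):.2f} GB/s one-way")
```

On these assumptions a four-lane cable works out at a little under 4GB/s in each direction.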

OCuLink should be available next year, Neshati said. "OEMs are behind it, the connector vendors are behind it, there are some very interesting mock-ups right now that are proofing the concept."

Neshati predicts that OCuLink will be a less-expensive way to connect external devices than Thunderbolt, an outside-the-box high-speed connector technology that tunnels PCIe, because implementers won't have to provide a controller for the cabling. "Thunderbolt has a controller," he said, "so you're paying extra for that chip, whereas here you're getting PCI Express for free – it's driving your cable."

Next up: fourth-generation PCIe

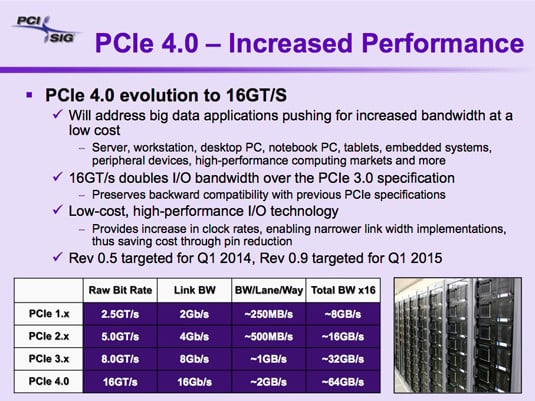

The PCI-SIG is preparing for the imminent release of the PCIe 3.1 spec, which Neshati referred to as a "collector spec": it incorporates a shedload of "little itty-bitty chiclet changes" that developers have contributed since PCIe 3.0 was released, which improve power management and performance and add features. The next big change in the PCIe spec, however, is expected in 2015: PCIe 4.0.

PCIe 4.0 was announced in late 2011, and Neshati says that work on it is proceeding apace. "PCI-SIG members are bringing in technology to drive gen-4 functionality," he said.

At a maximum theoretical signaling rate of 16 gigatransfers per second per lane, don't expect to find PCIe 4.0 in your desktop or laptop. Quite simply, those mundane devices have no need for such performance. "Gen-2 is enough for most applications," he said. "Gen-4 will be servicing some of the very high-end server, HPC kinds of applications."

PCIe 4.0, he said, will be in the datacenter competing with InfiniBand, whose FDR flavor currently has a theoretical maximum throughput of 14Gb/sec per lane one-way, and whose QDR flavor manages 10Gb/sec one-way. PCIe 4.0 will have a maximum theoretical throughput of around 2GB/sec (roughly 16Gb/sec) per lane one-way.
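The per-lane figures above mix units: InfiniBand's are quoted in gigabits per second, PCIe 4.0's in gigabytes per second. A small conversion puts them on the same footing. It assumes PCIe 4.0 keeps the 128b/130b encoding used by PCIe 3.0, which is what turns 16GT/s into "around 2GB/sec"; the InfiniBand rates are used exactly as quoted, with no deduction for their own encoding overhead. Function names are illustrative.

```python
# Illustrative conversion of the per-lane, one-way rates quoted above
# into a common unit (GB/s). Names are hypothetical.
# Assumption: PCIe 4.0 uses 128b/130b encoding, as PCIe 3.0 does.
# InfiniBand rates are taken as quoted (Gb/s), with no encoding deduction.

def pcie4_lane_gbytes(gt_per_sec: float = 16.0) -> float:
    """One-way payload rate of one PCIe 4.0 lane, in GB/s."""
    return gt_per_sec * (128 / 130) / 8


def gbits_to_gbytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


if __name__ == "__main__":
    print(f"PCIe 4.0: {pcie4_lane_gbytes():.2f} GB/s per lane")
    print(f"IB FDR:   {gbits_to_gbytes(14):.2f} GB/s per lane")
    print(f"IB QDR:   {gbits_to_gbytes(10):.2f} GB/s per lane")
```

On these assumptions a PCIe 4.0 lane (about 1.97GB/s) edges out an FDR lane (1.75GB/s) and comfortably beats a QDR lane (1.25GB/s).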

PCIe 4.0 is preparing to go head-to-head with InfiniBand

PCIe 4.0 will allow for 16-lane implementations, which would thus have a total bandwidth of around 64GB/sec, counting both directions. By comparison, 12-lane InfiniBand FDR has a theoretical bandwidth, both ways, of 42GB/sec, and QDR 30GB/sec.

Of course, those figures represent InfiniBand today and PCIe 4.0 in 2015, and InfiniBand will almost certainly be snappier by then. In fact, testing is now underway of InfiniBand EDR (enhanced data rate), which will increase the one-way throughput to 25Gb/sec per lane. A 12-lane EDR implementation would thus have an overall throughput, again counting both directions, of 75GB/sec.
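The headline totals in the last two paragraphs come from multiplying a per-lane one-way rate by the lane count, then doubling for both directions. The sketch below reproduces them from the rates quoted in this article (2GB/sec per PCIe 4.0 lane; 10, 14 and 25Gb/sec per InfiniBand QDR, FDR and EDR lane). The dictionary and function names are our own illustration, not from any spec.

```python
# Reproduces the article's "both ways" aggregate figures from the quoted
# per-lane, one-way rates. Names are illustrative, not from any spec.

def both_ways_gbytes(per_lane_gbytes: float, lanes: int) -> float:
    """Aggregate bandwidth in GB/s, counting both directions."""
    return per_lane_gbytes * lanes * 2


# Per-lane, one-way rates in Gb/s, as quoted for 12-lane InfiniBand links.
INFINIBAND_GBPS = {"QDR": 10, "FDR": 14, "EDR": 25}

if __name__ == "__main__":
    print(f"PCIe 4.0 x16: {both_ways_gbytes(2.0, 16):.0f} GB/s")
    for name, gbps in INFINIBAND_GBPS.items():
        total = both_ways_gbytes(gbps / 8, 12)  # Gb/s -> GB/s per lane
        print(f"InfiniBand {name} 12x: {total:.0f} GB/s")
```

This prints 64GB/s for PCIe 4.0 x16 and 30, 42 and 75GB/s for 12-lane QDR, FDR and EDR respectively. Plugging in the more precise figure of about 1.97GB/s per PCIe 4.0 lane would give roughly 63GB/s.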

PCIe 4.0 will still have one advantage over InfiniBand, though: it's snuggling up with compute cores on microprocessor dies, while InfiniBand isn't – there are distinct advantages to being a legacy technology. In addition, Neshati said, the PCI-SIG's goals for the PCIe spec include "low cost, high volume" manufacturability.

As we mentioned, PCIe 4.0 is expected to be released sometime in 2015, but Neshati gave the PCI-SIG a little wiggle room. "Maybe a little bit longer," he said. ®