
Tracking the history of magnetic tape: A game of noughts and crosses

Part Two: 'Tis a spool's paradise, t' be surrrre

Joining the dots

As magnetic tape proved itself to be a more efficient storage medium than punched cards or paper tape, data density became more and more of a fixation. Just how much could you get on a ribbon of rust? What were the limits to reliably recording and replaying those data signals?

Compared to audio, the needs of data storage were radically different, given the characteristics of the signals involved. Recording computer data didn’t need a particularly stellar dynamic range. Consequently, with lower signal-to-noise ratio requirements, tracks and their respective guard bands could be narrower.

Sound recording in the late 1980s offers an easy comparison. Analogue 24-track audio machines required 2-inch tape, whereas the digital equivalent worked on 1/2-inch tape. The track count would later double to 48 on digital recorders using the same 1/2-inch tape. Although digital tape recorders had many additional features, they were, nevertheless, all about storing data.

With data tape drives, you could also dispense with the additional complexity of the bias circuitry used to give tape a more linear response for analogue sound recording. Non-linearity in magnetic tape recording wasn’t an issue for digital tape systems; however, attention still had to be paid to the coercivity of the magnetic tape, with the recording level calibrated to match.

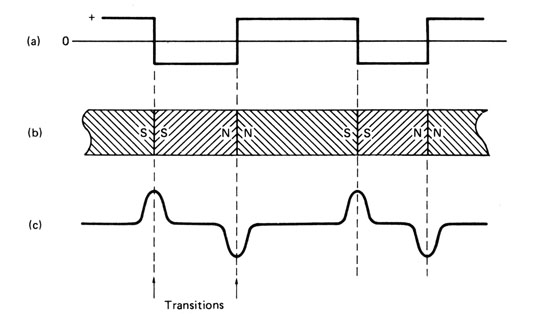

When recording data, the magnitude of the current to the tape head stays constant, and recording is achieved by changing the direction of that current over time. Hence the tape is magnetised by changes in flux, which amount to transitions: in other words, bipolar recording.
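To make the idea of recording transitions rather than levels concrete, here's a minimal sketch using one common convention, NRZI (non-return-to-zero, inverted), as found on early seven- and nine-track drives: a 1 bit is written as a reversal of head current and a 0 bit as no reversal. The function names are hypothetical and the model ignores everything analogue about the head and tape.

```python
from typing import List

# Minimal NRZI sketch: head current has a fixed magnitude (+1 or -1) and only
# its direction changes. A 1 bit reverses the current (a flux transition);
# a 0 bit leaves it alone. Illustrative only, not a model of any real drive.


def nrzi_write(bits: List[int], start: int = -1) -> List[int]:
    """Return the head current direction (+1 or -1) for each bit cell."""
    current = start
    levels = []
    for bit in bits:
        if bit:                   # a 1 flips the current, producing a transition
            current = -current
        levels.append(current)    # magnitude never changes, only the sign
    return levels


def nrzi_read(levels: List[int], start: int = -1) -> List[int]:
    """Recover bits by noting whether a transition occurred in each cell."""
    previous = start
    bits = []
    for level in levels:
        bits.append(1 if level != previous else 0)
        previous = level
    return bits


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1]
    written = nrzi_write(data)
    print(written)                     # [1, 1, -1, 1, 1, 1, -1]
    print(nrzi_read(written) == data)  # True
```

Note that a run of 0 bits produces no transitions at all, which is exactly the weakness the channel-coding stage has to deal with.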

Prior to recording, there is a channel-coding stage that converts the raw data into waveforms that, among other things, improve recording density and provide essential timing information. The recorded transitions that result from this process also avoid long periods spent in one unchanging state.
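Why channel coding helps with timing can be sketched with a group code. A raw 0x00 byte written straight through NRZI would give eight bit cells with no transition, leaving the replay clock nothing to lock onto. A group code maps each small chunk of data onto a slightly longer codeword chosen to guarantee regular transitions. The table below is the 4B/5B data table from FDDI networking, swapped in purely for illustration: it is not the code used on any IBM tape drive, and the function names are hypothetical.

```python
from itertools import product

# 4B/5B data codewords (borrowed from FDDI for illustration only). Every
# 4-bit nibble becomes 5 code bits; in NRZI a 1 is a transition, a 0 is not.
FOUR_B_FIVE_B = {
    0x0: "11110", 0x1: "01001", 0x2: "10100", 0x3: "10101",
    0x4: "01010", 0x5: "01011", 0x6: "01110", 0x7: "01111",
    0x8: "10010", 0x9: "10011", 0xA: "10110", 0xB: "10111",
    0xC: "11010", 0xD: "11011", 0xE: "11100", 0xF: "11101",
}


def encode(data: bytes) -> str:
    """Split each byte into high and low nibbles and map each to 5 code bits."""
    out = []
    for byte in data:
        out.append(FOUR_B_FIVE_B[byte >> 4])
        out.append(FOUR_B_FIVE_B[byte & 0x0F])
    return "".join(out)


def longest_zero_run(code: str) -> int:
    """Longest stretch of bit cells with no transition (consecutive 0 bits)."""
    return max(len(run) for run in code.split("1"))


if __name__ == "__main__":
    print(encode(b"\x00\xff"))  # 11110111101110111101
    # Worst case over every possible pair of adjacent codewords:
    worst = max(longest_zero_run(a + b)
                for a, b in product(FOUR_B_FIVE_B.values(), repeat=2))
    print(worst)                # 3
```

The price is a 25 per cent overhead (five recorded bits per four data bits), traded for a guarantee that the stream never goes more than three cells without a transition.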

Digital data is not recorded on tape as binary waveforms but as signals indicating transitions

For computing, increasing tape speed allowed for higher data rates, and these faster transports also enabled swifter access to data stored on reels containing half a mile of tape or more. As none of this was ever intended to be played to a listener, much broader bandwidths could be used, and they were necessary for improving data density.
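The relationship between speed, density and data rate is simple multiplication. On nine-track tape each frame across the width carries one byte, so bytes per inch equals frames per inch. The 200 in/s and 6,250 bpi figures below are illustrative values typical of late-era half-inch drives, not numbers from this article, and the sketch ignores inter-record gaps, so real throughput would be lower.

```python
# Back-of-envelope tape arithmetic. Figures are illustrative assumptions.


def data_rate_bytes_per_s(speed_ips: float, density_bpi: float) -> float:
    """Data rate = tape speed (inches/s) x linear density (bytes/inch)."""
    return speed_ips * density_bpi


def traverse_time_s(length_ft: float, speed_ips: float) -> float:
    """Time to pass a whole reel at read/write speed (rewind was faster)."""
    return length_ft * 12 / speed_ips


if __name__ == "__main__":
    print(data_rate_bytes_per_s(200, 6250))  # 1250000 bytes/s
    print(traverse_time_s(2640, 200))        # 158.4 s for half a mile of tape
```

Doubling the transport speed doubles the data rate and halves the time to reach the far end of the reel, which is why faster transports mattered so much for access as well as throughput.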

Information overload

By contrast, improvements in audio recording would rely more on a low noise floor and on the accurate capture and playback of varying signal amplitudes. The art of sound recording depended on being able to contain the dynamics of a performance within the limitations of the recording media. Hence the need to avoid overloading, which would cause distortion, while keeping the signal strong enough that lower-level content wouldn’t be lost in tape hiss.

Add to that the signal processing necessary to produce an even frequency response, one that could match, or at least get close to, the lowest and highest pitched sounds audible to the human ear, and you can see that there’s a lot to consider when saturating a tape with audio content.

Naturally enough, compromises are inevitable. Audio systems designed for dictation, portability or consumer-friendly pricing could use tape recording formats that lacked the best fidelity, but they delivered other advantages, such as slower tape speeds and hence lower tape costs.
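The saving from slower tape speeds is easy to quantify. This sketch compares the length of tape consumed for 45 minutes of recording at 15 in/s, a common professional studio speed, and at 1 7/8 in/s, the Compact Cassette speed. It assumes tape cost scales simply with length and ignores differences in tape width.

```python
# Tape consumed for a given running time at a given speed (feet).


def tape_length_ft(minutes: float, speed_ips: float) -> float:
    return minutes * 60 * speed_ips / 12


if __name__ == "__main__":
    studio = tape_length_ft(45, 15)       # 3375.0 ft
    cassette = tape_length_ft(45, 1.875)  # 421.875 ft
    print(studio, cassette, studio / cassette)  # 3375.0 421.875 8.0
```

Running eight times slower means eight times less tape for the same programme, at the expense of the high-frequency response and noise performance that faster speeds provide.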

Regardless of whether it was analogue audio or binary data, what was being tested was the capacity of magnetic tape to store information.

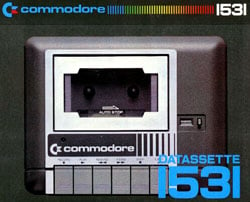

Commodore's Datasette recording system relied on the Compact Cassette for storage needs

As transistors came on stream, the analogue world benefited from smaller, cheaper components with lower power requirements and improved noise characteristics. Together, these gains made possible developments such as the Compact Cassette: a compromise in audio fidelity, but so massively convenient that it dominated the consumer market for over 30 years.

Error tolerance

The shortcomings of audio equipment have always been strikingly obvious to the listener; moreover, analogue audio recording errors are hard to mask and impossible to undo. However, there’s quite a bit of give and take in analogue audio recording. Momentary over-recording can be tolerated, and there’s a sweet spot in tape saturation where pleasing harmonic distortion occurs.
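The gentle onset of saturation can be illustrated with a toy model. The tanh() curve is often used in audio modelling as a stand-in for soft clipping; it is an assumption here, not a physical model of tape. Driving one cycle of a sine wave through it and measuring harmonics with a plain single-bin DFT shows odd-order distortion creeping up with level rather than appearing abruptly, while even harmonics stay at zero because the curve is symmetrical. The function names are hypothetical.

```python
import math
from typing import List

N = 64  # samples per cycle of the test tone


def soft_clip_cycle(drive: float) -> List[float]:
    """One cycle of a sine at the given drive level, passed through tanh()."""
    return [math.tanh(drive * math.sin(2 * math.pi * n / N)) for n in range(N)]


def harmonic_magnitude(signal: List[float], k: int) -> float:
    """Amplitude of the k-th harmonic via a single-bin DFT."""
    re = sum(x * math.cos(2 * math.pi * k * n / N) for n, x in enumerate(signal))
    im = -sum(x * math.sin(2 * math.pi * k * n / N) for n, x in enumerate(signal))
    return 2 * math.hypot(re, im) / N


def third_harmonic_ratio(drive: float) -> float:
    """Third-harmonic amplitude relative to the fundamental."""
    y = soft_clip_cycle(drive)
    return harmonic_magnitude(y, 3) / harmonic_magnitude(y, 1)


if __name__ == "__main__":
    for drive in (0.1, 0.5, 1.0, 2.0):
        print(drive, round(third_harmonic_ratio(drive), 4))
```

A hard clipper would jump from clean to harsh at a fixed threshold; the soft curve is one way to picture the "give" in analogue recording that the text describes.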

Furthermore, background noise can be masked by louder sounds, so even dubious recording systems can deliver listenable results, at least until quieter passages come along or the song starts to fade. Noise, distortion and minor variations in playback speed (flutter) are undesirable elements and, in an audiophile world, are the equivalent of errors in a data stream.

Still, data recordings were never meant to be heard by people, even when microcomputer storage migrated to the Compact Cassette in the late 1970s and early 1980s.

The only question computer systems needed to worry about was whether they could accurately replay the pattern of transitions recorded onto tape. Indeed, in this domain, errors can’t be tolerated, and yet they can be accommodated. Data accuracy is paramount in computing systems: there is no give and take when it all goes wrong, just a data error message, if you’re lucky.

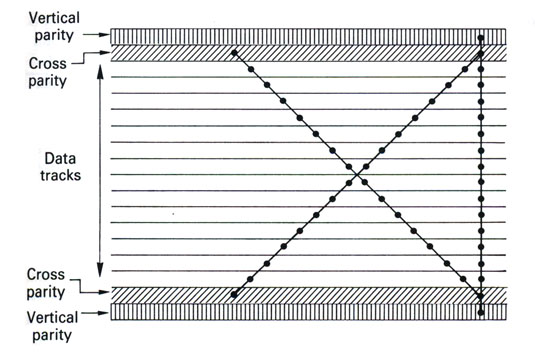

To make sure it doesn’t all go wrong, multitrack recording heads not only allowed more information to be recorded across the width of a data tape but also made room for a separate parity track reserved for check codes as part of error correction schemes, as mentioned earlier.

In 1964, IBM’s System/360 arrived with the track count increased from seven to nine to get more out of its half-inch tape (eight tracks for data and one for parity). Yet as the track count inevitably increased, so did the need to add more parity tracks to maintain the integrity of the data during recording and playback.
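Here's how a single parity track protects each frame of eight data bits. This minimal sketch assumes odd parity (the parity bit makes the count of 1s in the nine-bit frame odd) and lists the bits in bit order for simplicity, which is not a claim about the physical order of tracks on real drives. The function names are hypothetical.

```python
from typing import List

# One frame across a nine-track tape: 8 data bits plus 1 parity bit.
# Odd parity is assumed for this sketch.


def parity_bit(byte: int) -> int:
    """Return the bit that makes the number of 1s in the 9-bit frame odd."""
    ones = bin(byte & 0xFF).count("1")
    return 0 if ones % 2 == 1 else 1


def make_frame(byte: int) -> List[int]:
    """Data bits, most significant first, followed by the parity bit."""
    return [(byte >> i) & 1 for i in range(7, -1, -1)] + [parity_bit(byte)]


def frame_ok(frame: List[int]) -> bool:
    """A frame passes the check if it holds an odd number of 1s."""
    return sum(frame) % 2 == 1


if __name__ == "__main__":
    frame = make_frame(0x41)       # an arbitrary byte, 01000001
    print(frame, frame_ok(frame))  # [0, 1, 0, 0, 0, 0, 0, 1, 1] True
    frame[3] ^= 1                  # simulate a dropout on one track
    print(frame, frame_ok(frame))  # [0, 1, 0, 1, 0, 0, 0, 1, 1] False
```

A lone parity bit can flag that a frame is bad but not which track went wrong, and two simultaneous errors cancel out and slip through, which is why wider tapes needed more than one parity track.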

IBM 18-track layout and parity tracks

Indeed, 20 years on, the IBM 3480 tape drive came on stream sporting 18 tracks, four of which were used as part of its adaptive cross parity error correction scheme. The interleaving of data across tracks was all part of the 8/9 group coding system, a necessity to achieve an even higher data density on magnetic tape.
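The principle behind crossing parity checks, turning detection into correction, can be shown with a simpler row-and-column scheme. This is not IBM's adaptive cross parity, whose details the article leaves to the reference below; it is a plain two-dimensional parity sketch under that stated simplification, with hypothetical names. Rows are frames along the tape, the extra column plays the part of a parity track, and the extra row is a check frame at the end of the block. A single flipped bit fails exactly one row check and one column check, and their intersection pinpoints it.

```python
from typing import List, Optional, Tuple

Block = List[List[int]]  # rows = frames along the tape, columns = tracks


def add_parity(data: Block) -> Block:
    """Append even parity to each frame, then a final check frame per track."""
    rows = [row + [sum(row) % 2] for row in data]
    check_frame = [sum(col) % 2 for col in zip(*rows)]
    return rows + [check_frame]


def locate_error(block: Block) -> Optional[Tuple[int, int]]:
    """Return (row, col) of a single flipped bit, or None if all checks pass."""
    bad_rows = [r for r, row in enumerate(block) if sum(row) % 2]
    bad_cols = [c for c, col in enumerate(zip(*block)) if sum(col) % 2]
    if not bad_rows and not bad_cols:
        return None
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    raise ValueError("more than a single-bit error; not correctable here")


def correct(block: Block) -> Block:
    """Return a copy of the block with any single-bit error flipped back."""
    fixed = [row[:] for row in block]
    where = locate_error(fixed)
    if where is not None:
        r, c = where
        fixed[r][c] ^= 1
    return fixed


if __name__ == "__main__":
    data = [[1, 0, 1, 1], [0, 1, 1, 0], [1, 1, 0, 0]]
    block = add_parity(data)
    damaged = [row[:] for row in block]
    damaged[1][2] ^= 1                # one bit lost on track 2, frame 1
    print(locate_error(damaged))      # (1, 2)
    print(correct(damaged) == block)  # True
```

Real schemes such as the one on the 3480 go further, recovering from a whole track failing rather than a single bit, but the idea of intersecting independent checks is the same.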

This is quite a convoluted topic, which John Watkinson covers expertly in The Art of Data Recording and, lucky for us, he recently wrote a very accessible summary of these techniques for The Register.