Mind waiting for compute jobs? Boy, does the cloud have a price for you!

Big boys to flog low-grade IT at a low, low price - and you know what that means

LADIS 2013 It's an open secret that the virtual machines people buy from public clouds vary in performance, and it's about to get a whole lot worse: providers are preparing to sell people gear with sludgier capabilities than ever before, at a low, low price.

Now, researchers have figured out a way cloud providers can take advantage of the chaotic properties of their infrastructure to sell users cheap workloads with less desirable performance characteristics, such as a potentially high job execution time. We've also separately had strong hints from Google and Microsoft that the big cloud companies are actively investigating services of this nature.

The Exploiting Time-Malleability in Cloud-based Batch Processing Systems paper was presented on Sunday at the LADIS conference in Pennsylvania by a trio of researchers from City University, Imperial College, and Microsoft Research.

"The goal of our work is on how to exploit time-malleability for the cloud revenue problem," says City University researcher Evangelia Kalyvianaki.

The paper finds that cloud providers could make more money and waste less infrastructure by pricing and selling workloads according to the performance, capacity, and expected latency of jobs running in a cluster. They could charge less for jobs, such as MapReduce batch jobs, that might run some time after being provisioned, or charge more for jobs that run immediately. Best of all, the system could be implemented as an algorithm at point-of-sale, fed by data taken from the provider's own monitoring tech.

This type of service is possible because major cloud providers such as Google, Amazon, and Microsoft have worked on sophisticated resource schedulers and cluster managers (such as Google's Borg or Omega systems) that give them the fine-grained control and monitoring necessary to resell hardware according to moment-by-moment demand and infrastructure changes.

Just as Amazon has a spot market for VMs today that adjusts prices according to demand, the researchers think there should be an equivalent market that prices workloads by the cost of executing them within a certain time period, given the underlying resource utilization within the cloud. By implementing the proposed model, cloud providers can reduce the amount of idle gear in their infrastructure, and developers can run certain jobs at reduced costs.

These ideas line up with whispers we've been hearing about prototype cloud products that are being developed by major infrastructure operators to further slice and dice their infrastructure.

'I'm willing to spend more to get a result sooner'

Google engineering director Peter Magnusson has told us that Google is contemplating using the non-determinism endemic in its cluster management systems to spin up a cloud service that does SLA bin-packing. This might let Google offer up VMs with reduced SLAs at a substantially lower price, for example. One of the paper's authors, Luo Mai, is the recipient of a Google Europe Fellowship in Cloud Computing, and the research is partly backed by this fellowship.

Microsoft seems interested as well – one of the three authors of the paper is Paolo Costa, who is affiliated with Microsoft Research in Cambridge, UK. When we asked Azure general manager Mike Neil during a recent chat about the possibilities of bin packing for different pricing, he paused for a long time, then said: "That's very interesting... there's an exciting opportunity to give the customer a knob which says 'I'm willing to spend more to get a result sooner'."

For example, Neil said, oil and gas companies might be happy to pay less and get batch jobs back later, while financial firms wanting to quickly analyze a huge data release may want to go to the cloud for some extra capacity, delivered within a guaranteed time period.

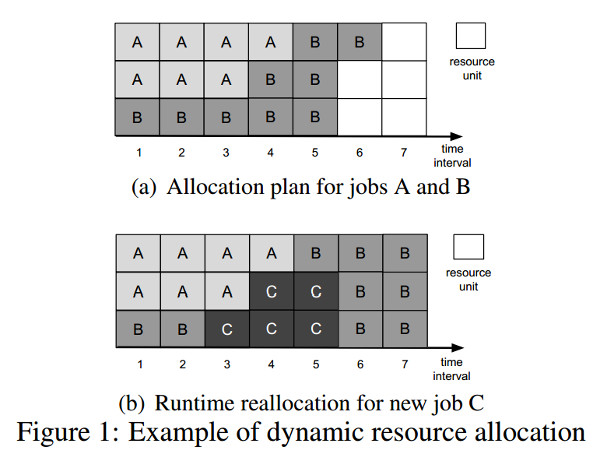

The LADIS paper explains how cloud providers can offer this type of system, why it makes sense, and the pricing model that users can look forward to. All you need to run the system is a notion of what a unit of infrastructure within a cloud provider looks like, and the rough completion time of your job. A unit of infrastructure could be a standard size of CPU, attached memory, storage, and bandwidth.

When users prepare to buy resources, they can enter the estimated completion time of their job, which could be a batch MapReduce workload. In response, the cloud will look at what is currently being scheduled and how many "resource units" it may have available, then calculate the execution cost of the job. Urgent jobs will cost more than the average, and jobs that are not time-sensitive will cost less.
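To make the point-of-sale idea concrete, here is a minimal Python sketch of a quote function. It is not the pricing model from the paper: the function name `quote_price`, the `base_rate` parameter, and the "one plus load" surcharge formula are all assumptions made up for illustration. It only shows the general shape described above, where a tighter deadline leaves less free capacity to choose from and so pushes the price up.

```python
def quote_price(work_units, deadline_steps, free_units_per_step, base_rate=1.0):
    """Quote a hypothetical price for a job, or None if it cannot finish in time.

    work_units          -- total resource-unit-steps the job needs (assumed known)
    deadline_steps      -- number of time steps the user is willing to wait
    free_units_per_step -- forecast of idle resource units for each future step
    base_rate           -- illustrative price per unit of work (an assumption)
    """
    if work_units <= 0 or deadline_steps <= 0:
        raise ValueError("work_units and deadline_steps must be positive")

    # Only capacity that is free before the deadline can serve this job.
    available = sum(free_units_per_step[:deadline_steps])
    if available < work_units:
        return None  # the job cannot be completed by the requested deadline

    # Fraction of the usable free capacity this job would consume.
    # Urgent jobs have a small window, so this fraction is high.
    load = work_units / available

    # Illustrative surcharge: the price grows with the load the job imposes.
    return round(work_units * base_rate * (1 + load), 2)


forecast = [100] * 10                      # 100 idle units in each of 10 steps
urgent = quote_price(200, 2, forecast)     # must finish within 2 steps
relaxed = quote_price(200, 10, forecast)   # may finish any time within 10 steps
print(urgent, relaxed)
```

With this made-up formula the same 200 units of work cost more when squeezed into two steps than when spread over ten, which is the behaviour the researchers describe, though their actual cost calculation may look quite different.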

In the future the researchers hope to implement dynamic pricing that displays a fluctuating base workload price according to up-to-the-minute availability, rather than calculating it at the point of order.

GEARBOX can reschedule workloads to fit available resource slots

The researchers tested out their idea via a system called GEARBOX, which used a discrete event simulator and IBM's CPLEX optimizer (which is free for academic use). They tested it on a cloud with a capacity of 1,000 resource units and ran it through two scenarios - one job which required between 2,500 and 7,500 workload units, and another which required significantly less than the available capacity.
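The idea of reshaping a job to fit whatever capacity happens to be idle can be sketched in a few lines of Python. This is a simple greedy fill, not the CPLEX-based optimization GEARBOX uses, and the function name `fit_job` and the per-step capacity forecast are hypothetical. It assumes a job is fully time-malleable, meaning its work can be split across time steps in any proportion.

```python
def fit_job(work_units, deadline_steps, free_units_per_step):
    """Greedily spread a malleable job over free capacity before its deadline.

    Returns a list with the resource units assigned in each step,
    or None if the free capacity before the deadline is insufficient.
    """
    if work_units <= 0 or deadline_steps <= 0:
        raise ValueError("work_units and deadline_steps must be positive")

    remaining = work_units
    allocation = []
    for free in free_units_per_step[:deadline_steps]:
        take = min(free, remaining)   # use whatever is idle, up to what is needed
        allocation.append(take)
        remaining -= take

    return allocation if remaining == 0 else None


# A cloud with 1,000 resource units per step, mostly busy in the second step.
free = [1000, 200, 1000, 1000]
print(fit_job(2500, 4, free))  # [1000, 200, 1000, 300]
print(fit_job(2500, 2, free))  # None: only 1,200 units are free in two steps
```

A real scheduler would weigh many jobs against each other at once, which is why the researchers reach for an optimizer rather than a greedy pass like this one.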

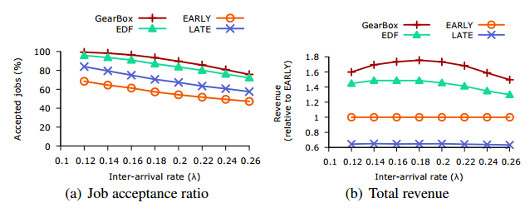

The researchers tested the performance of the system against three baselines: 'EARLY', which reserves a fixed set of resources for a job to meet its most demanding deadline; 'LATE', where resources are earmarked when the job is put into the system; and 'EDF', which works by earliest deadline first.
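Of the three baselines, earliest deadline first is the easiest to illustrate. The Python sketch below is a generic EDF loop, not the researchers' simulator: the function name `edf_schedule`, the job tuples, and the assumptions that all jobs arrive at step zero and that capacity is the same in every step are ours.

```python
import heapq


def edf_schedule(jobs, capacity_per_step):
    """Run jobs earliest-deadline-first on a fixed per-step capacity.

    jobs              -- list of (name, work_units, deadline_step) tuples,
                         all assumed to arrive at step 0
    capacity_per_step -- resource units available in every step (must be > 0)

    Returns a dict mapping each job name to the step in which it finishes.
    """
    if capacity_per_step <= 0:
        raise ValueError("capacity_per_step must be positive")

    # Min-heap keyed on deadline, so the most urgent job is always on top.
    heap = [(deadline, name, work) for name, work, deadline in jobs]
    heapq.heapify(heap)

    finish = {}
    step = 0
    while heap:
        step += 1
        free = capacity_per_step
        # Hand out this step's capacity to the most urgent jobs first.
        while heap and free > 0:
            deadline, name, work = heapq.heappop(heap)
            used = min(work, free)
            free -= used
            work -= used
            if work == 0:
                finish[name] = step
            else:
                heapq.heappush(heap, (deadline, name, work))
    return finish


jobs = [("a", 1500, 2), ("b", 500, 1), ("c", 1000, 3)]
print(edf_schedule(jobs, 1000))  # {'b': 1, 'a': 2, 'c': 3}
```

EDF only decides ordering; it has no notion of price, which is one reason a revenue-aware approach can beat it.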

GEARBOX can make cloud providers more money, and take on more jobs than other approaches

In all cases, the GEARBOX approach performed better than these baselines, making the cloud providers more money and offering developers access to both pricey, timely workload slots and cheap ones.

The researchers are working on implementing more constraints and variables, though they are also wary of making the job-brokerage algorithm so complex it slows down the sale of resources.

"Some jobs are more important than others, some jobs don't matter – you might think about users specifying probability of success or a deadline," noted Google's cluster and resource manager expert John Wilkes during a question and answer session. Both Google's Omega and Borg systems use this in some format.

For their next steps, the team hopes to build a prototype Hadoop-based system, and also integrate other scale-out compute munching'n'crunching systems.

If they don't come across any stumbling blocks and extend the approach further, we here on El Reg's cloud desk reckon we could see product implementations of this design fairly soon as it gives cloud providers a way to increase resource utilization and grow revenue, which is about as fundamental a goal for a cloud company as you can get.

Amazon Web Services is holding its huge cloud conference next week, and we'll be sure to prowl the halls to find out if Bezos & Co are as interested in this as Google and Microsoft seem to be. ®