
ARM targets enterprise with 32-core, 1.6TB/sec bandwidth beastie

Think the UK boys are just mobe-chip makers? Been out of town lately?

Updated ARM has released more details about the innards of its cache-coherent on-chip networking scheme for use cases ranging from storage to servers to networking – specifically, its CCN-5xx microarchitecture family and its newest member, the muscular CoreLink CCN-508.

It's been almost exactly 29 years since Acorn Computers' ARM1 chip ran its first code on April 26, 1985. Since then, the company that was soon to become ARM branched out beyond CPUs to IP design for GPUs, microcontrollers, coherent and non-coherent interconnects, memory controllers, system memory management units, interrupt controllers, direct memory access controllers, and more, popping up in products from toys to smart cards to mobile devices to printers to servers.

"You name it, we generally build it," lead architect and ARM Fellow Mike Filippo told attendees at his company's 2014 Tech Day last week in Austin, Texas.

ARM's most recent expansion has been in the enterprise space, with work on high-end servers and networking IP. "In 2010," Filippo said, "we really began to take a hard look at the networking market, and we're getting a lot of interest from networking OEMs."

ARM's broad IP portfolio comes in handy when it's designing complex enterprise-level networking, storage, and server parts; the Cambridge, UK company can wrangle a passel of its own IP blocks into a larger assemblage, and license that überblock to chipmakers hungry to service the enterprise market.

And that's what ARM is doing with its CCN-5xx family of high-performance cache-coherent on-chip networking IP. The first product in the family was announced last year: the CoreLink CCN-504, and the newest member is the CoreLink CCN-508. ARM also has plans to not only create a scaled-up, higher-performance family member, but also to move in the opposite direction and create a more modest CCN-5xx for low-end servers and networking.

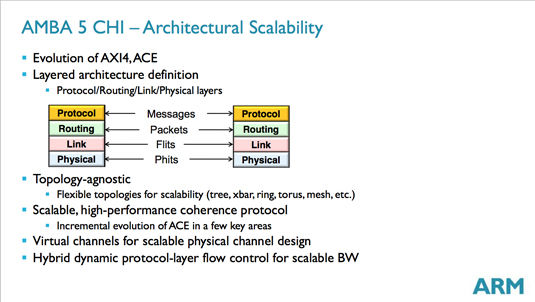

Each member of the family has a similar scalable topology and microarchitecture, and is based on version five of the advanced microcontroller bus architecture coherent hub interface (AMBA 5 CHI). AMBA is an open-standard on-chip interconnect specification first incarnated by ARM back in the mid-1990s, and is used to tie together different functional blocks, such as those that make up a system-on-chip (SoC) part.

The AMBA 5 CHI's architecture is topology-agnostic, able to support anything from point-to-point to mesh, and for the CCN-5xx product family, ARM chose a ring bus over which it works its high-speed element-to-element communication chores.

"Generally speaking," said Filippo, "the architecture was built and designed around scalability, scalability in all aspects: scalability of performance, scalability of design complexity, scalability of power, etcetera." And, apparently, scalability in the use of the word "scalability."

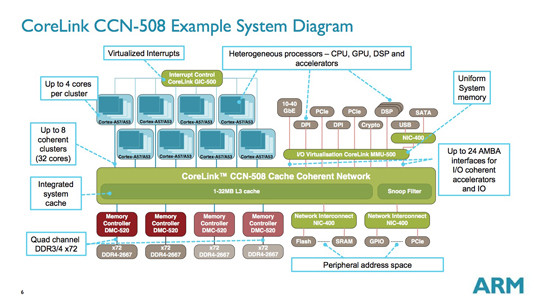

By way of example, the CoreLink CCN-508 scales up from its CCN-504 predecessor, allowing its licensees – ARM prefers the term "partners" – to hang onto it a veritable Noah's Ark of functional units: CPUs, DDR3 or DDR4 memory controllers, network interconnects, memory management units, and up to 24 AMBA interconnects for such peripherals as PCIe, SATA, and 10-40 gigabit Ethernet.

Actually, Noah's Ark isn't all that good an analogy, seeing as how the CCN-508 can bring onboard more than that old guy's limit of two of a kind. It will, for example, support up to eight 64-bit ARMv8 CPU clusters of four cores apiece – each cluster could, for example, contain two beefy ARM Cortex-A57 and two lightweight ARM Cortex-A53 cores in a big.LITTLE configuration*. Easy math: 32 cores, a doubling of the CCN-504's 16-core max.

In any case, what's of most interest in ARM's new multi-IP mashup is the way in which all its blocks communicate at high speed thanks to the CoreLink CCN-5xx family's modular, highly distributed microarchitecture. First off, the smarts that manage its transport layer are fully distributed, with no point of centralization; multiple modular "crosspoints" know everything they need to know to take data coming in from whatever source and route it to where it needs to go.
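To make the "no point of centralization" idea concrete, here is a toy sketch in Python of how a packet might travel around a ring when every crosspoint decides its next hop on its own. It is not ARM's implementation: the bidirectional ring, the shortest-arc rule, and the names `next_hop` and `route` are all assumptions made purely for illustration.

```python
# Toy model only: a ring of crosspoints numbered 0..ring_size-1.
# ASSUMPTIONS (not from ARM): the ring is bidirectional and each
# crosspoint forwards along whichever arc is shorter.

def next_hop(here: int, dest: int, ring_size: int) -> int:
    """Local decision made at one crosspoint, using no global state."""
    if here == dest:
        return here  # already at the destination crosspoint
    clockwise = (dest - here) % ring_size   # hops if we go "up" the ring
    counter = (here - dest) % ring_size     # hops if we go "down" the ring
    step = 1 if clockwise <= counter else -1
    return (here + step) % ring_size


def route(src: int, dest: int, ring_size: int) -> list:
    """Follow the per-crosspoint decisions from src until dest is reached."""
    path = [src]
    while path[-1] != dest:
        path.append(next_hop(path[-1], dest, ring_size))
    return path


if __name__ == "__main__":
    print(route(0, 3, 8))  # goes clockwise
    print(route(0, 6, 8))  # goes counter-clockwise
```

The point of the sketch is that `route` never consults a central table: each hop is computed from only the current position and the destination, which is the property the distributed crosspoints are described as having.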

The whole system can run at CPU clock speed, so no core should be kept waiting long for the bits and bytes awaiting duty in a shared, distributed, global L3 cache. But this is not your father's CPU-centric L3 cache – this L3 has a few tricks up its sleeve.

* Update

After this article was published, ARM got in touch with us with a clarification: the CCN-5xx architecture doesn't support a big.LITTLE configuration in its compute clusters; each max-of-four-cores cluster contains either Cortex-A57 or Cortex-A53 cores, just not both in the same cluster.
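For readers who like their constraints spelled out, the corrected CCN-508 compute limits can be written as a small Python check. This is a sketch based only on the figures in this article (up to eight clusters, up to four cores each, one core type per cluster); the names `Cluster` and `total_cores` are hypothetical and do not correspond to any ARM tool or API.

```python
from dataclasses import dataclass
from typing import Sequence

# Limits as reported in the article for the CCN-508.
MAX_CLUSTERS = 8
MAX_CORES_PER_CLUSTER = 4
ALLOWED_CORE_TYPES = {"Cortex-A57", "Cortex-A53"}


@dataclass(frozen=True)
class Cluster:
    # A single core_type per cluster models ARM's clarification:
    # no mixing of A57 and A53 cores inside one cluster.
    core_type: str
    core_count: int


def total_cores(clusters: Sequence[Cluster]) -> int:
    """Validate a hypothetical cluster layout and return its core count."""
    if len(clusters) > MAX_CLUSTERS:
        raise ValueError("too many clusters")
    for cluster in clusters:
        if cluster.core_type not in ALLOWED_CORE_TYPES:
            raise ValueError(f"unsupported core type: {cluster.core_type}")
        if not 1 <= cluster.core_count <= MAX_CORES_PER_CLUSTER:
            raise ValueError("a cluster holds between one and four cores")
    return sum(cluster.core_count for cluster in clusters)


if __name__ == "__main__":
    # Eight full clusters of one core type: 8 x 4 cores.
    full = [Cluster("Cortex-A57", 4)] * 8
    print(total_cores(full))
```

A layout of four A57 clusters plus four A53 clusters passes the same check, since the restriction applies within a cluster rather than across the chip.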