Google reaches into own silicon brain to slash electricity bill

Skunkworks PEGASUS system tramples cloud's ugly power-sucking secret

Google has worked out how to save as much as 20 per cent of its data-center electricity bill by reaching deep into the guts of its infrastructure and fiddling with the feverish silicon brains of its chips.

In a paper to be presented next week at the ISCA 2014 computer architecture conference entitled "Towards Energy Proportionality for Large-Scale Latency-Critical Workloads", researchers from Google and Stanford University discuss an experimental system named "PEGASUS" that may save Google vast sums of money by helping it cut its electricity consumption.

PEGASUS addresses one of the worst-kept secrets about cloud computing, which is that the computer chips in the gigantic data centers of Google, Amazon, and Microsoft are standing idle for significant amounts of time.

Though all these companies have developed sophisticated technologies to try to increase the utilization of their chips, they all fall short in one way or another.

This means that a substantial amount of the electricity going into their data centers is wasted as it powers compute chips that are either idle or in a state of very low utilization. From an operator's perspective, it's a sucking chest wound in the budget, and from an environmentalist's perspective it's a travesty.

Now Google and Stanford researchers have designed a system that increases the efficiency of the power consumption of the data centers without compromising performance.

PEGASUS tunes the power consumption of the chips to fit the task

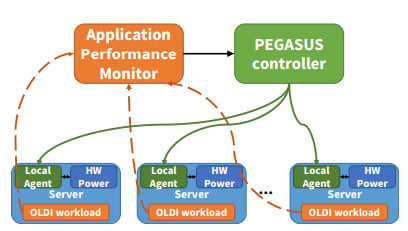

PEGASUS does this by dialing up and down the power consumption of the processors within Google's servers according to the desired request-latency requirements – dubbed iso-latency – of any given workload. (For silicon-heads among our readers, the power management tech PEGASUS uses is Running Average Power Limit, or RAPL, which lets you tweak CPU power consumption in increments of 0.125W. The system "sweeps the RAPL power limit at a given load to find the point of minimum cluster power that satisfies the [service-level objective] target.")
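For the curious, the sweep quoted above can be sketched in a few lines of Python. This is an illustration only, not the researchers' code: the function names, the wattage range, and the toy latency model are all assumptions made up for the example, and the sketch simply assumes that a lower power cap means lower power draw. Only the 0.125W step comes from the article.

```python
RAPL_STEP_W = 0.125  # RAPL granularity quoted in the article


def sweep_power_limit(measure_latency_ms, slo_ms, min_w, max_w, step_w=RAPL_STEP_W):
    """Return the lowest power cap (watts) whose latency meets the SLO, or None.

    measure_latency_ms is a hypothetical callable: power cap in watts -> latency in ms.
    """
    steps = int(round((max_w - min_w) / step_w))
    for i in range(steps + 1):
        cap_w = min_w + i * step_w  # walk upwards from the lowest cap
        if measure_latency_ms(cap_w) <= slo_ms:
            return cap_w
    return None  # no cap in the range satisfies the target


def toy_latency_ms(cap_w):
    # Hypothetical stand-in for a real measurement: latency falls as power rises.
    return 1000.0 / cap_w


best_cap_w = sweep_power_limit(toy_latency_ms, slo_ms=25.0, min_w=20.0, max_w=80.0)
```

In a real cluster the measurement would be a live latency reading at a fixed load rather than a formula, which is why the sweep takes it as a parameter.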

Put another way, PEGASUS makes sure that a processor is working just hard enough to meet the demands of the application running on it, but no harder. "The baseline can be compared to driving a car with sudden stops and starts. iso-latency would then be driving the car at a slower speed to avoid accelerating hard and braking hard," the researchers write. "The second way of operating a car is much more fuel efficient than the first, which is akin to the results we have observed."

Existing power management techniques for large data centers advocate turning off individual servers or even individual cores, but the researchers said this was inefficient. "Even if spare memory storage is available, moving tens of gigabytes of state in and out of servers is expensive and time consuming, making it difficult to react to fast or small changes in load," they explain.

What saving vast amounts of money looks like

Shutting down individual computer cores, meanwhile, doesn't work due to the specific needs of Google's search tech. "A single user query to the front-end will lead to forwarding of the query to all leaf nodes. As a result, even a small request rate can create a non-trivial amount of work on each server. For instance, consider a cluster sized to handle a peak load of 10,000 queries per second (QPS) using 1000 servers," they explain. "Even at 10 per cent load, each of the 1000 nodes are seeing on average one query per millisecond. There is simply not enough idleness to invoke some of the more effective low power modes."
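The arithmetic behind that quote is worth spelling out. The sketch below assumes, as the researchers describe, that every query fans out to every leaf node, so each leaf sees the full cluster query rate no matter how many servers there are. The function names are ours, not the paper's.

```python
def per_leaf_qps(peak_qps, load_percent):
    """Queries per second arriving at each leaf when every query hits all leaves."""
    return peak_qps * load_percent / 100


def mean_gap_ms(qps):
    """Average time between query arrivals, in milliseconds."""
    return 1000.0 / qps


leaf_qps = per_leaf_qps(peak_qps=10_000, load_percent=10)  # figures from the quote
gap_ms = mean_gap_ms(leaf_qps)
```

With the quoted figures, each leaf handles 1,000 queries per second at 10 per cent load, or one roughly every millisecond, which leaves too little idle time for the deeper low-power modes.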

So, PEGASUS, which stands for Power and Energy Gains Automatically Saved from Underutilized Systems, has been created. The tech "is a dynamic, feedback-based controller that enforces the iso-latency policy." It tweaks the power to the chip according to the task it's running, making sure not to violate any service-level agreements on latency.
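A feedback controller of this general kind might look something like the sketch below. To be clear, this is a deliberately simplified illustration and not the rule set in the paper: the class name, the slack threshold, and the one-step-at-a-time adjustment are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class IsoLatencyController:
    """Hypothetical controller: nudges a power cap to hold latency near a target."""

    target_ms: float
    min_w: float
    max_w: float
    step_w: float = 0.125  # RAPL granularity quoted in the article
    slack: float = 0.85    # assumed: cut power only below 85% of the target

    def next_cap(self, cap_w, latency_ms):
        if latency_ms > self.target_ms:
            cap_w += self.step_w  # too slow: give the chip more power
        elif latency_ms < self.slack * self.target_ms:
            cap_w -= self.step_w  # comfortably fast: claw power back
        # otherwise latency is close to the target, so hold steady
        return min(self.max_w, max(self.min_w, cap_w))


controller = IsoLatencyController(target_ms=25.0, min_w=20.0, max_w=80.0)
raised_cap_w = controller.next_cap(cap_w=40.0, latency_ms=30.0)
```

The slack band is there to stop the cap from flapping up and down when latency hovers just under the target. A production controller would presumably react far more aggressively to a missed latency target than this one-step nudge does.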

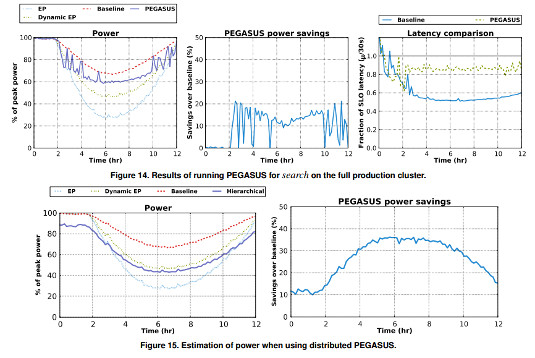

During tests on production Google workloads, the researchers found that PEGASUS saved as much as 30 per cent of power compared to a non-PEGASUS system during times of low demand, and delivered total energy savings of 11 per cent over a 24-hour period. The team also evaluated it on a "full scale, production cluster for search at Google", aka the company's crown jewel workload. Here, PEGASUS did marginally less well, saving between 10 per cent and 20 per cent during low utilization periods. This is because it applied a single policy across thousands of servers without taking into account variations between chips, a shortcoming the paper calls the "hot leaf problem".

A potential solution to this is to distribute the PEGASUS controller so that it lives on each node and applies latency policy from there.

"The solution to the hot leaf problem is fairly straightforward: implement a distributed controller on each server that keeps the leaf latency at a certain latency goal," the researchers write.

In the real world, as any grizzled veteran of distributed systems can tell you, implementing any kind of distributed controller scheme is inviting a world of confusion and pain into your data center – but Google isn't the real world, it's a gold-plated organization that can fund the necessary engineers to keep a distributed scheme like this working.

If PEGASUS were to be implemented in a distributed way, the researchers reckon it could save up to 35 per cent of power over the baseline – a huge saving for Google.

As is typical with Google, the paper gives no details of whether PEGASUS has been deployed across Google's infrastructure in production, but given these power savings and the substantial amount of work Google has invested in the scheme, we reckon it's likely. Google did not respond to questions.

"Overall, iso-latency provides a significant step forward towards the goal of energy proportionality for one of the challenging classes of large-scale, low-latency workloads," the researchers write.

The deployment of complex systems like PEGASUS alongside other advanced Google technologies such as OMEGA (cluster management), SPANNER (distributed DBMS), or CPI2 (thread-level performance monitoring) enables Google to make its data centers dramatically more efficient than those operated by smaller, less sophisticated companies. These technologies will, over time, help Google compete in public cloud with rivals such as Amazon and Microsoft, while serving more ads at a lower cost than before.

Ride on, PEGASUS, ride on. ®