
AMD details aggressive power-efficiency goal: 25X boost by 2020

10X lift in past six years, 25X in next six thanks to real-time tuning, sleepy video

AMD has announced an ambitious 2020 goal: a 25-times improvement in the power efficiency of its APUs, the company's term for accelerated processing units – on-die mashups of CPU, GPU, video-accelerator, or other types of cores.

Although AMD has committed itself to that goal, it won't be an easy one to achieve. Efficiency gains are no longer as automatic as they were in the past, when merely shrinking the chip-baking process resulted in steady improvements.

"The efficiency trend has started to fall off, and it just boils down to the physics," AMD Corporate Fellow Sam Naffziger told The Reg on Friday. "We've scaled the dimensions in the transistors as far as they can go in some respects, and the voltage has stalled out at about one volt."

Robert Dennard's process-scaling guidelines, as outlined in his landmark 1974 paper, have hit a wall, Naffziger said. "The ideal Dennard scaling, where you scale the device in three dimensions and get a factor of four energy improvement, yes, that's at an end. We still get an energy improvement – maybe 30, 50 per cent per generation, which is nice – but it's not what it used to be."

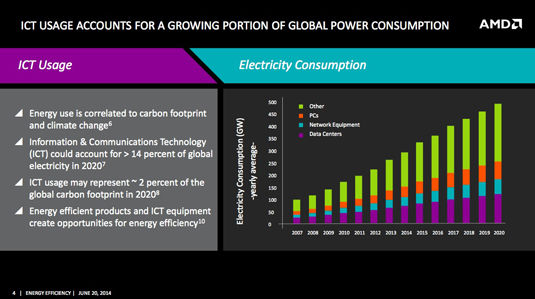

In other words, process shrinks alone can no longer deliver the efficiency gains needed to keep up with the information and communications technology (ICT) industry's growing hunger for power.

And that hunger is voracious. According to work by Stanford University's Jonathan Koomey, ICT's power demand is growing fast. The explosion of connected devices, the surging number of internet users, the vast amount of digital content being consumed, and the data-center expansion needed to handle it could together push ICT past 14 per cent of the world's electricity in 2020, a share that would account for around 2 per cent of the global carbon footprint that year.

"Our intention," Naffziger said, "is to overcome the slowdown in energy-efficiency gains through intelligent power management and through architectural and software optimizations that squeeze a lot more out of the silicon than we have in the past."

When Naffziger talks about energy efficiency, by the way, he's referring to a metric set by the US Environmental Protection Agency's voluntary Energy Star program called "typical use" efficiency. "There's a definition in the Energy Star specification – a weighted sum of power states that has been shown to be representative of what the power consumption is for typical uses," he said. "What we're doing is optimizing for that typical-use scenario."
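A "weighted sum of power states" is easiest to see with numbers. The sketch below is a minimal illustration, assuming hypothetical state names, time weights and wattages (none of them come from the Energy Star specification or from AMD): it averages each state's power draw by the share of time spent in it, then treats efficiency as performance divided by that typical-use power, with made-up performance scores.

```python
"""Sketch of a "typical use" power figure as a weighted sum of power states.

All state names, weights, wattages and performance scores are hypothetical
placeholders, not values from the Energy Star specification or from AMD.
"""

from typing import Dict


def typical_use_power(power_w: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted-average power draw (watts) across power states.

    `weights` is the assumed fraction of time spent in each state; it must sum to 1.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("state weights must sum to 1")
    return sum(power_w[state] * w for state, w in weights.items())


# Hypothetical share of time a laptop spends in each power state.
WEIGHTS = {"off": 0.25, "sleep": 0.35, "long_idle": 0.10, "short_idle": 0.30}

# Hypothetical per-state power draw (watts) for an older and a newer chip.
old_chip = {"off": 0.5, "sleep": 1.0, "long_idle": 8.0, "short_idle": 12.0}
new_chip = {"off": 0.3, "sleep": 0.6, "long_idle": 3.0, "short_idle": 5.0}

old_p = typical_use_power(old_chip, WEIGHTS)
new_p = typical_use_power(new_chip, WEIGHTS)

# Assumed efficiency metric: performance per watt of typical-use power,
# using hypothetical relative performance scores.
old_perf, new_perf = 1.0, 2.0
gain = (new_perf / new_p) / (old_perf / old_p)
print(f"old: {old_p:.3f} W, new: {new_p:.3f} W, efficiency gain: {gain:.2f}x")
```

Because idle states dominate the weighting in this made-up example, cutting idle power moves the typical-use figure far more than trimming the already-small off and sleep numbers, which is the kind of trade-off that optimizing for a typical-use metric rewards.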

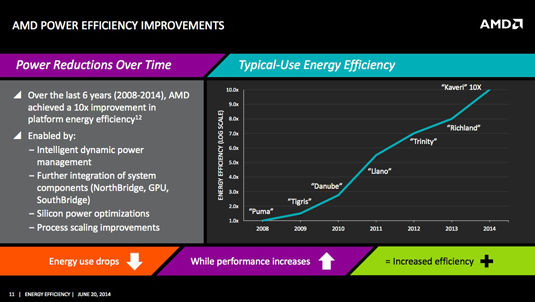

According to Naffziger, AMD has improved typical-use power efficiency by about 10 times over the past six years – from 2008's "Puma" to 2014's "Kaveri", both aimed at laptops.

AMD has done a lot in the past six years, and now plans to more than double its pace

That's good, but not great, Naffziger admitted. Had silicon scaling's efficiency improvements continued at the pace they enjoyed during the heyday of Dennard scaling, he said, that increase would have been 14-fold.

"We need to do better, going forward," he said, noting that AMD has a roadmap of how it plans to boost that power-efficiency curve to achieve a 25-times improvement by the end of this decade – an effort the company is calling "25X20".

Not that there hasn't been a lot of good work done in this area by AMD and others. Power management has become smarter and more fine-grained in recent years, and the trend towards putting more cores and other components onto the same die has reduced the power wasted moving bits back and forth between separate dies, to name just two advances.

And then there's AMD's embrace of heterogeneous system architecture (HSA) across its product line. Through heterogeneous Uniform Memory Access (hUMA) and heterogeneous Queuing (hQ), CPU and GPU cores share the same system memory, so the CPU no longer has to feed the GPU with data; the GPU can do that itself. In addition, using the GPU as a compute core for suitable tasks lets the APU save power by offloading that work from the less-efficient CPU.
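The saving from a shared memory space comes largely from data that no longer has to be copied. The toy model below is a sketch under stated assumptions, not a description of how HSA is implemented: it counts the bytes the CPU must shuttle for one GPU offload in a copy-based design (inputs out, results back) versus a shared-memory design, and applies a hypothetical per-byte energy cost (`PJ_PER_BYTE` is a placeholder, not an AMD figure).

```python
"""Toy model: bytes copied per GPU offload with and without shared memory.

PJ_PER_BYTE is a hypothetical placeholder energy cost, not a measured or AMD value.
"""

from dataclasses import dataclass


@dataclass
class Offload:
    input_bytes: int   # data the GPU needs to read
    output_bytes: int  # results the CPU needs back


def bytes_copied(job: Offload, shared_memory: bool) -> int:
    """Bytes the CPU must move between memories for one GPU offload."""
    if shared_memory:
        # CPU and GPU address the same buffer; only a pointer changes hands.
        return 0
    # Copy inputs into GPU memory, then copy results back to system memory.
    return job.input_bytes + job.output_bytes


PJ_PER_BYTE = 100.0  # hypothetical energy cost of copying, picojoules per byte

job = Offload(input_bytes=64 * 2**20, output_bytes=16 * 2**20)
for shared in (False, True):
    moved = bytes_copied(job, shared)
    energy_mj = moved * PJ_PER_BYTE * 1e-9  # 1 pJ = 1e-9 mJ
    print(f"shared={shared}: {moved} bytes copied, ~{energy_mj:.1f} mJ")
```

The model deliberately ignores the cost of the GPU reading the data itself, which is paid in both designs; it isolates only the staging copies that a shared address space removes.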