What makes a tier-1 enterprise flash array a superior beast?

Storage monoliths enter the fray

Enterprise tier-1 storage arrays are a breed apart, focusing on providing fast access to vast amounts of data without either losing data or limiting access to it.

This sets them apart from the mass of mainstream storage arrays, which have dual controllers and are therefore limited in how much data they can store and how many concurrent accesses they can serve.

There are four main tier-1 arrays on the market:

- EMC's VMAX

- IBM's DS8000

- Hitachi Data Systems' (HDS) VSP

- HP’s XP, an OEM version of the Hitachi VSP

Typically such arrays have more than two controllers and an internal backplane or fabric to link the controllers with the storage shelves and ensure there is enough internal bandwidth to service the high number of I/O requests coming in.

The VMAX 40K, for example, had a 4PB capacity with 2,000 2TB disk drives. Its controllers, known as storage engines, are linked by a Virtual Matrix interconnect, and there can be up to eight of them.

These arrays are highly available: data access continues if a controller fails, and no data is lost if a disk drive fails. Updates to the system software and firmware should be applied without hindering access to data.

This class of array also has a rich set of data-management features, such as replication to another array, either local or remote, and snapshots.

Suppliers assert that the array operating system needs two or more years of development and real-world testing to be truly reliable. Overall these arrays are highly available and highly reliable.

The key components are:

- More than two controllers

- Internal interconnect fabric

- Scale-up rather than scale-out design

- High performance for a large number of concurrent accesses

The scale-up nature of their design, adding controllers and disk shelves as a customer's needs grow, is why some call these arrays monolithic. They are very large single systems, in contrast to dual-controller arrays, which can scale out to add capacity and performance by linking separate boxes, using clustering for example.

The internal fabric is vital for performance. Other things being equal, though, an array that lacks one but has the other tier-1 enterprise features does not lose its tier-1 status.

Fine performance

On that basis, the main features of a tier-1 enterprise-class array are performance, scaling up past two controllers and reliability.
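Those three criteria can be expressed as a simple checklist. This Python sketch is purely illustrative: `ArrayProfile`, `is_tier1` and the sample arrays are hypothetical, and reducing "high performance" and "reliability" to yes/no flags is a simplification of judgements that in practice rest on benchmarks and years of field experience.

```python
from dataclasses import dataclass


@dataclass
class ArrayProfile:
    """Hypothetical description of a storage array, for illustration only."""
    name: str
    controllers: int
    high_performance: bool
    proven_reliability: bool       # e.g. a mature, field-tested array OS
    internal_fabric: bool = False  # helps performance but is not decisive


def is_tier1(array: ArrayProfile) -> bool:
    """Apply the three main criteria: performance, more than two
    controllers (scale-up) and reliability."""
    # The internal fabric is deliberately not checked: lacking one does
    # not by itself cost an array its tier-1 status.
    return (
        array.high_performance
        and array.controllers > 2
        and array.proven_reliability
    )


fast_dual = ArrayProfile("fast-dual-controller", controllers=2,
                         high_performance=True, proven_reliability=True)
big_array = ArrayProfile("eight-engine", controllers=8,
                         high_performance=True, proven_reliability=True,
                         internal_fabric=True)
print(is_tier1(fast_dual))  # False
print(is_tier1(big_array))  # True
```

The point the checklist makes explicit is that raw speed alone is not enough: a two-controller array fails the test however fast it is.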

It is generally agreed that scale-out arrays do not have the claimed bullet-proof reliability of tier-1 enterprise-class arrays.

Suppose an all-flash array from a startup can equal a tier-1 array’s performance – does that make it a tier-1 enterprise class array?

We have to understand how performance is measured. It is not enough simply to say the system can run a certain number of random read or write IOPS, or that its bandwidth when writing or reading is a certain number of GBps.

We need to understand the mix of IOs in the real world, the percentage of reads and writes, the size of the data blocks and their distribution.
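Those variables can be captured in a workload profile. The sketch below is our own illustration, not an SPC tool: `WorkloadProfile`, `sample_ios` and every number in the example (a 70/30 read/write split, mostly random IO, 4KiB to 64KiB blocks) are hypothetical, chosen only to show the kind of description a meaningful performance test needs.

```python
import random
from dataclasses import dataclass, field


@dataclass
class WorkloadProfile:
    """Hypothetical IO mix; the example numbers below are illustrative, not measured."""
    read_fraction: float    # share of IOs that are reads
    random_fraction: float  # share of IOs at random (vs sequential) offsets
    block_sizes_kib: dict[int, float] = field(default_factory=dict)  # size -> weight


def sample_ios(profile: WorkloadProfile, n: int, seed: int = 0):
    """Draw n synthetic IOs as (operation, access pattern, block size in KiB)."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    sizes = list(profile.block_sizes_kib)
    weights = list(profile.block_sizes_kib.values())
    ios = []
    for _ in range(n):
        op = "read" if rng.random() < profile.read_fraction else "write"
        pattern = "random" if rng.random() < profile.random_fraction else "sequential"
        size = rng.choices(sizes, weights=weights)[0]
        ios.append((op, pattern, size))
    return ios


oltp_like = WorkloadProfile(read_fraction=0.7, random_fraction=0.9,
                            block_sizes_kib={4: 0.6, 8: 0.3, 64: 0.1})
ios = sample_ios(oltp_like, 10_000)
read_share = sum(1 for op, _, _ in ios if op == "read") / len(ios)
print(f"reads: {read_share:.1%}")
```

Two arrays can rank differently under two such profiles, which is why a single headline IOPS number says little on its own.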

Unless a benchmark is run on your own workloads, any performance rating is going to be artificial, but you can at least get an industry-standard rating by looking at the Storage Performance Council (SPC) benchmarks.

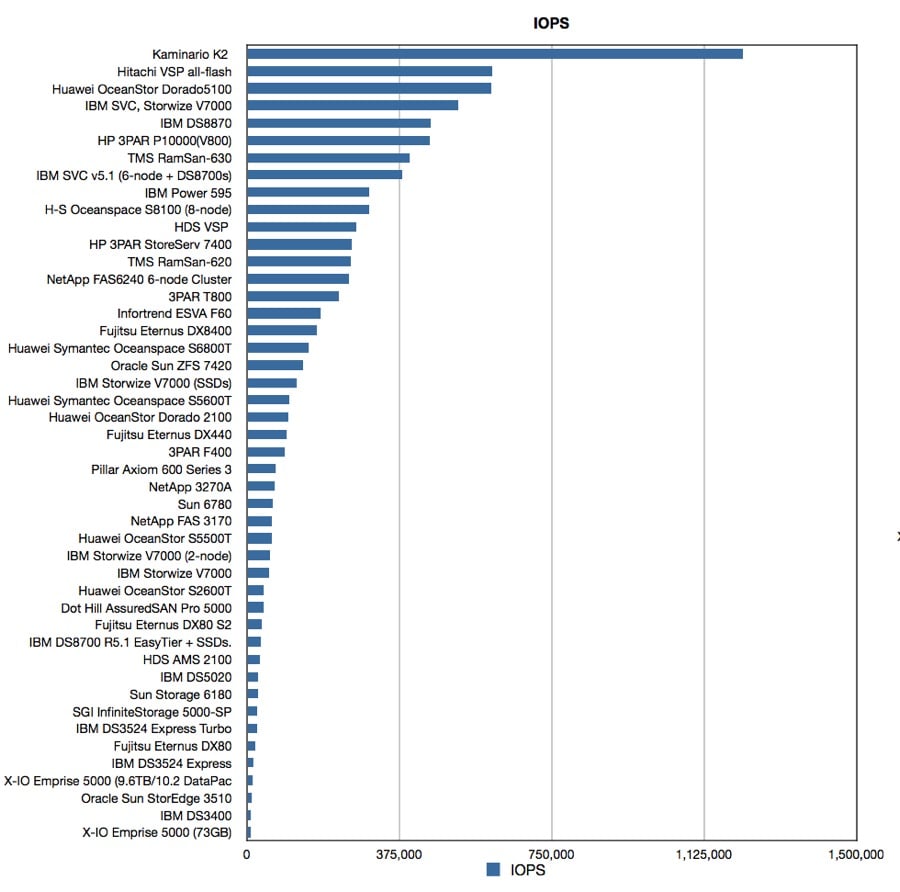

The SPC-1 benchmark provides a rating of how well a storage array does at random IO, with SPC-2 doing the same for sequential IO. A check of results for various arrays is quite informative:

This chart shows SPC-1 rankings for the Hitachi VSP array in disk (269,506.69) and all-flash (602,019.47) versions and IBM’s DS8870 (451,082.27 IOPS)

The top-scoring system is a Kaminario K2 all-flash array with 1,239,898 IOPS. Does that make this a tier-1 enterprise-class array?
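Putting the quoted SPC-1 figures side by side makes the gaps concrete. This short Python snippet uses only the numbers given above; the disk-based VSP is an arbitrary choice of baseline. On these figures the all-flash VSP delivers roughly 2.2 times the disk VSP's IOPS, and the K2 roughly twice the all-flash VSP's.

```python
# SPC-1 IOPS figures as quoted in the text
spc1_iops = {
    "Hitachi VSP (disk)": 269_506.69,
    "IBM DS8870": 451_082.27,
    "Hitachi VSP (all-flash)": 602_019.47,
    "Kaminario K2": 1_239_898.00,
}

baseline = spc1_iops["Hitachi VSP (disk)"]  # arbitrary reference point

# Print each system's score and its multiple of the disk-based VSP
for name, iops in sorted(spc1_iops.items(), key=lambda kv: kv[1]):
    print(f"{name:<24} {iops:>14,.2f} IOPS  {iops / baseline:5.2f}x disk VSP")
```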

Test of endurance

We would say not: despite outperforming mainstream vendors’ tier-1 arrays, it lacks the enterprise-class array features mentioned above.

No other all-flash or hybrid array startup systems appear in the SPC-1 rankings, so we cannot use them to compare Pure Storage or Nimble Storage, for example, with the systems listed there.

There is also no independent, objective measure of these startups' performance based on an industry-standard workload. Customers who want to evaluate such an array have to run their own internal tests.

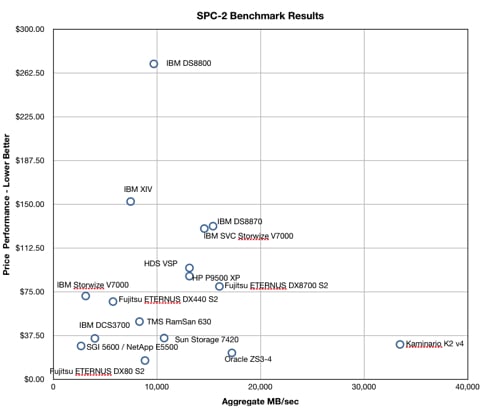

How about the SPC-2 benchmark, the sequential IO one? Here is a chart of some SPC-2 results:

This chart is a combination of Mbps throughput and price/performance. That is based on list price, so it is less relevant in the real world where discounts may apply, but we still get a worthwhile comparison.
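SPC-2 expresses price-performance, as we understand it, as the tested system's total price divided by its throughput in MBps. The sketch below shows why list-price comparisons can mislead once discounts enter the picture. The function name, the discount parameter and both example systems are hypothetical; the prices and throughputs are made up for illustration.

```python
def price_performance(list_price_usd: float, mbps: float, discount: float = 0.0) -> float:
    """Dollars per MBps of sequential throughput (lower is better).

    list_price_usd: price of the tested configuration (hypothetical input)
    mbps: measured throughput in megabytes per second
    discount: fraction off list price (0.0 = list, 0.3 = 30% off); an
              assumption, since real discounts are negotiated and unpublished
    """
    if mbps <= 0:
        raise ValueError("throughput must be positive")
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return list_price_usd * (1.0 - discount) / mbps


# Two made-up systems
a_list = price_performance(1_000_000, 10_000)                    # 100.0 $/MBps at list
b_list = price_performance(600_000, 5_000)                       # 120.0 $/MBps at list
b_discounted = price_performance(600_000, 5_000, discount=0.3)   # about 84 $/MBps
print(a_list, b_list, round(b_discounted, 1))
```

At list price system A looks cheaper per MBps, but a 30 per cent discount on B reverses the ranking, which is the sense in which list-price figures are a rough guide rather than a buying decision.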

The chart shows IBM's DS8870, HDS’s VSP and HP’s XP systems, which are again soundly beaten on sequential throughput by the Kaminario array. But the same reasoning applies: the K2 is not a tier-1 enterprise-class array because it lacks the features we have already mentioned.

In general, then, it seems that no startup offers a scale-up all-flash array that scales past two controllers and has the necessary reliability features.

This logic dictates that the only straightforward way to build a tier-1 all-flash enterprise array is to put flash inside an existing one, such as the HDS VSP.

You can also buy all-flash versions of IBM's DS8000 and EMC's VMAX, and we would assume that they are better performers than their disk-based versions, although we have no SPC lens, as it were, through which to verify this.

However, suppliers such as HP, with its all-flash 3PAR system, and NetApp, with its all-flash FAS series (particularly the top-end 8080 EX), would say that not only do they match the performance of a VMAX, VSP or DS8000 monolithic array, they also have scale-up attributes, because they can use more than two controllers by, for example, clustering nodes.

The 8080 EX can scale past 4.5PB of flash, and HP's 3PAR 7450 can have 460TB of raw flash and 1.3PB of effective capacity after deduplicating redundant data. Capacity is no longer an obstacle to scale.
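The 7450 figures imply a data-reduction ratio, which is simple arithmetic on the two numbers quoted. This sketch assumes both use decimal units (1PB = 1,000TB) and measure the same pool; the function name is our own, and the real ratio depends on how well a given customer's data deduplicates.

```python
TB_PER_PB = 1000  # decimal units, as storage capacity is usually quoted


def implied_reduction_ratio(raw_tb: float, effective_tb: float) -> float:
    """Effective capacity divided by raw capacity."""
    return effective_tb / raw_tb


raw_tb = 460                      # 7450 raw flash, as quoted
effective_tb = 1.3 * TB_PER_PB    # 1.3PB effective capacity, as quoted
ratio = implied_reduction_ratio(raw_tb, effective_tb)
print(f"implied data-reduction ratio: {ratio:.1f}:1")
```

In other words, the effective-capacity claim assumes each raw terabyte holds roughly 2.8TB of logical data.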

Risky disks

These vendors would assert that their array operating systems have many years of development behind them and are battle hardened. They also have features such as replication and snapshots to provide the same rich data-management services as VMAX and VSP-class systems.

The vendors would also say that the most unreliable element of a disk-drive array is the disk drive. When an array has a thousand or more drives, disk failures become a regular occurrence. Using SSDs instead of disk drives removes that mechanical risk from the equation.
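Why failures become routine at that scale can be shown with a back-of-the-envelope model. This sketch assumes drives fail independently at a constant rate; the 2 per cent annualised failure rate (AFR) is a hypothetical round number, not a figure from any vendor, and the 2,000-drive count is the VMAX 40K example given earlier. Both function names are our own.

```python
def expected_failures(n_drives: int, afr: float, days: float = 365.0) -> float:
    """Expected drive failures over `days`, assuming independent drives."""
    return n_drives * afr * days / 365.0


def prob_any_failure(n_drives: int, afr: float, days: float) -> float:
    """Probability that at least one of n_drives fails within `days`.

    Treats afr as each drive's chance of failing within a year and assumes a
    constant hazard, so one drive survives `days` with (1 - afr) ** (days / 365).
    """
    survive_one = (1.0 - afr) ** (days / 365.0)
    return 1.0 - survive_one ** n_drives


AFR = 0.02   # hypothetical 2% annualised failure rate, not a measured figure
N = 2_000    # drive count from the VMAX 40K example

print(f"{expected_failures(N, AFR):.0f} failures per year")
print(f"{expected_failures(N, AFR, days=7):.2f} failures per week")
print(f"{prob_any_failure(N, AFR, days=7):.0%} chance of at least one failure in a week")
```

Under these assumptions a 2,000-drive array loses around 40 drives a year, so rebuilding after a failure is a near-weekly event rather than an exception.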

Because flash storage responds to requests so much faster than disk, you don't need as many storage controllers to handle the same number of user access requests.
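One way to see the effect is Little's law, which says the average number of requests in flight equals the request rate multiplied by the time each request spends in the system. This is our own illustration rather than the vendors' argument, and the 200,000 IOPS target and the 5ms disk and 0.5ms flash latencies are hypothetical round numbers. Roughly speaking, fewer outstanding requests means less queueing and state for the controllers to manage.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: average requests in flight = arrival rate x time in system."""
    return iops * (latency_ms / 1000.0)


TARGET_IOPS = 200_000  # hypothetical workload

# Hypothetical average service latencies for each medium
for medium, latency_ms in [("disk", 5.0), ("flash", 0.5)]:
    in_flight = outstanding_ios(TARGET_IOPS, latency_ms)
    print(f"{medium:<6} {in_flight:>8.0f} IOs in flight")
```

With a tenfold drop in latency, the same request rate needs a tenth as many requests queued at any moment.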

All in all, arrays previously rated as high-end mid-tier systems, such as FAS and 3PAR, can satisfy tier-1 enterprise-class array requirements once they have flash storage inside. Their acquisition, power, cooling and operating costs are also lower than those of a legacy disk-drive array.

Flash-based arrays take up far less data-centre space than disk-drive arrays of equivalent capacity and performance, need less power to operate and generate much less heat.

If nothing else, workloads could be selectively moved from a legacy enterprise array onto newer all-flash arrays. We might envisage workloads with low latency requirements or a high proportion of random IO requests being candidates for this.
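A first pass at choosing which workloads to move could be a simple filter on those two properties. This sketch is hypothetical throughout: the `Workload` fields, the `flash_candidates` function, the 1ms and 70 per cent thresholds and the three sample workloads are assumptions for illustration, and real thresholds would come from measuring your own systems.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_target_ms: float   # response time the application needs
    random_io_fraction: float  # share of its IOs that are random


def flash_candidates(workloads, max_latency_ms=1.0, min_random_fraction=0.7):
    """Names of workloads worth moving to an all-flash array.

    A workload qualifies if it needs low latency OR does mostly random IO.
    Both thresholds are hypothetical defaults.
    """
    return [
        w.name for w in workloads
        if w.latency_target_ms <= max_latency_ms
        or w.random_io_fraction >= min_random_fraction
    ]


estate = [
    Workload("oltp", latency_target_ms=0.5, random_io_fraction=0.9),
    Workload("backup", latency_target_ms=50.0, random_io_fraction=0.05),
    Workload("reporting", latency_target_ms=10.0, random_io_fraction=0.8),
]
print(flash_candidates(estate))  # ['oltp', 'reporting']
```

Sequential, latency-tolerant jobs such as backup stay on the legacy array, where disk handles them adequately.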

Customers for these high-end arrays are famously conservative and require a lot of convincing that a new candidate array is as good as their existing ones.

It begins to look, though, as if high-end flash arrays from HP and NetApp can now compete at this level and give the incumbents a run for their money. ®