
HDS embiggens its object array by feeding it more spinning rust

Gets erasure coding as well

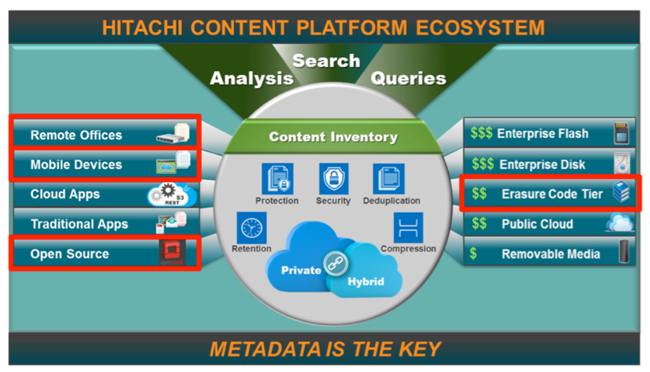

HDS has bulked up its HCP object storage offering with an erasure coding capacity tier, remote office and mobile access to objects, filer data stores and OpenStack support. Why? Preparations for the coming Internet of Things, we think.

Hitachi's Content Platform (HCP) is the central object storage facility in a three-part offering. It's flanked by HDI, the Hitachi Data Ingestor, which sucks in data, and HCP Anywhere, the access provider and manager facility. All three have been updated.

Back in June last year HDS added a public cloud backend to HCP as a storage tier and more front-end access. We wrote: "HCP Anywhere provides mobile device access to HCP storage and HDI inputs data into HCP, being a kind of cloud data on-ramp. The latest releases of HCP Anywhere and HDI provide always-on, secure access to data from any IP-enabled device, including mobile phones, tablets, and remote company locations."

Public cloud backends include Hitachi's Cloud Service for Content Archiving, S3, Azure, Verizon Cloud and Google Cloud Storage.

Just six months later HDS has added an on-premises capacity tier and more access options. A chart neatly illustrates the territory HCP (Hitachi Content Platform) has moved into:

The erasure coding comes in the shape of a new storage tier using a new box, the HCP S10. Existing tiering software routines move data to it from other tiers and off it to the other tiers as needed.

The S10 has 60 x 3.5-inch disk drives in a 4U enclosure driven by a pair of 6-core CPUs. Each S10 is a node and up to 80 nodes can be connected to the HCP controller for a total of 18PB. The nodes connect to the HCP using an S3 interface across Ethernet. They implement erasure coding for data protection and faster-than-RAID rebuilds.

The 80-node/18PB maximum capacity implies 225TB/node. Spread across 60 drives, that would, in turn, imply 3.75TB drives. HDS is presenting usable capacity, after erasure coding overhead, and confirms it’s using 4TB drives. HDS says the capacity range starts at 112TB, which implies a half-populated enclosure.
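For readers who want to check the sums, here is a minimal Python sketch of that arithmetic. It uses only the figures quoted above and assumes decimal units (1PB = 1,000TB). The constant and variable names are our own, and the usable-to-raw ratio it prints is simply what those figures imply, not a specification confirmed by HDS.

```python
# Back-of-envelope check of the HCP S10 capacity figures quoted in the article.
# Assumption: decimal units, 1 PB = 1000 TB.
TB_PER_PB = 1000

MAX_CAPACITY_PB = 18   # maximum usable capacity across all nodes
MAX_NODES = 80         # maximum S10 nodes per HCP controller
DRIVES_PER_NODE = 60   # 3.5-inch drives in a full 4U enclosure
RAW_DRIVE_TB = 4       # raw drive size confirmed by HDS

# Usable capacity per node and per drive slot
usable_tb_per_node = MAX_CAPACITY_PB * TB_PER_PB / MAX_NODES
usable_tb_per_drive = usable_tb_per_node / DRIVES_PER_NODE

# Raw capacity per node, and the usable/raw ratio these figures imply
raw_tb_per_node = DRIVES_PER_NODE * RAW_DRIVE_TB
implied_usable_ratio = usable_tb_per_node / raw_tb_per_node

# A half-populated enclosure (30 drives) at the same usable rate
half_populated_tb = (DRIVES_PER_NODE // 2) * usable_tb_per_drive

print(f"Usable per node:        {usable_tb_per_node} TB")
print(f"Usable per drive:       {usable_tb_per_drive} TB")
print(f"Raw per node:           {raw_tb_per_node} TB")
print(f"Implied usable ratio:   {implied_usable_ratio:.2%}")
print(f"Half-populated node:    {half_populated_tb} TB")
```

The half-populated figure comes out fractionally above the 112TB starting point HDS quotes, which is consistent with the half-full enclosure reading.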

Why has HDS added an on-premises capacity tier? It's not as inexpensive to use as public cloud storage but it provides faster, local access. Our best guess is that HDS is looking forward to data flooding in from the coming Internet of Things (IoT), being able to store it cost effectively, protect it better than RAID with erasure coding, and then feed it fast to on-premises analytics routines.

The data provider, HDI, has become an access gateway, a file-serving gateway for remote and branch offices, as well as being a data on-ramp to the cloud. It can be used to manage quotas at remote sites and for cloud storage from a single interface. HDS says that HCP can be used instead of traditional file servers.

HCP Anywhere has added access for mobile users to files in existing NAS systems, as well as providing file sync ’n share capability. Users can choose from multiple languages for their client systems. HDI can also be provisioned and managed remotely through HCP Anywhere.

HCP can be used instead of Swift in OpenStack projects, as it supports the Swift API, the Keystone API for authentication, the Horizon management interfaces and Glance for VM images.

To find out more, read two HDS blogs by HDS' Hu Yoshida: this one and this one. ®