This article is more than 1 year old

Internet Doomsday scenario: How the web could suddenly fall apart

Small tweak needed to global network's foundation

Analysis: Engineers have recommended a small but important change to the internet's underlying structure in order to avoid a possible doomsday scenario.

A report [PDF] from the Root Server System Advisory Committee (RSSAC) argues that a key parameter in the internet's address books – namely, how long the digital signatures protecting that information remain valid – needs to be increased in order to avoid a possible shutdown of parts of the global network.

The internet's underlying infrastructure – root servers and top-level domains (TLDs) – is expanding and spreading physically across the planet. While this adds stability overall, it also increases the chance that some individual part of the network, such as a single root server instance, will be cut off.

A natural disaster such as an earthquake, or an accident such as a ship cutting a submarine cable, could take root server nodes or name servers offline for several days or longer.

While the rest of the internet would continue as normal, the loss of key components could cause outdated information to be stored and passed on by some parts of the network, which would then conflict with more up-to-date information elsewhere. Result: conflict, confusion, and possible chaos as people lose a reliable internet.

The report notes that the change is not "urgent" and the problem has yet to occur, but the group did manage to create the problem on a test bed and wants the issue fixed before it happens in the real world.

Why has this popped up now?

This doomsday scenario has only appeared recently with the adoption of the new DNSSEC security protocol. DNSSEC adds digital signatures to the information sent between the internet's foundational building blocks to make it harder for the system to be hacked.

Because of that additional security, however, if one of the many root server instances stops receiving updates for four days or more while still answering queries, it could have a dangerous knock-on effect on millions of servers across the globe, which could then provide conflicting information for up to six days.

That conflicting information would likely cause validating servers to reject answers for large parts of the internet, and as a result, millions of internet users would not be able to reach websites or send email. In other words, a breakdown of the system.

Although theoretical at this stage, the problem is becoming more likely as the internet continues to expand through the rollout of hundreds of new TLDs and more root server mirrors, and as DNSSEC adoption grows.

The doomsday scenario

So what are we talking about in technical terms?

Every record in the internet's address books, the Domain Name System (DNS), carries a key value: time to live, or TTL.

TTL tells whatever machine receives it how long that information should be deemed valid. After the TTL expires – which can be anywhere from seconds to days – the machine will discard it and look for updated information. This is a critical function for a global network that is being constantly updated.
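To make the TTL idea concrete, here is a minimal Python sketch of a cache that honours TTLs. It is illustrative only: `TTLCache` is a hypothetical class, not code from any real resolver, and the clock is injectable so that expiry can be shown without waiting a day.

```python
import time


class TTLCache:
    """Minimal DNS-style cache: each answer is kept only until its TTL runs out."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock    # injectable so expiry can be demonstrated without waiting
        self._entries = {}     # name -> (value, time at which the entry expires)

    def put(self, name, value, ttl_seconds):
        # Record the moment this answer stops being valid.
        self._entries[name] = (value, self._clock() + ttl_seconds)

    def get(self, name):
        entry = self._entries.get(name)
        if entry is None:
            return None        # not cached: the caller must go and look it up
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]   # TTL has run out: discard and force a fresh lookup
            return None
        return value


# Usage with a fake clock (times in seconds); the name and address are illustrative.
fake_now = [0]
cache = TTLCache(clock=lambda: fake_now[0])
cache.put("example.", "192.0.2.1", ttl_seconds=86_400)  # one-day TTL
fake_now[0] = 3_600
print(cache.get("example."))   # 192.0.2.1 (still within its TTL)
fake_now[0] = 86_400
print(cache.get("example."))   # None (expired, so it must be fetched again)
```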

In deciding a particular TTL, engineers need to balance a number of things. If the data is updated frequently, say, every minute, then it makes sense to have a short TTL so that you stay up-to-date. If updated once a day, then a longer TTL makes sense.

The load on the system matters too. Not only does it make no sense to have a system look for updated information every second if that information changes only every hour, but doing so would also cause unnecessary network congestion.

If you consider the millions of servers/devices online, then you are looking at billions of pointless queries. It's poor network management. Additionally, if you set an unnecessarily short TTL, you are making the system less stable, since the information would be stored for less time than is necessary.
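The "billions of pointless queries" point is simple arithmetic. The Python sketch below assumes a hypothetical one million caching servers, each keeping one record continuously in use and re-fetching it the moment its TTL runs out; real traffic depends on how often the record is actually requested, and `queries_per_day` is an illustrative helper.

```python
SECONDS_PER_DAY = 86_400


def queries_per_day(ttl_seconds: int, caching_servers: int) -> int:
    """Upper bound on daily queries for one record if every caching server
    keeps it in use and re-fetches it each time its TTL expires
    (counting whole refreshes per day)."""
    refreshes_per_server = SECONDS_PER_DAY // ttl_seconds
    return refreshes_per_server * caching_servers


# Hypothetical: one million caching servers, a record that changes once a day.
for ttl in (1, 3_600, 86_400):
    print(f"TTL {ttl} s -> {queries_per_day(ttl, 1_000_000):,} queries/day")
# TTL 1 s -> 86,400,000,000 queries/day
# TTL 3600 s -> 24,000,000 queries/day
# TTL 86400 s -> 1,000,000 queries/day
```

For data that only changes daily, the one-second TTL generates 86,400 times the traffic of the one-day TTL without delivering any fresher information.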

Changes to the internet's root zone and to individual top-level-domain zones happen much, much less often than elsewhere on the internet. TLD data typically changes about once a day (the report found that 60 per cent of TLDs use a TTL of 86,400 seconds, or one day), while the root zone itself is updated twice a day.

Related to that, the root servers, which serve copies of the main root zone, work to a "start of authority" (SOA) "expire" value of seven days (604,800 seconds). That means a root server instance that stops receiving updates can keep storing and serving its copy of the zone for up to seven days before discarding it (although it should normally have been updated many times before then).
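The SOA "expire" behaviour can be sketched like this, assuming a root server instance behaves like a standard secondary name server: it keeps answering from its copy until the expire interval has passed without a successful refresh. The function name and the expire value in the example are illustrative, not taken from the report.

```python
from datetime import datetime, timedelta


def still_answers(last_refresh: datetime, now: datetime, soa_expire: timedelta) -> bool:
    """A standard secondary keeps serving its copy of a zone until the SOA
    'expire' interval has passed without a successful refresh."""
    return now - last_refresh < soa_expire


# Illustrative values only: an instance cut off from updates on 1 January.
cut_off = datetime(2015, 1, 1)
expire = timedelta(days=7)   # hypothetical expire value for the example
print(still_answers(cut_off, cut_off + timedelta(days=4), expire))  # True: stale but still answering
print(still_answers(cut_off, cut_off + timedelta(days=8), expire))  # False: copy discarded
```

The gap between "stale" and "discarded" is exactly the window in which an out-of-date instance can hand old data to anyone who asks.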

The big change, however, has come with the introduction of DNSSEC and its two signing keys – the main ZSK (zone signing key) and the related KSK (key signing key).

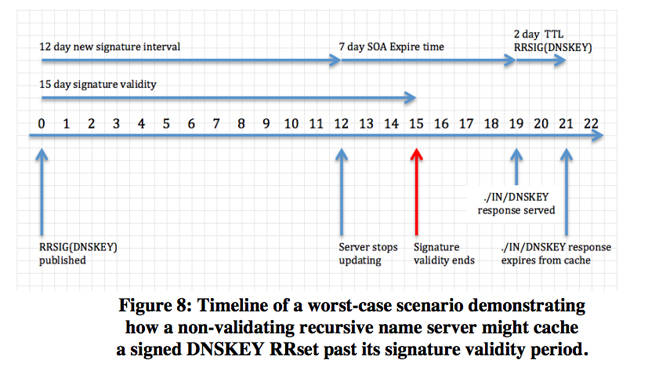

These keys are used to verify that the information coming from root servers and TLDs really does come from them and has not been tampered with. Currently, signatures made with the ZSK have a "validity period" of 10 days, and those made with the KSK, 15 days.
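As a rough illustration of what a "validity period" means in practice, here is a minimal Python sketch of the time check a validating server performs. Each DNSSEC signature (an RRSIG record) carries an inception and an expiration time, and a validator must reject the signature outside that window. Real RRSIG records store these as 32-bit timestamps compared with serial-number arithmetic; this sketch uses ordinary `datetime` values, and `signature_usable` and the dates are illustrative.

```python
from datetime import datetime, timedelta, timezone


def signature_usable(inception: datetime, expiration: datetime, now: datetime) -> bool:
    """DNSSEC-style time check: a signature may only be relied on between the
    inception and expiration times recorded in its RRSIG record."""
    return inception <= now <= expiration


# Illustrative: a signature made on 1 January with a 10-day validity period.
signed = datetime(2015, 1, 1, tzinfo=timezone.utc)
expires = signed + timedelta(days=10)
print(signature_usable(signed, expires, signed + timedelta(days=5)))   # True
print(signature_usable(signed, expires, signed + timedelta(days=11)))  # False
```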

It is the combination of the root zone files' TTL and the DNSSEC keys' validity period that causes the potential problem. The paper identifies a worst-case scenario for the KSK:

- A root name server instance stops receiving zone updates for at least four days.

- A non-validating recursive name server queries the out-of-date root server instance for the root zone NS RRset (aka "priming query") and caches the response.

- A validating recursive name server forwards its queries to the non-validating recursive server described above.

In this case, a "non-validating recursive name server" is simply a server that fetches information such as the root zone's list of name servers (as millions of servers across the internet do) and caches it for end users, without checking its DNSSEC signatures.

A "validating recursive name server", by contrast, does the same job but also checks that the DNSSEC signatures on the data are valid. Again, millions of servers and devices do this every day, including web browsers with validating plug-ins. The paper identifies a similar worst-case scenario for the ZSK.
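The resolver chain in the worst-case list can be sketched in a few lines of Python. This is a toy model under stated assumptions: times are whole days, the non-validating server's own TTL handling is left out (it simply hands back whatever it cached), and `CachedAnswer`, `non_validating_answer` and `validating_answer` are hypothetical names. The point is that only the validating server notices the expired signature, and it responds with an error (SERVFAIL) rather than the data.

```python
from dataclasses import dataclass


@dataclass
class CachedAnswer:
    data: str                 # e.g. the root NS RRset from a priming query
    signature_expires: int    # day on which the attached RRSIG expires


def non_validating_answer(cache: dict, name: str) -> CachedAnswer:
    # Returns whatever is cached, signature included, without checking it.
    return cache[name]


def validating_answer(cache: dict, name: str, today: int) -> str:
    # Forwards to the non-validating server, then checks the signature itself.
    answer = non_validating_answer(cache, name)
    if today > answer.signature_expires:
        return "SERVFAIL"     # expired signature: the answer is rejected as bogus
    return answer.data


# Illustrative: the non-validating server cached a stale copy whose signature
# expires on day 10, but whose TTL keeps it in the cache beyond that.
cache = {".": CachedAnswer(data="root NS RRset", signature_expires=10)}
print(validating_answer(cache, ".", today=9))    # root NS RRset
print(validating_answer(cache, ".", today=11))   # SERVFAIL
```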

In both of these situations, the simple fact of a root server failing to update for a few days could lead to bad and conflicting information being spread through the network for nearly a week.

While it's impossible to know exactly what would happen from the end-user perspective, conflicting information between servers would cause enormous disruption to the underlying architecture, and so almost certainly result in error messages to users.

It's a situation that internet engineers spend their entire lives designing ways to avoid.

OK, so the solution is?

The RSSAC group looked at three main ways to alleviate the problem: two would change key root zone parameters (reduce the NS RRset TTL to three days or less, or reduce the SOA expire value to four days or less), and one would change the DNSSEC parameters.

Ultimately, it decided that the best option was to keep the root zone parameters as they have been since the early 90s and instead tweak the DNSSEC parameters. It proposes increasing the validity period of ZSK signatures from 10 days to 13 days (or more), and of KSK signatures from 15 days to 21 days (or more).
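To see why a few extra days of signature validity helps, here is a back-of-the-envelope Python sketch. It is a simplification, not the report's full model: it assumes the stale root server instance last received a freshly signed copy, that the priming response is then cached for six days (the "up to six days" figure above), and it covers only the ZSK case using the 10- and 13-day figures. `headroom_days` is a hypothetical helper.

```python
def headroom_days(days_stale: int, ttl_days: int, validity_days: int) -> int:
    """Simplified worst case (an assumption, not the report's full model):
    - the stale instance's copy was signed on day 0 with the full validity period;
    - a non-validating resolver primes from it after `days_stale` days;
    - it then caches the answer for `ttl_days`.
    Positive result = days of margin; zero or less = no margin, because the
    cached answer lasts at least as long as its signature."""
    cache_expires = days_stale + ttl_days
    return validity_days - cache_expires


STALE_DAYS = 4   # "stops receiving zone updates for at least four days"
NS_TTL_DAYS = 6  # the "up to six days" of conflicting information

print(headroom_days(STALE_DAYS, NS_TTL_DAYS, 10))  # 0: current 10-day ZSK validity, no margin
print(headroom_days(STALE_DAYS, NS_TTL_DAYS, 13))  # 3: proposed 13-day ZSK validity
print(headroom_days(STALE_DAYS, 3, 10))            # 3: the alternative of a 3-day NS TTL
```

In this toy model, lengthening the signatures and shortening the NS TTL buy the same margin; the difference is that only the first leaves the root zone's own parameters untouched.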

While this makes the digital signing process slightly less secure, since a captured signed answer can be replayed for longer before its signature expires, it avoids messing about with the underlying root zone system.

There was already a change to the ZSK validity period earlier this year over a similar concern. In that case, the ZSK's validity period was increased from seven to 10 days and there have been no noticeable negative impacts.

What it may also mean is that the grand key signing ceremony that takes place every three months will only need to produce seven sets of signatures, rather than nine. That won't, however, reduce the amount of time that internet engineers will have to sit in a secure box creating them.

The report [PDF] represents the first time that the RSSAC has recommended changes be made to the internet's underlying architecture. ®