
Forget anonymity, we can remember you wholesale with machine intel, hackers warned

Resistance coders, malware writers, and copyright infringers take note

32c3 Anonymous programmers, from malware writers to copyright infringers and those baiting governments with censorship-foiling software, may all be unveiled using stylistic programming traits which survive into the compiled binaries – regardless of common obfuscation methods.

The work, titled De-anonymizing Programmers: Large Scale Authorship Attribution from Executable Binaries of Compiled Code and Source Code, was presented by Aylin Caliskan-Islam at the 32nd annual Chaos Communication Congress on Tuesday.

It was accompanied by the publication of a paper on arXiv [PDF] titled When Coding Style Survives Compilation: De-anonymizing Programmers from Executable Binaries, written by researchers based at Princeton University in the US, one of whom is notably part of the Army Research Laboratory.

The researchers began by trying to identify malicious programmers, noting that there is "no technical difference" between security-enhancing use cases for mapping the style of posts and privacy-infringing use cases. In other words, writing style betrays the writer.

Many of the distinguishing features of the C/C++ source code analysed by the researchers, such as variable names, are removed when that code is compiled, and compiler optimisation may further alter the structural qualities of programs, obscuring authorship even further.

However, in examining the authorship of executable binaries "from the standpoint of machine learning, using a novel set of features that includes ones obtained by decompiling the executable binary to source code," the researchers were able to show "that many syntactical features present in source code do in fact survive compilation and can be recovered from [the] decompiled executable binary."

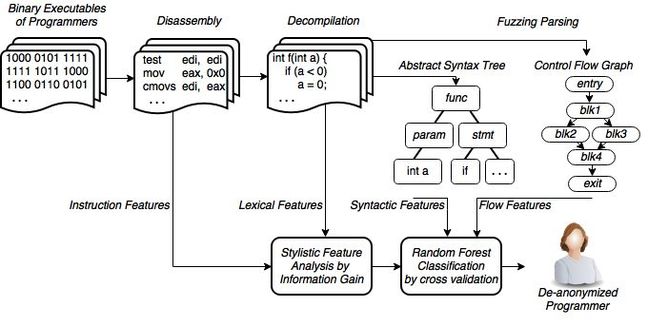

The researchers used "state-of-the-art reverse engineering methods" (as displayed in the above graph) to "extract a large variety of features from each executable binary", representing each programmer's stylistic quirks as feature vectors.

Practically, this meant running the binaries through the Netwide disassembler and then the Radare2 disassembler, before using both the "state-of-the-art" Hex-Rays decompiler and the open source Snowman decompiler to extract 426 stylometrically significant feature vectors from the binaries for comparison.
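To make the idea of a stylistic feature vector concrete, here is a minimal Python sketch. It is not the researchers' pipeline: it assumes a disassembler has already produced a list of instruction mnemonics, and the sample sequence, the vocabulary and the function names are all hypothetical. It simply turns one binary's mnemonics into relative frequencies of single instructions and instruction pairs.

```python
from collections import Counter


def ngram_counts(mnemonics, n):
    """Count contiguous n-grams in a sequence of instruction mnemonics."""
    return Counter(
        tuple(mnemonics[i:i + n]) for i in range(len(mnemonics) - n + 1)
    )


def feature_vector(mnemonics, vocabulary):
    """Map one binary's mnemonics to relative n-gram frequencies.

    `vocabulary` is a fixed, ordered list of n-grams so that every binary
    yields a vector of the same length and meaning.
    """
    counts = ngram_counts(mnemonics, 1) + ngram_counts(mnemonics, 2)
    total = sum(counts.values()) or 1  # avoid dividing by zero on empty input
    return [counts[gram] / total for gram in vocabulary]


# Hypothetical stand-in for real disassembler output.
sample = ["push", "mov", "sub", "mov", "call", "mov", "pop", "ret"]

# Hypothetical vocabulary; a real one would be built from the training set.
vocabulary = [("mov",), ("call",), ("push", "mov"), ("mov", "call"), ("xor",)]

vector = feature_vector(sample, vocabulary)
print(vector)
```

A fixed vocabulary is the design choice that matters here: it keeps vectors from different binaries comparable, which is what lets a classifier treat them as points in the same space.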

A random forest classifier was then trained on eight executable binaries per programmer, to generate accurate author models of coding style. It was thus capable of attributing authorship "to the vectorial representations of previously unseen executable binaries."
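The train-then-attribute step can be sketched in a few lines of Python. Note the assumptions: this is a bagged ensemble of one-split decision stumps, a deliberately simplified stand-in for a real random forest, written with the standard library only. The two authors, their three-number feature vectors and every function name are invented for illustration and are not taken from the paper; only the figure of eight samples per programmer comes from the research.

```python
import random
from collections import Counter


def majority(labels):
    """Return the most common label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def train_stump(rows, labels, rng):
    """Fit a one-split tree on a single randomly chosen feature."""
    feature = rng.randrange(len(rows[0]))
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
        right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
        left_label = majority(left)
        right_label = majority(right) if right else left_label
        errors = sum(lab != left_label for lab in left)
        errors += sum(lab != right_label for lab in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return feature, threshold, left_label, right_label


def train_forest(rows, labels, n_trees=25, seed=0):
    """Train each stump on its own bootstrap sample of the training set."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        picks = [rng.randrange(len(rows)) for _ in rows]
        forest.append(
            train_stump([rows[i] for i in picks], [labels[i] for i in picks], rng)
        )
    return forest


def predict(forest, row):
    """Attribute a feature vector to an author by majority vote."""
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return majority(votes)


# Hypothetical training set: eight feature vectors per author.
alice = [[0.10 + 0.02 * i, 0.20 + 0.01 * i, 0.15 + 0.015 * i] for i in range(8)]
bob = [[0.70 + 0.02 * i, 0.80 + 0.01 * i, 0.75 + 0.015 * i] for i in range(8)]
forest = train_forest(alice + bob, ["alice"] * 8 + ["bob"] * 8)

# Feature vectors from previously unseen (and equally hypothetical) binaries.
unseen_a = predict(forest, [0.05, 0.05, 0.05])
unseen_b = predict(forest, [0.95, 0.95, 0.95])
print(unseen_a, unseen_b)
```

The toy data is cleanly separable, which real coding-style features are not; a production attempt would more likely reach for a full random forest implementation with deep trees and many more features.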

The researchers noted that: "While we can de-anonymize 100 programmers from unoptimized executable binaries with 78 per cent accuracy, we can de-anonymize them from optimized executable binaries with 64 per cent accuracy."

"We also show that stripping and removing symbol information from the executable binaries reduces the accuracy to 66 per cent, which is a surprisingly small drop. This suggests that coding style survives complicated transformations."

In their future work, the researchers plan to investigate whether stylistic properties may be "completely stripped from binaries to render them anonymous" and also to look at real-world authorship attribution cases, "such as identifying authors of malware, which go through a mixture of sophisticated obfuscation methods by combining polymorphism and encryption." ®