
Flash and trash? You could begin with cache and trash

Putting a cache in front of object storage as a solution

Comment Last year I wrote many times about object storage, flash memory, caching and various other technologies in the storage industry. I also coined the term “Flash and Trash” to describe a trend towards two-tier storage, built on latency-sensitive, flash-based arrays on one side and capacity-driven, scale-out systems on the other.

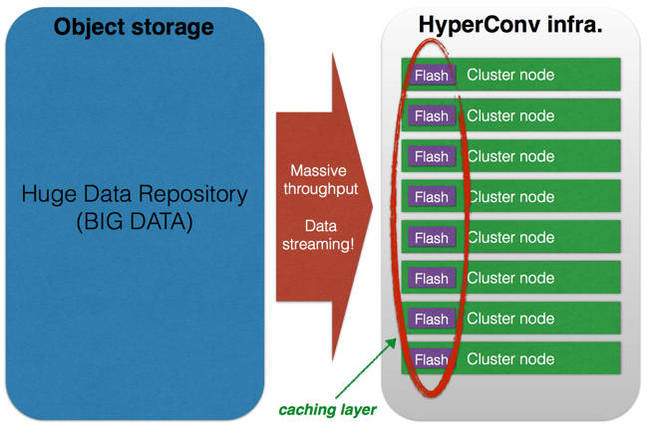

At times, I used the slide shown here (where the flash tier is collapsed into the compute layer) to talk about possible scenarios with a huge distributed cache at the compute layer and object storage at the back end.

At the SC15 conference I got further confirmation that some vendors are looking into this kind of architecture. But this will take time. Meanwhile, I think there are other vendors whose products, coupled together, could help to implement this model very easily.

Here and now, I’m just wondering about possible alternative solutions. I don’t really know if it would work in the real world but, at the same time, I’d be rather curious to see the results of such an experiment.

Why?

In large deployments, file systems are becoming a real pain in the neck for several reasons, and even scale-out NAS systems have their limits. Add the fact that data is now accessed from everywhere and on any device, and NAS is no longer a technology you can rely on.

Flash cache and trash idea

At the same time, file systems are just a layer that adds complexity and brings no benefit if your primary goal is to access data as fast as possible.

Putting a cache in front of object storage could solve many problems and give tremendous benefits.
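To make the idea a bit more concrete, here is a minimal sketch of a read-through, write-through LRU cache sitting in front of an object store. Everything in it is an assumption made for illustration: `InMemoryObjectStore` is a hypothetical stand-in for a real back end, and `ReadThroughCache` is not modelled on any vendor's product.

```python
from collections import OrderedDict


class InMemoryObjectStore:
    """Hypothetical stand-in for a slow, capacity-oriented object store."""

    def __init__(self):
        self._objects = {}
        self.reads = 0  # counts how often the back end is actually read

    def put(self, key, data):
        self._objects[key] = data

    def get(self, key):
        self.reads += 1
        return self._objects[key]


class ReadThroughCache:
    """Small LRU cache placed in front of an object store (illustrative only)."""

    def __init__(self, backend, capacity):
        self.backend = backend
        self.capacity = capacity
        self._cache = OrderedDict()
        self.hits = 0
        self.misses = 0

    def _store(self, key, data):
        self._cache[key] = data
        self._cache.move_to_end(key)  # mark as most recently used
        if len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # evict the least recently used

    def get(self, key):
        if key in self._cache:
            self.hits += 1
            self._cache.move_to_end(key)
            return self._cache[key]
        self.misses += 1
        data = self.backend.get(key)  # miss: fetch from the object store
        self._store(key, data)
        return data

    def put(self, key, data):
        # Write-through: the object store remains the source of truth.
        self.backend.put(key, data)
        self._store(key, data)


store = InMemoryObjectStore()
cache = ReadThroughCache(store, capacity=2)
cache.put("vm-disk-1", b"hot data")
cache.put("backup-1", b"cold data")
cache.get("vm-disk-1")
cache.get("vm-disk-1")
print(cache.hits, cache.misses, store.reads)
```

In this toy run, both reads of `vm-disk-1` are served from the cache and the back end is never read. A real caching layer would also have to deal with consistency across hosts, persistence and failure handling, none of which is covered here.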

OK, but why in the enterprise? Well, even if enterprises don’t have these kinds of very large infrastructures yet (above I was talking about Big Data and HPC), you can see the first hints of a similar trend. For example, VVOLs are targeting the limits of VMFS, while organisations of any size are experiencing exponential data growth, which is hard to handle with traditional solutions.

An example

I want to mention just one example (there are more, but this is the first that comes to mind). I’m talking about Cohesity coupled with caching software.

If you are a VMware customer this solution could be compelling. On one side you have Cohesity: scale-out storage, data footprint reduction of all sorts, integrated backup functionality, great analytics features and ease of use (you can get an idea of what Cohesity does from SF8 videos if you are interested in knowing more).

On the other side, Cohesity is not built for primary workloads. I’m not saying the performance is bad as such, but the system could be running many different workloads (and internal jobs/apps) at the same time, so IOPS and latency could be very far from your expectations. It doesn’t have any QoS functionality either and, again, that could mess up your primary workloads.

If it wasn’t for the fact that Cohesity is an all-but-primary storage outfit, it would have the potential to be the “ultimate storage solution” (I’m exaggerating a bit here!). Well, it works for 80 per cent of storage needs and, anyway, you could fill the gap with a caching solution like PernixData or SanDisk FlashSoft (or Datagres if you are more of a Linux/KVM shop).

Reducing complexity and costs

For a mid-size company this could be a great solution from the simplification perspective. It would provide total separation between latency-sensitive and capacity-driven workloads/applications. The first would be managed by the caching layer while the latter would be “all the rest.”

I’d also like to see a cost comparison, covering both total cost of acquisition (TCA) and total cost of ownership (TCO), between a Cohesity+Pernix bundle and a storage infrastructure built out of the single components.

Other interesting alternatives

If you don’t like having too many components from different vendors, you could look at Hedvig as an alternative. It doesn’t have the same integrated backup features as Cohesity, but it is an end-to-end solution from a single vendor.

In fact, if you look at its architecture, the Hedvig Storage Proxy can run on the hypervisor/OS (also enabling a distributed caching mechanism), while the storage layer is managed on standard commodity x86 servers through the Hedvig Storage Service.

This is an interesting solution with great flexibility for both high IOPS and capacity-driven workloads. But, to be honest, I haven’t checked if it has a QoS mechanism to manage them at the same time. Still, I’m sure it is worth a look.

And, of course, any object store with a decent NFS interface could be on the list of possible solutions, as well as other caching solutions.

Closing the circle

As I said previously, this is just an idea. But I’d like to see someone testing it for real. Coupling modern secondary storage prices (and features) with the incredible performance of server-based flash memory could be a very interesting exercise.
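As a back-of-the-envelope way to reason about such a test, the average read latency of a cached system can be approximated as the hit ratio times the cache latency, plus the miss ratio times the back-end latency. The sketch below applies that simple model. The function name and the latency figures are hypothetical placeholders, not measurements from any product.

```python
def effective_latency_ms(hit_ratio, cache_ms, backend_ms):
    """Average read latency under a simple hit/miss model (an approximation)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * backend_ms


# Hypothetical figures, for illustration only: server-side flash at 0.1 ms,
# capacity-oriented back end at 10 ms.
for ratio in (0.5, 0.9, 0.99):
    latency = effective_latency_ms(ratio, cache_ms=0.1, backend_ms=10.0)
    print(f"hit ratio {ratio:.2f}: {latency:.2f} ms average")
```

The model ignores queuing, write traffic and cache warm-up, but it does show why the hit ratio of the latency-sensitive workloads is the number to measure first in any real experiment.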

In some cases, as with Cohesity for example, it could also help to collapse many other parts of the infrastructure into fewer components, aiming towards a simpler overall structure. ®

If you want to know more about this topic, I’ll be presenting at the next TECHunplugged conference in Austin on 2/2/16, a one-day event focused on cloud computing and IT infrastructure. Join us!