
Mangstor busts out smash chart for flash pocket rocket

RoCE road to banishing storage array network access latency

Comment Mangstor and its NVMe fabric-accessed array have been mentioned a couple of times in The Register, with claims that it is pretty much the fastest external array available in terms of data access speed.

NVMe is the standardised PCIe flash drive block access protocol over a PCIe connection inside a server. The NVMe over fabric (NVMeF) concept logically externalises the PCIe bus and provides RDMA (Remote Direct Memory Access) by using, for example, RoCE (RDMA over Converged Ethernet). The effect is to remove network access latency from the external array access equation and vastly, literally vastly, increase data access speed in terms of IO operations per second.

At a December IT Press Tour in Silicon Valley, Mangstor marketing VP Paul Prince talked about this and showed a chart depicting this level of performance.

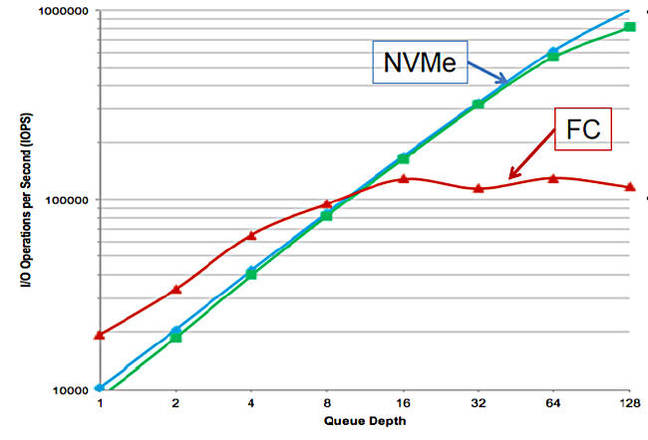

This chart measures the IO performance of a Mangstor NX Series flash array fitted with NVMe flash media across either Fibre Channel or an NVMe fabric.

It shows IOPS using 4K reads with increasing queue depth, and the number of IOPS is on a log scale. The higher the IOPS number, the higher the performance.

4K random read IOPS performance with Fibre Channel and NVMe fabric ports compared

The blue line charts Mellanox single port reads with the green line being Chelsio single port reads. The red line is the system using a 16Gbit/s QLogic SANBlaze Fibre Channel adapter with on-board DRAM, which was tested against this on-card memory and so is, Mangstor says, the best case and not a real-world result.

The Fibre Channel line rises as the queue depth increases from 1 to 8, then starts flattening out, levels off at a 16-deep queue and stays flattish after that up to a 128-deep queue.

The two NVMe fabric lines are pretty much the same, tracking slightly below the FC line until the queue depth passes 8, and then carry on rising linearly as the FC line flattens. The Mellanox line (InfiniBand-based NVMeF) starts to flatten slightly but reaches 1 million IOPS with a 128-deep queue, almost 10 times better than 16Gbit/s FC. The Chelsio system is not far behind.
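The headline figure can be sanity-checked with two standard relations: throughput is IOPS times block size, and Little's law gives mean latency as outstanding I/Os divided by IOPS. The sketch below is a back-of-envelope check, not Mangstor's methodology. It assumes "4K" means 4,096 bytes and that the charted queue depth is the total number of I/Os in flight, neither of which the chart states; the function names are illustrative.

```python
# Back-of-envelope check of the 1 million IOPS figure.
# Assumptions (not stated in the chart): 4K = 4,096 bytes, and the
# queue depth is the total number of outstanding I/Os.

def throughput_bytes_per_s(iops: float, block_bytes: int) -> float:
    """Data rate implied by an IOPS figure at a fixed block size."""
    return iops * block_bytes


def mean_latency_s(queue_depth: int, iops: float) -> float:
    """Little's law: outstanding I/Os = IOPS x mean latency."""
    return queue_depth / iops


iops = 1_000_000      # figure quoted for the Mellanox line
block_bytes = 4096    # assumed meaning of "4K"
queue_depth = 128     # deepest queue on the chart

rate = throughput_bytes_per_s(iops, block_bytes)
latency = mean_latency_s(queue_depth, iops)

print(f"throughput: {rate / 1e9:.2f} GB/s ({rate * 8 / 1e9:.1f} Gbit/s)")
print(f"mean latency at QD {queue_depth}: {latency * 1e6:.0f} microseconds")
```

Under those assumptions, 1 million 4K reads per second works out to roughly 4.1 GB/s, with each I/O spending about 128 microseconds in flight on average at a 128-deep queue.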

By using NVMe over a fabric you need fewer switch ports.

Prince said a 60TB flash array was replaced by a 16TB Mangstor array in one trial for VDI. He also told us a MySQL database benchmark showed Mangstor has vastly better throughput than a competing flash array. He mentioned a 5X advantage with a 24 hour job taking 4-5 hours in a Storage Review test.
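The 5X figure is consistent with the run times quoted, as a quick calculation shows. This is only arithmetic on the numbers Prince gave; the function name is illustrative.

```python
# Speedup implied by a 24 hour job finishing in 4 to 5 hours.

def speedup(baseline_hours: float, new_hours: float) -> float:
    """Ratio of the old run time to the new run time."""
    return baseline_hours / new_hours


slow_case = speedup(24, 5)   # job takes 5 hours on the new array
fast_case = speedup(24, 4)   # job takes 4 hours on the new array

print(f"speedup range: {slow_case:.1f}x to {fast_case:.1f}x")
```

A 4-5 hour run time therefore corresponds to a speedup of between 4.8 and 6 times, which brackets the quoted 5X.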

Developments

Prince told us Mangstor’s array has 16TB capacity with 4 PCIe flash cards (gen 3 with 8 lanes). It’s developing a 32TB version of this, populating the cards with more advanced Toshiba flash chips.
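For a sense of scale, the raw link bandwidth of that card configuration can be estimated from the PCIe 3.0 signalling rate of 8 GT/s per lane with 128b/130b encoding. The result is a ceiling before packet and protocol overhead, not a figure Mangstor quoted, and the even split of capacity across the four cards is an assumption.

```python
# Rough PCIe 3.0 link ceiling for four x8 flash cards.
# Assumption: capacity is split evenly across the cards.

GT_PER_LANE = 8e9                 # PCIe 3.0: 8 gigatransfers/s per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie3_raw_bytes_per_s(lanes: int) -> float:
    """Raw one-direction bandwidth of a PCIe 3.0 link, before protocol overhead."""
    return GT_PER_LANE * ENCODING_EFFICIENCY * lanes / 8  # bits -> bytes


cards = 4
lanes_per_card = 8
array_capacity_tb = 16

per_card = pcie3_raw_bytes_per_s(lanes_per_card)
total = cards * per_card
capacity_per_card_tb = array_capacity_tb / cards

print(f"per card: {per_card / 1e9:.2f} GB/s, all cards: {total / 1e9:.1f} GB/s")
print(f"capacity per card: {capacity_per_card_tb:.0f} TB")
```

That puts each x8 card's link at just under 8 GB/s in one direction, or about 31.5 GB/s across all four, so the cards' host interfaces are unlikely to be the first bottleneck for a single fabric port.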

A 1U version of the array could arrive in the first 2016 quarter with even higher capacity, and possibly be the highest-capacity 1U flash array available. It would feature more serviceability than the current array and a proof-of-concept (POC) bundle could be available before July.

It’s also engaged in development work with Mellanox, looking at 100 and 150Gbit/s InfiniBand.

He said Mangstor was planning parallel NFS (pNFS) and object support in the first half of 2016. In that instance, an accessing server does not use NVMeF and so the NX Series array is an external flash-based filer or object store. This is a different value proposition than the NVMeF-based tier 0 data access.

Prince said the NX array could provide a block client for pNFS and still get the NVMeF advantage. It could, for example, be used as a metadata server for pNFS.

Mangstor has a partnership with object storage software supplier Caringo, and some Caringo customers “want super-fast storage.” He said Mangstor “will support a native object key:value interface in storage SW running on our box.”

There are no data services available on the Mangstor array, not even encryption, the proposition being all about data access speed at present.

We were told the NX array can support two tiers to aggregate across multiple arrays so as to create one large volume presented to accessing servers. That volume has a UUID.

Prince said Mangstor was approaching 20 customers. It is an authorised Mellanox reseller and has its own set of VARs.

With EMC’s high-end, rack-scale DSSD flash array (which uses NVMeF) expected before the end of the quarter, storage buyers will be able to evaluate two super-fast alternatives to iSCSI and Fibre Channel for in-data centre external array access. We might also think that NVMeF is the inevitable and logical iSCSI/FC replacement needed for all-flash arrays.

Flash media banishes disk access latency and NVMe replaces the disk-based SATA and SAS drive protocols.

NVMe over fabrics banishes, pretty much, external array network access latency and is the logical replacement for the, arguably, disk-based iSCSI and FC array access protocols.

Check it out if your servers are connecting over choked iSCSI and FC pipes to your drive arrays. ®