
After all the sound and fury, when will VVOL start to rock?

The truth is...it's complicated

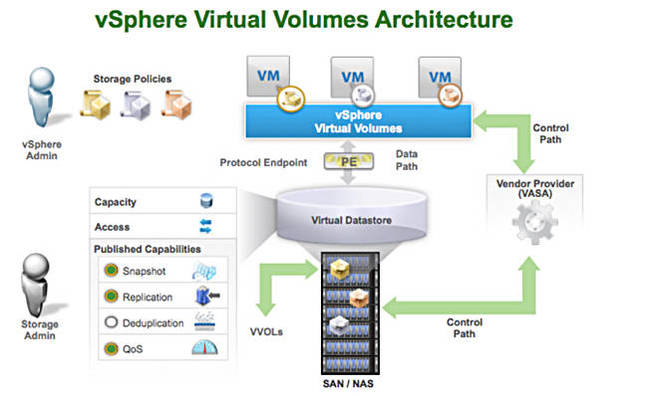

Comment: VMware virtual machines need storage, and VVOLs (Virtual Volumes) are a way of automating its provisioning, avoiding the delay while VMware admins ask storage admins to provision it. Virtually all storage array vendors support VVOLs, yet the facility has not been taken up on any large scale in users' data centres. This poses the obvious question: why not?

Perhaps the VVOLs scheme is too complex? Possibly it is too limited in its focus.

To recap, a virtual machine (VM) can be thought of as a set of VMDK files stored in a datastore. With a SAN, the datastore is a logical unit (LUN) on a storage array, which ESXi formats with VMware's VMFS file system; with NFS-based arrays, the datastore uses the array's own file system instead.

In a SAN, the LUN holding a VM's VMDK files has to be provisioned and presented to the VM's host before the VM can use it. With the VVOL scheme, each VMDK is stored in its own VVOL, which is itself a LUN.
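The difference in granularity can be sketched as a toy Python data model. `Vmdk`, `VmfsDatastore`, `VVol` and the two placement functions are hypothetical names, not VMware APIs; the point is only that the array sees one volume in the traditional case and one volume per virtual disk with VVOLs.

```python
from dataclasses import dataclass, field

@dataclass
class Vmdk:
    vm: str
    name: str
    size_gb: int

@dataclass
class VmfsDatastore:
    """Traditional model: one LUN, formatted with VMFS, shared by many VMs' VMDKs."""
    lun_id: int
    files: list[Vmdk] = field(default_factory=list)

@dataclass
class VVol:
    """VVOL model: one array-side volume per virtual disk (hypothetical type)."""
    vvol_id: int
    disk: Vmdk

def place_on_vmfs(disks: list[Vmdk], lun_id: int) -> VmfsDatastore:
    # All disks share one LUN, so the array manages them as a single volume.
    return VmfsDatastore(lun_id, list(disks))

def place_as_vvols(disks: list[Vmdk]) -> list[VVol]:
    # Each disk becomes its own volume that the array can manage individually.
    return [VVol(i, d) for i, d in enumerate(disks, start=1)]

disks = [Vmdk("web01", "C:", 40), Vmdk("web01", "D:", 100), Vmdk("db01", "C:", 40)]
datastore = place_on_vmfs(disks, lun_id=7)
vvols = place_as_vvols(disks)
print(f"VMFS: 1 array volume holding {len(datastore.files)} VMDKs")
print(f"VVOL: {len(vvols)} array volumes")
```

With three disks across two VMs, the array sees one LUN in the first model and three separately manageable volumes in the second.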

This is all part of VMware's software-defined storage (SDS) strategy and its over-arching software-defined data centre vision. SDS provides the ability to abstract and virtualise the storage plane of a (VMware-using) data centre, and to align its consumption with the requirements defined by policies in a control plane.

VMware states that the Virtual Volumes datastore defines capacity boundaries and access logic, and exposes a set of data services accessible to the VMs provisioned in the pool. Virtual Volumes datastores are purely logical constructs that can be configured on the fly, when needed, without disruption and without being formatted with a new file system.

The virtual disk (the VVOL), rather than the datastore, becomes the primary unit of data management at the array level. This turns the Virtual Volumes datastore into a VM-centric pool of capacity, enabling storage operations to be executed with VM granularity and native array-based data services to be provisioned to individual VMs. Admins can now ensure the right storage service levels are provided to each individual VM.

For this to happen, the VMware system has to know which storage arrays in a data centre can respond to VVOL provisioning instructions and which cannot. This is where things get complicated.

VVOL Architecture

Arrays make themselves known through VMware's vSphere APIs for Storage Awareness (VASA). Storage arrays use VASA to publish the services they support to vSphere; in this role they are VASA providers. vSphere builds a list of the available services, and VMware admins select capacity and various classes of data service from this list when creating a VM storage policy. Policies can be tiered (gold, silver and bronze, for example), reflecting that some VMs are mission-critical, others less important, and others low priority.

So a gold policy could specify fully mirrored data that isn’t deduplicated and is stored on flash devices.

A VM or set of VMs can be created against a policy and the provisioning of storage is then automated. This all comes under a Storage Policy-Based Management (SPBM) heading.

VVOLs have metadata describing what they can do, such as capacity available, RAID level, snapshot and replication status, availability, caching, security (encryption), deduplication, tiering, protocol access route, thick/thin provisioning, etc.
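To make the policy-matching idea concrete, here is a minimal Python sketch under a much-simplified model: each array publishes a handful of capabilities, a policy lists the ones it requires, and provisioning can only choose arrays that satisfy the policy and have enough free space. `Capabilities`, `Policy`, `satisfies` and `compatible` are hypothetical illustrations, not the VASA or SPBM APIs, and real capability sets are far richer.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Capabilities:
    """A hypothetical subset of what an array might publish as a VASA provider."""
    media: str      # "flash" or "disk"
    mirrored: bool
    dedupe: bool
    free_gb: int

@dataclass(frozen=True)
class Policy:
    """A hypothetical VM storage policy; None means "no requirement"."""
    name: str
    media: Optional[str] = None
    mirrored: Optional[bool] = None
    dedupe: Optional[bool] = None

def satisfies(cap: Capabilities, policy: Policy) -> bool:
    # Every rule the policy sets must match the published capability.
    for attr in ("media", "mirrored", "dedupe"):
        wanted = getattr(policy, attr)
        if wanted is not None and getattr(cap, attr) != wanted:
            return False
    return True

def compatible(arrays: dict[str, Capabilities], policy: Policy, size_gb: int) -> list[str]:
    # Arrays that meet the policy and have room for the VM's storage.
    return sorted(name for name, cap in arrays.items()
                  if satisfies(cap, policy) and cap.free_gb >= size_gb)

arrays = {
    "array-a": Capabilities("flash", mirrored=True, dedupe=False, free_gb=2_000),
    "array-b": Capabilities("flash", mirrored=False, dedupe=True, free_gb=5_000),
    "array-c": Capabilities("disk", mirrored=True, dedupe=True, free_gb=20_000),
}
gold = Policy("gold", media="flash", mirrored=True, dedupe=False)
silver = Policy("silver", media="flash")
bronze = Policy("bronze")

for policy in (gold, silver, bronze):
    print(policy.name, compatible(arrays, policy, size_gb=500))
```

Under this model the gold policy described earlier (mirrored, not deduplicated, on flash) matches only `array-a`, while bronze, which sets no requirements, matches every array with enough free space.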

An array has protocol end-points, which define the access protocol used, such as Fibre Channel, NFS or iSCSI. On block-based arrays these end-points are implemented as LUNs. They are also called IO de-multiplexers, because VVOL-level requests from many VMs on many hosts flow through a single end-point and are then split up and dealt with individually by the array software.
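The de-multiplexing role can be modelled in a few lines of Python, assuming each IO request carries an identifier for the VVOL it targets. `ProtocolEndpoint`, `IoRequest` and the `bind` step are hypothetical stand-ins; real arrays do this inside their SCSI or NFS protocol stacks, not with Python queues.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IoRequest:
    host: str
    vvol_id: int   # hypothetical identifier of the target VVOL
    op: str        # "read" or "write"
    offset: int
    length: int

class ProtocolEndpoint:
    """Hypothetical end-point that fans IO from many hosts out to individual VVOLs."""

    def __init__(self, protocol: str) -> None:
        self.protocol = protocol
        self.bound: set[int] = set()
        self.queues: dict[int, list[IoRequest]] = {}

    def bind(self, vvol_id: int) -> None:
        # Make a VVOL reachable through this end-point (simplified stand-in for binding).
        self.bound.add(vvol_id)
        self.queues.setdefault(vvol_id, [])

    def submit(self, req: IoRequest) -> None:
        # De-multiplex: route each request to the queue of the VVOL it targets.
        if req.vvol_id not in self.bound:
            raise LookupError(f"VVOL {req.vvol_id} is not bound to this end-point")
        self.queues[req.vvol_id].append(req)

pe = ProtocolEndpoint("iSCSI")
for vvol_id in (101, 102):
    pe.bind(vvol_id)
pe.submit(IoRequest("esxi-1", 101, "write", 0, 4096))
pe.submit(IoRequest("esxi-2", 102, "read", 8192, 4096))
pe.submit(IoRequest("esxi-1", 101, "read", 4096, 4096))
print({v: len(q) for v, q in pe.queues.items()})  # {101: 2, 102: 1}
```

Requests from two hosts arrive through one end-point and end up in separate per-VVOL queues, which is the splitting-up the paragraph describes.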

The array therefore receives IO requests through these end-points that come from different VMs, each request applying to an individual VVOL. The array controller software then has to translate these requests into its own internal abstractions, such as RAID groups and LUNs, before actioning them and routing them to the individual SSDs or disk drives it controls.
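The translation step can be sketched in two stages: find which internal extent holds a given VVOL offset, then map that LUN offset onto a drive. This is a hypothetical simplification that assumes plain RAID-0 striping with a 64 KiB stripe unit; real controllers use mirroring or parity, caching and their own metadata layouts, and `Extent`, `RaidGroup` and both functions are illustrative names only.

```python
from dataclasses import dataclass

STRIPE_UNIT = 64 * 1024  # bytes per drive per stripe (assumed value)

@dataclass(frozen=True)
class Extent:
    vvol_offset: int  # where this extent starts in the VVOL's address space
    length: int
    lun_offset: int   # where it starts in the array's internal LUN

@dataclass(frozen=True)
class RaidGroup:
    drives: tuple[str, ...]

def vvol_to_lun(extents: list[Extent], offset: int) -> int:
    # Stage 1: find the extent covering this VVOL offset and shift into LUN space.
    for e in extents:
        if e.vvol_offset <= offset < e.vvol_offset + e.length:
            return e.lun_offset + (offset - e.vvol_offset)
    raise ValueError(f"offset {offset} is not allocated")

def lun_to_drive(rg: RaidGroup, lun_offset: int) -> tuple[str, int]:
    # Stage 2: RAID-0 striping; stripe units rotate round-robin across the drives.
    unit, within = divmod(lun_offset, STRIPE_UNIT)
    drive = rg.drives[unit % len(rg.drives)]
    drive_offset = (unit // len(rg.drives)) * STRIPE_UNIT + within
    return drive, drive_offset

MIB = 1 << 20
extents = [Extent(0, MIB, 10 * MIB), Extent(MIB, MIB, 50 * MIB)]
rg = RaidGroup(("ssd0", "ssd1", "ssd2", "ssd3"))
lun_offset = vvol_to_lun(extents, MIB + 200_000)
print(lun_offset, lun_to_drive(rg, lun_offset))  # 52628800 ('ssd3', 13110592)
```

Even this toy version needs two lookups per IO, which hints at the bookkeeping the controller takes on when every VM disk is its own volume.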

A VVOL addresses a single VM component, not a whole VM. For example, the number of VVOLs for an individual VM could be 16 or more, made up of a basic VM config, swap, C: and D: disk files, plus a cloned set and two snapshots. We can say an array needs to support enough volumes to cover the sum of:

- Volumes for VMs: twice the number of virtual disks per VM, multiplied by the total number of VMs

- Volumes for clone backups: (number of VMs × number of virtual disks) × number of clone backups

- Volumes for differential backups: (number of VMs × (number of virtual disks + 1)) × (number of snapshot generations to save + 1)

- Volumes for snapshots: (1 + number of virtual disks) × number of snapshots

- Volumes for suspension: number of suspensions

- Volumes for system configuration: number of volumes for the Flexible Tier function + number of RAID groups + number of optionally created volumes + number of VVOL datastores + 1
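The list translates directly into arithmetic. The sketch below sums the terms as listed; the `Sizing` field names are hypothetical, "number of snapshots" is read as a total, and the differential-backup term is assumed to apply only when that feature is in use. It is an illustration of the formula, not a substitute for the vendor's own sizing guide.

```python
from dataclasses import dataclass

@dataclass
class Sizing:
    """Hypothetical inputs to the volume-count formula above."""
    vms: int
    disks_per_vm: int
    clone_backups: int = 0
    differential_backup: bool = False
    snapshot_generations: int = 0   # generations kept by differential backup
    snapshots: int = 0
    suspensions: int = 0
    flexible_tier_volumes: int = 0
    raid_groups: int = 0
    optional_volumes: int = 0
    vvol_datastores: int = 1

def required_volumes(s: Sizing) -> int:
    vm_volumes = 2 * s.disks_per_vm * s.vms
    clones = s.vms * s.disks_per_vm * s.clone_backups
    # Assumption: this term only applies when differential backup is used.
    differential = (s.vms * (s.disks_per_vm + 1) * (s.snapshot_generations + 1)
                    if s.differential_backup else 0)
    snapshots = (1 + s.disks_per_vm) * s.snapshots
    system = (s.flexible_tier_volumes + s.raid_groups + s.optional_volumes
              + s.vvol_datastores + 1)
    return vm_volumes + clones + differential + snapshots + s.suspensions + system

base = Sizing(vms=100, disks_per_vm=2, clone_backups=1, snapshots=10, raid_groups=2)
print(required_volumes(base))  # 634
```

For 100 two-disk VMs with one clone backup, ten snapshots and two RAID groups, that is 400 + 200 + 30 + 4 = 634 volumes; switching on differential backup with two saved generations adds another 900.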

Arrays will have limits on the total number of volumes they can support, with a Fujitsu ETERNUS DX200F supporting up to 1,536 and a DX200 S3 supporting up to 4,096.
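As a rough capacity check, a hypothetical helper can divide an array's volume limit by a per-VM volume count. The sketch assumes 16 volumes per VM (the example figure given earlier) and reserves four volumes for system configuration; both are illustrative numbers, not measurements.

```python
# Volume limits quoted in the text above.
VOLUME_LIMITS = {"ETERNUS DX200F": 1_536, "ETERNUS DX200 S3": 4_096}

def max_vms(limit: int, volumes_per_vm: int, system_volumes: int) -> int:
    # Whole VMs that fit once the array's own configuration volumes are reserved.
    if volumes_per_vm <= 0:
        raise ValueError("volumes_per_vm must be positive")
    return max(0, (limit - system_volumes) // volumes_per_vm)

for model, limit in VOLUME_LIMITS.items():
    # Assumed: 16 volumes per VM and 4 system volumes, both illustrative.
    print(model, max_vms(limit, volumes_per_vm=16, system_volumes=4))
```

On those assumptions the DX200F would top out at 95 such VMs and the DX200 S3 at 255.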