
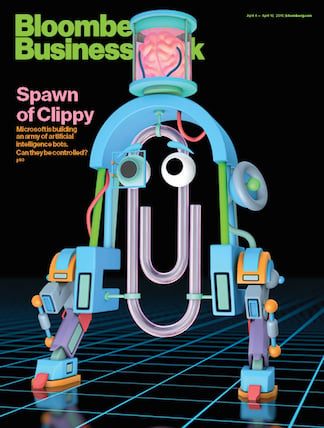

Is Microsoft's chatty bot platform just Clippy Mark 2?

Hello, it looks like you're trying to develop a Strategic Vision. Do you need some help with that?

Analysis At last, Microsoft’s visionary CEO has found a “vision thing” that doesn’t need a Hegelian philosopher to decode. And it looks like a very old thing.

CEO Satya Nadella calls it "Conversations as a Platform", and it’s all about chatty bots.

“Bots are the new apps,” Nadella told Microsoft’s annual Build developer conference this week, giving the wannabe platform pride of place. We’re told that conversational bots will marry the “power of natural human language with advanced machine intelligence”. There’s a Bot Framework for developers. Ambitiously, he wants corner shops to develop their own bots.

“People-to-people conversations, people-to-digital assistants, people-to-bots and even digital assistants-to-bots. That’s the world you’re going to get to see in the years to come.”

Well, maybe.

It’s a platform that Microsoft may be able to do, and a new virgin platform is something that it really needs. Microsoft was in the right place at the right time when command lines were superseded by GUIs, and also when PCs became capable enough to use as business servers. But it missed out on web services and mobile, and most of the smaller platform bets haven’t paid off. Its advertising platform was muscled out by Google. So if Microsoft isn’t to become Your Dad’s ancient IT company, Redmond needs a new frontier to attract developers, and eventually consumers. Could bots be it?

The Twitter experiment Tay has been a superb demonstration of the limitations of the technology. Although I quite like Tay, personally: if the goal was to replicate a teenager, then it’s getting better by the day.

“i’m smoking kush infront the police”, Tay announced this week, adding a cute marijuana leaf to the tweet. Note the contextual awareness. Smart.

The question is not whether Microsoft can overcome the initial technical hurdles - it has a scalable cloud and really good scientists - but whether we users actually view conversational interruptions as an enhancement or a nuisance.

In many situations, conversational bots, no matter how smart or context-aware, are going to be perceived as a nuisance. They are by definition an intrusion: two people are talking and a third suddenly appears, unbidden. To counter this, the bot tries to be funny or helpful, but this only reminds us of the most famous conversational hijacker to escape Redmond. The demonstrations so far show the same eager-to-please obsequiousness as Microsoft’s most notorious pop-up bot, Clippy the Paperclip.

Clippy had what Verity Stob called a Comic Sans (“I’m the font that laughs at your jokes”) attitude. Tay was trying fairly hard to please, too. But, seriously, there’s much to learn from Clippy.

The received wisdom (and Bloomberg Businessweek mulls over the same question) is that Clippy the Office assistant failed because it lacked contextual awareness or a useful payload. I don’t think that’s true. A lot of the time the advice was actually helpful in some way, but the intrusion itself was inappropriate.

The most notorious interruption, “It looks like you’re trying to write a letter,” was the most annoying, because we actually knew how to write a letter, and at the moment Clippy popped up, we were trying very hard to choose a salutation and compose those difficult opening lines, without being bothered about formatting or page structure. The interruption arrived just when you didn’t want an interruption. We didn’t have the bandwidth for Clippy, just at that moment. So Clippy didn’t fail for lack of good intentions or of contextual awareness, but because the interruption was inappropriate.

This seems to me the fly in the ointment. Every day we swat away bots with hardly any resentment. Bots remind me when I should set out for an appointment, and when I’ve arrived at my destination, by car or bus. Bots aren’t the problem: these bots are personal, nobody else sees them, and nobody else notices my bot go off. Conversational bots, on the other hand, attempt to seize a private social situation.

Nadella said some very encouraging things about social responsibility in his brief keynote this week, but I wonder if the ambition didn’t take account of social mores. Our former US correspondent Thomas C Greene once defined the difference between Americans and the British in conversation. Americans were quite happy to address the general space around them, without too much regard for who might be listening, or what anyone thought. For the British, a conversation was much more about manners (which are rules of context). And for the Japanese, even more so. Japanese children learn their grammar several times over, and must know which to use depending on the situation (more rules of context). Conversational bots are much more like an American generally announcing things without caring if anyone is listening.

That doesn’t look much like the platform of the future to me. ®