You keep using that word – NVMe. Does it mean what I think it means?

The lowdown on how this lightning fast network connection works

Tech explainer: NVMe fabric technology is a form of block-access storage networking that strips out network latency, magically making external flash arrays as fast as internal, directly attached NVMe flash drives. How does it manage this trick?

EMC DSSD VP for software engineering, Mike Shapiro, defines NVMe fabrics as: "the new NVM Express standard for sharing next-generation flash storage in an RDMA-capable fabric."

Starting with this, let's define a couple of acronyms – NVMe and RDMA.

NVMe

NVMe stands for Non-Volatile Memory Express and came into being as the standard way to access flash cards or drives, connected to a server’s fast memory bus by the slower PCIe (Peripheral Component Interconnect Express) bus. Using the PCIe bus is vastly faster than connecting such drives by the SATA or SAS protocols.

This NVMe protocol is a logical device interface built to take advantage of the internal parallelism of NAND storage devices. Consequently NVMe lowers the I/O overhead, and improves data access performance through multiple and long command queues and reduced latency. Before NVMe, every operating system had to have a unique driver for every PCIe card it used. After NVMe they all used the single NVMe driver.
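To make the "multiple and long command queues" point concrete, here is a toy Python model of paired submission and completion queues, one pair per CPU core. It is a sketch, not driver code: the `QueuePair` class and `max_outstanding` helper are hypothetical names, and the limits used at the end (AHCI's single 32-command queue, NVMe's roughly 64K queues of roughly 64K commands each) are the commonly quoted specification figures rather than numbers from this article.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Toy stand-in for one NVMe submission/completion queue pair."""
    depth: int
    submission: deque = field(default_factory=deque)
    completion: deque = field(default_factory=deque)

    def submit(self, command: str) -> bool:
        # Refuse new work when the submission queue is already full.
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(command)
        return True

    def process(self) -> None:
        # The pretend device drains every queued command and posts a completion.
        while self.submission:
            self.completion.append(f"done:{self.submission.popleft()}")


def max_outstanding(queues: int, depth: int) -> int:
    """Upper bound on commands in flight across all queues."""
    return queues * depth


# One queue pair per core, so cores never contend for a shared queue.
pairs = {core: QueuePair(depth=4) for core in range(2)}
pairs[0].submit("read lba=0")
pairs[1].submit("write lba=8")
for qp in pairs.values():
    qp.process()

# Commonly quoted limits (assumptions, not taken from this article).
ahci_limit = max_outstanding(queues=1, depth=32)
nvme_limit = max_outstanding(queues=64 * 1024, depth=64 * 1024)
```

The point of the comparison is the gap between `ahci_limit` and `nvme_limit`: with many deep queues, a flash device's internal parallelism can actually be kept busy.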

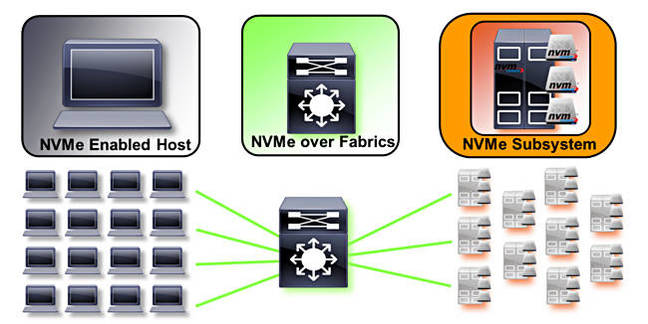

Basic NVMeF concept (SNIA slide)

This driver provides direct block access to the storage media without needing a network access protocol, such as iSCSI or Fibre Channel, or a disk-based protocol stack such as SAS or SATA. All the levels of code used in such protocol stacks are bypassed by NVMe, consequently making it both a lean protocol and a quick one.

For example, NVMe's latency is more than 200μs lower than a 12Gbps SAS drive's 1,000μs+ latency, depending on the workload.

Storage devices using NVMe are:

- PCIe flash cards, 2.5-inch SSDs with a 4-lane PCIe interface through their U.2 connector,

- SATA Express devices (with 2 PCIe gen 2 or 3 lanes),

- M.2 internal-mount expansion cards supporting 4 PCIe gen 3.0 lanes.

A PCIe bus can be extended outside a server, to link servers together for example or attach more peripherals, and so form a fabric. NVMeF (NVMe for Fabric) became the scheme a server OS can use to access externally connected flash devices at PCIe bus speed when the actual cabling used to carry the NVMeF protocol messages is implemented using Ethernet, InfiniBand or some other computer network cabling standard.

This has led to such things as iSER, iWARP and RoCE, which we will come to after taking a diversion into RDMA.

RDMA

RDMA stands for Remote Direct Memory Access and enables one computer to access another’s internal memory directly without involving the destination computer’s operating system. The destination computer’s network adapter moves data directly from the network into an area of application memory, without the OS involving its own data buffers and network I/O stack. Consequently the transfer is very fast. It has the downside of not having an acknowledgement (ack) sent back to the source computer telling it that the transfer has been successful.
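The difference between the two receive paths can be sketched in a few lines of Python. This is purely an illustration under stated assumptions: the function names are hypothetical, a `memoryview` stands in for application memory registered with the adapter, and no real RDMA library is involved. It only shows why skipping the OS buffer removes a copy.

```python
def buffered_receive(payload: bytes, app_buffer: bytearray) -> int:
    """Conventional path: data lands in an OS buffer, then is copied out."""
    os_buffer = bytearray(payload)              # copy 1: network -> OS buffer
    app_buffer[:len(os_buffer)] = os_buffer     # copy 2: OS buffer -> application
    return 2                                    # number of copies made


def rdma_style_receive(payload: bytes, registered: memoryview) -> int:
    """RDMA-style path: the adapter places data straight into app memory."""
    registered[:len(payload)] = payload         # single direct placement
    return 1                                    # number of copies made


payload = b"block-0042"

conventional = bytearray(len(payload))
copies_conventional = buffered_receive(payload, conventional)

direct = bytearray(len(payload))
copies_direct = rdma_style_receive(payload, memoryview(direct))
```

Both buffers end up holding the same bytes; the RDMA-style path simply gets there with one placement instead of two copies, and without the receiving OS touching the data.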

There is no general RDMA standard, meaning that implementations are specific to particular servers and network adapters, operating systems and applications. There are RDMA implementations for Linux and Windows Server 2012, which may use iWARP, RoCE, and InfiniBand as the carrying layer for the transfers.

So now we are at the point where we understand that NVMeF, using iWARP, RoCE, and InfiniBand, can be used to provide RDMA access to/from external storage media, typically flash, at local, direct-attach speeds and in a shared mode, turning a SAN into DAS, as some would say.

You may come across two other acronyms – NoE and NVMeoF. The former is NVMe over Ethernet, and the latter NVMe over Fabrics. We use what we think of as the middle way NVMeF acronym, which may be written as NVMe/F. They all mean the same thing.

iWARP - Internet Wide Area RDMA Protocol

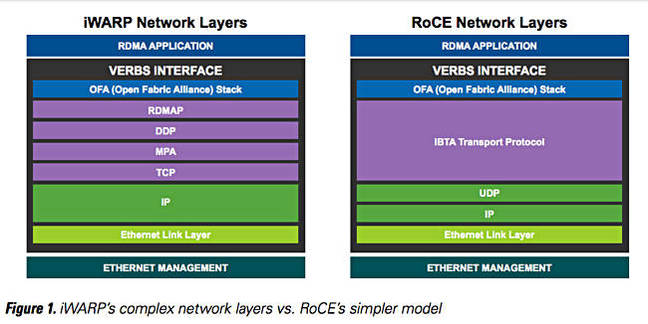

iWARP (Internet Wide Area RDMA Protocol) implements RDMA over Internet Protocol networks. It is layered on IETF-standard congestion-aware protocols such as TCP and SCTP, and uses a mix of layers, including DDP (Direct Data Placement), MPA (Marker PDU Aligned framing), and a separate RDMA protocol (RDMAP) to deliver RDMA services over TCP/IP. Because of this it's said to have lower throughput and higher latency, and to require higher CPU and memory utilisation, than RoCE.
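The layering described above can be pictured as nested wrapping. The Python sketch below uses placeholder text headers such as `[DDP]` rather than the real wire formats, and the `encapsulate` helper and `IWARP_STACK` list are hypothetical names chosen for illustration. It shows only the order in which an RDMA payload is wrapped on its way to the wire when TCP is the transport.

```python
from typing import Sequence

# Innermost layer first, following the layering named in the text.
IWARP_STACK = ["RDMAP", "DDP", "MPA", "TCP", "IP", "Ethernet"]


def encapsulate(payload: bytes, layers: Sequence[str]) -> bytes:
    """Wrap payload in placeholder headers, innermost layer first."""
    frame = payload
    for layer in layers:
        # Each lower layer prepends its own (placeholder) header.
        frame = f"[{layer}]".encode() + frame
    return frame


frame = encapsulate(b"data", IWARP_STACK)
header_count = frame.count(b"[")
```

Every one of those layers has to be processed at both ends, which is the intuition behind the claim that iWARP costs more latency and CPU than a thinner stack.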

For example: "Latency will be higher than RoCE (at least with both Chelsio and Intel/NetEffect implementations), but still well under 10 μs."

Mellanox says no iWARP support is available at 25, 50, and 100Gbit/s Ethernet speeds. Chelsio says the IETF standard for RDMA is iWARP. It provides the same host interface as InfiniBand and is available in the same OpenFabrics Enterprise Distribution (OFED).

Chelsio, which positions iWARP as an alternative to InfiniBand, says iWARP is the industry standard for RDMA over Ethernet. High performance iWARP implementations are available and compete directly with InfiniBand in application benchmarks.

Mellanox chart comparing iWARP and RoCE

RoCE - RDMA over Converged Ethernet

RoCE (RDMA over Converged Ethernet) allows remote direct memory access (RDMA) over an Ethernet network. It operates over layer 2 and layer 3 DCB-capable (DCB - Data Centre Bridging) switches. Such switches comply with the IEEE 802.1 Data Center Bridging standard, which is a set of extensions to traditional Ethernet, geared to providing a lossless data centre transport layer that, Cisco says, helps enable the convergence of LANs and SANs onto a single unified fabric. DCB switches support the Fibre Channel over Ethernet (FCoE) networking protocol.

There are two versions:

- RoCE v1 uses the Ethernet protocol as a link layer protocol and hence allows communication between any two hosts in the same Ethernet broadcast domain,

- RoCE v2 runs RDMA on top of UDP/IP and can be routed.
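The practical difference between the two versions shows up in the packet headers. As a rough sketch, the Python function below classifies a frame from two already-parsed header fields. The function name is hypothetical; the constants are the registered values as far as we know them (Ethertype 0x8915 for RoCE v1, UDP destination port 4791 for RoCE v2) and are not taken from this article.

```python
from typing import Optional

ROCE_V1_ETHERTYPE = 0x8915   # RoCE v1 rides directly on Ethernet
IPV4_ETHERTYPE = 0x0800
IPV6_ETHERTYPE = 0x86DD
ROCE_V2_UDP_PORT = 4791      # RoCE v2 rides on UDP/IP


def classify_roce(ethertype: int, udp_dst_port: Optional[int] = None) -> str:
    """Classify a frame from its Ethertype and, if present, UDP destination port."""
    if ethertype == ROCE_V1_ETHERTYPE:
        # No IP header at all, so IP routers cannot forward it.
        return "RoCE v1 (same broadcast domain only)"
    if ethertype in (IPV4_ETHERTYPE, IPV6_ETHERTYPE) and udp_dst_port == ROCE_V2_UDP_PORT:
        # Ordinary UDP/IP on the outside, so it can cross routers.
        return "RoCE v2 (routable)"
    return "not RoCE"


v1 = classify_roce(0x8915)
v2 = classify_roce(0x0800, udp_dst_port=4791)
other = classify_roce(0x0800, udp_dst_port=443)
```

Because a v1 frame carries no IP header, a router has nothing to forward on; a v2 packet looks like ordinary UDP traffic to the network.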

RoCE has been described as InfiniBand over Ethernet; strip the GUIDs (Global Unique Identifiers) out of the IB header, replace them with Ethernet MAC addresses, and send it over the wire.

We understand RoCE is sensitive to packet drop, needing a dedicated channel for its traffic. Chelsio tells us that a RoCE network must have PAUSE enabled in all switches and end-stations, which effectively limits the deployment scale of RoCE to single hop. RoCE does not operate beyond a subnet and its operations are limited to a few hundred metres, not a constraint in the environment (servers linked to external storage inside a data centre) for which it is being considered.

RoCE v1 does not use a standard IP header and cannot be routed by standard IP routers.

iSER - iSCSI Extensions for RDMA

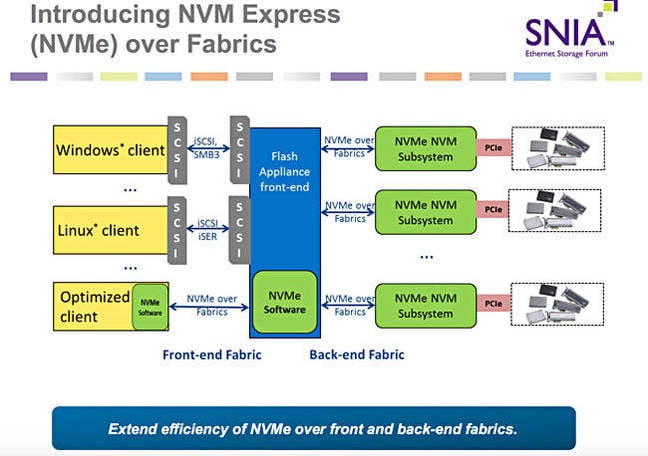

There is a third protocol we can introduce now: iSER. This is, or they are, iSCSI Extensions for RDMA – iSCSI being the Internet Small Computer System Interface. The RDMA part is provided by iWARP, RoCE or InfiniBand.

The iSER scheme enables data to be directly read from/written to SCSI computer memory buffers without any intermediate data copies. SCSI buffers are used to connect host computers to SCSI storage devices.

However, remote SSDs attach over a fabric using SCSI-based protocols, which means protocol translation, and that adds latency to the data transfer. The aim is to bypass SCSI and give the host direct access to the remote SSD resource over an NVMe fabric with no protocol translation.

SNIA NVMeF introduction slide

NVMeF standardisation

The SNIA (Storage Networking Industry Association) is involved with the standardisation of NVMe over a range of fabric types, initially RDMA (RoCE, iWARP, InfiniBand) and Fibre Channel, and also FCoE.

Brocade and QLogic have already demonstrated an NVMeF link to a Fibre Channel subsystem. This was a proof of concept, with performance at 16Gbit/s Fibre Channel level. Brocade said it was aiming to reduce transport latency by 30-40 per cent, which would be good but not achieve pure NVMeF latency levels.

The SNIA is active in making educational materials about NVMeF available, such as this excellent December 15, 2015 "Under the Hood with NVMe over Fabrics" presentation, crafted by staff from NetApp, Cisco and Intel. Read and study this to gain a view of NVMeF controller structures, queuing and transports.

The NVM Express organisation is also involved in NVMeF standardisation. Here is an NVMeF presentation by DSSD's Mike Shapiro, courtesy of NVM Express.

Another excellent SNIA presentation is "How Ethernet RDMA Protocols iWARP and RoCE Support NVMe over Fabrics" (PDF) by Intel and Mellanox staff. A transcribed Q and A from a webcast of that presentation can be found here.

NVMeF products

There are several emerging NVMeF products at the array and components levels:

- Arrays:

- EMC DSSD D5

- Mangstor

- Zstor - think Mangstor in Europe

- Apeiron - a startup with a blindingly fast array

- E8 - ditto

- X-IO Technologies' Axellio system, to be sold through OEMs, ODMs and system integrators

- Adapters

- Mellanox Technologies' ConnectX-3 Pro and ConnectX-4 product families implement RoCE

- Chelsio supports iWARP

- QLogic supports RoCE and iWARP

QLogic FastLinQ 45000 Ethernet controller supports NVMeF (RoCE and iWARP).

Infinidat and Pure Storage have both made encouraging statements about possibly adopting NVMeF in the future. Our understanding is that the range of available NVMeF products will steadily increase.

NVMeF use cases

An NVMeF deployment involves an all-flash array, adapters and cabling to link it to a bunch of servers, each with their own adapters and NVMeF drivers. The applications running in these servers require 20-30 microsecond access to data and there is vastly more data than can fit affordably in these servers' collective DRAM.

These characteristics could suit large scale OLTP, low-latency database access for web commerce, real-time data warehousing and analytics and any other application where multiple servers are in an IO-bound state waiting for data from stores that are too big (and costly) to put in memory but which could be put in flash if the value to the enterprise is high enough.

However, applications using NVMeF will, most likely, need to have special consideration given to the point that data written (transferred) to RDMA-accessed flash is persistent and that specific IO writes (as if to disk or SATA/SAS SSD) will no longer be needed. This needs careful and thorough consideration.

Recommendation: Explore the idea of a pilot implementation using DSSD, Mangstor or Zstor if you think your application could "cost-justifiably" use an NVMeF shared flash store and can be amended/written to do so. ®