Docker taps unikernel brains to emit OS X, Windows public betas

Plus: Container orchestration tools coming this July in v1.12

DockerCon Docker will kick off its DockerCon 2016 conference in Seattle this morning with a bunch of announcements: its OS X and Windows Docker clients will be made publicly available as beta software for anyone to try out; out-of-the-box orchestration is coming to Docker 1.12; and integration with Amazon's AWS and Microsoft's Azure is in the works.

First off, the OS X and Windows clients: these were available to about 70,000 developers as a private beta; you had to ask nicely for access to the software. After its tires were thoroughly kicked, and the crashes ironed out, the code is now available for all to experiment with. The clients allow you to easily and seamlessly fetch, craft and fire up Docker containers on your laptop or workstation.

The OS X client uses Apple's built-in hypervisor framework – yeah, Apple quietly embedded a hypervisor API in its desktop operating system. Previously, you had to use Docker with Oracle's VirtualBox, but it was a rather clunky setup and relied on Oracle not screwing around with VBox. Now, the client is neatly packaged as an OS X app and uses the underlying framework to bring up Linux containers at the flutter of your fingers on the keyboard in a terminal.

Apple's hypervisor framework provides an interface to your Intel-powered Mac's VT-x virtualization hardware, allowing virtual machines and virtual CPUs to be created, given work, and shut down. Docker provides a thin hypervisor layer on top of this to craft an environment for the Linux kernel to run in, and then on top of that sit your software containers. On Windows, Docker uses Microsoft's Hyper-V.

Interestingly, Docker founder and CTO Solomon Hykes told The Register that gurus from Unikernel Systems – acquired by Docker in January – helped develop both clients. Unikernel Systems, a startup in Cambridge, UK, includes former Xen hypervisor programmers; the team are otherwise working on creating all-in-one software stacks that roll kernels and microservices into single address spaces running in sandboxes on a hypervisor.

"The OS X and Windows clients use a lot of unikernel technology under the hood to make the software integrate with the underlying hypervisor," said Hykes.

"Eighty per cent of that client work is unikernel tech. We try to use as much as possible the native system features. MacOS bundles its own hypervisor, so we use that, although it still requires developing a thin hypervisor on top. On Windows, there’s Hyper-V, so we use that. We did consider bundling VirtualBox, but it’s such a monster to bundle."

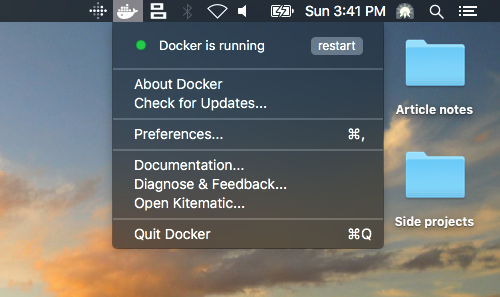

Installing the OS X client is pretty painless, takes up a couple of hundred megabytes of disk space once you get going with it, and requires an administrator password to set up. Once in place, it's accessible from a menubar icon, and you can configure how much memory and how many CPUs you're willing to throw at it. Docker hired designers from the mobile gaming world to polish the user interface, we're told.

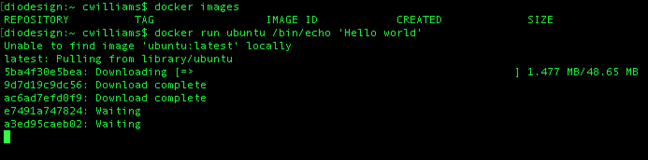

Initially, you have no images installed. So let's pull in the Ubuntu image by firing up a simple Hello World. Open an OS X terminal and run docker run --rm ubuntu /bin/echo 'Hello world'.

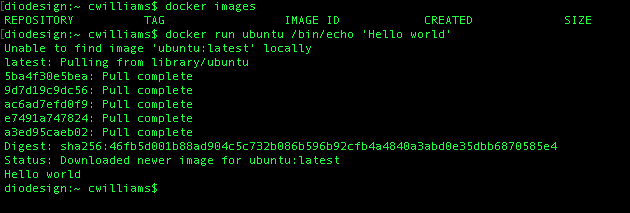

When the download completes and the container is built – which takes a minute or so – it's fired up by Docker, and runs /bin/echo 'Hello world' on Ubuntu Linux, outputting the text to the OS X terminal. That part happens almost instantly on your humble hack's mid-2012 Core i7 MacBook Pro. An instance is brought up, the command run, and it's all torn down.

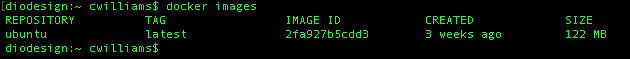

Checking the images present on the system with docker images shows our little Ubuntu is ready and waiting for its next command or workload; there's no need to fetch it again.

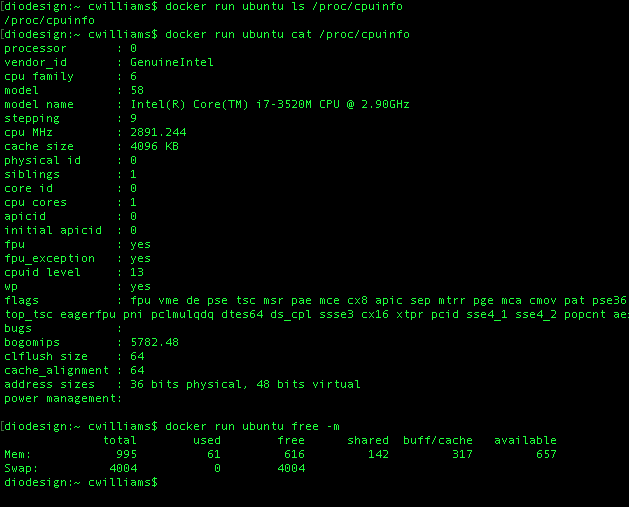

So at this point, you've got a tiny but capable Linux installation running as close to the bare metal as possible on your Mac: you can use it as you would when SSHing into a one-shot Linux virtual machine. For example, you can inspect its CPU and RAM info by looking into /proc/cpuinfo and running free -m.

If you want data to persist between runs, you need to create a volume.

If you're scratching your head at this point and wondering why bother with any of this, then – and forgive me if I sound like a character from a Philip K Dick novel – you need to clear your mind and think about containerization. Like plenty of Reg readers, I've got virtual and bare metal systems in the cloud and at home for various tasks, from build servers to VPN boxes, which are all an SSH away. Why would I need Docker to run Linux apps on my laptop?

The point is to create container images of software – little bundles of dependencies and services needed to perform a particular task – and test them locally, let fellow developers also use them knowing they have the same sane environment as you do, and ultimately deploy the containers.

Think of it as you watching the four-hour-long director's cut Blu-ray edition of a movie at home, then going to the bar and chatting about it to your friend, who has only seen the 90-minute cut-for-TV version. There are scenes in the flick you've seen that your pal hasn't, so what you're saying isn't making much sense to them. You get into an argument. Evening ruined.

The next day, you both go to Netflix and watch the same damn movie. Suddenly everything clicks into place, and everyone's happy again. Containers fix the software side of this story: you both install and run the same damn libraries, tools and services.

Orchestration aka making software just do the thing

That leads us into the next part: how to deploy and control large numbers – potentially thousands – of these containers at scale. Docker Swarm is available to do that, but it's a separate tool from the main client. Meanwhile, there are a bunch of other utilities out there, such as Kubernetes and Mesos, that will manage containers running on your servers for you.

With Docker 1.12 – which is due out in July – that Swarm functionality will be brought into the fold and is designed to be easy to use without any extra tools.

"We're doing for orchestration for what we did with containerization," Hykes told us.

"Orchestration today is not easy to use: it's reserved for experts. What the community is telling us is that they love Docker but that the orchestration is a mess. First of all, they need to choose between 10,000 tools, choose a specific model, and once they’ve chosen a tool or a platform, they discover that setting it up and using it for a Hello World example versus using in a production-ready way is actually a lot of work.

"It's a really specialized job that requires the kind of engineers who work at Google, Uber or anyone else who can afford an ops team. We want to make it usable for anyone who wants to deploy an application and scale it."

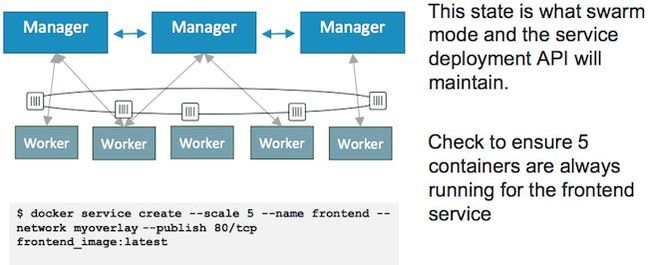

The new so-called Swarm Mode in Docker Engine 1.12 is optional: it can be turned off if you still prefer to use Kubernetes or similar. It is designed to be self-organizing and self-healing, which is a fancy way of saying the software automatically makes sure it just works as configured, rebalancing workloads even if a server falls over.
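For the curious, "self-healing" boils down to a reconciliation loop: compare what should be running with what is running, and patch the difference. Here is a minimal Python sketch of that idea. It is not Docker's actual code; the function name `reconcile`, the node names and the least-loaded placement rule are all assumptions made for illustration, and scaling down is left out.

```python
from collections import Counter


def reconcile(desired_replicas, running, healthy_nodes):
    """Return a task -> node placement that restores the desired replica count.

    `running` maps task ids to node names. Tasks on nodes that are no longer
    healthy are dropped, and replacements go to the least-loaded healthy node.
    (Hypothetical placement rule, chosen only to keep the sketch short.)
    """
    if not healthy_nodes:
        raise ValueError("no healthy nodes left to run tasks on")

    # Keep only the tasks whose node is still alive.
    placement = {task: node for task, node in running.items() if node in healthy_nodes}

    # Count how many tasks each healthy node currently carries.
    load = Counter({node: 0 for node in healthy_nodes})
    load.update(placement.values())

    # Start replacement tasks until the desired count is met again.
    next_id = max(running, default=0) + 1
    while len(placement) < desired_replicas:
        target = min(sorted(healthy_nodes), key=lambda node: load[node])
        placement[next_id] = target
        load[target] += 1
        next_id += 1
    return placement


# Three replicas were running, then node-c fell over.
running = {1: "node-a", 2: "node-b", 3: "node-c"}
healed = reconcile(3, running, healthy_nodes={"node-a", "node-b"})
print(healed)
```

In this toy run, task 3 dies with node-c and a replacement, task 4, is started on node-a, bringing the count back to three.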

Basically, you run docker service create and a bunch of managers are fired up. They break the service into tasks and assign these to worker nodes, which run containers to provide that service.
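To make the service-to-task breakdown concrete, here is a rough Python sketch of a manager splitting one service into numbered tasks and spreading them over worker nodes. It is a toy model, not Docker's scheduler: the `Task` class, the `schedule` function, the worker names and the simple spread-by-slot rule are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    service: str  # name of the service this task belongs to
    slot: int     # replica number within the service, starting at 1
    node: str     # worker node the task is assigned to


def schedule(service, replicas, workers):
    """Break a service into `replicas` tasks and spread them over `workers`."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    if not workers:
        raise ValueError("at least one worker node is required")
    # Hypothetical rule: deal tasks out to the workers in turn.
    return [
        Task(service, slot, workers[(slot - 1) % len(workers)])
        for slot in range(1, replicas + 1)
    ]


tasks = schedule("web", replicas=5, workers=["worker-1", "worker-2", "worker-3"])
for task in tasks:
    print(f"{task.service}.{task.slot} -> {task.node}")
```

Five replicas over three workers means two of the workers end up running two tasks each. A real scheduler would also weigh resources and constraints, which this sketch ignores.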

(These services, tasks and containers can be optionally described using a distributed application bundle – a .dab file – which uses a JSON format and is an experimental feature. DAB files are expected to arrive formally in Docker 1.13.)

The managers assign IP addresses, and ensure the workers are kept busy. If something falls over, it's restarted to a given state. A service deployment API will be available so software and admins can configure and control their swarms. There's also a routing mesh feature that provides multi-host overlay networking, DNS-based service discovery, and round-robin load-balancing out of the box.
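Round-robin load balancing is the simplest of those features to picture: each incoming request goes to the next task in line, wrapping around at the end. A minimal Python sketch follows. The class name `RoundRobinBalancer` and the IP addresses are made up for illustration, and this says nothing about how Docker's routing mesh is actually built.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends one after another, wrapping around at the end."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("need at least one backend")
        self._next = cycle(backends)

    def pick(self):
        """Return the backend that should serve the next request."""
        return next(self._next)


# Hypothetical task addresses, as a manager might have assigned them.
balancer = RoundRobinBalancer(["10.0.0.2", "10.0.0.3", "10.0.0.4"])
picks = [balancer.pick() for _ in range(5)]
print(picks)
```

Five requests against three backends hit each address once, then start again from the first.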

Swarm nodes are configured, by default, to use end-to-end TLS encryption between themselves to secure their communications with rotating certificates and cryptographically secured identities. This cryptography is handled automatically and silently. Essentially, that's the deal with Docker Engine 1.12: taking Swarm's features and bringing them into Docker and making the setup as automatic and as painless as possible.

Azure and AWS integration

Finally, Docker is integrating Docker 1.12 with Microsoft's Azure and Amazon's AWS clouds. This is a private beta: you have to apply for access. You can already run Docker in these off-premises systems just fine; the integration allows you to deploy a Docker swarm with a few clicks – the Docker Engine nodes are automagically secured with end-to-end TLS encryption and tap into the clouds' autoscaling, load-balancing and storage facilities directly.

So that's an overview of what Docker's announcing today. We'll have more detail from DockerCon this week. ®

Bootnote

Yeah, we know it's called macOS these days, not OS X. We'll accept the change when Apple actually picks up the phone for a change.