
Stop lights, sunsets, junctions are tough work for Google's robo-cars

If only we had self-driving processors that were stupidly fast, says web giant

Hot Chips: After cruising two million miles of public roads, Google's self-driving cars still find traffic lights, four-way junctions and other aspects of everyday life hard work.

To be sure, the hardware and software at the heart of the autonomous vehicles are impressive. But they're just not quite good enough yet to be let loose on America's streets. If you're wondering why, after nearly a decade of hype, we still aren't running around town in robo-rides, and why we won't be for a long while yet, take a look at Exhibit A:

Highlighted in the yellow box is a red ball on a white stick by the side of a road. It's not a red light – in fact, if you look up, you'll see the signal is green. To a hands-free car, though, it could be a stop light, forcing it to hit the brakes.

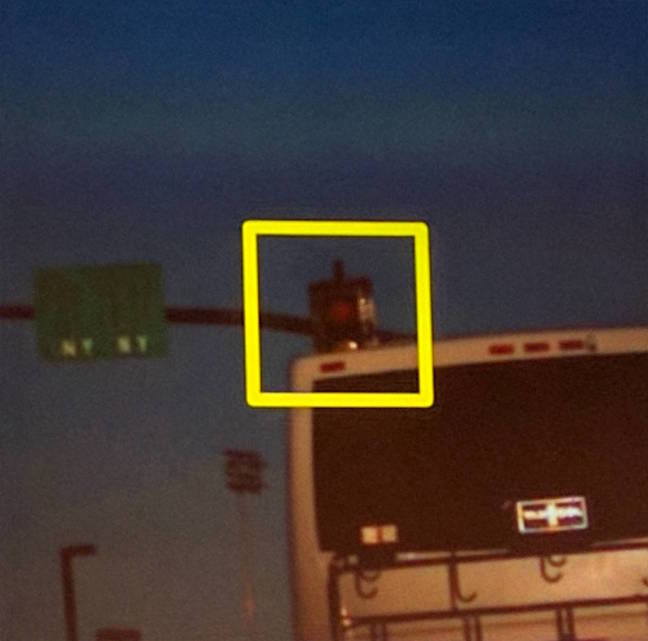

Exhibit B is a traffic light obscured by a bus and, due to the poor light, it isn't clear if it's telling you to stop or keep going. Again, it's confusing for a flash self-driving motor.

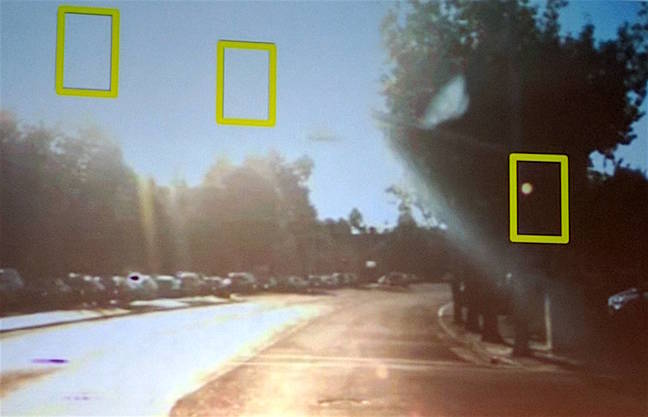

Finally, there's Exhibit C, in which light from the setting sun completely obliterates the view, leaving an autonomous vehicle potentially lost – is that a green or a red light, or a floating orb? Did the autopilot just run a red light?

What would you do in these situations? Hopefully, you'd use some common sense and clues around you to decide if it's safe to go or stop. The key issue here is common sense: it's tough teaching computers intuition. Despite big leaps in artificial intelligence, natural instincts still elude our software. Machines pretend to be tolerant of our faults; secretly, they expect perfection in an imperfect world – and they struggle when the unexpected happens.

The above examples came from Google hardware engineer Daniel Rosenband, who works on the internet goliath's self-driving cars. He spoke at Tuesday's Hot Chips conference in California to highlight the complex challenges facing his colleagues: in short, producing a commercially viable autonomous ride is non-trivial, as engineers say.

"There are some really hard problems left to solve. Traffic light detection and signal recognition are hard problems," he said.

In most circumstances, the Googler explained, image-processing code could simply search a video frame for a bright red or green circle, and figure out if there's a green or red signal in front of the camera.

But there will be times when that naive approach fails to work properly and things are misidentified as traffic signals – see the sign pictured on the right, for example, which could be mistaken for a go signal. "The car needs to be far more aware," Rosenband said.
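To see why the naive approach is so easily fooled, here is a minimal sketch of it, assuming video frames arrive as lists of 8-bit RGB tuples. The color thresholds, the crude circularity test and every function name below are our own illustrative assumptions, not Google's code: it simply finds bright red or green blobs, keeps the roughly circular ones, and reports the color of the biggest.

```python
from collections import deque

BACKGROUND = (90, 90, 90)  # hypothetical dull grey scenery


def classify_pixel(rgb):
    """Label an 8-bit (R, G, B) pixel as bright 'red', bright 'green' or None.
    The thresholds are illustrative guesses, not tuned values."""
    r, g, b = rgb
    if r >= 200 and g <= 80 and b <= 80:
        return "red"
    if g >= 200 and r <= 80:
        return "green"
    return None


def find_blobs(frame):
    """Group same-colored bright pixels into 4-connected blobs via flood fill."""
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            color = classify_pixel(frame[y][x])
            if color is None or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(x, y)]), []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and classify_pixel(frame[ny][nx]) == color):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append((color, pixels))
    return blobs


def looks_circular(pixels, min_area=20):
    """Crude circle test: roughly square bounding box, partly filled.
    A perfect disc fills pi/4 (~0.785) of its box; small pixelated discs
    fill a little less, while a solid square sign fills all of it."""
    xs = [px for px, _ in pixels]
    ys = [py for _, py in pixels]
    box_w = max(xs) - min(xs) + 1
    box_h = max(ys) - min(ys) + 1
    fill = len(pixels) / (box_w * box_h)
    return len(pixels) >= min_area and 0.75 <= box_w / box_h <= 1.33 and 0.55 <= fill <= 0.9


def naive_signal(frame):
    """Return the color of the largest bright circular blob, or None."""
    lamps = [(len(p), color) for color, p in find_blobs(frame) if looks_circular(p)]
    return max(lamps)[1] if lamps else None


def blank_frame(w, h):
    return [[BACKGROUND] * w for _ in range(h)]


def draw_disc(frame, cx, cy, radius, rgb):
    for y in range(len(frame)):
        for x in range(len(frame[0])):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                frame[y][x] = rgb


# Roughly Exhibit A: a small green lamp up high, a bigger red ball on a stick.
scene = blank_frame(60, 40)
draw_disc(scene, 45, 6, 4, (40, 230, 90))   # the real (green) signal
draw_disc(scene, 15, 28, 6, (230, 30, 30))  # red ball by the roadside
print(naive_signal(scene))  # prints red: the ball wins, a needless stop
```

Nothing in the pixels says the ball isn't a lamp; only context (it's at kerb height, on a stick, with a green signal above) does, which is exactly the "far more aware" reasoning Rosenband says the car needs.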

(If you're interested in how Google is tackling this problem, Googlers Nathaniel Fairfield and Chris Urmson wrote a paper on advanced traffic-light detection.)

Rosenband added that four-way junctions with no lights can be a nightmare for robot cars. One example is the intersection of California and Powell streets in San Francisco, which has the added bonus of two cable car lines running through it. Human motorists rely on eye contact to judge when it's safe to go, or simply take the initiative and move first. A driver-less car can get stuck trying to safely nudge its way across the box.

"At four-way stops, oftentimes cars arrive sorta at the same time and it's a coin flip for who goes first. We have to make it comfortable for the person in the car; you don’t want the vehicle to inch forward and then slam the brakes, and you also want to be courteous to other drivers," Rosenband explained.
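The basic rule Rosenband describes, first to stop goes first with a coin flip when arrivals are too close to call, can be written as a toy model. This is a sketch under our own assumptions: the half-second tie window, the `Vehicle` type and `crossing_order` are hypothetical, and it ignores the hard parts he mentions, such as reading other drivers' intent and avoiding the inch-forward-then-brake lurch.

```python
import random
from dataclasses import dataclass


@dataclass
class Vehicle:
    name: str
    arrival_s: float  # when the vehicle came to a full stop at its line


def crossing_order(vehicles, tie_window_s=0.5, rng=None):
    """First to stop is first to go. Vehicles that stopped within
    tie_window_s of the earliest waiting vehicle count as arriving
    "sorta at the same time", and a coin flip picks who goes."""
    rng = rng if rng is not None else random.Random()
    pending = sorted(vehicles, key=lambda v: v.arrival_s)
    order = []
    while pending:
        earliest = pending[0].arrival_s
        tied = [v for v in pending if v.arrival_s - earliest <= tie_window_s]
        winner = rng.choice(tied)  # the "coin flip": a fair pick among ties
        order.append(winner.name)
        pending.remove(winner)
    return order


cars = [Vehicle("robo-car", 0.0), Vehicle("sedan", 0.2), Vehicle("truck", 3.0)]
print(crossing_order(cars, rng=random.Random(7)))
```

The robo-car and sedan stopped 0.2s apart, so either may go first; the truck, three seconds behind, always goes last. Passing a seeded `random.Random` makes a run repeatable for testing.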

Another challenge is the weather. Google is based in Mountain View, California, where the climate is pretty mild, swinging from the low 40s to the high 70s Fahrenheit (6°C to 26°C). Meanwhile, one state over in cloudless Arizona, where the mercury regularly climbs past 100°F (38°C), the searing sunlight punishes the dome on the roof of the autonomous car. A little to the north and west, in Nevada, where Google also test-drives its vehicles, it's just as bad.

That roof dome contains sensors and other electronics needed by the machine, and its engineers had to find a way to protect and cool the hardware so it could survive the desert sun. "Things can get pretty toasty," said Rosenband.

Model behavior

Snow, heavy rain, bad drivers, complex junctions, unusual signage, hipster cyclists: all these have stumped Google's robo-chauffeurs at some point. It seems nearly anything they haven't been trained for flummoxes them. Google's AI systems are excellent learners and once they've nailed a task, they'll keep on nailing it. But you have to throw incredible amounts of information at them, with plenty of trial and error, before they catch on.

You can teach a computer what an under-construction sign looks like so that when it sees one, it knows to drive around someone digging a hole in the road. But what happens when there is no sign, and one of the workers is directing traffic with their hands? What happens when a cop waves the car on, or signals it to slow down and stop? You'll have to train the car for that scenario.

What happens when the computer sees a ball bouncing across a street – will it anticipate a child suddenly stepping out of nowhere and chasing after their toy into oncoming traffic? Only if you teach it.

Creating a competent self-driving product involves building a wise and knowledgeable model that has seen nearly every conceivable scenario and knows how to cope with each of them. That takes time and effort to develop, which is why we're still waiting for driver-less taxi cabs.

The cars also use detailed maps featuring the precise locations of signs and traffic lights to look out for, as well as road lanes, turnings and routes to follow. Positioning on those maps relies, in part, on GPS, which can cut out when the satellite signal can't get through. Rosenband said a lot of work had gone into making the system cope with a loss of GPS positioning, or with something unexpected on the highway that isn't on the map.

As well as taking the machines out on public roads to learn, Googlers teach their software valuable lessons by making the code solve tricky and rarely occurring problems, all played out in simulations or on private land.

"We operate on test tracks and simulate unusual scenarios to systematically cover a broad spectrum of scenarios," said Rosenband.

"But nothing replaces putting rubber on the road driving."

Changing the infrastructure – in other words, installing computer-friendly traffic signals, embedding wireless guides under the roads or mandating that autonomous cars are painted in one color and human-driven cars in another – is out of the window. It's just not an option. Google doesn't have that level of clout to overhaul America's faded road network; the worn-away lane markings alone cause some self-driving car brains to blow a fuse.

For now, the web giant's deep-learning systems have to make do with the clumsy, messy world we've built.

There is one thing, though, that Google would like. And that's some silicon to accelerate the decision-making process in the cars. Since they'll need large AI models and an awful lot of real-time data to process – the hardware hoovers up live feeds from cameras, radar and laser-based lidar – they could do with a relatively small and power-efficient dedicated chip to tackle all this.

Right now, the hardware Google uses in its self-driving cars is kept tightly under wraps: Rosenband wouldn't say if it was custom electronics, stock parts or a mix of both. We reckon it's probably a mix of off-the-shelf components and customized silicon.

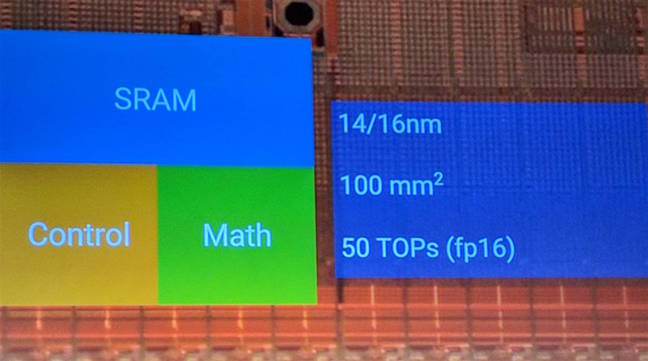

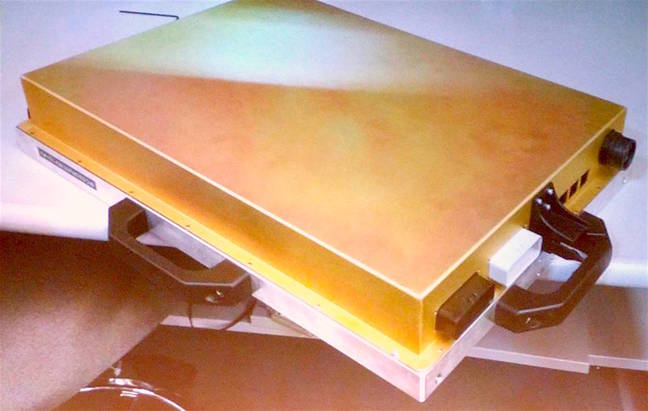

However, he did reveal an ideal chip design for a driver-less vehicle's brain – an inspirational goal, as he put it. It looks like this:

Basically, a 14nm or 16nm processor with a 100mm² die, half of which is SRAM, a quarter a math unit and the remaining quarter control logic. The component would ultimately crunch 50 trillion 16-bit floating-point operations per second. Today's 16nm FinFET Parker system-on-chip from Nvidia can perform 1.5 trillion of those calculations a second, so the target is roughly 33 times faster. Rosenband said 16-bit floating point represented a "sweet spot" for Google's AI system: a balance between precision and speed.
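The numbers in that wish list are easy to check. The sketch below is our own arithmetic, not anything from the talk: it splits the 100mm² budget, compares the throughput target with Parker, and uses the `'e'` (half-precision) format of Python's standard `struct` module to show what 16-bit floats give up. We're assuming the 16-bit format meant is IEEE 754 half precision; the talk as reported doesn't say.

```python
import struct

# Rosenband's "inspirational" die budget, as reported.
DIE_MM2 = 100.0
SHARES = {"SRAM": 0.50, "math unit": 0.25, "control logic": 0.25}
AREAS_MM2 = {part: DIE_MM2 * share for part, share in SHARES.items()}

# Throughput target versus Nvidia's Parker, both in 16-bit FLOPS.
TARGET_FLOPS = 50e12
PARKER_FLOPS = 1.5e12
SPEEDUP = TARGET_FLOPS / PARKER_FLOPS  # about 33.3x


def to_fp16(x):
    """Round a Python float (64-bit) to IEEE 754 half precision and back,
    using struct's 'e' format, to expose the precision lost."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


print(AREAS_MM2)          # {'SRAM': 50.0, 'math unit': 25.0, 'control logic': 25.0}
print(round(SPEEDUP, 1))  # 33.3
print(to_fp16(0.1))       # 0.0999755859375: roughly three significant digits survive
print(to_fp16(65504.0))   # 65504.0, the largest finite half-precision value
```

Three-ish significant digits and a ceiling of 65,504 sound meagre, but halving the width of every number halves memory traffic and lets more arithmetic units fit in the same die area, which is presumably the trade-off behind the "sweet spot" remark.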

Here are some other images he shared with the Hot Chips crowd.

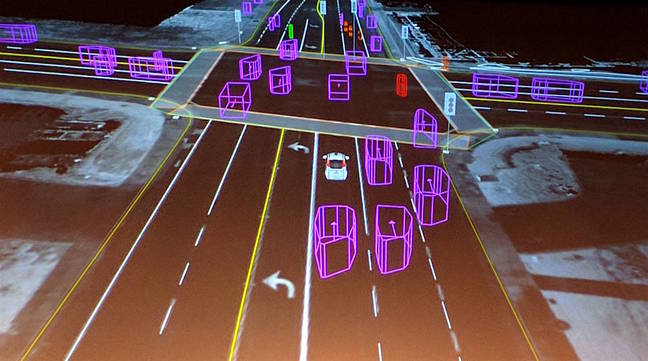

This is an overhead view of what an autonomous car basically sees, with detected vehicles labelled in pink and lanes marked in white and yellow.

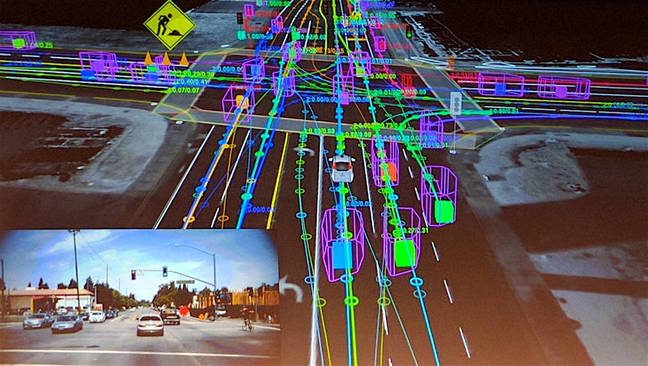

And this is the same scene with the paths of all the surrounding objects plotted on it, as the system considers them.

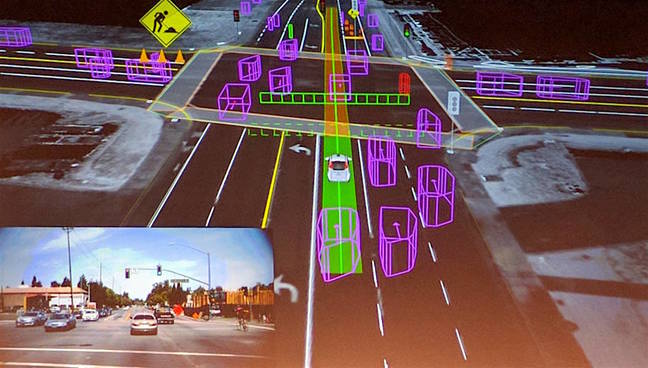

Now the system has stripped down the view to the most important elements. The solid green horizontal fence in the middle represents a virtual barrier the car shouldn't cross, because doing so would mean crashing into the vehicles in front of it. The dotted green fence is a warning barrier, as a pedestrian could cross the street at that point and force a stop. A clear path ahead has been plotted, taking into account the construction sign and work going on in the middle of the road.
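Those two kinds of fence map naturally onto a small rule for how far, and how fast, the car may go. This is only a sketch of the idea in the caption: we don't know how Google's planner represents fences, and the `Fence` type, the assumption that a dotted fence binds only once a pedestrian is detected, the 2 m/s² "comfortable" deceleration and the 2m safety margin are all hypothetical. The speed cap uses the standard constant-deceleration stopping formula, v = √(2ad).

```python
import math
from dataclasses import dataclass


@dataclass
class Fence:
    distance_m: float  # distance ahead along the planned path
    solid: bool        # True: never cross; False: dotted warning fence


def stop_distance(fences, pedestrian_detected):
    """Distance to the nearest fence that currently binds the car.
    Solid fences always bind; dotted ones only when a pedestrian is seen."""
    binding = [f.distance_m for f in fences if f.solid or pedestrian_detected]
    return min(binding, default=math.inf)


def max_speed(distance_m, decel_mps2=2.0, margin_m=2.0):
    """Highest speed (m/s) from which the car can stop margin_m short of
    the fence at constant deceleration: v = sqrt(2 * a * d)."""
    usable = max(distance_m - margin_m, 0.0)
    return math.sqrt(2 * decel_mps2 * usable)


fences = [Fence(20.0, solid=False), Fence(40.0, solid=True)]
for pedestrian in (False, True):
    d = stop_distance(fences, pedestrian)
    print(pedestrian, d, round(max_speed(d), 1))
# False 40.0 12.3
# True 20.0 8.5
```

Spotting a pedestrian pulls the binding fence in from 40m to 20m, and the permitted speed drops from about 12.3 m/s to 8.5 m/s, so the car eases off early rather than slamming on the brakes later.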

This is what the inside of an early prototype looked like, filled with various boxes of gear. 'Not too fun to debug,' said Rosenband.

And this is the machine today's Google driver-less cars use: all the compute technology required is packed into this chassis.

Google is aiming to have its self-driving cars on sale in 2020. When one of these smashes into something else, though, who is liable? "As engineers, we do everything we can to make a safe system, but we’ll leave that policy to the lawyers," said Rosenband. ®

Updated to add

Google spokesman Johnny Luu has been in touch to say the web giant is "confident" in its autonomous cars' ability to identify and handle traffic lights, signs, intersections and blinding light from the sun.