Accessories to crime: Facial recog defeated by wacky paper glasses

AKA how to look like a supermodel on camera to an AI

Researchers armed with some nifty algorithms and a set of paper glasses frames have found a way to trick facial recognition systems.

Users can either evade being recognized – or more interestingly, impersonate another individual – with success rates of 80 per cent or more in some cases, the researchers from Carnegie Mellon University and the University of North Carolina Chapel Hill boasted.

Results from their paper “Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition” [PDF] were presented at last week’s ACM Conference on Computer and Communications Security in Austria.

Criminals and ordinary folks wishing to avoid being recognized simply have to be mistaken for some arbitrary face. Impersonation is stricter: the wearer has to be recognized as one specific person the system already knows.
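The difference between the two goals can be written down as a pair of checks on a classifier's output. What follows is a minimal sketch, not code from the paper: the function names `is_dodged` and `is_impersonated` and the example scores are hypothetical, and the classifier is assumed to return one score per known identity.

```python
import numpy as np

def is_dodged(scores, true_id):
    """Dodging succeeds when the top-scoring identity is anyone but the wearer."""
    return int(np.argmax(scores)) != true_id

def is_impersonated(scores, target_id):
    """Impersonation succeeds only when the top-scoring identity is the chosen target."""
    return int(np.argmax(scores)) == target_id

# Hypothetical scores for three known identities: 0 (the wearer), 1 and 2.
scores = np.array([0.10, 0.55, 0.35])

dodged = is_dodged(scores, true_id=0)                 # wearer is read as identity 1
impersonated = is_impersonated(scores, target_id=2)   # but not as the target, identity 2
```

In this made-up case the wearer dodges recognition but fails to impersonate identity 2, which illustrates why impersonation is the harder of the two attacks.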

The team trained neural networks to recognize 2,622 celebrities, three researchers and two volunteers. During the trials, one of the researchers – a South Asian woman – was incorrectly read as a Middle Eastern bloke 88 per cent of the time. Her paper specs hoodwinked the AI into simply not recognizing her.

Impersonation is a little trickier. Although one male researcher wearing the paper glasses could pass as Milla Jovovich, an actress and supermodel, almost 90 per cent of the time, another male researcher had a hard time trying to impersonate Clive Owen, an actor – only succeeding around 16 per cent of the time.

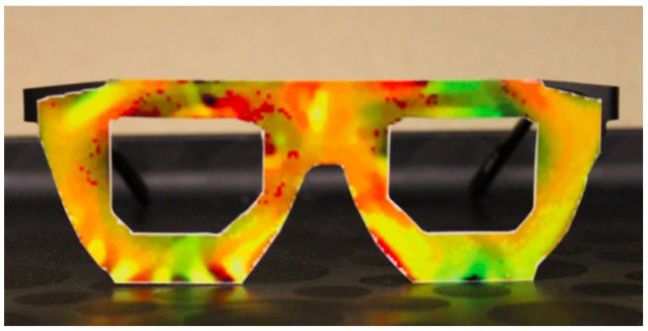

There isn’t anything special about the spectacles themselves. The frames were just printed on paper and overlaid on proper glasses. It’s the “gradient descent algorithm,” which creates a colorful texture for them, that does the heavy lifting.

Magic paper glasses that can trick AI ... Photo credit: Sharif et al

Although well-trained machines can recognize objects faster and better than most humans, they can’t handle change very well. Humans can still recognize the same faces even if they appear slightly different, but for machines it’s more difficult because they process images by searching for particular features. Slight changes to images can throw neural networks.

The minimum change in pixels needed to dodge a facial recognition system or impersonate a person was calculated by the algorithm and projected as a pattern, which could then be printed onto the glasses frames and worn by an attacker.
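To give a rough feel for the idea, here is a toy sketch of gradient descent that only touches the pixels under a glasses-shaped mask. It is not the researchers' implementation: the linear `weights` classifier, the 8x8 image, the mask position, and the `impersonate` helper are all assumptions made for illustration. The real attack targets a deep neural network and also tries to keep the change small, which this sketch does not attempt.

```python
import numpy as np

rng = np.random.default_rng(0)

H, W_PX, N_IDS = 8, 8, 3
weights = rng.normal(size=(N_IDS, H * W_PX))   # toy linear "face recognizer" (assumption)
face = rng.uniform(0.2, 0.8, size=H * W_PX)    # toy grayscale face, flattened

# Only two rows of pixels, standing in for the glasses frames, may change.
mask = np.zeros((H, W_PX))
mask[2:4, :] = 1.0
mask = mask.ravel()

def softmax(z):
    z = z - z.max()            # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def predict(image):
    """Return one probability per known identity."""
    return softmax(weights @ image)

def impersonate(image, target, steps=200, lr=0.01):
    """Gradient descent on the cross-entropy towards `target`, inside the mask only."""
    r = np.zeros_like(image)
    onehot = np.eye(N_IDS)[target]
    for _ in range(steps):
        p = predict(image + r)
        grad = weights.T @ (p - onehot)            # gradient of the loss w.r.t. pixels
        r -= lr * mask * grad                      # update masked pixels only
        r = np.clip(image + r, 0.0, 1.0) - image   # keep pixel values in [0, 1]
    return r

target = 2
p_before = predict(face)
r = impersonate(face, target)
p_after = predict(face + r)
```

Comparing `p_after[target]` with `p_before[target]` shows how much the classifier's belief in the target identity has shifted, while every pixel outside the mask is left exactly as it was.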

The changing colors and patterns on the glasses confuse the neural net and cause it to judge the subject's face incorrectly.

"Our algorithm also tries to ensure several desired properties: that the transitions between colors are smooth (similarly to natural images), that the front plane of the glasses can be printed by a commodity printer, and that the glasses will fool the face-recognition system even if images of the attacker are taken from slightly different angles or the attacker varies her facial expression," the researchers told The Register.
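Two of those properties, smooth color transitions and printability, can be expressed as penalty terms added to whatever the optimizer is minimizing. The sketch below is one plausible way to score them, under stated assumptions: `total_variation` sums the absolute differences between neighboring pixels, and `unprintability` sums each pixel's distance to the nearest color in a hypothetical `palette` of printable colors. The exact formulas the researchers used may differ.

```python
import numpy as np

def total_variation(img):
    """Sum of absolute differences between neighboring pixels (lower is smoother)."""
    dx = np.abs(np.diff(img, axis=1)).sum()   # horizontal neighbors
    dy = np.abs(np.diff(img, axis=0)).sum()   # vertical neighbors
    return float(dx + dy)

def unprintability(img, palette):
    """Sum over pixels of the distance to the closest printable color."""
    pixels = img.reshape(-1, 1, 3)                              # (n_pixels, 1, 3)
    dists = np.linalg.norm(pixels - palette[None, :, :], axis=2)  # (n_pixels, n_colors)
    return float(dists.min(axis=1).sum())

# Hypothetical set of colors a commodity printer reproduces well (RGB in [0, 1]).
palette = np.array([[0.0, 0.0, 0.0],
                    [1.0, 1.0, 1.0],
                    [0.5, 0.5, 0.5]])

rng = np.random.default_rng(1)
flat = np.full((4, 4, 3), 0.5)            # uniform grey patch
noisy = rng.uniform(size=(4, 4, 3))       # random speckle

tv_flat, tv_noisy = total_variation(flat), total_variation(noisy)
np_flat, np_noisy = unprintability(flat, palette), unprintability(noisy, palette)
```

The flat grey patch scores zero on both penalties, while the random speckle is penalized on both, so an optimizer weighing these terms would be nudged towards patterns that are smooth and printable. Robustness to pose and expression could be handled in a similar spirit by averaging the attack's objective over several photos of the wearer.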

Obviously there are simpler ways to trick facial recognition systems. People can wear face masks or heavy makeup, but the authors wanted to look for ways to trick the system inconspicuously, without tampering with the facial biometric software before or during the training process.

“Facial accessories, such as eyeglasses, help make attacks plausibly deniable, as it is natural for people to wear them,” the paper said.

Machine learning and AI are slowly seeping into all areas of technology, including CCTV cameras, so these glasses could prove useful. Just be prepared to look a bit weird, as the frames are garish. ®