
A closer look at HPE's 'The Machine'

It's supposed to be a server design rule shakeup. Here's what we know so far

Analysis HPE is undertaking the most determined and ambitious redesign of server architecture in recent history in the shape of The Machine.

We'll try to provide what Army types call a sitrep about The Machine, HPE's "completely different" system: its aims, its technology and its situation. Think of this as a catch-up article about this different kind of server.

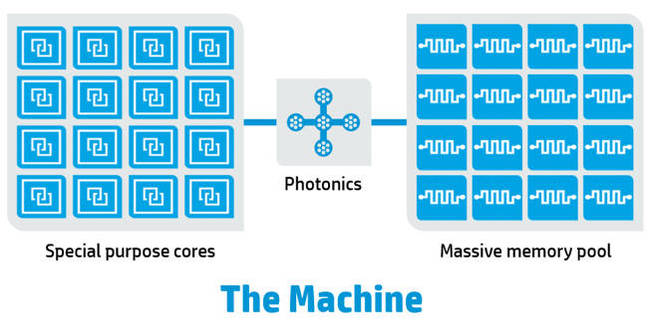

The Machine is being touted as a memory-driven computer in which a universal pool of non-volatile memory is accessed by large numbers of specialised cores. Data is not moved from processor (server) to processor (server); instead it stays still while different processors are brought to bear on all of it or on subsets of it.

Aims include not moving masses of data to servers across relatively slow interconnects, and gaining the high processing speed of in-memory computing without using expensive DRAM. The main benefit is hoped to be a quantum leap in compute performance and energy efficiency, providing the ability to extend computation into new workloads as well as speed analytics and HPC and other existing workloads.

It involves developments at virtually every level of server construction: chip design, system-on-chips, silicon photonics chips and message protocols, server boards, CPU-memory access and architectures, chassis, network fabrics, operating system code, and application stacks, in which IO may be completely redesigned.

There is a real chance HPE may have over-reached itself and that, even if it does deliver The Machine to the market, hidebound users and suspicious developers may not adopt it.

The Machine is an extraordinary high-stakes bet by HPE, so let's try and assess the system and its state here.

Core concepts

The Machine is basically a bunch of processors accessing a universal memory pool via a photonics interconnect.

Basic concept for The Machine

We can envisage five technology attributes of The Machine:

- Heterogeneous specialised core processors or nodes

- Photonics-based CPU to universal memory pool interconnect

- Universal non-volatile memory pool

- New operating system

- Software programming scheme

The cores

The cores are processor cores focussed on specific workloads, meaning not necessarily x86 cores. They could also be ATOMs, ARMs or something else or a mix, but probably single socket and multi-core.

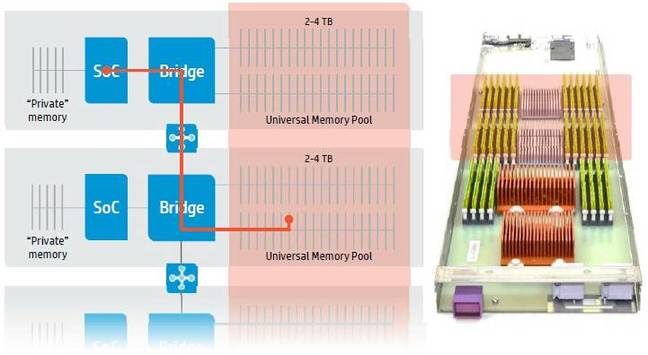

A processor in The Machine is instantiated on a SOC (System On a Chip) along with some memory and photonic interconnect. The SOC, which is a computational unit, could link to 8 DRAM things (DIMMs probably, in a mocked-up system we discuss later). The interconnect goes to a fabric switch and then on to shared memory.

Note: a SOC on one node talks via the fabric (through bridges or switches) to persistent memory on the other nodes without involving the destination node’s SOC.
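A toy model may help picture this. The sketch below assumes a flat global address that splits into a node index and a local offset, with the fabric reading and writing the target node's persistent memory directly. The names (`Fabric`, `Node`, `NODE_BYTES`) and the address split are our illustrative assumptions, not HPE's design.

```python
from dataclasses import dataclass, field
from typing import Tuple

# Hypothetical: bytes of persistent memory per node (tiny, for illustration only).
NODE_BYTES = 1024


@dataclass
class Node:
    """A node board: an SOC plus a slice of fabric-attached persistent memory."""
    node_id: int
    nvm: bytearray = field(default_factory=lambda: bytearray(NODE_BYTES))
    # Stays at 0 in this model: the local SOC never services remote accesses.
    soc_requests_served: int = 0


class Fabric:
    """Toy stand-in for the switches and bridges that route memory accesses."""

    def __init__(self, node_count: int) -> None:
        self.nodes = [Node(i) for i in range(node_count)]

    def _route(self, global_addr: int) -> Tuple[Node, int]:
        # Assumed mapping: the quotient selects the node, the remainder is the offset.
        node_index, offset = divmod(global_addr, NODE_BYTES)
        return self.nodes[node_index], offset

    def store(self, global_addr: int, value: int) -> None:
        node, offset = self._route(global_addr)
        node.nvm[offset] = value  # written via the fabric, not by that node's SOC

    def load(self, global_addr: int) -> int:
        node, offset = self._route(global_addr)
        return node.nvm[offset]


fabric = Fabric(node_count=4)
addr = 2 * NODE_BYTES + 17   # byte 17 of node 2's persistent memory
fabric.store(addr, 0xAB)     # e.g. issued by the SOC on node 0
value = fabric.load(addr)    # e.g. issued by the SOC on node 3
```

In this model any SOC can reach any byte of the pool through `load` and `store`, and the destination node's `soc_requests_served` counter never moves, which is the point of the note above.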

Photonics is an integration of silicon and lasers to use light signals in a networking fabric that is faster than pumping electrons down a wire.

The Machine’s SOCs run a stripped-down Linux and interconnect to a massive pool of universal memory through the photonics fabric.

This memory is intended to use HPE’s Memristor technology, which is hoped to provide persistent storage, memory and high-speed cache functions in a single, cost-effective device.

When HPE talks of a massive pool it has hundreds of petabytes in mind, and this memory is both fast and persistent.

Accessing memory

We should not think of The Machine's processors accessing memory in the same way a current server's x86 processor accesses its directly connected DRAM via intermediate on-chip caches, with the DRAM being volatile and having its contents fetched from mass storage – disk or SSD. This is a memory hierarchy scheme.

The Machine intends to collapse this hierarchy. Its memory was originally going to be made from Memristor technology, which would replace a current server’s on-chip caches and DRAM, but the delayed timescale for that meant a stop-gap scheme involving DRAM and Phase-Change Memory appeared in April 2015. HP’s CTO Martin Fink, the father of The Machine, said the system would be delivered in a three-phase approach.

It would still be a memory-driven system and use Linux:

- Phase 1 (version 0.9) would be a DRAM-based starting system, with, say, a 320TB pool of DRAM in the rack-level starter system that emulates persistent memory.

- Phase 2 would be a Machine using Phase Change Memory, a non-volatile storage-class memory.

- Phase 3 would be the Memristor-based system.

Phase 1 is a working prototype, with phase 2 an actual but intermediate system, and phase 3 the full Memristor-based system.

SanDisk (now WDC) ReRAM became part of HPE’s memory-driven computing ideas in October 2015. We imagine that its ReRAM replaced the Phase Change Memory notion in the phase 2 Machine.

The phase 2 machine has server nodes (CPU + DRAM + optical interconnect) sharing a central pool of slower-than-DRAM non-volatile memory, with potentially thousands of these server nodes (SOCs). HPE’s existing Apollo chassis could be used for it.

Phase 3 would then have a Memristor shared central pool. If the server nodes retain their local DRAM then HPE will have failed in its attempt to collapse the memory hierarchy to just a Memristor pool. We would either still have a 2-level memory hierarchy or a NUMA scheme.

Productisation timescale

The Machine has been beset by delays. For example, HP Labs director Martin Fink told the HP Discover 2015 audience at Las Vegas that “a working prototype of The Machine would be ready in time for next year's event.” Bits of one have been seen. At the time of the 2015 Discover event Fink posted a blog, “Accelerating The Machine”, which was actually about The Machine’s schedule delay.

He wrote at the time:

We’re building hardware to handle data structures and run applications that don’t exist today. Simultaneously, we’re writing code to run on hardware that doesn’t exist today. To do this, we emulate the nascent hardware inside the best systems available, such as HPE Superdome X. What we learn in hardware goes into the emulators so the software can improve. What we learn in software development informs the hardware teams. It’s a virtuous cycle.

He showed a mock-up of a system board in this video:

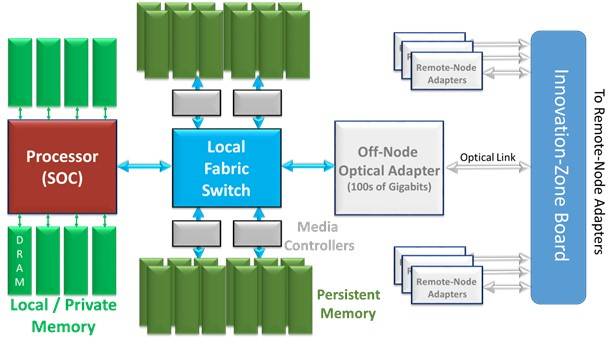

We see here a node board with elements labelled by our sister publication The Next Platform. It has produced a schematic diagram based on its understanding of the mocked-up node board:

From it we can see there is a processor SOC, local DRAM, non-volatile memory accessed by media controllers and off-board communications via an optical adapter controlled by a fabric switch.

Is the Machine’s shared memory pool created by combining the persistent memory on these node boards? It appears so.

We can say then that, if this is the case, access to local, on-board persistent memory will be faster than access through the fabric switch and optical link to off-board persistent memory, and we have a NUMA-like situation to deal with.

It also appears that the SOCs contain a processor and its cores and also cache memory; so, if this is the case, we have a CPU using a memory hierarchy of cache, DRAM, local NVM and remote NVM – four tiers.

Again, if this is the case, then The Machine’s usage of the Memristor-collapses-all-the-memory-storage-tiers idea is exposed as complete nonsense. This is a lot of supposition, but it is backed up by HPE Distinguished Technologist Keith Packard.
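To see why a four-tier arrangement of cache, DRAM, local NVM and remote NVM matters, here is a rough sketch of the average-access-time arithmetic. Every latency and access share below is a made-up placeholder chosen for illustration; none of the figures come from HPE.

```python
# Hypothetical tiers: (name, latency in nanoseconds, share of accesses served).
# These numbers are invented to illustrate the arithmetic, not measured values.
TIERS = [
    ("cache", 1, 0.90),
    ("local DRAM", 100, 0.07),
    ("local NVM", 500, 0.02),
    ("remote NVM", 2000, 0.01),
]


def average_access_ns(tiers):
    """Weighted average latency: sum of (latency * share) over all tiers."""
    total_share = sum(share for _, _, share in tiers)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("access shares must sum to 1")
    return sum(latency * share for _, latency, share in tiers)


avg = average_access_ns(TIERS)

# Portion of the average contributed by the slowest tier alone.
_, remote_latency, remote_share = TIERS[-1]
remote_fraction = (remote_latency * remote_share) / avg
```

With these invented inputs the average works out at about 37.9ns, and the 1 per cent of accesses that go to remote NVM account for more than half of it. Real figures would differ, but the shape of the problem is the one any NUMA-like design faces: placement of data relative to the processor still matters.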

Machine hardware

More information on The Machine’s hardware can be found in an August 2015 discussion by Keith Packard of the hardware as it then stood. It talks of 32TB of memory in 5U, 64-bit ARM processor-based nodes. All of the nodes will be connected at the memory level so that every single processor can do a load or store instruction to access memory on any system, we're told. The nodes have “a 64-bit ARM SoC with 256GB of purely local RAM along with a field-programmable gate array (FPGA) to implement the NGMI (next-generation memory interconnect) protocol.”
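Since every processor is meant to reach any memory with a plain load or store, it is worth checking how wide a byte address the quoted capacities need. The sketch below is our own arithmetic: it assumes decimal units (1TB = 10^12 bytes) and uses 100PB as a stand-in for the low end of "hundreds of petabytes"; `address_bits` is a helper we made up for the purpose.

```python
TB = 10**12  # assumption: decimal terabytes
PB = 10**15  # assumption: decimal petabytes
GB = 10**9


def address_bits(capacity_bytes: int) -> int:
    """Smallest number of bits able to give each byte a distinct address."""
    return (capacity_bytes - 1).bit_length()


# Capacities mentioned in the article, plus 100PB as an illustrative lower
# bound for "hundreds of petabytes".
CAPACITIES = {
    "256GB local RAM per node": 256 * GB,
    "32TB in 5U": 32 * TB,
    "320TB rack-level pool": 320 * TB,
    "100PB pool (illustrative)": 100 * PB,
}

bits_needed = {label: address_bits(size) for label, size in CAPACITIES.items()}

for label, bits in bits_needed.items():
    print(f"{label}: {bits} address bits")
```

Under these assumptions the results are 38, 45, 49 and 57 bits respectively, so a 64-bit address is wide enough in principle even for the largest pool HPE talks about. Whether a given SoC exposes that many physical address bits is a separate question the source does not answer.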