
So, who is the cluster bomb? Student results sliced, diced, analysed

And maybe over-analysed too

HPC Blog It's time to close the books on another highly successful SC Student Cluster Competition. This year was special in a number of ways. First, it was the event's 10th anniversary. At 14 teams, it was also the largest SC competition ever – a far cry from the original five. SC16 was also noteworthy in terms of the performance achieved (more than twice the existing LINPACK record) and the wide variety of cluster configurations designed by the student participants.

We already covered the LINPACK and HPCG results in earlier posts, so let's get right to the applications.

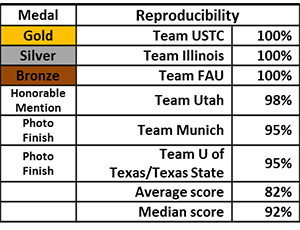

The "Reproducibility" application is a first for any cluster competition. This application has students testing out a scientific paper in order to reproduce the results.

In this test, the students are using ParConnect to analyse pond scum in an exercise of metagenomics. As you can imagine, there are a huge number of different organisms in your typical sample of scum, and assembling their genes is a pretty big compute job.

As you can see from the scores, this application didn't pose a huge challenge to our student teams. The top three teams (USTC, Illinois, and FAU) all scored a perfect 100 per cent on this application, while Utah came in fourth with 98 per cent. Munich and Texas followed with twin 95 per cent scores.

The next application up is Paraview, a visualisation application that is used in a wide variety of fields. It is particularly handy for analysing extremely large datasets, and has been used on supercomputers to analyse petascale-sized datasets.

Team USTC narrowly edged Team Munich on this application by a single point. Running a little bit behind the leaders are Teams Illinois and Utah – which is a very impressive performance for newbie teams. As you can see by the median and mean scores, there was a bit more variability in the scores for the other ten teams.

GROMACS was this year's mystery application – revealed to students just before the application portion of the competition began. Those of you who routinely analyse proteins or lipids, whether for your job or as a hobby, know that GROMACS is one of the mack-daddy programs designed to handle these tasks.

Our students weren't quite as experienced with this program, judging by the average and median scores for this application. Still, the kids from Singapore (Team Nanyang) managed to post a perfect score, followed by the newbie Power-pushing team from Peking at 85 per cent. Team USTC, with their ten P100 accelerators, finished a single point behind Peking, landing in the money with 84 per cent. Illinois gets on the scoreboard with an honourable mention.

The hands-down toughest task for the teams was the Password Cracking challenge. Students are presented with a series of datasets that contain password hashes. It's their task to work through dictionary attacks to decode these hashes into true passwords.
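For readers who haven't seen one, a dictionary attack can be sketched in a few lines of Python. This is only an illustration, not the competition's actual tooling: the hash algorithm (unsalted SHA-256), the toy word list, and the function names `sha256_hex` and `dictionary_attack` are all assumptions made for the example.

```python
import hashlib


def sha256_hex(word: str) -> str:
    """Hash a candidate password. Unsalted SHA-256 is an assumption for this sketch."""
    return hashlib.sha256(word.encode("utf-8")).hexdigest()


def dictionary_attack(target_hashes, wordlist):
    """Return a {hash: password} map for every target hash found in the word list."""
    remaining = set(target_hashes)
    cracked = {}
    for word in wordlist:
        digest = sha256_hex(word)
        if digest in remaining:
            cracked[digest] = word
            remaining.discard(digest)
            if not remaining:  # everything cracked, stop early
                break
    return cracked


# Toy data (hypothetical): two targets are in the dictionary, one is not.
wordlist = ["password", "letmein", "hunter2", "qwerty"]
targets = [
    sha256_hex("hunter2"),
    sha256_hex("qwerty"),
    sha256_hex("not-in-the-list"),
]

cracked = dictionary_attack(targets, wordlist)
print(f"cracked {len(cracked)} of {len(targets)} hashes")
```

The sketch also shows why dictionary quality matters so much: a hash whose password never appears in the word list simply stays uncracked, no matter how much hardware is thrown at it.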

As you can see by the median score, teams were all over the map on this application. This is also the lowest average score of all of the applications in the competition. The organisers really threw the kids a curveball with this one.

Teams were scored by the number of passwords they managed to crack – with Team Taiwan (NTHU) posting the biggest number. Team Utah managed to get within 20 per cent of NTHU's result, which again is a great job by a new team.

But check out Team NEU/Auburn. These are the kids whose cluster was lost in the mail and ended up running a couple of workstations and a single true supercomputer node. Somehow, some way, they were able to grab third place – despite having less and significantly crappier hardware. According to our application experts, it looks like NEU/Auburn was able to find great dictionaries and exploit them to the max in order to achieve this score. Colour me impressed.

The final part of the competition included interviews by HPC experts and the judging of team posters. Each team was interviewed with an eye towards their HPC knowledge, their understanding of their systems, and how well they could explain their optimisations.

Team Munich scored a big win with a 95 per cent score, with Teams Texas and Utah knotted at 93 per cent. Teams FAU and Peking were right behind with scores of 91 and 90 per cent respectively.

Overall, the teams did very well on the interview and poster part of the competition, with the average and median scores both landing at around 84 to 85 per cent.

... and the Champion is...

Team USTC topped the other thirteen with an overall score of 89 per cent. They are the first team to ever capture the Overall Championship Award and the Highest LINPACK Award at the same competition – an amazing performance.

What's interesting is that they only won two of the application categories (along with LINPACK and HPCG). This was a very close competition. USTC finished in the money on GROMACS, but didn't make the scoreboard on the other applications. I think the big key to their win was not making any mistakes. They turned in an all-round good performance, while posting wins on Paraview and Reproducibility.

Look at Team Utah! They're both a first-time competitor and the home team – and they brought home the Silver Medal (or, since they were already at home, they just sort of kept it there). They built their second place score by placing on the scoreboard on four out of the five applications, plus doing well on the benchmarks.

This is unprecedented in SC competitions. Typically the home team doesn't do so well, particularly if they're first-timers. This was a fantastic performance by the Utes.

Veterans NTHU took down third place, fuelled by their dominant performance on Password Cracking and solid scores on the other applications and benchmarks. This is the highest the team has placed in several competitions. Perhaps we're seeing the start of a resurgence in Taiwan student clustering?

The blended team of University of Texas (Austin) and Texas State University grabbed an Honourable Mention for finishing only two points south of third place, the best performance we've seen with a blended team in competition history. Quite an achievement.

This competition was unusual in that all of the teams did pretty well. There weren't a lot of zero scores on the scorecards, which is a break from what we've seen in the past. Students are getting better, the hardware is getting better, and the scores are rising. Things are going well in Student Cluster land.

The next major international competition is scheduled for Asia sometime around April. We'll be there to cover all the action and bring you the most comprehensive overview and results possible. Stay tuned.