
Hard numbers: The mathematical architectures of Artificial Intelligence

Is your machine learning?

Pity the 34 staff of Fukoku Mutual Life Insurance in Japan, diligently calculating insurance payouts and brutally replaced by an AI system. If you believe the reports from January, the AI revolution is here.

In my opinion, the goings-on in Japan cannot possibly qualify as AI, but, in order to explain why, I have to explain what I think AI means.

In one way, this attempt will be doomed to failure because there is no unified definition of AI. But I can, hopefully, provide a framework of understanding about the topic that may help.

But before I do, I’ll give you an example of what I think may well be a primitive AI system. This story (in my opinion a much more interesting one) was much less widely reported than the insurance one. Google Translate is already in widespread use and, as the name suggests, translates between human languages.

In November it was reported that Google Translate seems to have invented its own language. It was originally trained to translate between specific pairs of languages. So, for example, it would be trained with sentences in, say, Japanese and English so that it could learn how to translate either way between that pair of languages. Then it was trained with sentences from another pair (Korean and English) and so on. To go from Korean to Japanese, one might expect it to translate via English as an intermediary step.

But it appears not to be doing so. As the Google research blog reports:

This inspired us to ask the following question: Can we translate between a language pair which the system has never seen before?

Impressively, the answer is 'yes'.

The researchers then went on to try to work out how Google Translate was doing the new translation, given that it hadn’t been specifically trained to do it. They concluded: “The network must be encoding something about the semantics of the sentence rather than simply memorizing phrase-to-phrase translations. We interpret this as a sign of existence of an interlingua in the network.”

The important words in this quote are “we interpret this as”. In other words, they don’t know how the system that they built managed to solve this problem. Their system (a neural net) has not simply learnt a set of rules from a large number of examples - computers have been doing that for years - it appears to have worked out a novel way to solve a problem.

The essential premise of my argument is that AI is simply a level in a stack that builds up from the bottom, each level dependent on the level below. In practice there is so much blurring between the layers that it is more of a spectrum, but spectra are notoriously difficult to describe. Luckily, all of the levels have clearly defined names, so I’ll describe it as a stack.

At the bottom is mathematics.

Mathematics

I hated maths at school, but that was because I didn’t understand the difference between maths and arithmetic. Arithmetic is mechanical, maths is logic. Maths is about working out the patterns that are inherent in numbers; it is about finding the inherent rules that numbers follow. So, a good example is that we have yet to uncover the fundamental pattern that underlies the distribution of prime numbers (or even to prove that such a pattern exists). We can test to see if a given number is prime, but we cannot predict precisely where the next one lies. Humans have been studying mathematics since the dawn of time or, at least, the dawn of tally sticks.
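The gap between testing and predicting can be made concrete with a minimal Python sketch. The function names `is_prime` and `next_prime` are illustrative, and trial division is only the simplest possible test, not what anyone would use on large numbers. The point is that the "next prime" function contains no formula: it can only test candidates one by one.

```python
def is_prime(n: int) -> bool:
    """Trial division: try odd divisors up to the square root of n."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3 are prime
    if n % 2 == 0:
        return False
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def next_prime(n: int) -> int:
    """No known formula gives the answer directly, so search upwards."""
    candidate = n + 1
    while not is_prime(candidate):
        candidate += 1
    return candidate
```

Calling `next_prime(89)` has to reject every number from 90 to 96 before it reaches 97.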

Statistics

Sitting above maths in the stack is statistics. Statistics is about studying how real-world numbers behave. So, for example, we might run statistical tests on some sales figures in order to see if we are selling significantly more fish per head of population in Yorkshire than in Lancashire. We usually perform statistical tests because we want to predict some form of future behaviour. Statistics cannot tell us that something will definitely happen, but it can give us the probability that something will happen (or has happened). We can use this probability to make informed decisions (for example, about the actions of Donald Trump).
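As a rough illustration of the fish example, here is a sketch of one common test, the two-proportion z-test, using only the Python standard library. It assumes, purely for illustration, that we surveyed a sample of people in each county and counted how many bought fish; the survey figures and the function name are invented and are not real sales data.

```python
import math


def two_proportion_z_test(buyers_a, sample_a, buyers_b, sample_b):
    """Return (z, one-sided p-value) for 'group A buys more than group B'."""
    p_a = buyers_a / sample_a
    p_b = buyers_b / sample_b
    # Pooled proportion under the assumption that both counties behave alike
    pooled = (buyers_a + buyers_b) / (sample_a + sample_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sample_a + 1 / sample_b))
    z = (p_a - p_b) / std_err
    # P(Z >= z) for a standard normal variable
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Invented survey: 260 of 1,000 in Yorkshire and 200 of 1,000 in Lancashire bought fish
z, p_value = two_proportion_z_test(260, 1000, 200, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The result is a probability, not a certainty: a small p-value says only that a difference this large would be unlikely if the two counties really bought fish at the same rate.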

Some people consider statistics to be a branch of mathematics; others argue that it is a separate discipline. What few people would question is that statistics is heavily based on mathematics. You can have mathematics without statistics but not the other way around. The origins of statistics are debatable depending on your definition, but modern statistics started surprisingly recently, only around 100 years ago.

Data mining

Above statistics we have data mining, which is the process of using generalised algorithms to find patterns in data. The term appeared in the database community in the 1990s (although its fundamental origins are earlier).

Clearly a computer is not absolutely essential to perform data mining (a pencil and paper approach would be possible but painfully, painfully slow), but realistically the process has always been associated with computers.

There is a fundamental difference between querying and data mining. When we query, we usually have a hypothesis; we are testing a theory. “I suspect that we sell more fish in Yorkshire than Lancashire, so I’ll run a query that counts the number sold in each county.” When we data mine we say: “Find me patterns in this data.”
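The contrast can be sketched in a few lines of Python. Everything here is a toy: the sales records are invented, and `most_uneven_column` is a deliberately crude stand-in for a real mining algorithm. It simply tries every column and reports the one whose groups differ most, without being told which column to look at.

```python
from collections import defaultdict

# Invented sales records, for illustration only
sales = [
    {"county": "Yorkshire", "day": "Fri", "product": "fish", "units": 9},
    {"county": "Yorkshire", "day": "Mon", "product": "fish", "units": 3},
    {"county": "Lancashire", "day": "Fri", "product": "fish", "units": 8},
    {"county": "Lancashire", "day": "Mon", "product": "fish", "units": 2},
    {"county": "Yorkshire", "day": "Fri", "product": "chips", "units": 7},
    {"county": "Lancashire", "day": "Mon", "product": "chips", "units": 1},
]


def units_by(records, column, product=None):
    """Total units sold per value of `column`, optionally for one product."""
    totals = defaultdict(int)
    for record in records:
        if product is None or record["product"] == product:
            totals[record[column]] += record["units"]
    return dict(totals)


# Querying: we already have a hypothesis (county matters for fish)
fish_by_county = units_by(sales, "county", product="fish")


def most_uneven_column(records, columns):
    """Mining, crudely: no hypothesis, just find where the totals differ most."""
    def spread(column):
        totals = units_by(records, column).values()
        return max(totals) - min(totals)
    return max(columns, key=spread)


print(fish_by_county)
print(most_uneven_column(sales, ["county", "day", "product"]))
```

In this invented data the query answers the question we asked about counties, while the mining pass points at the day of the week, a pattern nobody asked about.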

There are multiple flavours of data mining (clustering, regression and so on), so we choose the general type of pattern that we are searching for, but we are not necessarily testing a specific hypothesis.

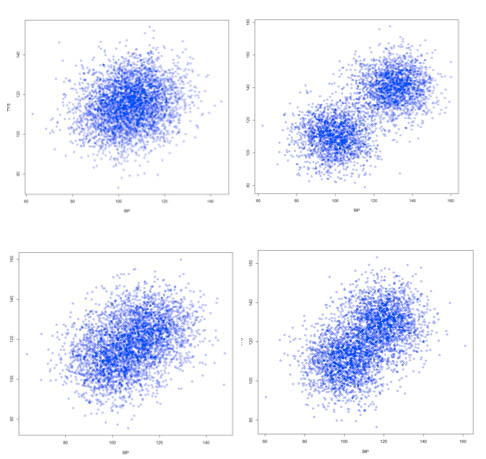

Data mining algorithms rely very heavily on statistics. For example, which of the screenshots below show two clusters and which show one? A clustering algorithm will make a decision based on probability and it gets the probability by running statistics.

One cluster or two, vicar?
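To give a flavour of how such a decision might be automated, here is a simplified sketch. It is not a probability-based method of the kind described above: it swaps in a plainer statistic, the sum of squared distances from the mean, and it works on one-dimensional data rather than the two-dimensional points in the screenshots. The function names and the threshold of 0.2 are assumptions chosen for illustration.

```python
def spread(points):
    """Sum of squared distances from the mean (a measure of scatter)."""
    mean = sum(points) / len(points)
    return sum((p - mean) ** 2 for p in points)


def best_two_way_split(points):
    """Try every cut of the sorted data and keep the tightest pair of groups."""
    ordered = sorted(points)
    best = None
    for i in range(1, len(ordered)):
        left, right = ordered[:i], ordered[i:]
        cost = spread(left) + spread(right)
        if best is None or cost < best[0]:
            best = (cost, left, right)
    return best


def looks_like_two_clusters(points, threshold=0.2):
    """Say 'two' only if splitting removes most of the scatter."""
    if len(points) < 2:
        return False
    one_cluster = spread(points)
    if one_cluster == 0:
        return False  # all points identical
    two_clusters, _, _ = best_two_way_split(points)
    return two_clusters / one_cluster < threshold
```

Evenly spread data still gets noticeably tighter when cut in half, which is why the threshold has to be stricter than "any improvement at all". Where exactly to set it is the judgement call that the statistics are there to inform.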

Machine learning

On top of data mining we have machine learning, which is about using data mining to learn patterns. So, for example, we could feed a clustering algorithm with a set of data about insurance claims where we know (because we have the prosecutions!) that they were dishonest claims. The algorithm would generate a pattern of clusters and we could get it to store that pattern. By getting it to store the pattern we are teaching a machine and the machine is learning.

We can then feed the system with claims known to be honest and so it learns the pattern of clusters for honest claims. The learnt patterns are called data models. Then we could take some new claims (about which we have no opinion regarding their honesty or otherwise) and use the data models to give a prediction about the honesty of each claim. This is (one form of) machine learning.
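The learn-then-predict loop can be sketched very simply. This is an assumption-laden toy, not a description of any real insurer's system: each claim is reduced to two invented features (claim size in thousands, and years the policy has been held), the "pattern" stored for each group is just its centre point, and a new claim is assigned to whichever centre it sits nearest. It requires Python 3.8 or later for `math.dist`.

```python
import math


def learn_model(claims):
    """Store a group's 'pattern' as its centroid: the mean of each feature."""
    count = len(claims)
    features = len(claims[0])
    return tuple(sum(claim[i] for claim in claims) / count for i in range(features))


def predict(models, claim):
    """Return the label whose stored centroid is closest to the new claim."""
    return min(models, key=lambda label: math.dist(models[label], claim))


# Invented training data: (claim size in thousands, years policy held)
dishonest_claims = [(9.0, 1.0), (8.0, 2.0), (10.0, 1.5)]
honest_claims = [(2.0, 8.0), (3.0, 9.0), (1.0, 7.0)]

# The learnt patterns are the data models
models = {
    "dishonest": learn_model(dishonest_claims),
    "honest": learn_model(honest_claims),
}

print(predict(models, (8.5, 2.0)))
print(predict(models, (2.5, 7.5)))
```

A real system would use many more features, scale them so that no single one dominates the distance, and report a probability rather than a bare label.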

Finally, AI

Machine learning is used to build ever more complex models of the world and we are creating software that makes decisions and gives advice based on those models. At some point this software becomes complex and efficient enough for us to call it artificial intelligence.

That “some point” is, of course, a judgement call. What do you consider to be AI? (Note that AI does not imply machine consciousness.) Do you consider autonomous cars to be an example of AI? What about Siri and Cortana?

Wherever you set the bar, I guarantee that you will find that the system you are calling AI is heavily dependent on machine learning, which only works if we have data mining, which relies heavily on statistics, which is fundamentally founded on maths.

So, for example, autonomous cars. Whether or not they are AI, they have, in the past, collected vast amounts of sensor data from a huge variety of sources and that data was mined in order to generate data models. As they drive they compare the incoming data with the models in order to make decisions about how to control the car. And they continue to learn as they are driven.

So I haven’t precisely defined AI - I think that it is very much a matter of opinion - but I hope these underpinning definitions help the debate. They do, at least, allow us to rule out a large number of systems as not AI. We have a good understanding of machine learning, so any system that simply learns a set of rules from raw data and then applies them is not AI; it is machine learning. To be AI it has to be doing more than that.

From the evidence I have seen I don’t believe that Fukoku Mutual Life Insurance has an AI system; it sounds like machine learning to me. Part of AI, it seems to me, is an element of learning about several different aspects of the world and bringing that information together to make generalised decisions. For me, the insurance system is far too focused to be AI. But I do believe that we are on the cusp of developing workable AI systems. I think that autonomous cars probably are demonstrating (or will demonstrate) this.

Certainly I believe that AI is going to start having an impact on our lives in the very near future.

If you view the terms and conditions, you will find that Microsoft refers to Cortana as if it were a person, using the word “she” for what must be an “it”.

According to Microsoft: “Cortana is your personal assistant. Cortana works best when you sign in and let her use data from your device.”

So I take it that only Cortana, not Microsoft, is going to look at my data?

Microsoft, then, clearly thinks that Cortana is AI and, by implication, AI is here. Now. ®